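Retrieval-Augmented Generation (RAG) enhances large language models (LLMs) by integrating specific knowledge from a knowledge base. The approach uses vector embeddings to retrieve relevant information efficiently and add it to the LLM's context. By giving the model access to specific information at question-answering time, RAG addresses LLM limitations such as outdated knowledge and hallucinations.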

Introduction: Enhancing Large Language Models with Retrieval-Augmented Generation (RAG)

Large language models (LLMs) encode a large amount of knowledge in their parameters and are remarkably good at recalling and synthesizing it. They have two significant limitations, however: they know little beyond their training data, and they tend to fabricate information (hallucinate) when asked specific questions.

Retrieval-Augmented Generation (RAG)

Researchers at Facebook AI Research, University College London, and New York University introduced the concept of Retrieval-Augmented Generation (RAG) in 2020. RAG supplies a pre-trained LLM with additional context, in the form of relevant information retrieved for the query, so that it can generate informed responses to user queries.

Implementation with Hugging Face Transformers, LangChain, and Faiss

This article provides a comprehensive guide to implementing Google's LLM Gemma with RAG capabilities using Hugging Face transformers, LangChain, and the Faiss vector database. We will delve into the theoretical underpinnings and practical aspects of the RAG pipeline.

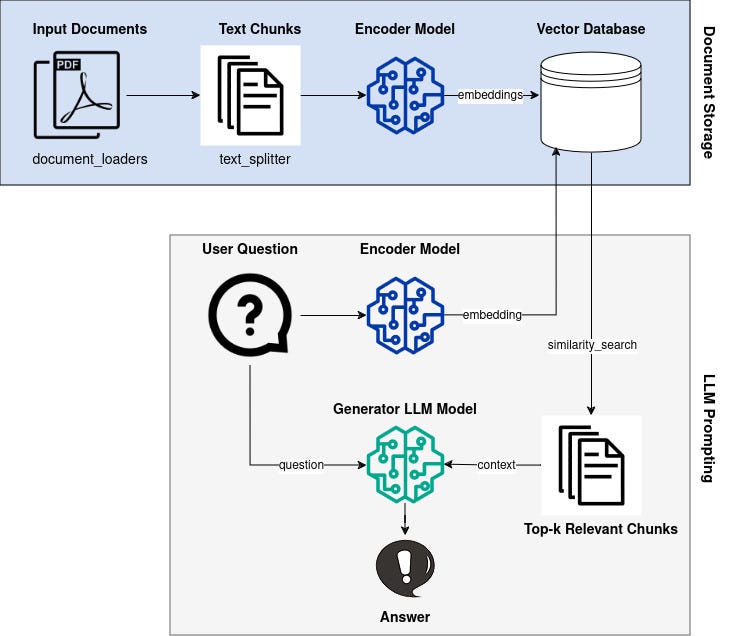

Overview of the RAG Pipeline

The RAG pipeline comprises the following steps:

- Knowledge Base Vectorization: Encode a knowledge base (e.g., Wikipedia documents) into dense vector representations (embeddings).

- Query Vectorization: Convert user queries into vector embeddings using the same encoder model.

- Retrieval: Identify embeddings in the knowledge base that are similar to the query embedding based on a similarity metric.

- Generation: Generate a response using the LLM, augmented with the retrieved context from the knowledge base.

Knowledge Base and Vectorization

We begin by selecting an appropriate knowledge base, such as Wikipedia or a domain-specific corpus. Each document z_i in the knowledge base is converted into an embedding vector d(z_i) using an encoder model.
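
As a rough illustration of this step, the sketch below maps each document to a fixed-size vector. The encoder here is an assumption made only to keep the example dependency-free: `toy_embed` is a hypothetical hashed bag-of-words function, not a learned model, and the three-document `knowledge_base` is invented for the example. A real pipeline would use a trained sentence-embedding model instead.

```python
import hashlib
import math
from typing import List

DIM = 64  # embedding size; an arbitrary choice for this sketch


def toy_embed(text: str, dim: int = DIM) -> List[float]:
    """Hypothetical stand-in encoder: hash tokens into buckets, then L2-normalize."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # md5 gives a stable hash, so a token always lands in the same bucket.
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    # Leave the all-zero vector untouched for empty input.
    return [v / norm for v in vec] if norm > 0 else vec


# Hypothetical knowledge base of three short documents z_i.
knowledge_base = [
    "Gemma is a family of open models released by Google",
    "Faiss is a library for efficient similarity search over dense vectors",
    "LangChain helps compose LLM applications from reusable components",
]

# d(z_i) for every document z_i in the knowledge base.
doc_embeddings = [toy_embed(z) for z in knowledge_base]
```

Normalizing each vector to unit length means a plain dot product between two embeddings equals their cosine similarity, which simplifies the later comparison.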

Query Vectorization

When a user poses a question x, it is also transformed into an embedding vector q(x) using the same encoder model.

Retrieval

To identify relevant documents in the knowledge base, we use a similarity metric to compare q(x) with every document embedding d(z_i). The documents whose embeddings are most similar to the query embedding are considered relevant to the query.
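
A minimal sketch of this comparison, assuming cosine similarity as the metric (the text does not name one) and a brute-force scan over all document vectors. The function names `cosine_similarity` and `retrieve`, and the 3-dimensional vectors, are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(
    query_vec: Sequence[float],
    doc_vecs: Sequence[Sequence[float]],
    k: int = 2,
) -> List[Tuple[int, float]]:
    """Return (document index, score) for the k most similar documents."""
    scored = [(i, cosine_similarity(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional embeddings d(z_i) and a query embedding q(x).
doc_vecs = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.7, 0.0],
]
query_vec = [0.9, 0.1, 0.0]

top = retrieve(query_vec, doc_vecs, k=2)
print([index for index, _ in top])  # document 0 ranks first, then document 2
```

The linear scan costs one comparison per document, which is fine for a handful of vectors. For a large knowledge base, a vector index such as Faiss would take the place of this loop.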

Generation

The LLM then generates a response to the user query. Unlike an LLM used on its own, Gemma receives the retrieved context together with the question. This lets it incorporate relevant information from the knowledge base into its response, which improves accuracy and reduces hallucinations.
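
One common way to hand the retrieved context to the model is to place it in the prompt ahead of the question. The sketch below shows only that prompt-assembly step. The template wording, the `build_prompt` helper, and the sample passage are assumptions for illustration, and the call to the model itself is deliberately left out.

```python
from typing import List


def build_prompt(question: str, passages: List[str]) -> str:
    """Combine numbered retrieved passages and the question into one prompt."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical output of the retrieval step.
retrieved = [
    "Faiss is a library for efficient similarity search over dense vectors",
]

prompt = build_prompt("What is Faiss used for?", retrieved)
print(prompt)  # this string would then be passed to the LLM
```

Numbering the passages makes it possible to ask the model to cite which passage supports its answer, and the instruction to admit insufficient context is one simple guard against fabricated answers.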

Conclusion

Retrieval-Augmented Generation (RAG) can significantly enhance the capabilities of large language models. Giving an LLM access to specific, relevant information improves the accuracy and consistency of its responses, which makes it better suited to real-world applications that depend on accurate and informative knowledge retrieval.
