
Unlocking Enhanced Language Models: Retrieval-Augmented Generation Unveiled

Apr 01, 2024 at 03:04 am

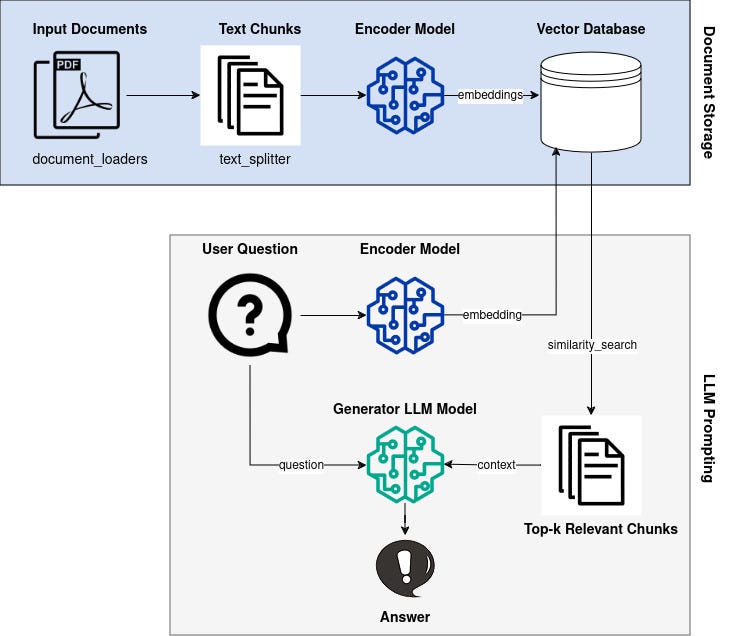

Retrieval-Augmented Generation (RAG) enhances Large Language Models (LLMs) by integrating specific knowledge from a knowledge base. This approach leverages vector embeddings to efficiently retrieve relevant information and augment the LLM's context. RAG addresses limitations of LLMs, such as outdated knowledge and hallucination, by providing access to specific information during question answering.

Introduction: Enhancing Large Language Models with Retrieval-Augmented Generation (RAG)

Large Language Models (LLMs) have demonstrated remarkable capabilities in comprehending and synthesizing the vast amount of knowledge encoded in their many parameters. However, they have two significant limitations: their knowledge does not extend beyond their training data, and they tend to generate fictitious information (hallucinate) when asked specific questions.

Retrieval-Augmented Generation (RAG)

Researchers at Facebook AI Research, University College London, and New York University introduced Retrieval-Augmented Generation (RAG) in 2020. RAG supplies a pre-trained LLM with additional context, in the form of relevant information retrieved for each query, so that it can generate better-informed responses to user queries.

Implementation with Hugging Face Transformers, LangChain, and Faiss

This article provides a guide to implementing Google's Gemma LLM with RAG capabilities using Hugging Face Transformers, LangChain, and the Faiss vector similarity-search library. It covers both the theory behind the RAG pipeline and its practical aspects.

Overview of the RAG Pipeline

The RAG pipeline comprises the following steps:

- Knowledge Base Vectorization: Encode a knowledge base (e.g., Wikipedia documents) into dense vector representations (embeddings).

- Query Vectorization: Convert user queries into vector embeddings using the same encoder model.

- Retrieval: Identify the knowledge-base embeddings that are most similar to the query embedding according to a similarity metric.

- Generation: Generate a response using the LLM, augmented with the retrieved context from the knowledge base.
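The four steps above can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not the article's Gemma implementation: a hypothetical bag-of-words `embed` function stands in for a neural embedding model, brute-force scoring stands in for Faiss, and the augmented prompt is returned instead of being sent to an LLM. The names `tokenize`, `embed` and `build_prompt`, the sample documents, and the prompt template are all illustrative.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lower-case word tokens; a real pipeline would use the encoder's own tokenizer
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str, vocab: list[str]) -> list[float]:
    # Hypothetical toy encoder: word counts over a fixed vocabulary, scaled to unit length.
    # It only mimics the mechanics of a neural embedding model (real embeddings are learned).
    counts = Counter(tokenize(text))
    vec = [float(counts[w]) for w in vocab]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    # Step 1: knowledge base vectorization (done once, ahead of time, in practice)
    vocab = sorted({t for d in documents for t in tokenize(d)})
    doc_vecs = [embed(d, vocab) for d in documents]
    # Step 2: query vectorization with the same encoder (same vocabulary)
    q = embed(question, vocab)
    # Step 3: retrieval; for unit vectors the dot product equals cosine similarity
    scores = [sum(a * b for a, b in zip(q, d)) for d in doc_vecs]
    top = sorted(range(len(documents)), key=lambda i: scores[i], reverse=True)[:k]
    context = "\n".join(documents[i] for i in top)
    # Step 4: generation; this augmented prompt is what would be passed to the LLM
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


docs = [
    "Faiss is a library for efficient similarity search.",
    "Gemma is a family of open models released by Google.",
    "Paris is the capital of France.",
]
print(build_prompt("What is Gemma?", docs))
```

With `k=1` only the Gemma sentence ends up in the context, because it shares the most words with the question ("is" and "gemma"); the out-of-vocabulary word "what" is simply ignored by the toy encoder.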

Knowledge Base and Vectorization

We begin by selecting an appropriate knowledge base, such as Wikipedia or a domain-specific corpus. Each document z_i in the knowledge base is converted into an embedding vector d(z_i) using an encoder model.
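As a sketch of this step, the hypothetical `BagOfWordsEncoder` below learns a vocabulary from the knowledge base and maps each document z_i to a fixed-length, unit-norm vector d(z_i). It is a toy stand-in for a neural encoder (for example, a sentence-embedding model loaded through Hugging Face), whose vectors would be dense and learned rather than word counts; the class name and the two sample documents are assumptions for illustration.

```python
import math
import re
from collections import Counter


class BagOfWordsEncoder:
    """Hypothetical toy encoder standing in for a neural embedding model.

    Maps text to a fixed-length, unit-norm vector of word counts over a
    vocabulary learned from the knowledge base."""

    def __init__(self, corpus: list[str]):
        self.vocab = sorted({t for text in corpus for t in self._tokens(text)})
        self.index = {w: i for i, w in enumerate(self.vocab)}

    @staticmethod
    def _tokens(text: str) -> list[str]:
        return re.findall(r"[a-z0-9]+", text.lower())

    def encode(self, text: str) -> list[float]:
        vec = [0.0] * len(self.vocab)
        for tok, n in Counter(self._tokens(text)).items():
            if tok in self.index:  # out-of-vocabulary words are dropped
                vec[self.index[tok]] = float(n)
        norm = math.sqrt(sum(v * v for v in vec))
        return [v / norm for v in vec] if norm else vec


knowledge_base = [
    "Faiss is a library for efficient similarity search.",
    "Gemma is a family of open models released by Google.",
]
encoder = BagOfWordsEncoder(knowledge_base)
# d(z_i) for every document z_i, computed once and stored alongside the text
doc_embeddings = [encoder.encode(z) for z in knowledge_base]
print(len(doc_embeddings), len(doc_embeddings[0]))
```

The knowledge base is encoded once, ahead of time, and the stored vectors are reused for every query. With these two documents the learned vocabulary has 16 distinct words, so the script prints `2 16`.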

Query Vectorization

When a user poses a question x, it is also transformed into an embedding vector q(x) using the same encoder model.
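Why the query must pass through the same encoder can be shown with two toy encoders (hypothetical, built by `make_encoder`) that know the same three words but assign them to different coordinates. A vector's coordinates only carry meaning relative to the encoder that produced them, so scoring a query from one encoder against documents embedded by another gives an arbitrary result.

```python
import math
import re


def make_encoder(vocab: list[str]):
    """Return a hypothetical toy encoder whose coordinates are defined by `vocab`."""
    position = {w: i for i, w in enumerate(vocab)}

    def encode(text: str) -> list[float]:
        vec = [0.0] * len(vocab)
        for tok in re.findall(r"[a-z0-9]+", text.lower()):
            if tok in position:
                vec[position[tok]] += 1.0
        norm = math.sqrt(sum(v * v for v in vec))
        return [v / norm for v in vec] if norm else vec

    return encode


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


encoder_a = make_encoder(["gemma", "google", "model"])
encoder_b = make_encoder(["model", "google", "gemma"])  # same words, different coordinate order

doc = encoder_a("Gemma is built by Google, and Gemma is open")  # d(z) from encoder A
print(round(dot(encoder_a("What is Gemma?"), doc), 3))  # 0.894: same encoder, comparable
print(round(dot(encoder_b("What is Gemma?"), doc), 3))  # 0.0: mismatched encoder, meaningless
```

With real embedding models the same failure occurs if documents and queries are embedded with different models or model versions, even when the vector dimensions happen to match.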

Retrieval

To identify relevant documents in the knowledge base, we compute a similarity score (for example, the inner product or cosine similarity) between q(x) and every document embedding d(z_i). The documents whose embeddings are most similar to the query embedding are retrieved as relevant to the query.
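The retrieval step can be sketched as exact (brute-force) top-k search, which is what a flat Faiss index such as `IndexFlatIP` performs over all stored vectors; the `top_k` function and the two-dimensional vectors below are illustrative assumptions, not Faiss code. Whether retrieval is phrased as a distance or a similarity, the ranking is the same once embeddings are normalized to unit length, because ||q - d||^2 = ||q||^2 + ||d||^2 - 2 q·d = 2 - 2 q·d. The sketch checks that both orderings agree.

```python
import heapq
import math


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def top_k(query: list[float], docs: list[list[float]], k: int) -> list[tuple[int, float]]:
    """Exact retrieval: score every document by inner product with the query and keep
    the k best. With unit-length vectors the inner product is the cosine similarity."""
    scores = ((i, sum(a * b for a, b in zip(query, d))) for i, d in enumerate(docs))
    return heapq.nlargest(k, scores, key=lambda pair: pair[1])


def l2_squared(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


docs = [normalize(v) for v in ([1.0, 0.0], [0.6, 0.8], [0.0, 1.0])]
q = normalize([1.0, 2.0])

best = top_k(q, docs, k=2)
print([i for i, _ in best])  # highest inner product first

# For unit vectors, ||q - d||^2 = 2 - 2 q.d, so the smallest distance gives the same order
by_distance = sorted(range(len(docs)), key=lambda i: l2_squared(q, docs[i]))
print(by_distance[:2])
```

Both lines print `[1, 2]`. Brute-force search is linear in the number of documents; approximate indexes trade a little recall for speed on large knowledge bases.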

Generation

Finally, the LLM (here, Gemma) generates a response to the user query. Unlike a standalone LLM, however, it receives the retrieved context as part of its prompt. This lets it draw on relevant information from the knowledge base when answering, which can improve accuracy and reduce hallucinations.
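The augmentation itself amounts to placing the retrieved passages into the prompt ahead of the question. In the sketch below, the `augment_prompt` name, the instruction wording, and the character budget are illustrative assumptions rather than Gemma's or LangChain's prompt format, and the actual call to the LLM is omitted.

```python
def augment_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    """Build an augmented prompt from retrieved passages (hypothetical template).

    Passages are added in ranked order until the character budget is used up,
    a crude stand-in for respecting the model's context window."""
    selected, used = [], 0
    for p in passages:
        if used + len(p) > max_chars:
            break
        selected.append(p)
        used += len(p)
    # Number the passages so the answer can refer back to them
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(selected, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


retrieved = [
    "Gemma is a family of open models released by Google.",
    "Faiss is a library for efficient similarity search.",
]
prompt = augment_prompt("Who released Gemma?", retrieved)
print(prompt)
```

The resulting string would then be passed to the LLM for generation. Asking the model to say when the context is insufficient is one common way to discourage it from falling back on invented answers, though it does not guarantee that.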

Conclusion

By applying Retrieval-Augmented Generation (RAG), we can substantially enhance the capabilities of Large Language Models. Giving an LLM access to relevant, specific information at query time can improve the accuracy and consistency of its responses, making it better suited to real-world applications that depend on accurate, up-to-date knowledge.
