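Retrieval-Augmented Generation (RAG) enhances large language models (LLMs) by integrating specific knowledge from a knowledge base. The approach uses vector embeddings to retrieve relevant information efficiently and add it to the LLM's context. By giving the model access to specific information at question-answering time, RAG addresses LLM limitations such as outdated knowledge and hallucinations.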

Introduction: Enhancing Large Language Models with Retrieval-Augmented Generation (RAG)

Large Language Models (LLMs) can recall and synthesize a large amount of knowledge encoded in their parameters. They have two significant limitations, however: they know little beyond their training data, so their knowledge can be out of date, and they tend to generate fictitious information (hallucinate) when asked about specifics.

Retrieval-Augmented Generation (RAG)

Researchers at Facebook AI Research, University College London, and New York University introduced Retrieval-Augmented Generation (RAG) in 2020. RAG supplies a pre-trained LLM with additional context in the form of specific, relevant information, enabling it to generate informed responses to user queries.

Implementation with Hugging Face Transformers, LangChain, and Faiss

This article is a guide to implementing RAG with Google's Gemma LLM using Hugging Face Transformers, LangChain, and the Faiss vector search library. It covers both the theoretical underpinnings and the practical aspects of the RAG pipeline.

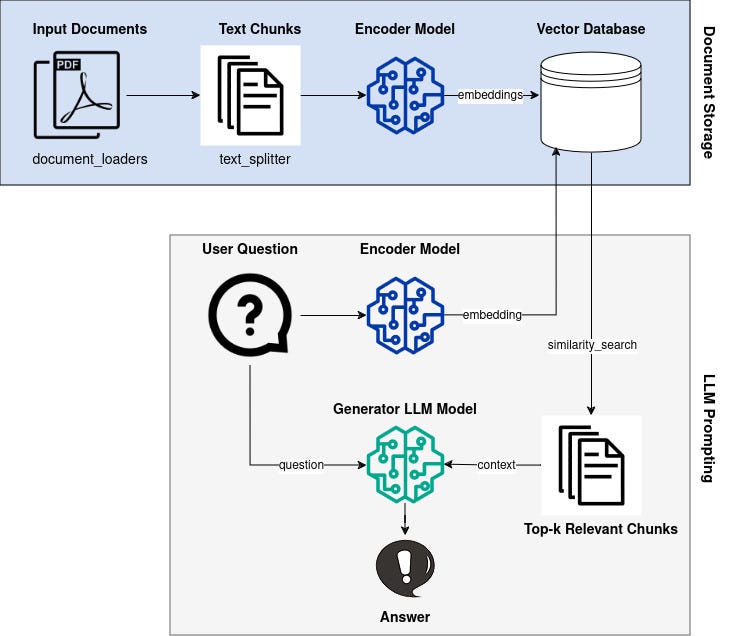

Overview of the RAG Pipeline

The RAG pipeline comprises the following steps:

- Knowledge Base Vectorization: Encode a knowledge base (e.g., Wikipedia documents) into dense vector representations (embeddings).

- Query Vectorization: Convert user queries into vector embeddings using the same encoder model.

- Retrieval: Identify embeddings in the knowledge base that are similar to the query embedding based on a similarity metric.

- Generation: Generate a response using the LLM, augmented with the retrieved context from the knowledge base.

Knowledge Base and Vectorization

We begin by selecting an appropriate knowledge base, such as Wikipedia or a domain-specific corpus. Each document z_i in the knowledge base is converted into an embedding vector d(z_i) using an encoder model.
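
As a rough illustration of this step, the sketch below maps a tiny made-up knowledge base to fixed-size vectors. The `toy_embed` function is a hypothetical stand-in (a hashed bag-of-words), not the neural encoder a real pipeline would use; the dimension `DIM` and the sample documents are likewise assumptions chosen for the example.

```python
import hashlib
import math

DIM = 64  # embedding size; an arbitrary choice for this toy example


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for a neural encoder: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # A stable hash (unlike built-in hash()) keeps buckets identical across runs.
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec


# Made-up knowledge base: each z_i is mapped to d(z_i) once, ahead of query time.
knowledge_base = [
    "Gemma is a family of open language models released by Google.",
    "Faiss is a library for efficient similarity search over dense vectors.",
    "LangChain helps compose language model calls with external data sources.",
]
doc_embeddings = [toy_embed(z_i) for z_i in knowledge_base]
```

The same function would also be applied to the user's question, so that documents and queries live in one vector space and can be compared directly.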

Query Vectorization

When a user poses a question x, it is also transformed into an embedding vector q(x) using the same encoder model.

Retrieval

To identify relevant documents in the knowledge base, we use a similarity metric, such as the inner product or cosine similarity, to compare q(x) with every available d(z_i). Documents whose embeddings are most similar to the query embedding are considered relevant to the query.
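
The comparison can be sketched as an exhaustive top-k search. This is a minimal illustration, assuming cosine similarity as the metric and using hand-written three-dimensional vectors in place of real embeddings; the function names `cosine_similarity` and `retrieve` are hypothetical helpers, not part of any library.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec, doc_vecs, k=2):
    """Return (index, score) pairs for the k documents most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hand-written vectors standing in for d(z_i) and q(x).
doc_vecs = [
    [1.0, 0.0, 0.0],
    [0.6, 0.8, 0.0],
    [0.0, 0.0, 1.0],
]
query_vec = [0.2, 1.0, 0.0]

top = retrieve(query_vec, doc_vecs, k=2)
# Document 1 ranks first, followed by document 0.
```

Scanning every document is fine for a handful of vectors. For a large knowledge base, a vector index such as Faiss, which this article names as part of its stack, would take over this search.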

Generation

The LLM then generates a response to the user query. Unlike a standalone LLM, which sees only the question, Gemma here also receives the retrieved context. This lets it incorporate relevant information from the knowledge base into its response, improving accuracy and reducing hallucinations.
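
One common way to pass retrieved context to the model is to place it in the prompt ahead of the question. The sketch below shows that idea only. The `build_prompt` helper, the instruction wording, and the sample passages are all assumptions made for illustration, and the actual call to Gemma is left out.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into a single prompt string."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Made-up passages standing in for the retriever's output.
retrieved = [
    "Faiss is a library for efficient similarity search over dense vectors.",
    "RAG was introduced in 2020.",
]
prompt = build_prompt("What is Faiss used for?", retrieved)
# `prompt` would then be passed to the LLM (Gemma in this article) for generation.
```

Numbering the passages makes it easier to ask the model to cite which passage supports its answer, although whether it does so reliably depends on the model and the instructions.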

Conclusion

Retrieval-Augmented Generation (RAG) can substantially enhance the capabilities of Large Language Models. Giving an LLM access to specific, relevant information improves the accuracy and consistency of its responses, making it better suited to real-world applications that require accurate and informative knowledge retrieval.
