Researchers from Carnegie Mellon University and Meta propose Content-Adaptive Tokenization (CAT), a pioneering framework for content-aware image tokenization

In AI-driven image modeling, one challenge that has yet to be fully addressed is accounting for how much image content varies in complexity. Existing tokenization methods largely use static compression ratios, treating all images alike regardless of their complexity. As a result, complex images are often over-compressed and lose crucial information, while simpler images are under-compressed and waste computational resources. These inefficiencies directly affect downstream tasks such as image reconstruction and generation, where accurate and efficient representation plays a pivotal role.

Current image tokenization techniques do not adequately handle variation in content complexity. Fixed-ratio approaches, such as resizing images to standard sizes, ignore differences in complexity between images. Some Vision Transformer variants adapt patch size dynamically, but they rely on image input and lack the flexibility required for text-to-image applications. Other compression techniques, such as JPEG, are designed for traditional media and are not optimized for deep learning-based tokenization. Recent work such as ElasticTok has explored random token-length strategies but does not consider intrinsic content complexity during training, leading to inefficiencies in quality and computational cost.

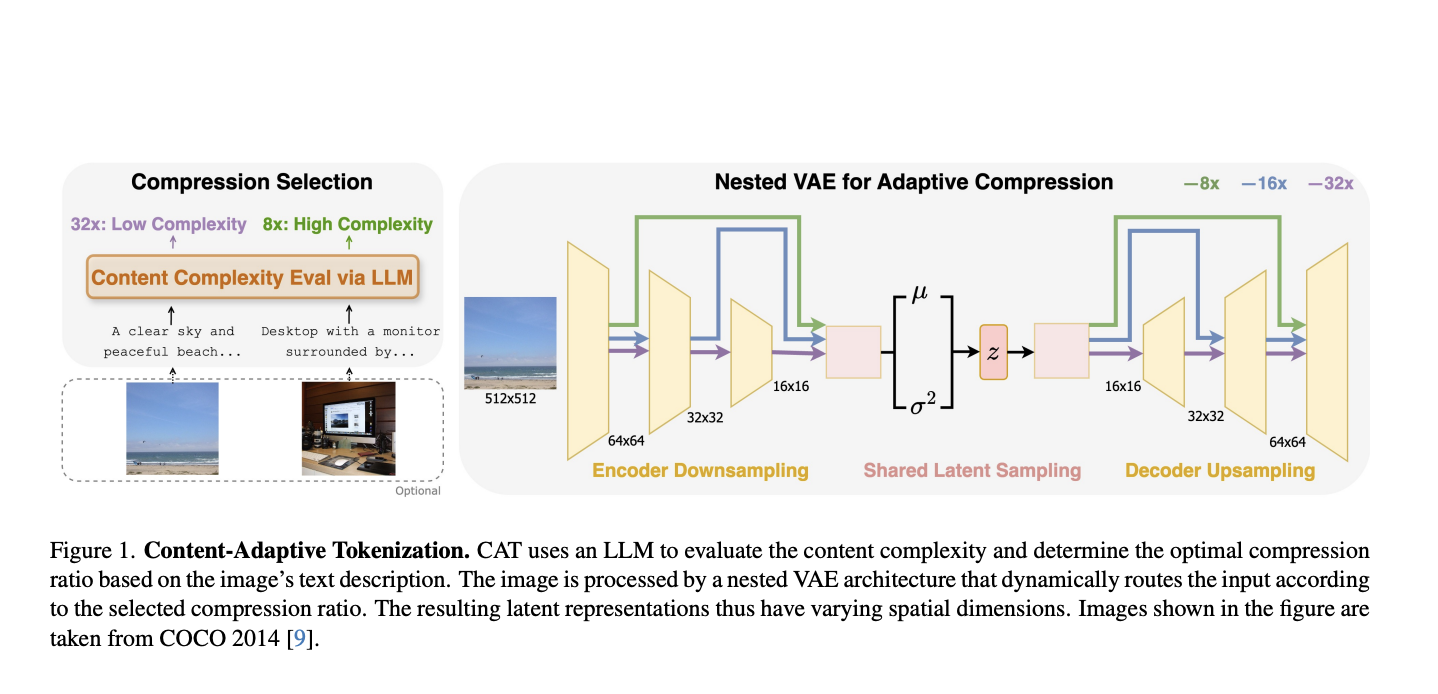

To address these limitations, researchers from Carnegie Mellon University and Meta have proposed Content-Adaptive Tokenization (CAT), a framework for content-aware image tokenization that allocates representation capacity dynamically based on content complexity. CAT uses large language models to assess image complexity from captions and perception-based queries, classifying each image into one of three compression levels: 8x, 16x, or 32x. It also uses a nested VAE architecture that generates variable-length latent features by dynamically routing intermediate outputs according to image complexity. The adaptive design reduces training overhead and improves image representation quality relative to fixed-ratio methods. Notably, because the complexity analysis is text-based, CAT can tokenize adaptively and efficiently without requiring image inputs at inference.
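To make the three compression levels concrete, the following minimal sketch maps a complexity score to a ratio and computes the resulting latent grid size. The 1 to 9 score range, the cutoffs, and the function names are assumptions made for illustration; the article does not state the actual scoring rule. The only facts used are the three ratios (8x, 16x, 32x) and that a spatial compression ratio `f` shrinks each image side by a factor of `f`.

```python
from typing import Tuple

# Compression ratios named in the article.
COMPRESSION_LEVELS = (8, 16, 32)

# Hypothetical cutoffs on a hypothetical 1-9 complexity score.
LOW_COMPLEXITY_MAX = 3
MEDIUM_COMPLEXITY_MAX = 6


def choose_compression(complexity_score: int) -> int:
    """Map an (assumed) 1-9 complexity score to a spatial compression ratio."""
    if not 1 <= complexity_score <= 9:
        raise ValueError("complexity_score must be between 1 and 9")
    if complexity_score <= LOW_COMPLEXITY_MAX:
        return 32  # simple content: compress aggressively
    if complexity_score <= MEDIUM_COMPLEXITY_MAX:
        return 16
    return 8  # complex content: keep more latent positions


def latent_grid(height: int, width: int, ratio: int) -> Tuple[int, int]:
    """Return the latent height and width for a given compression ratio."""
    if ratio not in COMPRESSION_LEVELS:
        raise ValueError(f"ratio must be one of {COMPRESSION_LEVELS}")
    if height % ratio or width % ratio:
        raise ValueError("image sides must be divisible by the ratio")
    return height // ratio, width // ratio


def latent_positions(height: int, width: int, ratio: int) -> int:
    """Number of spatial positions in the latent grid."""
    rows, cols = latent_grid(height, width, ratio)
    return rows * cols
```

For a 512x512 image, `latent_positions` gives 4096 positions at 8x, 1024 at 16x, and 256 at 32x, so each step up in compression level cuts the latent size by a factor of four.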

CAT evaluates complexity with LLM-produced captions that consider semantic, visual, and perceptual features when determining compression ratios. This caption-based system is reported to align better with human-perceived importance than traditional measures such as JPEG file size and MSE. The adaptive nested VAE design supports this with channel-matched skip connections that dynamically adjust the latent space across compression levels. Shared parameterization keeps the scales consistent, and training combines reconstruction error, a perceptual loss (e.g., LPIPS), and an adversarial loss. CAT was trained on a dataset of 380 million images and tested on COCO, ImageNet, CelebA, and ChartQA, demonstrating its applicability to different image types.
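The combined training objective can be sketched as a weighted sum of its three terms. This is an illustrative sketch only: the weights, the function names, and the use of plain MSE for the reconstruction term are assumptions, and the perceptual and adversarial values are passed in as precomputed numbers rather than computed by real LPIPS or discriminator networks.

```python
from typing import Sequence


def mean_squared_error(target: Sequence[float], recon: Sequence[float]) -> float:
    """Pixel-wise mean squared error between two flattened images."""
    if len(target) != len(recon) or len(target) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - r) ** 2 for t, r in zip(target, recon)) / len(target)


def tokenizer_loss(
    target: Sequence[float],
    recon: Sequence[float],
    perceptual: float,
    adversarial: float,
    perceptual_weight: float = 1.0,   # hypothetical weight
    adversarial_weight: float = 0.1,  # hypothetical weight
) -> float:
    """Weighted sum of reconstruction, perceptual, and adversarial terms."""
    reconstruction = mean_squared_error(target, recon)
    return (
        reconstruction
        + perceptual_weight * perceptual
        + adversarial_weight * adversarial
    )
```

In practice the relative weights are tuned, since an adversarial term that is weighted too heavily can destabilize training while one weighted too lightly has little effect on sharpness.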

By adapting compression to content complexity, this approach yields substantial improvements in both image reconstruction and generation. For reconstruction, it improves the rFID, LPIPS, and PSNR metrics. It delivers a 12% quality improvement on CelebA and a 39% improvement on ChartQA, while maintaining comparable quality on datasets such as COCO and ImageNet with fewer tokens and greater efficiency. For class-conditional ImageNet generation, CAT outperforms fixed-ratio baselines with an FID of 4.56 and improves inference throughput by 18.5%. These results position the adaptive tokenization framework as a reference point for future work.
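Of the reconstruction metrics mentioned, PSNR is the simplest to compute directly. The sketch below uses the standard definition, 10 · log10(MAX² / MSE), on flattened pixel lists; the function name and the 8-bit default for `max_value` are illustrative choices, not details from the CAT evaluation code.

```python
import math
from typing import Sequence


def psnr(
    original: Sequence[float],
    reconstructed: Sequence[float],
    max_value: float = 255.0,  # assumes 8-bit pixel values
) -> float:
    """Peak signal-to-noise ratio in decibels; higher means closer to the original."""
    if len(original) != len(reconstructed) or len(original) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR depends only on pixel-wise error, it is usually reported alongside perceptual metrics such as LPIPS and distribution-level metrics such as rFID, as is done here.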

CAT presents a novel approach to image tokenization that dynamically modulates compression levels based on content complexity. By integrating LLM-based assessments with an adaptive nested VAE, it addresses persistent inefficiencies of fixed-ratio tokenization and significantly improves performance in reconstruction and generation tasks. Its adaptability and effectiveness make it a promising asset in AI-oriented image modeling, with potential applications extending to video and multi-modal domains.