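Researchers from Carnegie Mellon University and Meta Propose Content-Adaptive Tokenization (CAT), a Pioneering Framework for Content-Aware Image Tokenization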

In AI-driven image modeling, one critical challenge that has yet to be fully addressed is accounting for the wide variation in the complexity of image content. Existing tokenization methods largely use static compression ratios, treating all images alike regardless of how complex they are. As a result, complex images are often over-compressed and lose crucial information, while simpler images are under-compressed and waste computational resources. These inefficiencies directly affect downstream tasks such as image reconstruction and generation, where an accurate and efficient representation is essential.

Current image tokenization techniques do not handle this variation in complexity well. Fixed-ratio approaches, such as resizing images to a standard size, ignore differences in content complexity. Although some Vision Transformer variants adapt patch size dynamically, they rely on image input and lack the flexibility required for text-to-image applications. Compression techniques such as JPEG are designed for traditional media and are not optimized for deep-learning-based tokenization. Recent work such as ElasticTok has explored random token-length strategies but does not consider intrinsic content complexity during training, which leads to inefficiencies in quality and computational cost.

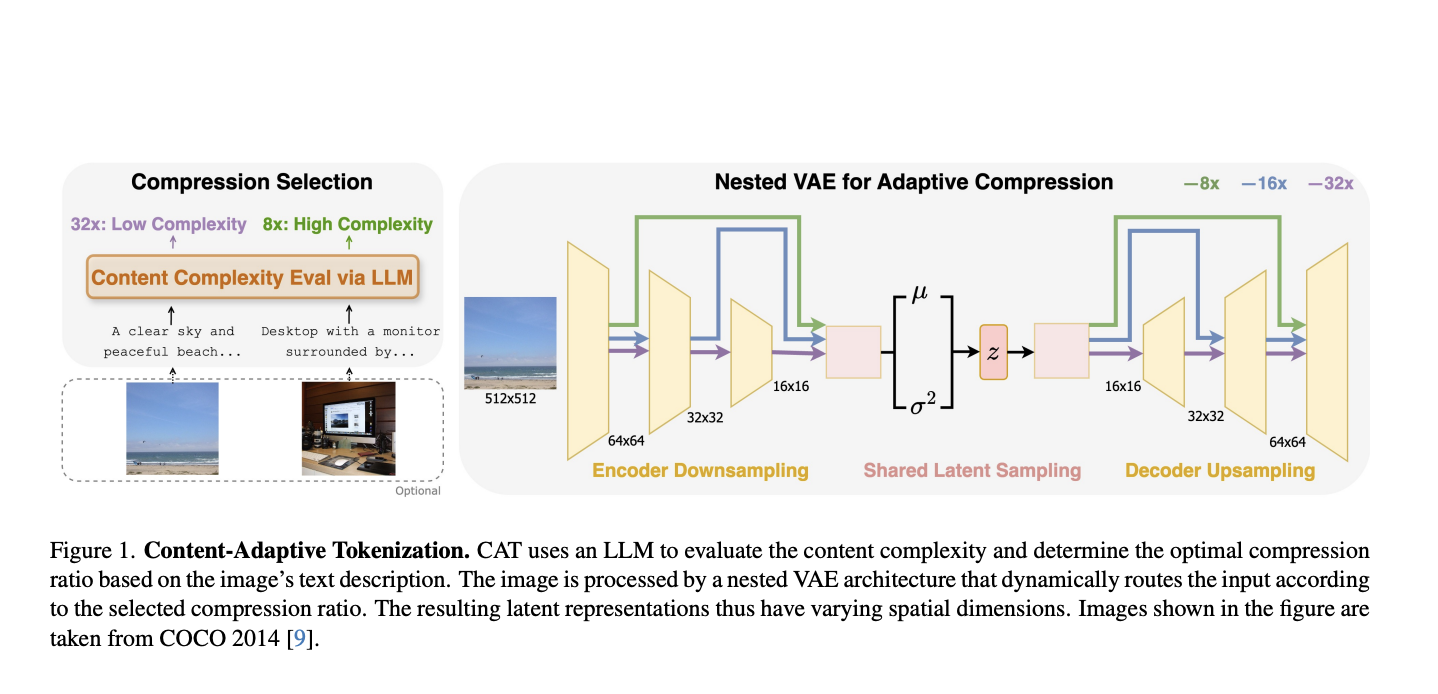

To address these limitations, researchers from Carnegie Mellon University and Meta have proposed Content-Adaptive Tokenization (CAT), a framework for content-aware image tokenization that allocates representation capacity dynamically, based on content complexity. CAT uses large language models to assess image complexity from captions and perception-based queries, classifying each image into one of three compression levels: 8x, 16x, or 32x. It also uses a nested VAE architecture that produces variable-length latent features by dynamically routing intermediate outputs according to image complexity. This adaptive design reduces training overhead and improves image representation quality, overcoming the inefficiencies of fixed-ratio methods. Notably, because the complexity analysis is text-based, CAT can tokenize adaptively and efficiently without requiring image inputs at inference.
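
The routing idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration rather than the authors' implementation: the numeric complexity score and its thresholds (`low`, `high`) are hypothetical, and plain 2x2 average pooling stands in for the learned downsampling blocks of the nested VAE. The only points it tries to show are that a more complex image is assigned a lower compression factor, and that the latent is taken from an intermediate stage whose depth depends on that factor.

```python
def choose_compression(complexity_score, low=3.0, high=6.0):
    """Map a hypothetical complexity score to a compression factor.

    The thresholds are illustrative assumptions; a higher score
    (more complex content) receives less compression.
    """
    if complexity_score >= high:
        return 8
    if complexity_score >= low:
        return 16
    return 32


def avg_pool2(grid):
    """Halve a 2D grid by averaging non-overlapping 2x2 blocks."""
    height, width = len(grid), len(grid[0])
    return [
        [
            (grid[r][c] + grid[r][c + 1] + grid[r + 1][c] + grid[r + 1][c + 1]) / 4.0
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]


def nested_encode(image, factor):
    """Return the intermediate output at the depth matching `factor`.

    Pooling is a stand-in for learned encoder stages: 3 stages give 8x,
    4 stages give 16x, and 5 stages give 32x spatial compression.
    """
    if factor not in (8, 16, 32):
        raise ValueError("factor must be 8, 16, or 32")
    if len(image) % factor or len(image[0]) % factor:
        raise ValueError("image sides must be multiples of the factor")
    stages = factor.bit_length() - 1  # log2 of a power of two
    latent = image
    for _ in range(stages):
        latent = avg_pool2(latent)
    return latent


# Usage: a 64x64 single-channel image with a hypothetical score of 7.5.
image = [[1.0] * 64 for _ in range(64)]
latent = nested_encode(image, choose_compression(7.5))
print(len(latent), len(latent[0]))  # 8 8
```

In this toy setting the same 64x64 input yields an 8x8, 4x4, or 2x2 latent grid depending on the assigned level, which is the sense in which the latent length is variable.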

CAT evaluates complexity from LLM-produced captions that consider semantic, visual, and perceptual features when determining compression ratios. This caption-based system is reported to outperform traditional measures, including JPEG file size and MSE, at mimicking human-perceived importance. The adaptive nested VAE supports this with channel-matched skip connections that dynamically alter the latent space across compression levels. Shared parameterization keeps the scales consistent, and training combines reconstruction error, a perceptual loss (e.g., LPIPS), and an adversarial loss. CAT was trained on a dataset of 380 million images and tested on the COCO, ImageNet, CelebA, and ChartQA benchmarks, demonstrating its applicability to different image types.
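
As a rough sketch of how the three training terms might be combined, the Python snippet below forms a weighted sum. The article does not give the exact reconstruction error or the loss weights, so the L1 error, `w_perceptual`, and `w_adversarial` are assumptions chosen for illustration, and the perceptual and adversarial values are passed in as precomputed numbers rather than produced by real LPIPS or discriminator networks.

```python
def l1_reconstruction(original, reconstructed):
    """Mean absolute error between two equal-length pixel sequences."""
    if not original or len(original) != len(reconstructed):
        raise ValueError("inputs must be non-empty and the same length")
    total = sum(abs(a - b) for a, b in zip(original, reconstructed))
    return total / len(original)


def total_loss(original, reconstructed, perceptual, adversarial,
               w_perceptual=1.0, w_adversarial=0.1):
    """Weighted sum of reconstruction, perceptual, and adversarial terms.

    `perceptual` and `adversarial` are precomputed scalars; the weights
    are hypothetical defaults, not values reported for CAT.
    """
    reconstruction = l1_reconstruction(original, reconstructed)
    return reconstruction + w_perceptual * perceptual + w_adversarial * adversarial


# Usage with made-up numbers: 0.25 (L1) + 1.0 * 0.2 + 0.1 * 0.5
loss = total_loss([0.0, 1.0], [0.0, 0.5], perceptual=0.2, adversarial=0.5)
print(round(loss, 6))  # 0.5
```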

By adapting compression to content complexity, this approach improves both image reconstruction and generation. For reconstruction, it improves the rFID, LPIPS, and PSNR metrics, delivering a 12% quality improvement on CelebA and a 39% improvement on ChartQA, while keeping quality comparable on datasets such as COCO and ImageNet with fewer tokens. For class-conditional ImageNet generation, CAT outperforms the fixed-ratio baselines with an FID of 4.56 and improves inference throughput by 18.5%. This adaptive tokenization framework offers a new benchmark for further improvement.
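
To see how adaptive compression can lower the average token count, the arithmetic can be worked through in a few lines of Python. The 512x512 resolution and the split of 100 images across the three levels are hypothetical numbers picked for illustration, not figures from the CAT experiments.

```python
def latent_tokens(height, width, factor):
    """Number of latent positions after compressing each side by `factor`."""
    if height % factor or width % factor:
        raise ValueError("image sides must be multiples of the factor")
    return (height // factor) * (width // factor)


def average_tokens(counts_by_factor, height, width):
    """Average tokens per image for a given number of images at each factor."""
    images = sum(counts_by_factor.values())
    tokens = sum(
        count * latent_tokens(height, width, factor)
        for factor, count in counts_by_factor.items()
    )
    return tokens / images


# Hypothetical batch of 100 images at 512x512:
# 5 at 8x (4096 tokens each), 45 at 16x (1024), 50 at 32x (256).
adaptive = average_tokens({8: 5, 16: 45, 32: 50}, 512, 512)
fixed = latent_tokens(512, 512, 16)
print(adaptive, fixed)  # 793.6 1024
```

Under this assumed mix the adaptive scheme averages fewer tokens than a fixed 16x tokenizer while still giving the most complex images four times as many tokens. A mix with more images at 8x would shift the balance the other way.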

CAT presents a novel approach to image tokenization that dynamically modulates compression according to content complexity. By integrating LLM-based assessments with an adaptive nested VAE, it removes persistent inefficiencies of fixed-ratio tokenization and improves performance on reconstruction and generation tasks. Its adaptability and effectiveness make it a promising tool for AI-oriented image modeling, with potential applications extending to video and multimodal domains.
