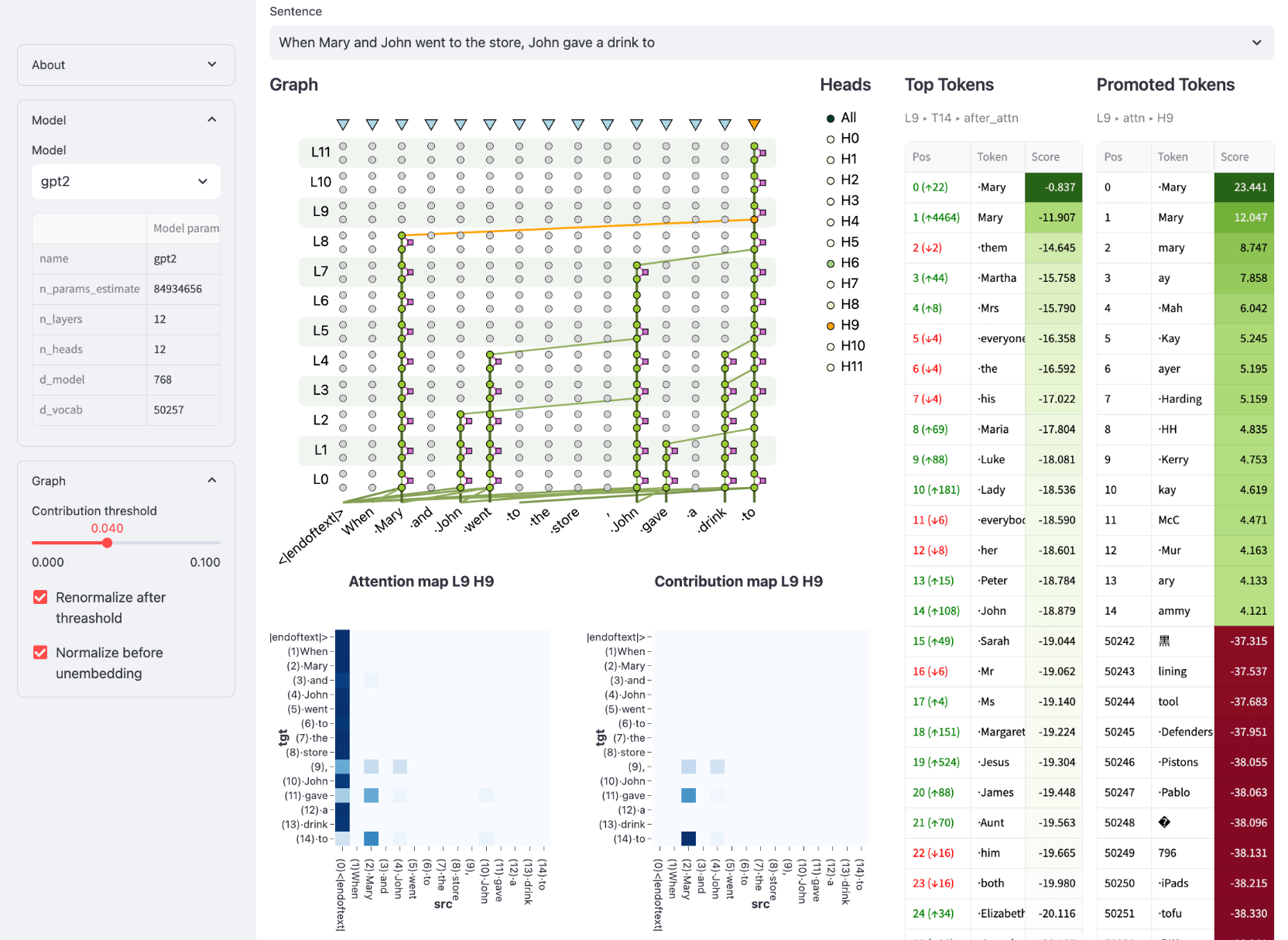

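The Large Language Model Transparency Tool (LLM-TT), developed by Meta Research, offers unprecedented visibility into the decision-making of Transformer-based language models. This open-source toolkit makes it possible to inspect information flow, letting users explore the contributions of attention heads and the influence of individual neurons. By facilitating verification of model behavior, bias detection, and alignment with standards, the tool supports more ethical and trustworthy AI deployments.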

Demystifying Complex Language Models with the Pioneering LLM-TT Tool: Enhancing Transparency and Accountability in AI

In the burgeoning realm of artificial intelligence (AI), large language models (LLMs) have emerged as powerful tools, capable of tasks ranging from natural language processing to content generation. However, their intricate inner workings have remained largely opaque, hindering efforts to ensure their fairness, accountability, and alignment with ethical standards.

Enter the Large Language Model Transparency Tool (LLM-TT), an open-source toolkit developed by Meta Research. It brings unprecedented transparency to LLMs, letting users dissect a model's decision-making process and uncover hidden biases. By exposing how information flows through an LLM, LLM-TT helps researchers, developers, and policymakers alike foster more ethical and responsible AI practices.

Bridging the Gap of Understanding and Oversight

The development of LLM-TT stems from the growing recognition that the complexity of LLMs poses significant challenges for understanding and monitoring their behavior. As these models are increasingly deployed in high-stakes applications, such as decision-making processes and content moderation, the need for methods to ensure their fairness, accuracy, and adherence to ethical principles becomes paramount.

LLM-TT addresses this need by providing a visual representation of the information flow within a model, allowing users to trace the impact of individual components on model outputs. This capability is particularly crucial for identifying and mitigating potential biases that may arise from the training data or model architecture. With LLM-TT, researchers can systematically examine the model's reasoning process, uncover hidden assumptions, and ensure its alignment with desired objectives.

Interactive Inspection of Model Components

LLM-TT offers an interactive user experience, enabling detailed inspection of the model's architecture and its processing of information. By selecting a model and input, users can generate a contribution graph that visualizes the flow of information from input to output. The tool provides interactive controls to adjust the contribution threshold, allowing users to focus on the most influential components of the model's computation.
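
To make the idea of a contribution threshold concrete, here is a minimal sketch in plain Python. It is not LLM-TT's code: the node names, the edge weights, and the `prune` helper are all hypothetical, and the tool's actual attribution method may differ. The sketch only illustrates how raising a threshold shrinks a graph down to the paths that still reach the chosen output.

```python
from collections import defaultdict

# Hypothetical toy graph: (source, target) -> contribution weight in [0, 1].
# Node names such as "tok1@L2" mean "token 1 after layer 2" (an assumed naming scheme).
EDGES = {
    ("tok0@L0", "tok1@L1"): 0.05,
    ("tok1@L0", "tok1@L1"): 0.95,
    ("tok0@L0", "tok0@L1"): 1.00,
    ("tok0@L1", "tok1@L2"): 0.40,
    ("tok1@L1", "tok1@L2"): 0.60,
}


def prune(edges, output_node, threshold):
    """Keep edges at or above `threshold` that still lead to `output_node`."""
    # Index the surviving edges by their target node.
    incoming = defaultdict(list)
    for (src, dst), weight in edges.items():
        if weight >= threshold:
            incoming[dst].append((src, weight))

    # Walk backwards from the output so disconnected edges are dropped.
    kept, stack, seen = {}, [output_node], {output_node}
    while stack:
        node = stack.pop()
        for src, weight in incoming[node]:
            kept[(src, node)] = weight
            if src not in seen:
                seen.add(src)
                stack.append(src)
    return kept


if __name__ == "__main__":
    for threshold in (0.0, 0.5):
        print(threshold, sorted(prune(EDGES, "tok1@L2", threshold)))
```

At a threshold of 0.5 the edge from `tok0@L0` to `tok0@L1` disappears even though its own weight is high, because nothing above the threshold connects it to the output any more.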

Moreover, LLM-TT allows users to select any token within the model's output and explore its representation after each layer in the model. This feature provides insights into how the model processes individual words or phrases, enabling users to understand the relationship between input and output and identify potential sources of bias or error.
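
One common way to read a token's intermediate representation is to project it onto the output vocabulary after each layer and see which token it currently favors. The sketch below assumes that approach for illustration only; the article does not say how LLM-TT does it. The three-word vocabulary, the `UNEMBED` matrix, and the hidden-state values are made up.

```python
import math

# Hypothetical vocabulary and unembedding matrix (one row per vocabulary item, hidden size 2).
VOCAB = ["cat", "dog", "car"]
UNEMBED = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, -1.0],
]

# Hypothetical hidden state of a single token after layers 0, 1 and 2.
LAYER_STATES = [
    [0.2, 0.1],
    [0.5, 1.0],
    [0.1, 2.0],
]


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top_token_per_layer(states, unembed, vocab):
    """Project each layer's hidden state onto the vocabulary and return the top token."""
    results = []
    for state in states:
        logits = [sum(w * h for w, h in zip(row, state)) for row in unembed]
        probs = softmax(logits)
        best = max(range(len(vocab)), key=probs.__getitem__)
        results.append((vocab[best], probs[best]))
    return results


if __name__ == "__main__":
    for layer, (token, prob) in enumerate(top_token_per_layer(LAYER_STATES, UNEMBED, VOCAB)):
        print(f"layer {layer}: {token} ({prob:.2f})")
```

In this toy data the favored token switches from "cat" to "dog" after the second layer, which is the kind of shift a per-layer view is meant to surface.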

Dissecting Attention Mechanisms and Feedforward Networks

LLM-TT goes beyond static visualizations by incorporating interactive elements that allow users to delve deeper into the model's inner workings. By clicking on edges within the contribution graph, users can reveal details about the contributing attention head, providing insights into the specific relationships between input and output tokens.
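
For readers unfamiliar with what an attention head records, the following sketch computes causal scaled dot-product attention weights for a single head in plain Python. It is standard Transformer arithmetic rather than anything specific to LLM-TT, and the tokens, query vectors, and key vectors are hypothetical.

```python
import math

# Hypothetical three-token input with 2-dimensional queries and keys for one head.
TOKENS = ["The", "cat", "sat"]
QUERIES = [[1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]
KEYS = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]


def attention_weights(queries, keys):
    """Causal scaled dot-product attention weights for a single head."""
    dim = len(queries[0])
    rows = []
    for i, query in enumerate(queries):
        # A token may only attend to itself and to earlier tokens.
        visible = keys[: i + 1]
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in visible]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        # Pad masked (future) positions with zero weight.
        rows.append([e / total for e in exps] + [0.0] * (len(keys) - i - 1))
    return rows


if __name__ == "__main__":
    weights = attention_weights(QUERIES, KEYS)
    last = weights[-1]
    source = max(range(len(last)), key=last.__getitem__)
    print(f"'{TOKENS[-1]}' attends most to '{TOKENS[source]}'")
```

Each row of the result says how strongly one token draws on each earlier token through this head, which is the relationship an edge in a contribution graph would point back to.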

Furthermore, LLM-TT provides the ability to inspect feedforward network (FFN) blocks and their constituent neurons. This fine-grained analysis enables users to pinpoint the exact locations within the model where important computations occur, shedding light on the intricate mechanisms that underpin the model's predictions.
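
Neuron-level inspection rests on the fact that an FFN block's output is a sum of per-neuron terms, so each neuron's share can be read off separately. The sketch below assumes a ReLU FFN without biases and scores each neuron by how far it pushes the output along a chosen direction. The weights, the `direction` vector, and the `neuron_contributions` helper are hypothetical, and LLM-TT's own scoring may differ.

```python
def relu(x):
    """Rectified linear unit."""
    return x if x > 0 else 0.0


def neuron_contributions(hidden, w_in, w_out, direction):
    """Rank FFN neurons by how much each one moves the output along `direction`.

    Assumes FFN(h) = sum_i relu(w_in[i] . h) * w_out[i], with no bias terms.
    """
    contributions = []
    for index, (in_row, out_row) in enumerate(zip(w_in, w_out)):
        activation = relu(sum(w * h for w, h in zip(in_row, hidden)))
        score = activation * sum(o * d for o, d in zip(out_row, direction))
        contributions.append((index, score))
    # Largest absolute contribution first.
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical values: a 2-dimensional hidden state and a 3-neuron FFN.
HIDDEN = [1.0, 2.0]
W_IN = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
W_OUT = [[0.5, 0.0], [0.0, -1.0], [2.0, 2.0]]
DIRECTION = [1.0, 1.0]

if __name__ == "__main__":
    for index, score in neuron_contributions(HIDDEN, W_IN, W_OUT, DIRECTION):
        print(f"neuron {index}: {score:+.2f}")
```

Because the per-neuron scores add up to the whole block's effect along that direction, the ranking shows which neurons account for most of it. An inactive neuron contributes nothing regardless of how large its output weights are.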

Enhancing Trust and Reliability in AI Deployments

LLM-TT is a valuable asset for building trust and reliability in AI deployments. By providing a deeper understanding of how LLMs make decisions, it helps users identify and mitigate potential risks, such as biases or errors. This transparency fosters accountability and allows organizations to make informed decisions about the use of LLMs in critical applications.

Conclusion: Empowering Ethical and Responsible AI

The Large Language Model Transparency Tool (LLM-TT) helps researchers, developers, and policymakers enhance the transparency, accountability, and ethical use of large language models. By providing an interactive and comprehensive view into the inner workings of LLMs, LLM-TT supports the development and deployment of fairer, more reliable, and more responsible AI technologies.

As the field of AI continues to advance, the need for robust tools to monitor and understand complex models will only grow stronger. LLM-TT stands as a testament to the importance of transparency and accountability in AI, paving the way for a future where AI systems are used ethically and responsibly to benefit society.
