

Demystifying Complex Language Models with LLM-TT: Enhancing Transparency and Trust in AI

Apr 18, 2024 at 04:00 pm

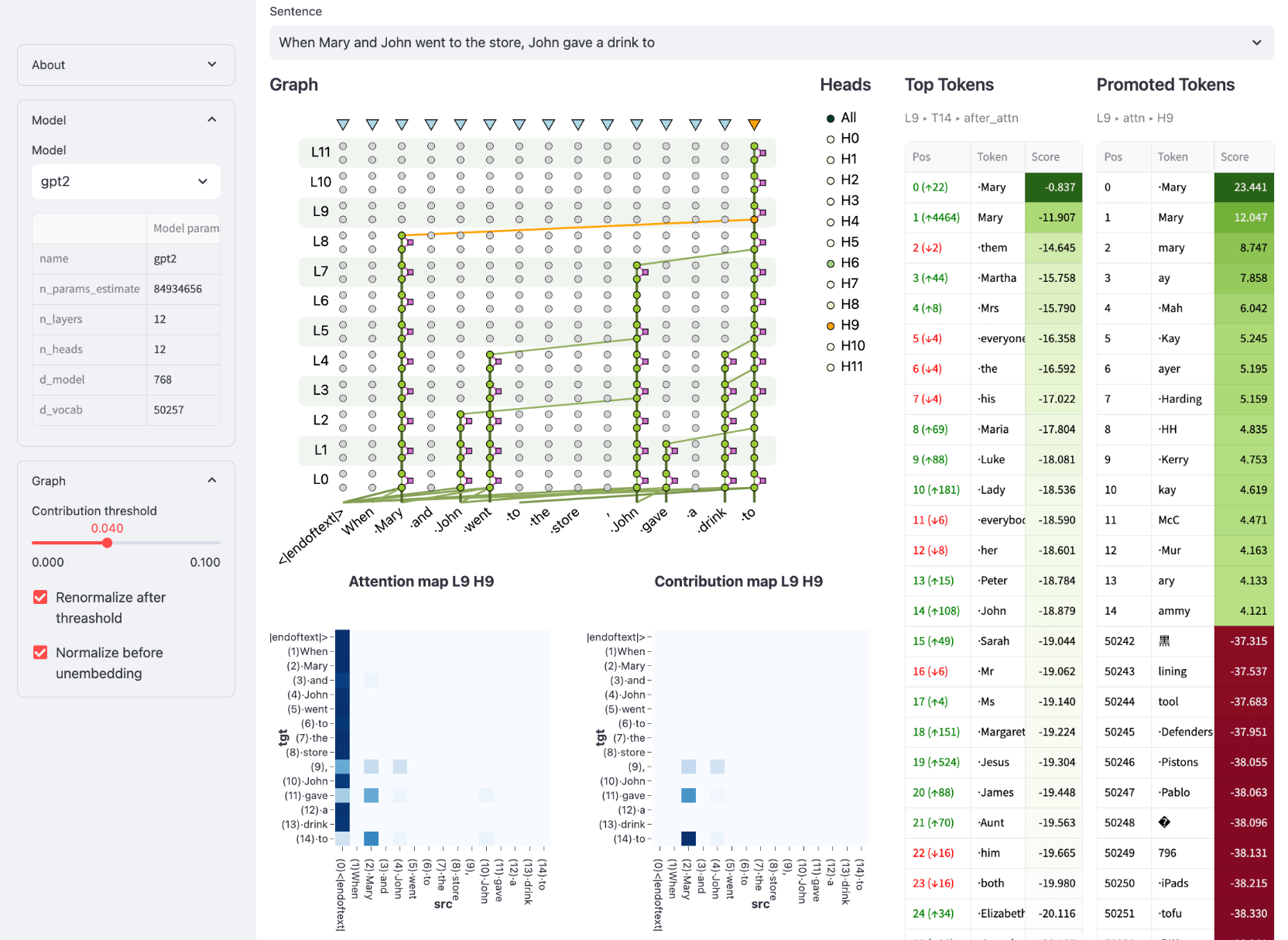

The Large Language Model Transparency Tool (LLM-TT), developed by Meta Research, is an open-source toolkit for inspecting how Transformer-based language models arrive at their outputs. It traces the flow of information through a model and lets users examine the contributions of individual attention heads and neurons. This kind of inspection supports verifying model behavior, detecting bias, and checking alignment with standards, which in turn helps make AI deployments more ethical and trustworthy.


In the burgeoning field of artificial intelligence (AI), large language models (LLMs) have emerged as powerful tools, capable of a wide range of language tasks, from answering questions to generating content. However, their intricate inner workings remain largely opaque, which hinders efforts to ensure their fairness, accountability, and alignment with ethical standards.

Enter the Large Language Model Transparency Tool (LLM-TT), an open-source toolkit developed by Meta Research. It makes LLMs considerably more transparent, letting users dissect how a model reaches its outputs and uncover hidden biases. By exposing the flow of information within an LLM, LLM-TT helps researchers, developers, and policymakers alike foster more ethical and responsible AI practices.

Bridging the Gap in Understanding and Oversight

The development of LLM-TT stems from a growing recognition that the complexity of LLMs makes their behavior difficult to understand and monitor. As these models are increasingly deployed in high-stakes applications, such as automated decision-making and content moderation, methods to ensure their fairness, accuracy, and adherence to ethical principles become essential.

LLM-TT addresses this need by visualizing the flow of information within a model, allowing users to trace how individual components affect its outputs. This is particularly useful for identifying potential biases arising from the training data or the model architecture, a necessary first step toward mitigating them. With LLM-TT, researchers can systematically examine how the model reaches its outputs, uncover hidden assumptions, and check whether its behavior aligns with desired objectives.

Interactive Inspection of Model Components

LLM-TT is interactive, enabling detailed inspection of a model's architecture and of how it processes information. After selecting a model and an input, users can generate a contribution graph that visualizes the flow of information from input to output. Interactive controls adjust the contribution threshold, so users can hide weaker connections and focus on the most influential components of the computation.
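The effect of a contribution threshold can be illustrated with a minimal sketch. It assumes the contribution graph has already been computed as a list of weighted edges between hypothetical node labels; the edge format and the `prune_graph` function are illustrative assumptions, not LLM-TT's actual code or API. Edges below the threshold are dropped, and only edges that still lie on a path to the chosen output node are kept.

```python
from collections import defaultdict

# Hypothetical edge format: (source_node, target_node, contribution_score).
# Node labels such as "L1:tok0" (layer 1, token 0) are illustrative only.
Edge = tuple[str, str, float]


def prune_graph(edges: list[Edge], output_node: str, threshold: float) -> list[Edge]:
    """Drop edges below `threshold`, then keep only edges that still lie on a
    path ending at `output_node`."""
    strong = [e for e in edges if e[2] >= threshold]

    # Index surviving edges by their target so we can walk backward from the output.
    incoming: dict[str, list[Edge]] = defaultdict(list)
    for edge in strong:
        incoming[edge[1]].append(edge)

    kept: list[Edge] = []
    stack, seen = [output_node], {output_node}
    while stack:
        node = stack.pop()
        for edge in incoming[node]:
            kept.append(edge)
            if edge[0] not in seen:
                seen.add(edge[0])
                stack.append(edge[0])
    return kept


# Toy graph whose output node is token 1 after layer 2.
edges = [
    ("L0:tok0", "L1:tok1", 0.05),
    ("L0:tok1", "L1:tok1", 0.60),
    ("L1:tok1", "L2:tok1", 0.70),
    ("L1:tok0", "L2:tok1", 0.30),
    ("L0:tok0", "L1:tok0", 0.90),
]
print(len(prune_graph(edges, "L2:tok1", 0.25)))  # 4 edges survive
print(len(prune_graph(edges, "L2:tok1", 0.50)))  # 2 edges survive
```

Raising the threshold from 0.25 to 0.50 removes the weak L1:tok0 -> L2:tok1 edge, and the strong L0:tok0 -> L1:tok0 edge disappears with it because it no longer leads to the output.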

Moreover, LLM-TT allows users to select any token within the model's output and explore its representation after each layer in the model. This feature provides insights into how the model processes individual words or phrases, enabling users to understand the relationship between input and output and identify potential sources of bias or error.
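One widely used way to read a token's representation after an intermediate layer is to score it against the model's output (unembedding) matrix, a technique often called the "logit lens". The sketch below assumes that approach and uses made-up numbers; `VOCAB`, `UNEMBED`, and `top_token` are hypothetical names, and the code is not taken from LLM-TT.

```python
# Toy setup (illustrative values): a 3-token vocabulary and 2-dimensional hidden states.
VOCAB = ["cat", "dog", "car"]

# Hypothetical unembedding matrix: one weight row per vocabulary token.
UNEMBED = [
    [1.0, 0.0],  # "cat"
    [0.8, 0.6],  # "dog"
    [0.0, 1.0],  # "car"
]


def top_token(hidden: list[float]) -> str:
    """Score every vocabulary token against a hidden state and return the best one.
    Real models usually apply a final normalization layer first; it is omitted here."""
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in UNEMBED]
    best = max(range(len(logits)), key=lambda i: logits[i])
    return VOCAB[best]


# Hypothetical hidden states of one token position after layers 0, 1 and 2.
per_layer_states = [
    [0.9, 0.1],
    [0.6, 0.7],
    [0.1, 1.2],
]
trajectory = [top_token(h) for h in per_layer_states]
print(trajectory)  # ['cat', 'dog', 'car']
```

Reading the top token layer by layer shows how the model's "current guess" for a position shifts as information accumulates.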

Dissecting Attention Mechanisms and Feedforward Networks

LLM-TT goes beyond static visualizations by incorporating interactive elements that allow users to delve deeper into the model's inner workings. By clicking on edges within the contribution graph, users can reveal details about the contributing attention head, providing insights into the specific relationships between input and output tokens.
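Attention weights alone can be misleading, because a head's effect also depends on the size of the vectors it moves. A minimal sketch of one common way to score a source-to-target edge through a single head follows: multiply each attention weight by the corresponding value vector (assumed to have already passed through the head's output projection) and take the L2 norm. The function name and the choice of norm are illustrative assumptions, not necessarily the exact measure LLM-TT uses.

```python
import math


def source_contributions(attn_weights: list[float],
                         projected_values: list[list[float]]) -> list[float]:
    """For one attention head and one target token, return the L2 norm of the
    vector each source token adds: attn_weight_j * projected_value_j.
    `projected_values[j]` is assumed to already include the head's output projection."""
    norms = []
    for a, v in zip(attn_weights, projected_values):
        contribution = [a * x for x in v]
        norms.append(math.sqrt(sum(c * c for c in contribution)))
    return norms


# Illustrative values for three source tokens.
attn_weights = [0.5, 0.25, 0.25]
projected_values = [[0.0, 0.2], [3.0, 4.0], [0.4, 0.3]]

norms = source_contributions(attn_weights, projected_values)
ranking = sorted(range(len(norms)), key=lambda j: norms[j], reverse=True)
print(ranking)  # [1, 2, 0]: token 0 has the largest weight but the smallest contribution
```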

Furthermore, LLM-TT can inspect feedforward network (FFN) blocks and their constituent neurons. This fine-grained analysis helps users locate where within the model important computations occur, shedding light on the mechanisms that underpin its predictions.
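Because an FFN block's output is a sum of per-neuron terms, each neuron's share can be measured separately. The sketch below assumes a simplified FFN with a ReLU activation (many current LLMs use other activations, such as GELU or gated variants) and toy weights; `rank_neurons` is a hypothetical helper, not part of LLM-TT.

```python
import math


def rank_neurons(x: list[float],
                 w_in: list[list[float]],
                 w_out: list[list[float]]) -> list[tuple[int, float]]:
    """Decompose a toy FFN output into per-neuron contributions and rank them.
    Neuron i adds activation_i * w_out[i] to the output, so its contribution
    size is |activation_i| * ||w_out[i]||. ReLU is an assumed activation."""
    ranked = []
    for i, (wi, wo) in enumerate(zip(w_in, w_out)):
        activation = max(0.0, sum(a * b for a, b in zip(x, wi)))  # ReLU
        contribution = [activation * w for w in wo]
        ranked.append((i, math.sqrt(sum(c * c for c in contribution))))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


x = [1.0, 2.0]                                 # hypothetical input to the FFN block
w_in = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # one input weight row per neuron
w_out = [[0.3, 0.4], [1.0, 0.0], [5.0, 5.0]]   # one output weight row per neuron

print(rank_neurons(x, w_in, w_out))  # neuron 1 ranks first, neuron 2 last
```

Neuron 2 has the largest output weights, yet it ranks last because its ReLU activation is zero for this input: a neuron's importance depends on both its weights and how strongly the input activates it.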

Enhancing Trust and Reliability in AI Deployments

LLM-TT can be a valuable asset for building trust in AI deployments and improving their reliability. By giving users a deeper understanding of how LLMs make decisions, it helps them identify and mitigate risks such as biases or errors. This transparency fosters accountability and allows organizations to make informed decisions about using LLMs in critical applications.

Conclusion: Empowering Ethical and Responsible AI

The Large Language Model Transparency Tool (LLM-TT) gives researchers, developers, and policymakers a practical way to improve the transparency, accountability, and ethical use of large language models. By providing an interactive, comprehensive view into the inner workings of LLMs, it supports the development and deployment of fairer, more reliable, and more responsible AI technologies.

As the field of AI continues to advance, the need for robust tools to monitor and understand complex models will only grow. LLM-TT underscores the importance of transparency and accountability in AI and marks a step toward a future in which AI systems are used ethically and responsibly to benefit society.

