|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Cryptocurrency News Articles

ReMoE: ReLU-based Mixture-of-Experts Architecture for Scalable and Efficient Training

Dec 29, 2024 at 04:05 pm

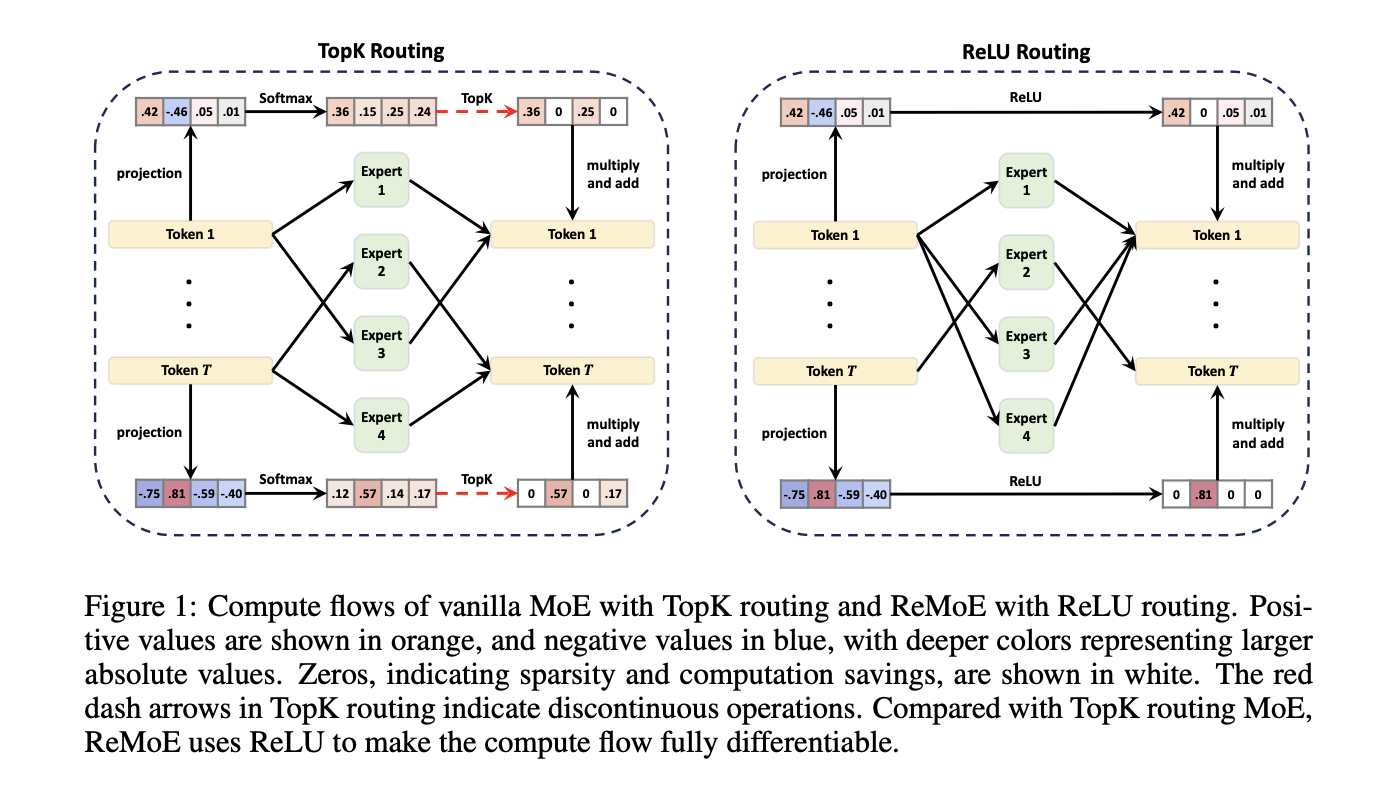

The development of Transformer models has significantly advanced artificial intelligence, delivering remarkable performance across diverse tasks. However, these advancements often come with steep computational requirements, presenting challenges in scalability and efficiency. Sparsely activated Mixture-of-Experts (MoE) architectures provide a promising solution, enabling increased model capacity without proportional computational costs. Yet, traditional TopK+Softmax routing in MoE models faces notable limitations. The discrete and non-differentiable nature of TopK routing hampers scalability and optimization, while ensuring balanced expert utilization remains a persistent issue, leading to inefficiencies and suboptimal performance.

Mixture-of-Experts (MoE) architectures have emerged as a powerful technique to increase the capacity of Transformer models without incurring proportional computational costs. However, traditional TopK+Softmax routing in MoE models presents several limitations, including the discrete and non-differentiable nature of TopK routing, which hampers scalability and optimization, and the difficulty in ensuring balanced expert utilization, leading to inefficiencies and suboptimal performance.

To address these limitations, researchers at Tsinghua University have proposed a new architecture called ReMoE (ReLU-based Mixture-of-Experts). ReMoE replaces the conventional TopK+Softmax routing with a ReLU-based mechanism, enabling a fully differentiable routing process. This design simplifies the architecture and seamlessly integrates with existing MoE systems.

ReMoE utilizes ReLU activation functions to dynamically determine the active state of experts. In contrast to TopK routing, which activates only the top-k experts based on a discrete probability distribution, ReMoE’s ReLU routing transitions smoothly between active and inactive states. The sparsity of activated experts is controlled using adaptive L1 regularization, ensuring efficient computation while maintaining high performance. This differentiable design also allows for dynamic allocation of resources across tokens and layers, adapting to the complexity of individual inputs.

Technical Details and Benefits

The key innovation of ReMoE lies in its routing mechanism. By replacing the discontinuous TopK operation with a continuous ReLU-based approach, ReMoE eliminates abrupt changes in expert activation, ensuring smoother gradient updates and improved stability during training. Additionally, ReMoE’s dynamic routing mechanism allows for adjusting the number of active experts based on token complexity, promoting efficient resource utilization.

To address imbalances where some experts might remain underutilized, ReMoE incorporates an adaptive load-balancing strategy into its L1 regularization. This refinement ensures a fairer distribution of token assignments across experts, enhancing the model’s capacity and overall performance. The architecture’s scalability is evident in its ability to handle a larger number of experts and finer levels of granularity compared to traditional MoE models.

Performance Insights and Experimental Results

Extensive experiments demonstrate that ReMoE consistently outperforms conventional MoE architectures. The researchers tested ReMoE using the LLaMA architecture, training models of varying sizes (182M to 978M parameters) with different numbers of experts (4 to 128). Key findings include:

For instance, on downstream tasks like ARC, BoolQ, and LAMBADA, ReMoE showed measurable accuracy improvements over both dense and TopK-routed MoE models. Analyses of training and inference throughput revealed that ReMoE’s differentiable design introduces minimal computational overhead, making it suitable for practical applications.

Conclusion

ReMoE presents a valuable advance in Mixture-of-Experts architectures by addressing the limitations of TopK+Softmax routing. The ReLU-based routing mechanism, combined with adaptive regularization techniques, ensures that ReMoE is both efficient and adaptable. This innovation highlights the potential of revisiting foundational design choices to achieve better scalability and performance. By offering a practical and resource-conscious approach, ReMoE provides a useful tool for advancing AI systems to meet growing computational demands.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 60k+ ML SubReddit.

Trending: LG AI Research Releases EXAONE 3.5: Three Open-Source Bilingual Frontier AI-level Models Delivering Unmatched Instruction Following and Long Context Understanding for Global Leadership in Generative AI Excellence…

Disclaimer:info@kdj.com

The information provided is not trading advice. kdj.com does not assume any responsibility for any investments made based on the information provided in this article. Cryptocurrencies are highly volatile and it is highly recommended that you invest with caution after thorough research!

If you believe that the content used on this website infringes your copyright, please contact us immediately (info@kdj.com) and we will delete it promptly.

-

-

-

-

-

-

-

-

- Publicly Traded Bitcoin Miners Pop Friday—But YTD Losses Still Cut Deep

- Apr 12, 2025 at 08:45 am

- Financial markets shimmered with cautious optimism as U.S. equities closed positively Friday, with the Nasdaq Composite rising 2.06% and the digital asset sector vaulting 3.72% to a $2.63 trillion valuation.

-