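The development of Transformer models has significantly advanced artificial intelligence, delivering excellent performance across a wide range of tasks. However, these advances usually come with stringent computational requirements, which pose challenges for scalability and efficiency. Sparsely activated Mixture-of-Experts (MoE) architectures offer a promising solution, increasing model capacity without a proportional increase in computational cost. Yet the conventional TopK+Softmax routing used in MoE models has significant limitations. The discrete, non-differentiable nature of TopK routing hinders scalability and optimization, while ensuring balanced expert utilization remains a long-standing problem, leading to inefficiency and suboptimal performance.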

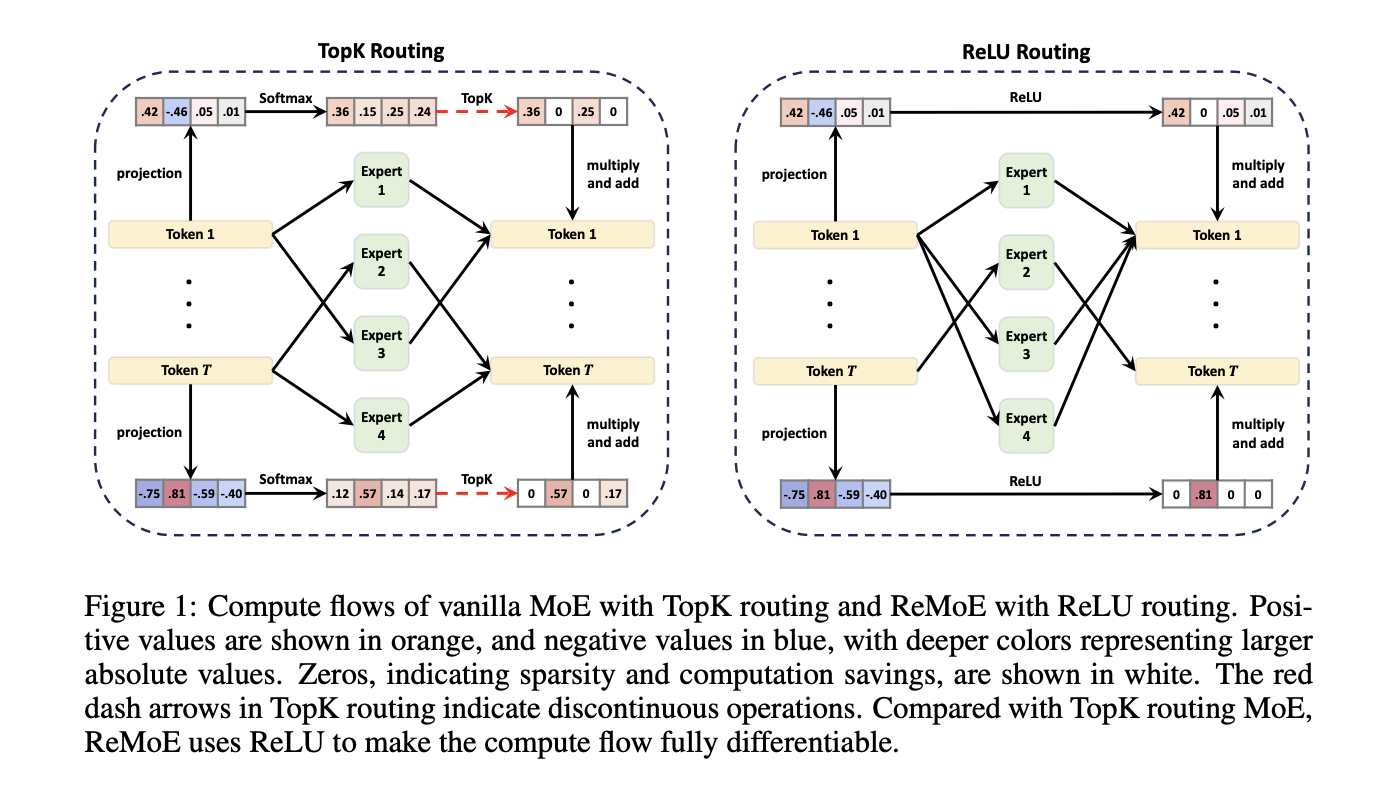

Mixture-of-Experts (MoE) architectures have emerged as a powerful technique for increasing the capacity of Transformer models without incurring proportional computational costs. However, traditional TopK+Softmax routing in MoE models has two main limitations: the discrete, non-differentiable nature of TopK routing hampers scalability and optimization, and balanced expert utilization is difficult to ensure, which leads to inefficiency and suboptimal performance.

To address these limitations, researchers at Tsinghua University have proposed a new architecture called ReMoE (ReLU-based Mixture-of-Experts). ReMoE replaces the conventional TopK+Softmax routing with a ReLU-based mechanism, enabling a fully differentiable routing process. This design simplifies the architecture and seamlessly integrates with existing MoE systems.

ReMoE uses ReLU activation functions to determine dynamically whether each expert is active. TopK routing always activates exactly the k experts with the highest router probabilities, a discrete selection; ReMoE's ReLU routing instead transitions smoothly between active and inactive states, because a gate value shrinks continuously to zero before its expert switches off. The sparsity of activated experts is controlled with adaptive L1 regularization, keeping computation efficient while maintaining high performance. This differentiable design also allows resources to be allocated dynamically across tokens and layers, adapting to the complexity of individual inputs.
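
The routing rule described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes gates are computed as ReLU(x · W) for a single token `x` and a hypothetical router matrix `w_router`, and that an expert is evaluated only when its gate is positive. Unlike TopK routing, which always selects exactly k experts, the number of active experts here depends on the token.

```python
"""Minimal ReLU-routing sketch (illustrative only, not the ReMoE authors' code).

Assumptions: one token vector `x`, a hypothetical router matrix `w_router`
with one column per expert, and gates computed as ReLU(x @ w_router).
"""
from typing import Callable, List, Tuple

Vector = List[float]


def relu_router(x: Vector, w_router: List[Vector]) -> Vector:
    """Return one non-negative gate per expert: ReLU(x @ w_router)."""
    num_experts = len(w_router[0])
    gates = []
    for e in range(num_experts):
        logit = sum(x_i * w_router[i][e] for i, x_i in enumerate(x))
        gates.append(max(0.0, logit))  # a non-positive logit switches the expert off
    return gates


def moe_forward(x: Vector, w_router: List[Vector],
                experts: List[Callable[[Vector], Vector]]) -> Tuple[Vector, Vector]:
    """Gate-weighted sum of expert outputs; experts with a zero gate are never called."""
    gates = relu_router(x, w_router)
    out = [0.0] * len(x)  # assumes each expert returns a vector the same length as x
    for gate, expert in zip(gates, experts):
        if gate > 0.0:
            y = expert(x)
            out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, gates


if __name__ == "__main__":
    W = [[1.0, -1.0, 0.5], [0.5, 1.0, -1.0]]  # 2-dim tokens, 3 experts
    print(relu_router([1.0, 1.0], W))  # [1.5, 0.0, 0.0] -> 1 active expert
    print(relu_router([1.0, 2.0], W))  # [2.0, 1.0, 0.0] -> 2 active experts
```

With the example router matrix, the first token activates one expert and the second activates two, which is the per-token variability the paragraph describes.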

Technical Details and Benefits

The key innovation of ReMoE lies in its routing mechanism. By replacing the discontinuous TopK operation with a continuous ReLU-based approach, ReMoE eliminates abrupt changes in expert activation, ensuring smoother gradient updates and improved stability during training. Additionally, ReMoE’s dynamic routing mechanism allows for adjusting the number of active experts based on token complexity, promoting efficient resource utilization.
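
To make the discontinuity argument concrete, the sketch below compares how TopK+Softmax gates and ReLU gates respond when two router logits swap by a tiny amount. It assumes one common TopK variant (softmax over all experts, then zeroing everything outside the top k); variants that renormalize over the selected experts still jump in the same way. The helper names are hypothetical, and the example measures gate values rather than gradients.

```python
"""TopK+Softmax vs ReLU gates under a tiny change in router logits (illustrative sketch)."""
import math
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def topk_softmax_gates(logits: List[float], k: int) -> List[float]:
    """Softmax over all experts, then zero every probability outside the top k (assumed variant)."""
    probs = softmax(logits)
    top = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    return [p if i in top else 0.0 for i, p in enumerate(probs)]


def relu_gates(logits: List[float]) -> List[float]:
    """ReLU routing: each gate is max(0, logit), a continuous function of the logit."""
    return [max(0.0, z) for z in logits]


def max_gate_change(gate_fn: Callable[[List[float]], List[float]],
                    before: List[float], after: List[float]) -> float:
    """Largest absolute change in any expert's gate between two logit vectors."""
    return max(abs(a - b) for a, b in zip(gate_fn(before), gate_fn(after)))


if __name__ == "__main__":
    before, after = [1.0, 0.999], [0.999, 1.0]  # logits differ by only 0.001
    # TopK: roughly 0.5, because the whole gate jumps to the other expert
    print(max_gate_change(lambda z: topk_softmax_gates(z, k=1), before, after))
    # ReLU: roughly 0.001, because each gate moves only as much as its logit
    print(max_gate_change(relu_gates, before, after))
```

A perturbation of 0.001 in the logits moves a TopK gate by about half its range, while the ReLU gates move by the same 0.001, which is the smoother behavior the paragraph attributes to ReMoE.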

To address imbalances where some experts might remain underutilized, ReMoE incorporates an adaptive load-balancing strategy into its L1 regularization. This refinement ensures a fairer distribution of token assignments across experts, enhancing the model’s capacity and overall performance. The architecture’s scalability is evident in its ability to handle a larger number of experts and finer levels of granularity compared to traditional MoE models.
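
The adaptive L1 regularization and its load-balancing refinement can be sketched as follows. This is an illustrative reading of the description above, and every specific is an assumption: sparsity is measured as the fraction of zero gates, the target sparsity for an average of k active experts out of E is taken as 1 - k/E, the coefficient `lam` is adjusted multiplicatively by a factor `alpha` (1.2 here, chosen for illustration) depending on whether routing is denser or sparser than the target, and each expert's L1 term is weighted by its share of routed tokens so that overloaded experts are pushed harder toward zero. Consult the paper for the exact formulation.

```python
"""Adaptive L1 sparsity control with load balancing (illustrative sketch).

Assumed, not taken from the ReMoE code: target sparsity 1 - k/E, a
multiplicative update of the L1 coefficient by `alpha`, and per-expert
weights proportional to each expert's share of routed tokens.
"""
from typing import List

Gates = List[List[float]]  # gates[token][expert], all >= 0 after ReLU


def sparsity(gates: Gates) -> float:
    """Fraction of (token, expert) gate values that are exactly zero."""
    total = sum(len(row) for row in gates)
    zeros = sum(1 for row in gates for g in row if g == 0.0)
    return zeros / total


def update_lambda(lam: float, gates: Gates, k: int, alpha: float = 1.2) -> float:
    """Raise the L1 coefficient when too many experts are active, lower it when too few."""
    num_experts = len(gates[0])
    target = 1.0 - k / num_experts  # desired fraction of inactive gates
    current = sparsity(gates)
    if current < target:
        return lam * alpha  # too dense: strengthen the penalty
    if current > target:
        return lam / alpha  # too sparse: relax the penalty
    return lam


def balanced_l1(gates: Gates, k: int) -> float:
    """L1 penalty on gates, weighting each expert by its normalized token load."""
    num_tokens, num_experts = len(gates), len(gates[0])
    loss = 0.0
    for e in range(num_experts):
        routed = sum(1 for row in gates if row[e] > 0.0)
        load = num_experts * routed / (k * num_tokens)  # 1.0 when every expert gets its fair share
        mean_gate = sum(row[e] for row in gates) / num_tokens  # mean |gate|, since gates are >= 0
        loss += load * mean_gate
    return loss / num_experts


if __name__ == "__main__":
    gates = [[1.0, 0.5, 0.0, 0.0], [2.0, 0.0, 0.0, 0.0]]  # 2 tokens, 4 experts
    lam = update_lambda(1.0, gates, k=1)  # sparsity 0.625 < target 0.75, so lam becomes 1.2
    print(lam, lam * balanced_l1(gates, k=1))
```

In the example, expert 0 receives both tokens, so its gates are penalized with four times the weight a perfectly balanced expert would get, nudging traffic toward underused experts.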

Performance Insights and Experimental Results

Extensive experiments show that ReMoE consistently outperforms conventional MoE architectures. The researchers tested ReMoE on the LLaMA architecture, training models of several sizes (182M to 978M parameters) with different numbers of experts (4 to 128).

For instance, on downstream tasks like ARC, BoolQ, and LAMBADA, ReMoE showed measurable accuracy improvements over both dense and TopK-routed MoE models. Analyses of training and inference throughput revealed that ReMoE’s differentiable design introduces minimal computational overhead, making it suitable for practical applications.

Conclusion

ReMoE presents a valuable advance in Mixture-of-Experts architectures by addressing the limitations of TopK+Softmax routing. The ReLU-based routing mechanism, combined with adaptive regularization techniques, ensures that ReMoE is both efficient and adaptable. This innovation highlights the potential of revisiting foundational design choices to achieve better scalability and performance. By offering a practical and resource-conscious approach, ReMoE provides a useful tool for advancing AI systems to meet growing computational demands.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.