The development of Transformer models has significantly advanced artificial intelligence, delivering strong performance across diverse tasks. However, these advances often come with steep computational requirements, which pose challenges for scalability and efficiency.

Mixture-of-Experts (MoE) architectures have emerged as a powerful technique for increasing the capacity of Transformer models without a proportional increase in computational cost. However, the traditional TopK+Softmax routing used in MoE models has two main limitations: the discrete, non-differentiable nature of TopK routing hampers scalability and optimization, and balanced expert utilization is difficult to ensure, which leads to inefficiency and suboptimal performance.

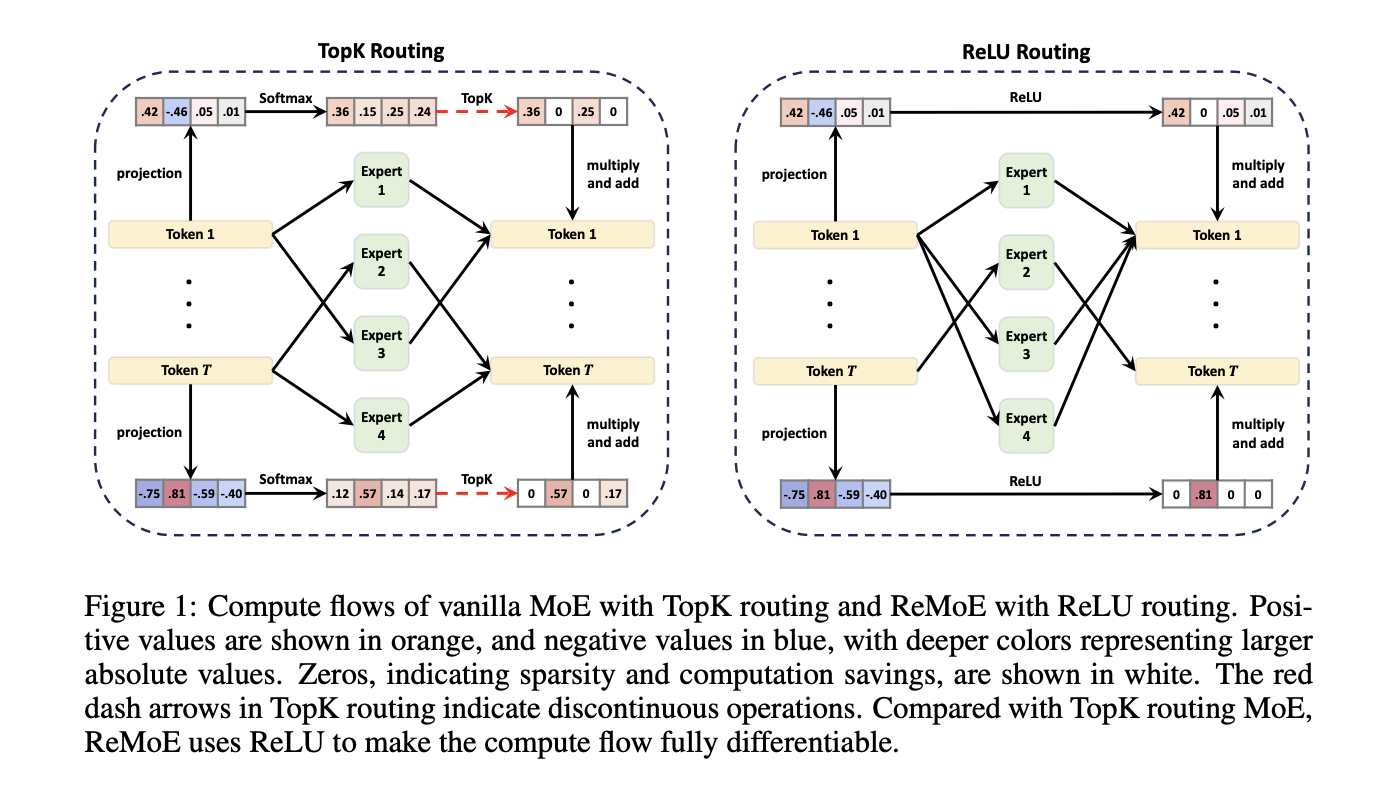

To address these limitations, researchers at Tsinghua University have proposed a new architecture called ReMoE (ReLU-based Mixture-of-Experts). ReMoE replaces the conventional TopK+Softmax routing with a ReLU-based mechanism, enabling a fully differentiable routing process. This design simplifies the architecture and seamlessly integrates with existing MoE systems.
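
To make the contrast concrete, here is a minimal Python sketch of the two routers applied to a single token. It assumes the router logits are already computed (in a real model they would come from a learned projection of the token's hidden state), and the helper names `topk_softmax_gates`, `relu_gates`, and `active_experts` are hypothetical, not taken from the ReMoE code. The TopK variant applies softmax over all experts and then zeroes everything outside the top k; some implementations renormalize over the selected experts instead.

```python
import math


def topk_softmax_gates(logits, k):
    """TopK+Softmax routing: softmax over all experts, then keep only the k largest."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Indices of the k highest-probability experts (ties broken by lower index).
    top = set(sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k])
    return [p if i in top else 0.0 for i, p in enumerate(probs)]


def relu_gates(logits):
    """ReLU routing: each gate is max(0, logit); a zero gate means the expert is skipped."""
    return [max(0.0, z) for z in logits]


def active_experts(gates):
    """Indices of experts whose gate is non-zero."""
    return [i for i, g in enumerate(gates) if g > 0.0]


# Two hypothetical tokens routed over 4 experts.
token_a = [1.2, -0.4, 0.3, -2.0]
token_b = [0.5, 0.2, 0.1, -1.0]

for logits in (token_a, token_b):
    print("TopK (k=2):", active_experts(topk_softmax_gates(logits, k=2)),
          "ReLU:", active_experts(relu_gates(logits)))
```

With TopK, every token activates exactly k experts. With ReLU, the count depends on how many logits are positive: in this toy example `token_a` activates two experts under both routers, while `token_b` activates three under ReLU routing.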

ReMoE uses ReLU activation functions to dynamically determine whether each expert is active. In contrast to TopK routing, which makes a discrete selection of the k highest-scoring experts, ReMoE’s ReLU routing transitions smoothly between active and inactive states. The sparsity of activated experts is controlled with adaptive L1 regularization, keeping computation efficient while maintaining high performance. This differentiable design also allows resources to be allocated dynamically across tokens and layers, adapting to the complexity of individual inputs.
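
The sparsity control described above can be sketched as an L1 penalty on the non-negative ReLU gates, with a coefficient that is adjusted during training toward a target sparsity. The Python sketch below is illustrative only: the multiplicative update, the factor `alpha=1.2`, the starting coefficient, and the target `1 - k/E` (for an average of k active experts out of E) are assumptions about how such a controller could work, and the function names are hypothetical.

```python
def sparsity(gates_per_token):
    """Fraction of (token, expert) gate entries that are exactly zero."""
    total = sum(len(g) for g in gates_per_token)
    zeros = sum(1 for g in gates_per_token for x in g if x == 0.0)
    return zeros / total


def l1_penalty(gates_per_token):
    """Mean L1 norm of the gate vectors; ReLU gates are non-negative, so this is a plain sum."""
    return sum(sum(g) for g in gates_per_token) / len(gates_per_token)


def update_l1_coeff(lam, current_sparsity, target_sparsity, alpha=1.2):
    """Assumed multiplicative controller: strengthen the penalty when routing is too dense."""
    if current_sparsity < target_sparsity:
        return lam * alpha  # too many active experts: push more gates to zero
    if current_sparsity > target_sparsity:
        return lam / alpha  # too few active experts: relax the penalty
    return lam


# Hypothetical batch: 2 tokens, E = 8 experts, aiming for k = 2 active experts on average.
E, k = 8, 2
target = 1 - k / E
batch = [[0.9, 0.0, 0.4, 0.0, 0.0, 0.1, 0.0, 0.0],
         [0.0, 0.7, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0]]

lam = update_l1_coeff(1e-8, sparsity(batch), target)
reg_loss = lam * l1_penalty(batch)  # would be added to the language-modeling loss
```

Here 11 of the 16 gate entries are zero (sparsity 0.6875), which is below the 0.75 target, so the coefficient grows. Because the coefficient is only scaled up or down by a fixed factor, in principle it drifts until the measured sparsity hovers around the target, which keeps the average number of active experts near k.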

Technical Details and Benefits

The key innovation of ReMoE lies in its routing mechanism. By replacing the discontinuous TopK operation with a continuous ReLU-based approach, ReMoE eliminates abrupt changes in expert activation, ensuring smoother gradient updates and improved stability during training. Additionally, ReMoE’s dynamic routing mechanism allows for adjusting the number of active experts based on token complexity, promoting efficient resource utilization.
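
The difference in smoothness shows up when one router logit is nudged across a decision boundary. In this sketch (hypothetical helpers, three experts, k = 1 for TopK), raising expert 2's logit from just below to just above expert 1's flips the TopK selection, so expert 2's gate jumps from 0 to about 0.45; the ReLU gate for the same expert changes only by the size of the nudge (0.02).

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def topk_gate(logits, expert, k):
    """Gate of one expert under TopK+Softmax: its softmax probability if selected, else 0."""
    probs = softmax(logits)
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    return probs[expert] if expert in top else 0.0


def relu_gate(logits, expert):
    """Gate of one expert under ReLU routing."""
    return max(0.0, logits[expert])


# Move expert 2's logit from just below to just above expert 1's logit (0.5).
for z in (0.49, 0.51):
    logits = [-1.0, 0.5, z]
    print(f"logit={z}: TopK gate={topk_gate(logits, 2, k=1):.3f}, "
          f"ReLU gate={relu_gate(logits, 2):.2f}")
```

The ReLU gate has a derivative of 1 with respect to its logit whenever the expert is active, so gradients flow through the routing decision. The TopK selection, by contrast, is piecewise constant in the logits and jumps at the boundary, which is the discontinuity the ReLU router removes.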

To address imbalances where some experts might remain underutilized, ReMoE incorporates an adaptive load-balancing strategy into its L1 regularization. This refinement ensures a fairer distribution of token assignments across experts, enhancing the model’s capacity and overall performance. The architecture’s scalability is evident in its ability to handle a larger number of experts and finer levels of granularity compared to traditional MoE models.
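
One way to fold load balancing into the L1 penalty is to weight each expert's gates by how often that expert is active, so over-used experts are penalized more heavily than under-used ones. The Python sketch below assumes weights equal to each expert's activation frequency divided by the mean frequency across experts; the exact weighting used in ReMoE may differ, and the function names are hypothetical.

```python
def expert_usage(gates_per_token):
    """Fraction of tokens that activate each expert (gate > 0)."""
    n_tokens = len(gates_per_token)
    n_experts = len(gates_per_token[0])
    return [sum(1 for g in gates_per_token if g[e] > 0.0) / n_tokens
            for e in range(n_experts)]


def balanced_l1_penalty(gates_per_token):
    """L1 penalty with each expert's gates weighted by its normalized usage.

    Experts used more often than average get weight > 1, so their gates are pushed
    toward zero harder; rarely used experts get weight < 1. In a training framework
    the weights would be treated as constants (no gradient through the usage counts).
    """
    usage = expert_usage(gates_per_token)
    mean_usage = sum(usage) / len(usage)
    weights = [u / mean_usage if mean_usage > 0 else 1.0 for u in usage]
    total = sum(w * x for g in gates_per_token for w, x in zip(weights, g))
    return total / len(gates_per_token)


# Hypothetical batch: 3 tokens over 4 experts; expert 0 is active for every token.
batch = [[0.5, 0.2, 0.0, 0.0],
         [0.4, 0.0, 0.3, 0.0],
         [0.6, 0.0, 0.0, 0.1]]

print(expert_usage(batch))
print(balanced_l1_penalty(batch))
```

In this batch expert 0's weight is 2.0, so its gates count twice as much as in a plain L1 term, while the three rarely used experts are weighted about 0.67. Minimizing the weighted penalty therefore favors shifting gate mass away from the over-used expert.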

Performance Insights and Experimental Results

Extensive experiments demonstrate that ReMoE consistently outperforms conventional MoE architectures. The researchers tested ReMoE using the LLaMA architecture, training models of varying sizes (182M to 978M parameters) with different numbers of experts (4 to 128).

For instance, on downstream tasks like ARC, BoolQ, and LAMBADA, ReMoE showed measurable accuracy improvements over both dense and TopK-routed MoE models. Analyses of training and inference throughput revealed that ReMoE’s differentiable design introduces minimal computational overhead, making it suitable for practical applications.

Conclusion

ReMoE presents a valuable advance in Mixture-of-Experts architectures by addressing the limitations of TopK+Softmax routing. The ReLU-based routing mechanism, combined with adaptive regularization techniques, ensures that ReMoE is both efficient and adaptable. This innovation highlights the potential of revisiting foundational design choices to achieve better scalability and performance. By offering a practical and resource-conscious approach, ReMoE provides a useful tool for advancing AI systems to meet growing computational demands.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.
