ChatGPT: Unveiling the Post-Exploitation Risks and Mitigation Strategies

The integration of artificial intelligence (AI) into daily routines has changed how we interact with technology. With the arrival of powerful language models such as ChatGPT, security researchers are scrutinizing the vulnerabilities that arise from their use. In this analysis, we delve deeper into the post-exploitation risks associated with ChatGPT and the techniques attackers could employ to gain persistent access to user data and manipulate application behavior.

The Cross-Site Scripting (XSS) Vulnerability

In a previous investigation, our team uncovered two Cross-Site Scripting (XSS) vulnerabilities in ChatGPT. These vulnerabilities allowed a malicious actor to exploit the /api/auth/session endpoint, exfiltrating the user's JWT access token and gaining unauthorized access to their account. While the limited validity period of the access token mitigates the risk of permanent account compromise, it underscores the need for robust security measures to prevent such attacks in the first place.
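To make the point about limited token validity concrete, the following is a minimal sketch of how the expiry of a JWT can be read from its `exp` claim. It assumes the access token is a standard three-segment JWT that carries an `exp` claim; the helper names (`make_demo_token`, `jwt_expiry`, `is_expired`) are hypothetical, and the signature is deliberately not verified here.

```python
import base64
import json
import time
from typing import Optional


def make_demo_token(exp: int) -> str:
    """Build an unsigned demo JWT (illustration only, not a real ChatGPT token)."""
    def segment(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        # JWT segments use base64url encoding with the padding stripped.
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return f"{segment({'alg': 'none'})}.{segment({'exp': exp})}."


def jwt_expiry(token: str) -> int:
    """Return the `exp` claim (Unix seconds) without verifying the signature."""
    payload_b64 = token.split(".")[1]
    # Restore the padding that base64url-encoded JWT segments omit.
    payload_b64 += "=" * (-len(payload_b64) % 4)
    payload = json.loads(base64.urlsafe_b64decode(payload_b64))
    return int(payload["exp"])


def is_expired(token: str, now: Optional[float] = None) -> bool:
    """True once the current time has reached the token's `exp` claim."""
    current = time.time() if now is None else now
    return current >= jwt_expiry(token)
```

A stolen token stops working once `is_expired` would return true for it, which is why the sections below focus on techniques that outlive the session.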

Persistent Access through Custom Instructions

Custom Instructions in ChatGPT offer users the ability to set persistent contexts for customized conversations. However, this feature could pose security risks, including Stored Prompt Injection. Attackers could leverage XSS vulnerabilities or manipulate custom instructions to alter ChatGPT's responses, potentially facilitating misinformation dissemination, phishing, scams, and the theft of sensitive data. Notably, this manipulative influence could persist even after the user's session token has expired, underscoring the threat of long-term, unauthorized access and control.

Recent Mitigations and the Bypass

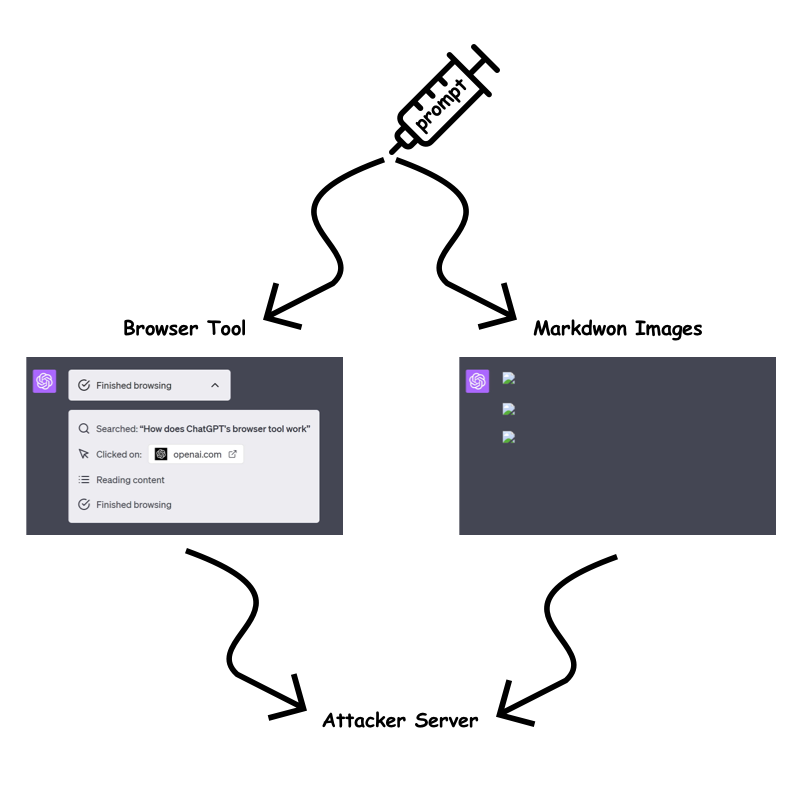

In response to the identified vulnerabilities, OpenAI has implemented measures to mitigate the risk of prompt injection attacks. The "browser tool" and markdown image rendering are now only permitted when the URL has been previously present in the conversation. This aims to prevent attackers from embedding dynamic, sensitive data within the URL query parameter or path.
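The rule described above can be sketched as a simple membership test. This is an illustrative approximation only, not OpenAI's implementation: the function names and the URL regular expression are assumptions, and the real check may normalize or compare URLs differently.

```python
import re
from typing import Set

# Hypothetical pattern for pulling URLs out of conversation text.
URL_PATTERN = re.compile(r"https?://[^\s)\"'<>]+")


def urls_seen(conversation_text: str) -> Set[str]:
    """Collect every URL that already appears in the conversation."""
    return set(URL_PATTERN.findall(conversation_text))


def is_url_allowed(url: str, conversation_text: str) -> bool:
    """Allow rendering only if the exact URL was previously present."""
    return url in urls_seen(conversation_text)


conversation = "Please show https://example.com/logo.png in your answer"

# A URL copied verbatim from the conversation passes the check.
print(is_url_allowed("https://example.com/logo.png", conversation))
# A URL with data appended to the query string does not.
print(is_url_allowed("https://example.com/logo.png?d=secret", conversation))
```

Under these assumptions, a URL built dynamically from sensitive data is rejected because that exact string never appeared in the conversation.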

However, our testing revealed a bypass that allows attackers to circumvent these restrictions. The /backend-api/conversation/{uuid}/url_safe?url={url} endpoint is used to validate client-side URLs in ChatGPT responses. By querying it, attackers can determine whether a specific string, including custom instructions, is present in the conversation text. This bypass lets attackers continue exfiltrating information despite the implemented mitigations.

Exfiltration Techniques Despite Mitigations

Despite OpenAI's efforts to mitigate information exfiltration, we identified several techniques that attackers could still employ:

Static URLs for Each Character:

Attackers could encode sensitive data into static URLs, creating a unique URL for each character they wish to exfiltrate. By using ChatGPT to generate images for each character and observing the order in which the requests are received, attackers can piece together the data on their server.

One Long Static URL:

Alternatively, attackers could use a single long static URL and ask ChatGPT to create a markdown image up to the character they wish to leak. This approach reduces the number of prompt characters required but may be slower for ChatGPT to render.

Using Domain Patterns:

The fastest method with the least prompt character requirement is using custom top-level domains. However, this method incurs a cost, as each domain would need to be purchased. Attackers could use a custom top-level domain for each character to create distinctive badges that link to the sensitive data.
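All three techniques above share one observable trait: a single response renders an unusually large number of external images. As a defensive illustration, the sketch below flags such responses. It is a hypothetical heuristic, not a mitigation described by OpenAI; the function names, the markdown image pattern, and the `max_images` threshold are all assumptions.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Matches markdown images of the form ![alt](http(s)://...).
IMAGE_PATTERN = re.compile(r"!\[[^\]]*\]\((https?://[^\s)]+)\)")


def image_hosts(response_markdown: str) -> Counter:
    """Count rendered markdown images per host in one response."""
    urls = IMAGE_PATTERN.findall(response_markdown)
    return Counter(urlparse(url).hostname for url in urls)


def has_excessive_images(response_markdown: str, max_images: int = 5) -> bool:
    """Flag a response whose total image count exceeds the assumed threshold."""
    total = sum(image_hosts(response_markdown).values())
    return total > max_images


# One image per character, as in the per-character technique.
suspicious = " ".join(f"![{c}](https://example.com/{c}.png)" for c in "secret")
benign = "Here is the logo: ![logo](https://example.com/logo.png)"

print(has_excessive_images(suspicious))
print(has_excessive_images(benign))
```

Counting the total across hosts, rather than per host, is a deliberate choice so that the domain-pattern variant, which spreads requests over many domains, is not missed. A fixed threshold will produce false positives on image-heavy answers, so it is better treated as a signal for review than as a block rule.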

Other Attack Vectors

Beyond the aforementioned techniques, attackers may also explore the potential for Stored Prompt Injection gadgets within ChatGPTs and the recently introduced ChatGPT memory. These areas could provide additional avenues for exploitation and unauthorized access.

OpenAI's Response and Future Mitigation Strategies

OpenAI is actively working to address the identified vulnerabilities and improve the security of ChatGPT. While the implemented mitigations have made exfiltration more challenging, attackers continue to devise bypass techniques. The ongoing arms race between attackers and defenders highlights the need for continuous monitoring and adaptation of security measures.

Conclusion

The integration of AI into our lives brings both opportunities and challenges. While ChatGPT and other language models offer immense potential, it is crucial to remain vigilant about the security risks they introduce. By understanding the post-exploitation techniques that attackers could employ, we can develop robust countermeasures and protect the integrity and security of our systems. As the threat landscape evolves, organizations must prioritize security awareness, adopt best practices, and collaborate with researchers to mitigate the risks associated with AI-powered technologies.

