ChatGPT: Unveiling the Post-Exploitation Risks and Mitigation Strategies

The integration of artificial intelligence (AI) into our daily routines has changed how we interact with technology. With the arrival of powerful language models like ChatGPT, security researchers are actively scrutinizing the vulnerabilities that arise from their use. In this analysis, we delve into the post-exploitation risks associated with ChatGPT and the techniques attackers could employ to gain persistent access to user data and manipulate application behavior.

The Cross-Site Scripting (XSS) Vulnerabilities

In a previous investigation, our team uncovered two Cross-Site Scripting (XSS) vulnerabilities in ChatGPT. They allowed a malicious actor to run script in a victim's ChatGPT session, query the /api/auth/session endpoint, and exfiltrate the user's JWT access token, gaining unauthorized access to the account. Because the access token is only valid for a limited period, the compromise is not permanent; even so, the finding underscores the need for robust measures that prevent such attacks in the first place.

Persistent Access through Custom Instructions

Custom Instructions in ChatGPT let users set a persistent context that shapes their conversations. This feature also creates risk, most notably Stored Prompt Injection. Attackers could exploit XSS vulnerabilities, or otherwise tamper with a user's custom instructions, to alter ChatGPT's responses, enabling misinformation, phishing, scams, and the theft of sensitive data. Because the injected instructions are stored with the account, this influence could persist even after the stolen session token has expired, giving the attacker long-term, unauthorized control.

Recent Mitigations and the Bypass

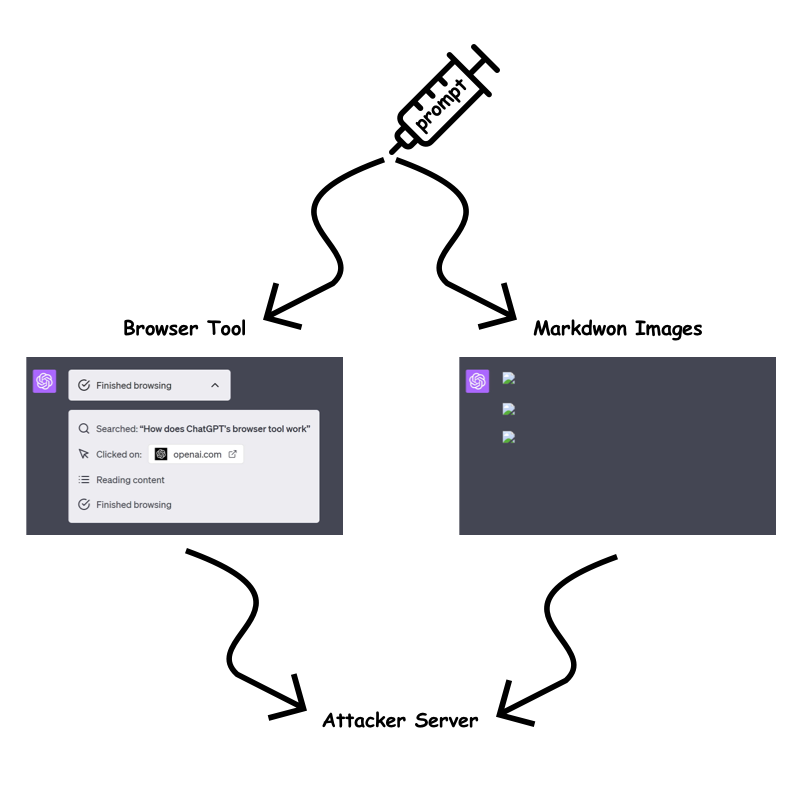

In response to the identified vulnerabilities, OpenAI has implemented measures to mitigate prompt injection attacks. The "browser tool" and markdown image rendering are now only permitted when the URL was already present earlier in the conversation. This aims to prevent attackers from embedding dynamic, sensitive data in a URL's query parameters or path.
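
A minimal sketch of the kind of check described above, assuming "previously present" is implemented as a plain substring test against the conversation text. The function name `is_image_render_allowed` and the http(s) scheme restriction are illustrative assumptions, not OpenAI's actual implementation:

```python
from urllib.parse import urlsplit


def is_image_render_allowed(url: str, conversation_text: str) -> bool:
    """Hypothetical mitigation: render an image only if its exact URL already
    appeared in the conversation, so attacker-built URLs carrying fresh data
    in the path or query string are rejected."""
    # Assumption: only http(s) URLs are ever eligible for rendering.
    if urlsplit(url).scheme not in ("http", "https"):
        return False
    # Assumption: "previously present" is modelled as a verbatim substring match.
    return url in conversation_text


conversation = "Here is a diagram: https://example.com/diagram.png"
print(is_image_render_allowed("https://example.com/diagram.png", conversation))  # True
print(is_image_render_allowed("https://attacker.example/log?d=secret", conversation))  # False
```

A check like this constrains which URLs can be requested, but not which of several pre-approved URLs is rendered or in what order, which is the gap the techniques below rely on.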

However, our testing revealed a bypass technique that allows attackers to circumvent these restrictions. The /backend-api/conversation/{uuid}/url_safe?url={url} endpoint, which the client uses to validate URLs in ChatGPT responses, can be abused to determine whether a specific string, including content from custom instructions, is present in the conversation text. This bypass lets attackers continue exfiltrating information despite the implemented mitigations.

Exfiltration Techniques Despite Mitigations

Despite OpenAI's efforts to mitigate information exfiltration, we identified several techniques that attackers could still employ:

Static URLs for Each Character:

Attackers could assign a static URL to every character they might need to exfiltrate. Since none of these URLs depends on the secret, they can all be placed in the conversation in advance. By inducing ChatGPT to render an image for each character of the sensitive data and observing the order in which the requests arrive, attackers can reassemble the data on their server.
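
The per-character idea can be simulated locally to show why a verbatim-URL check does not stop it. In this sketch the base URL `https://attacker.example/c/`, the alphabet, and the helper names are hypothetical, and no network requests are made; the "request log" is simply the list of URLs the model would be induced to render, assumed to arrive in order:

```python
import string

# Hypothetical attacker-controlled base URL (example domain, no real endpoint).
BASE = "https://attacker.example/c/"
ALPHABET = string.ascii_letters + string.digits

# One fixed URL per character; none of them depends on the secret.
CHAR_TO_URL = {ch: f"{BASE}{i}.png" for i, ch in enumerate(ALPHABET)}
URL_TO_CHAR = {url: ch for ch, url in CHAR_TO_URL.items()}


def urls_for(secret: str) -> list[str]:
    """Image URLs rendered for the secret, one per character (others skipped)."""
    return [CHAR_TO_URL[ch] for ch in secret if ch in CHAR_TO_URL]


def reconstruct(request_log: list[str]) -> str:
    """Server side: rebuild the secret from the order in which requests arrived."""
    return "".join(URL_TO_CHAR[url] for url in request_log)


# The injected prompt can list every static URL up front, so each one has
# "previously appeared" in the conversation before any data is leaked.
injected_prompt = " ".join(CHAR_TO_URL.values())
log = urls_for("Token42")
print(all(url in injected_prompt for url in log))  # True
print(reconstruct(log))  # Token42
```

The simulation assumes every rendered image produces exactly one request, in order; real rendering and browser caching may not behave that way, for instance when a character repeats.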

One Long Static URL:

Alternatively, attackers could place a single long static URL in the conversation and ask ChatGPT to render a markdown image whose URL is cut off right after the character they wish to leak. This approach requires fewer prompt characters but may be slower for ChatGPT to render.
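
A local sketch of the long-URL variant, assuming the mitigation is a substring match: every prefix of a URL that already appears in the conversation is itself present in the text, so a truncated copy passes the check. The domain, the alphabet, and the helper names are hypothetical, and nothing is sent over the network:

```python
import string

# Hypothetical single static URL whose path spells out the alphabet once.
PREFIX = "https://attacker.example/"
ALPHABET = string.ascii_lowercase + string.digits
LONG_URL = PREFIX + ALPHABET


def truncated_url(ch: str) -> str:
    """LONG_URL cut off right after `ch`; always a prefix of LONG_URL.
    Raises ValueError for characters outside ALPHABET."""
    idx = LONG_URL.index(ch, len(PREFIX))  # search only the alphabet part
    return LONG_URL[: idx + 1]


def decode(url: str) -> str:
    """Server side: the last character of the requested path is the leaked one."""
    return url[-1]


secret = "hello42"
urls = [truncated_url(ch) for ch in secret]
print(all(url in LONG_URL for url in urls))  # True
print("".join(decode(url) for url in urls))  # hello42
```

Only LONG_URL has to appear in the prompt, which is why this variant needs fewer prompt characters; the cost is that each rendered URL can be nearly as long as LONG_URL itself.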

Using Domain Patterns:

The fastest method, and the one requiring the fewest prompt characters, uses custom top-level domains. It incurs a cost, however, since each domain must be purchased. Attackers could use a distinct custom top-level domain for each character and render a distinctive badge image from each one to leak the sensitive data.

Other Attack Vectors

Beyond these techniques, attackers may also explore Stored Prompt Injection gadgets within GPTs (custom versions of ChatGPT) and the recently introduced ChatGPT memory feature. These areas could provide additional avenues for exploitation and unauthorized access.

OpenAI's Response and Future Mitigation Strategies

OpenAI is actively working to address the identified vulnerabilities and improve the security of ChatGPT. While the implemented mitigations have made exfiltration more challenging, attackers continue to devise bypass techniques. The ongoing arms race between attackers and defenders highlights the need for continuous monitoring and adaptation of security measures.

Conclusion

The integration of AI into our lives brings both opportunities and challenges. While ChatGPT and other language models offer immense potential, it is crucial to remain vigilant about the security risks they introduce. By understanding the post-exploitation techniques attackers could employ, we can develop robust countermeasures and protect the integrity and security of our systems. As the threat landscape evolves, organizations must prioritize security awareness, adopt best practices, and collaborate with researchers to mitigate the risks associated with AI-powered technologies.
