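Despite the breakthroughs enabled by Chain-of-Thought (CoT) prompting, Large Language Models (LLMs) still face significant challenges in complex reasoning tasks.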

Large Language Models (LLMs) have made substantial progress on Chain-of-Thought (CoT) reasoning tasks, but they incur significant computational overhead, especially with longer CoT sequences. This directly increases inference latency and memory requirements.

Because LLM decoding is autoregressive, each new token attends to every token generated before it. As CoT sequences grow longer, the memory needed to cache those earlier tokens grows linearly with length, while the total computation in the attention layers grows quadratically. Striking a balance between reasoning accuracy and computational efficiency has become a critical challenge, since attempts to reduce reasoning steps often compromise the model’s problem-solving capabilities.
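To make the scaling concrete, here is a back-of-the-envelope sketch. It assumes a simplified model (one attention layer, one head, a key-value cache, no prompt tokens) in which the t-th CoT token attends to all t tokens so far. The cache therefore grows linearly, while the total number of attention scores over the whole sequence is 1 + 2 + ... + n, which grows quadratically.

```python
def decoding_cost(cot_len: int) -> tuple[int, int]:
    """Return (kv_cache_entries, total_attention_scores) for a CoT of cot_len tokens.

    Simplified model (assumption): one layer, one head, no prompt tokens.
    Token t (1-indexed) attends to t positions, including itself.
    """
    kv_cache_entries = cot_len  # one cached key/value pair per generated token
    total_attention_scores = cot_len * (cot_len + 1) // 2  # 1 + 2 + ... + n
    return kv_cache_entries, total_attention_scores


for n in (500, 1000, 2000):
    cache, scores = decoding_cost(n)
    print(f"{n:>5} tokens: cache={cache:>5}, attention scores={scores:>9}")
```

In this simplified model, doubling the CoT length doubles the cache but roughly quadruples the attention work, which is why shortening the CoT pays off more than linearly on the attention side.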

To address the computational challenges of Chain-of-Thought (CoT) reasoning, various methodologies have been developed. Some approaches focus on streamlining the reasoning process by simplifying or skipping certain thinking steps, while others attempt to generate steps in parallel. A different strategy involves compressing reasoning steps into continuous latent representations, enabling LLMs to reason without generating explicit word tokens.

Prompt compression techniques have also been developed to handle complex instructions and long-context inputs more efficiently. They range from using lightweight language models to generate concise prompts, to employing implicit continuous tokens for task representation, to compressing prompts directly by filtering for highly informative tokens.
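As an illustration of the last family (filtering for highly informative tokens), the sketch below scores each token by its self-information, -log2 p, and drops the most predictable ones. This is a generic sketch rather than any specific published method: the per-token probabilities are made-up inputs (in practice they would come from a language model), and the 1-bit threshold is an arbitrary assumption.

```python
import math


def filter_informative(tokens, probs, min_bits=1.0):
    """Keep tokens whose self-information -log2(p) is at least min_bits.

    tokens: list of token strings
    probs:  hypothetical in-context probabilities for each token (0 < p <= 1)
    """
    if len(tokens) != len(probs):
        raise ValueError("tokens and probs must have the same length")
    kept = []
    for tok, p in zip(tokens, probs):
        info = -math.log2(p)  # less predictable tokens carry more bits
        if info >= min_bits:
            kept.append(tok)
    return kept


tokens = ["The", "answer", "is", "therefore", "42"]
probs = [0.9, 0.3, 0.8, 0.6, 0.05]  # illustrative values only
print(filter_informative(tokens, probs))  # ['answer', '42']
```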

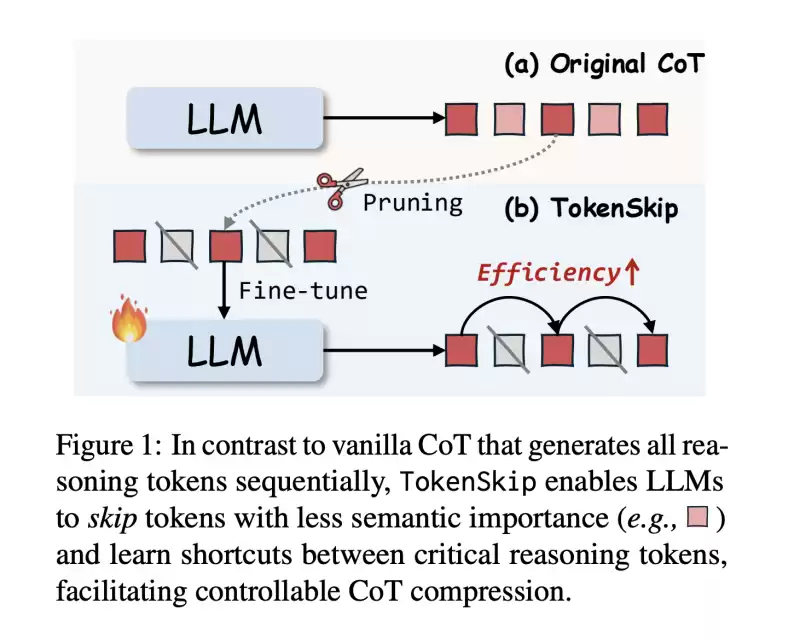

In this work, researchers from The Hong Kong Polytechnic University and the University of Science and Technology of China propose TokenSkip, an approach to optimize CoT processing in LLMs. It enables models to skip less important tokens within CoT sequences while maintaining connections between critical reasoning tokens, with adjustable compression ratios.

TokenSkip first constructs compressed CoT training data through token pruning and then uses that data for supervised fine-tuning. Initial tests across multiple models, including LLaMA-3.1-8B-Instruct and the Qwen2.5-Instruct series, show promising results: reasoning capability is largely maintained while computational overhead drops significantly.

The architecture of TokenSkip is built on the fundamental principle that different reasoning tokens contribute varying levels of importance to reaching the final answer. It consists of two main phases: training data preparation and inference.

During training data preparation, the system first generates CoT trajectories with the target LLM, then prunes each retained trajectory using a randomly selected compression ratio. Pruning is guided by an “importance scoring” mechanism, which assigns higher scores to tokens that matter more for reaching the final answer.
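The pruning step can be sketched as follows. This is a minimal sketch, not the authors' code: the importance scores are passed in as given (the article does not say how they are computed), the ratio is treated as the fraction of tokens to keep, and the candidate ratio set is an assumption. The top-scoring ⌈ratio·n⌉ tokens survive, re-sorted into their original positions so the pruned chain still reads left to right. `prune_cot` and `make_training_example` are hypothetical names.

```python
import math
import random

# Assumed candidate ratios (fraction of CoT tokens to KEEP); 1.0 means no pruning.
CANDIDATE_RATIOS = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]


def prune_cot(tokens, scores, ratio):
    """Keep the ceil(ratio * n) highest-scoring tokens, preserving original order.

    scores: importance score per token (assumed given; higher = more important).
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    keep = math.ceil(ratio * len(tokens))
    # Indices of the top-`keep` tokens by score (stable sort: ties keep earlier tokens).
    top = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)[:keep]
    # Re-sort by position so the pruned CoT keeps its original token order.
    return [tokens[i] for i in sorted(top)]


def make_training_example(tokens, scores, rng):
    """Hypothetical helper: pair a pruned CoT with the ratio that produced it."""
    ratio = rng.choice(CANDIDATE_RATIOS)
    return ratio, prune_cot(tokens, scores, ratio)


cot = ["So", ",", "3", "+", "4", "equals", "7", ",", "then", "7", "*", "2", "=", "14"]
imp = [0.1, 0.05, 0.9, 0.8, 0.9, 0.3, 0.95, 0.05, 0.2, 0.9, 0.85, 0.9, 0.6, 0.99]
print(prune_cot(cot, imp, 0.5))
print(make_training_example(cot, imp, random.Random(0)))
```

Keeping the surviving tokens in their original order is the design choice that lets the pruned CoT remain a (shorter) left-to-right sequence suitable as a fine-tuning target.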

At inference time, TokenSkip keeps standard autoregressive decoding but improves efficiency by enabling the LLM to skip less important tokens. In the input format, the question and the target compression ratio are separated by end-of-sequence tokens.
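A minimal sketch of that input layout follows. It assumes a generic `<eos>` placeholder (the real end-of-sequence token depends on the model's tokenizer) and assumes that, during training, the compressed CoT and answer follow the second separator. The function name `build_input` and the exact string layout are hypothetical.

```python
EOS = "<eos>"  # placeholder; substitute the tokenizer's actual end-of-sequence token


def build_input(question: str, ratio: float, target: str = "") -> str:
    """Lay out: question, EOS, compression ratio, EOS, then (for training) the target.

    At inference time `target` is left empty and the model continues from here.
    """
    return f"{question}{EOS}{ratio}{EOS}{target}"


prompt = build_input("What is 3 + 4, doubled?", 0.6)
print(prompt)  # What is 3 + 4, doubled?<eos>0.6<eos>
```

Because the ratio is part of the input, the same fine-tuned model can in principle be asked for different compression levels at inference time just by changing that value.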

The results show that larger models are better at maintaining performance at higher compression ratios. Qwen2.5-14B-Instruct, for example, shows only a 0.4% performance drop while reducing token usage by 40%.

Compared with alternative approaches such as prompt-based reduction and truncation, TokenSkip performs better. Prompt-based reduction fails to reach the target compression ratios, and truncation causes significant performance degradation, whereas TokenSkip maintains the specified compression ratio while preserving reasoning capabilities. On the MATH-500 dataset, it reduces token usage by 30% with less than a 4% performance drop.
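Whether a method actually hits the requested ratio can be checked by dividing the length of the generated CoT by the length of the uncompressed one. The sketch below does this for a truncation baseline, treating the ratio as the fraction of tokens kept (an assumed convention); the helper names are hypothetical. Truncation meets the budget by construction, but because it cuts from the end, it can drop the final steps, including the answer.

```python
def achieved_ratio(original_len: int, compressed_len: int) -> float:
    """Fraction of the original CoT length that was actually generated."""
    if original_len <= 0:
        raise ValueError("original_len must be positive")
    return compressed_len / original_len


def truncate(tokens, ratio):
    """Truncation baseline (assumed convention: ratio = fraction kept): first int(ratio * n) tokens."""
    return tokens[: int(ratio * len(tokens))]


cot = "3 + 4 = 7 , then 7 * 2 = 14 , so the answer is 14".split()
cut = truncate(cot, 0.5)
print(" ".join(cut))                       # 3 + 4 = 7 , then 7 *
print(achieved_ratio(len(cot), len(cut)))  # 0.5
```

An importance-based method targets the same budget but chooses which tokens to drop, which is the trade-off the comparison above measures.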

In this paper, the researchers introduce TokenSkip, a method that advances CoT optimization for LLMs through a controllable compression mechanism based on token importance. By selectively preserving critical tokens and skipping less important ones, it maintains reasoning accuracy while significantly reducing computational overhead. In the reported experiments, it shows minimal performance degradation even at substantial compression ratios.

This research opens new possibilities for advancing efficient reasoning in LLMs, establishing a foundation for future developments in computational efficiency while maintaining robust reasoning capabilities.
