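Despite their advanced reasoning capabilities, the latest LLMs often miss the point when deciphering relationships. In this article, we explore the reversal curse, a pitfall that affects LLMs across tasks such as comprehension and generation.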

Large Language Models (LLMs) are known for their advanced reasoning capabilities across a wide range of tasks, from natural language understanding to code generation. Despite these strengths, they are often weak at handling relationships, particularly inverse ones. This phenomenon, termed the “reversal curse,” affects LLMs in both comprehension and generation tasks.

To understand the underlying issue, consider two entities, a and b, connected by a relation R and its inverse. LLMs excel at handling sequences of the form “aRb,” where a is related to b by R. For instance, an LLM can readily answer the question, “Who is the mother of Tom Cruise?” However, LLMs struggle with the inverse relation, R inverse, where b is given and a must be recovered. In our example, if we ask an LLM, “Who is Mary Lee Pfeiffer’s son?” it is more likely to falter or hallucinate, even though it already knows the relationship between Tom Cruise and Mary Lee Pfeiffer.

The reversal curse affects LLMs across a variety of tasks. In a recent study, researchers from Renmin University of China analyze the phenomenon, examine its probable causes, and suggest potential mitigation strategies. They identify the training objective function as one of the key factors influencing the extent of the reversal curse.

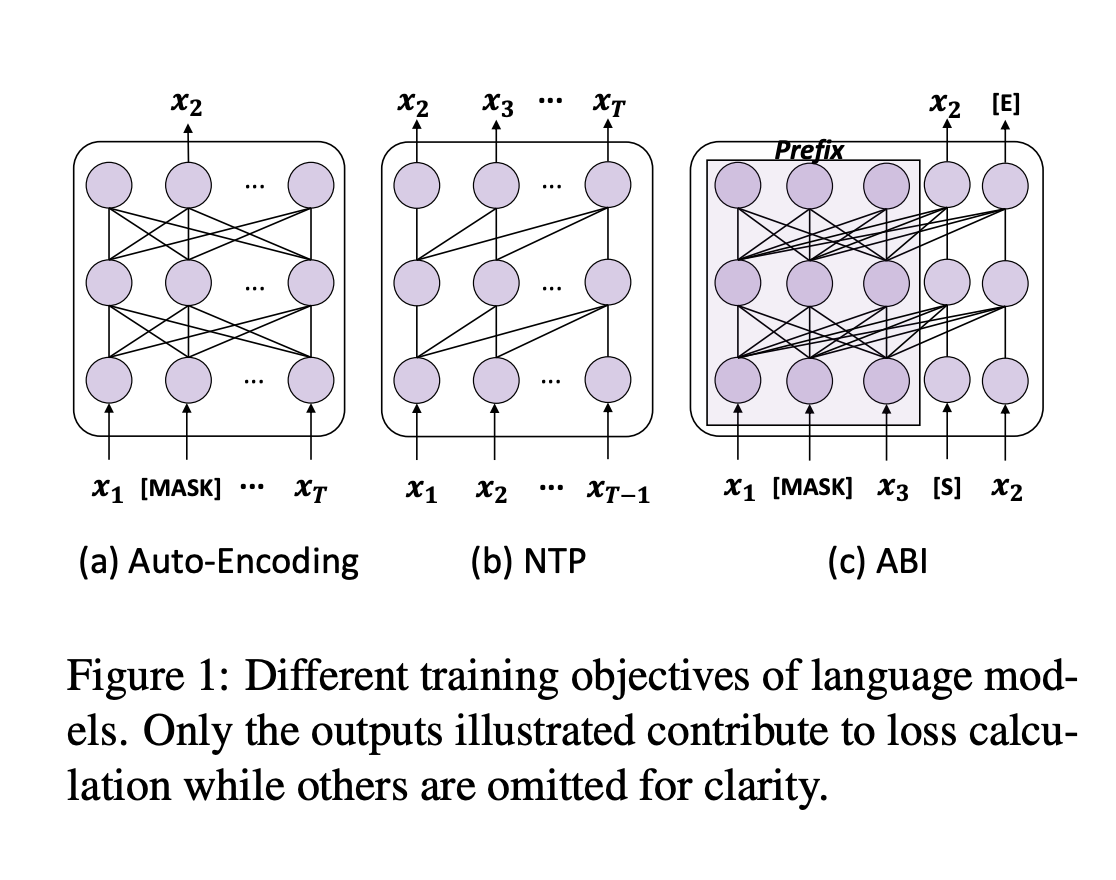

To fully grasp the reversal curse, we must first understand how LLMs are trained. Next-token prediction (NTP) is the dominant pre-training objective for current large language models such as GPT and Llama. In these models, a causal attention mask restricts each token to its preceding context, so a token cannot attend to anything that comes after it. As a result, if a occurs before b in the training corpus, the model is trained to maximize the probability of b given a, but not the probability of a given b. There is therefore no guarantee that the model assigns a high probability to a when presented with b. In contrast, GLM models are pre-trained with an autoregressive blank infilling objective, in which a masked span is predicted from both its preceding and succeeding context, making them more robust to the reversal curse.
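
The asymmetry described above can be illustrated with a deliberately tiny count-based model. This is only a sketch under strong assumptions: it is a lookup table rather than a neural network, and the relation tokens `has_mother` and `has_son` are hypothetical names invented for the example. It shows that when training only ever conditions on left-to-right prefixes, the forward query is learned while the reverse query has no support at all.

```python
from collections import Counter, defaultdict


def train(corpus):
    """Count next tokens for every left-to-right prefix, as NTP would see them."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tuple(sentence.split())
        for i in range(1, len(tokens)):
            counts[tokens[:i]][tokens[i]] += 1
    return counts


def prob(counts, prefix, target):
    """Estimate p(target | prefix); returns 0.0 if the prefix was never seen."""
    seen = counts.get(tuple(prefix.split()), Counter())
    total = sum(seen.values())
    return seen[target] / total if total else 0.0


# One training fact, written in the a-R-b direction only.
# "has_mother" and "has_son" are hypothetical relation tokens.
corpus = ["Tom_Cruise has_mother Mary_Lee_Pfeiffer"]
counts = train(corpus)

forward = prob(counts, "Tom_Cruise has_mother", "Mary_Lee_Pfeiffer")
reverse = prob(counts, "Mary_Lee_Pfeiffer has_son", "Tom_Cruise")
print(forward, reverse)
```

A real LLM generalizes far beyond exact prefixes, so this toy overstates the effect, but the direction of the gap is the same: nothing in the objective rewards predicting a from b.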

The authors test this hypothesis by fine-tuning models on “Name to Description” data built from fictitious names, then feeding in the descriptions and asking the models to retrieve the corresponding entities. GLM achieved approximately 80% accuracy on this reverse task, while Llama’s accuracy was 0%.
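
To make the setup concrete, here is a minimal sketch of how such an evaluation could be laid out. Everything in it is an assumption for illustration: the entity names and descriptions are invented, the prompt templates are hypothetical, and `predict` is a stand-in lookup table, not GLM or Llama. It does not reproduce the paper's numbers; it only shows the train-forward, test-reverse structure and an exact-match accuracy metric.

```python
def make_splits(facts):
    """Return (train, test): forward name->description pairs and reversed test pairs."""
    train = [(f"Describe {name}.", description) for name, description in facts]
    test = [(f"Who is {description}?", name) for name, description in facts]
    return train, test


def exact_match_accuracy(predict, pairs):
    """Fraction of prompts for which predict(prompt) exactly matches the answer."""
    if not pairs:
        return 0.0
    hits = sum(predict(prompt).strip() == answer for prompt, answer in pairs)
    return hits / len(pairs)


# Invented entities, used only to illustrate the data layout.
facts = [
    ("Alira Venn", "the inventor of the glass violin"),
    ("Joss Quillon", "the first person to map the Nerith caves"),
]
train_pairs, test_pairs = make_splits(facts)

# Stand-in "model": a lookup table that memorizes only the forward prompts.
memory = dict(train_pairs)


def predict(prompt):
    return memory.get(prompt, "")


forward_acc = exact_match_accuracy(predict, train_pairs)
reverse_acc = exact_match_accuracy(predict, test_pairs)
print(forward_acc, reverse_acc)
```

In a real experiment, `predict` would wrap a fine-tuned model's generation call, and the test prompts would be held out from fine-tuning.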

To address this issue, the authors propose adapting the training objective of causal LLMs to something similar to autoregressive blank infilling (ABI). Their fine-tuning method, Bidirectional Causal Language Model Optimization (BICO), is evaluated on reverse translation and mathematical problem-solving tasks. BICO adopts an autoregressive blank infilling objective similar to GLM’s, with modifications tailored to causal language models: the authors use rotary (relative) position embeddings and modify the attention function to make it bidirectional. Fine-tuning with BICO improved the model’s accuracy on both the reverse translation and the mathematical problem-solving tasks.
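
The two ingredients named above, a bidirectional attention pattern and a blank-infilling training example, can be sketched generically as follows. This is not the BICO implementation; the function names, the `[MASK]` placeholder, and the whitespace tokenization are assumptions made for illustration.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Every position may attend to every other position."""
    return [[True] * n for _ in range(n)]


def blank_infill_example(tokens, start, end, mask_token="[MASK]"):
    """Replace tokens[start:end] with one mask token; the removed span is the target."""
    corrupted = tokens[:start] + [mask_token] + tokens[end:]
    target = tokens[start:end]
    return corrupted, target


tokens = "Tom Cruise 's mother is Mary Lee Pfeiffer".split()

# Blank out the subject so it must be predicted from the context that follows it.
corrupted, target = blank_infill_example(tokens, 0, 2)
print(corrupted)
print(target)

# Under a causal mask the first position sees only itself;
# under a bidirectional mask it also sees everything to its right.
print(causal_mask(3)[0], bidirectional_mask(3)[0])
```

Blanking out the subject is what gives the model a training signal in the b-to-a direction, which plain next-token prediction never provides.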

In conclusion, the authors analyze the reversal curse and propose a fine-tuning strategy to mitigate it. By training a causal language model with an ABI-like objective, the study sheds light on why LLMs underperform on reversed relations. Future work could examine how advanced techniques such as RLHF affect the reversal curse.
