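Despite their advanced reasoning capabilities, the latest LLMs often miss the point when deciphering relationships. This article explores the reversal curse, a pitfall that affects LLMs across tasks such as comprehension and generation.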

Large Language Models (LLMs) are renowned for their advanced reasoning capabilities, enabling them to perform a wide range of tasks, from natural language processing to code generation. However, despite their strengths, LLMs often exhibit a weakness in deciphering relationships, particularly when dealing with inverses. This phenomenon, termed the “reversal curse,” affects LLMs across various tasks, including comprehension and generation.

To understand the underlying issue, consider two entities, a and b, connected by a relation R and its inverse. LLMs excel at handling sequences such as “aRb,” where a is related to b by relation R. For instance, an LLM can quickly answer the question, “Who is the mother of Tom Cruise?” However, LLMs struggle with the inverse relation. In our example, if we ask an LLM, “Who is Mary Lee Pfeiffer’s son?” it is more likely to hallucinate or falter, even though it already knows the relationship between Tom Cruise and Mary Lee Pfeiffer.
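
As a loose analogy only (an LLM is not a lookup table, and this is not the paper's formalism), a fact recorded in one direction behaves like a one-way index: the forward query succeeds, while the reverse query finds nothing until the inverse entry is stored explicitly. The store, the `query` and `add_inverse` helpers, and the relation labels below are all hypothetical names chosen for illustration.

```python
# Hypothetical toy store: facts are indexed by (subject, relation) only,
# mirroring text that always mentions a before b.
forward_facts = {("Tom Cruise", "mother"): "Mary Lee Pfeiffer"}


def query(facts, subject, relation):
    """Return the stored object for (subject, relation), or None if absent."""
    return facts.get((subject, relation))


def add_inverse(facts, relation, inverse_relation):
    """Return a copy of facts that also stores (b, inverse_relation) -> a."""
    extended = dict(facts)
    for (subject, rel), obj in facts.items():
        if rel == relation:
            extended[(obj, inverse_relation)] = subject
    return extended


# Forward direction: the fact is stored under this key, so the lookup succeeds.
print(query(forward_facts, "Tom Cruise", "mother"))

# Reverse direction: nothing is stored under this key, so the lookup fails.
print(query(forward_facts, "Mary Lee Pfeiffer", "son"))

# Only after the inverse entry is added does the reverse lookup succeed.
both_directions = add_inverse(forward_facts, "mother", "son")
print(query(both_directions, "Mary Lee Pfeiffer", "son"))
```

The analogy is deliberately crude: it only illustrates that knowing a fact in one direction does not automatically make it retrievable in the other.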

The reversal curse affects LLMs in a variety of tasks. In a recent study, researchers from Renmin University of China brought this phenomenon to the attention of the research community, shedding light on its probable causes and suggesting potential mitigation strategies. They identify the training objective function as one of the key factors influencing the extent of the reversal curse.

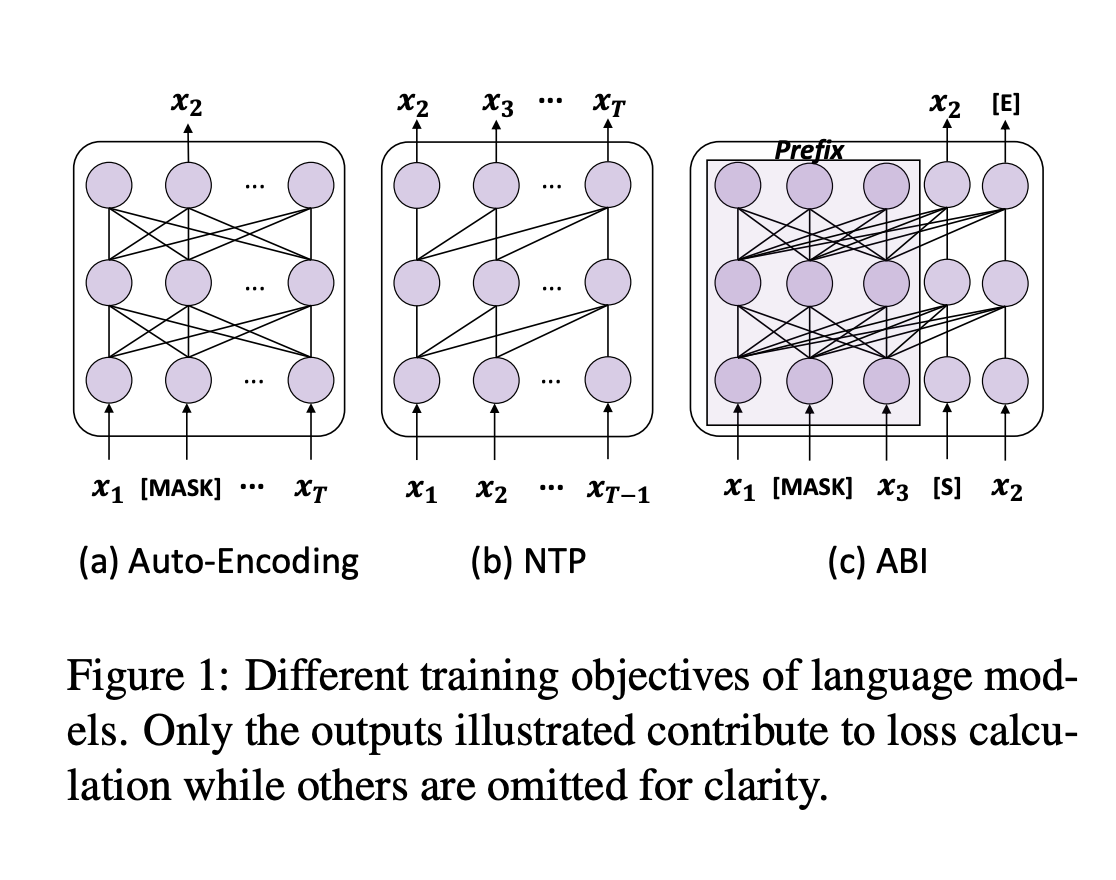

To fully grasp the reversal curse, we must first understand how LLMs are trained. Next-token prediction (NTP) is the dominant pre-training objective for current large language models such as GPT and Llama. In these models, a causal attention mask restricts each token to attending only to the tokens that precede it, so no token can take subsequent tokens into account. As a result, if a occurs before b in the training corpus, the model is trained to maximize the probability of b given a, but never the probability of a given b. There is therefore no guarantee that the model assigns a high probability to a when presented with b. In contrast, GLM models are pre-trained with an autoregressive blank infilling objective, in which a masked span is predicted from both the preceding and the succeeding tokens, making them more robust to the reversal curse.
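
The one-directional nature of next-token prediction can be made concrete with a minimal sketch in plain Python. It builds a causal attention mask and lists the (context, target) pairs that NTP trains on for a three-token sequence. The function names `causal_mask` and `ntp_pairs` are hypothetical helpers for illustration, not part of any library or of the paper's code.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def ntp_pairs(tokens):
    """List the (context, target) pairs that next-token prediction trains on."""
    return [(tokens[:t], tokens[t]) for t in range(1, len(tokens))]


tokens = ["a", "R", "b"]

# Each row shows which positions a token may look at: "x" allowed, "." blocked.
for row in causal_mask(len(tokens)):
    print(" ".join("x" if allowed else "." for allowed in row))

# Every target is predicted from earlier tokens only.
for context, target in ntp_pairs(tokens):
    print(context, "->", target)
```

In this sequence, b is a target with a in its context, but a is never a target with b in its context, which is the asymmetry the paragraph above describes.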

The authors put this hypothesis to the test by fine-tuning GLMs on “Name to Description” data built from fictitious names, then feeding the models the descriptions to retrieve the corresponding entities. The GLMs achieved approximately 80% accuracy on this task, while Llama’s accuracy was 0%.

To address this issue, the authors propose adapting the training objective of LLMs to something similar to autoregressive blank infilling (ABI). They fine-tuned models using Bidirectional Causal Language Model Optimization (BICO) and tested them on reversed translation and mathematical problems. BICO adopts an autoregressive blank infilling objective, similar to GLM, but with modifications tailored to causal language models: the authors introduced rotary (relative) position embeddings and modified the attention function to make it bidirectional. This fine-tuning method improved the model’s accuracy in reverse translation and mathematical problem-solving tasks.
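
To make the blank-infilling idea more tangible, here is a minimal sketch of how a GLM-style training example and its attention mask could be constructed. It follows the general description of autoregressive blank infilling (context tokens attend to each other in both directions, while the masked span is generated left to right with the full context visible). It is not the BICO implementation: the function names and the `[MASK]` placeholder are assumptions, and details such as special start and end tokens or position encodings are omitted.

```python
def blank_infilling_example(tokens, start, end, mask_token="[MASK]"):
    """Split tokens into a corrupted context (Part A) and the span to generate (Part B)."""
    part_a = tokens[:start] + [mask_token] + tokens[end:]
    part_b = tokens[start:end]
    return part_a, part_b


def infilling_attention_mask(len_a, len_b):
    """mask[i][j] is True when position i may attend to position j.

    Positions 0..len_a-1 hold Part A (context); the rest hold Part B (span).
    """
    n = len_a + len_b
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if j < len_a:
                mask[i][j] = True   # every position sees the whole context
            elif i >= len_a and j <= i:
                mask[i][j] = True   # span tokens see earlier span tokens
    return mask


sentence = ["Tom", "Cruise", "'s", "mother", "is", "Mary", "Lee", "Pfeiffer"]

# Blank out the leading name so it must be predicted from the text that follows it.
context, span = blank_infilling_example(sentence, 0, 2)
print(context)
print(span)

for row in infilling_attention_mask(len(context), len(span)):
    print(" ".join("x" if allowed else "." for allowed in row))
```

Because the blanked span can be conditioned on tokens that come after it in the original sentence, the model is also trained in the direction that plain next-token prediction never sees.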

In conclusion, the authors analyze the reversal curse and propose a fine-tuning strategy to mitigate it. By fine-tuning a causal language model with an ABI-like objective, the study sheds light on why LLMs underperform on reversed relations. Future work could examine how advanced techniques such as RLHF affect the reversal curse.
