Vision Transformers (ViTs) have emerged as a powerful architecture in computer vision because their self-attention mechanism processes image data effectively. Unlike Convolutional Neural Networks (CNNs), which extract features with convolutional layers, ViTs split an image into small patches and treat each patch as a token. This token-based approach scales efficiently to large datasets, which makes ViTs well suited to tasks such as image classification and object detection. Because the way information flows between tokens is decoupled from the way features are computed within each token, ViTs offer a flexible framework for diverse computer vision problems.
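To make the patch-to-token step concrete, here is a minimal NumPy sketch. The 224x224 input, the 16-pixel patch size, and the function name `patchify` are illustrative assumptions, not details given in the article; a real ViT would also apply a learned linear projection and add position embeddings to these tokens.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patch tokens."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    gh, gw = h // patch, w // patch
    # (gh, patch, gw, patch, c) -> (gh, gw, patch, patch, c) -> (gh * gw, patch * patch * c)
    grid = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(gh * gw, patch * patch * c)

# Assumed example input: one 224x224 RGB image cut into 16x16 patches.
image = np.arange(224 * 224 * 3, dtype=np.float32).reshape(224, 224, 3)
tokens = patchify(image, patch=16)
print(tokens.shape)  # (196, 768): 14 x 14 patches, each with 16 * 16 * 3 values
```

Each row of `tokens` is one patch, so self-attention later operates on a sequence of 196 tokens rather than on a pixel grid.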

Despite this success, a key open question is why pre-training helps ViTs. It has been widely assumed that pre-training improves downstream performance because the model learns useful feature representations. Recent research, however, asks whether those features are the sole source of the improvement or whether other factors, such as attention patterns, play a more significant role. This line of inquiry challenges the assumed dominance of feature learning and suggests that a better understanding of what drives ViTs’ effectiveness could lead to more efficient training methods and better performance.

The conventional way to use a pre-trained ViT is to fine-tune the entire model on a specific downstream task. Fine-tuning transfers attention patterns and learned features together, which makes it difficult to isolate the contribution of each. Knowledge distillation frameworks have been used to transfer logits or feature representations, but they largely ignore attention patterns. Without analysis focused on attention itself, its role in improving downstream results remains poorly understood, which motivates methods that assess the impact of attention maps independently.

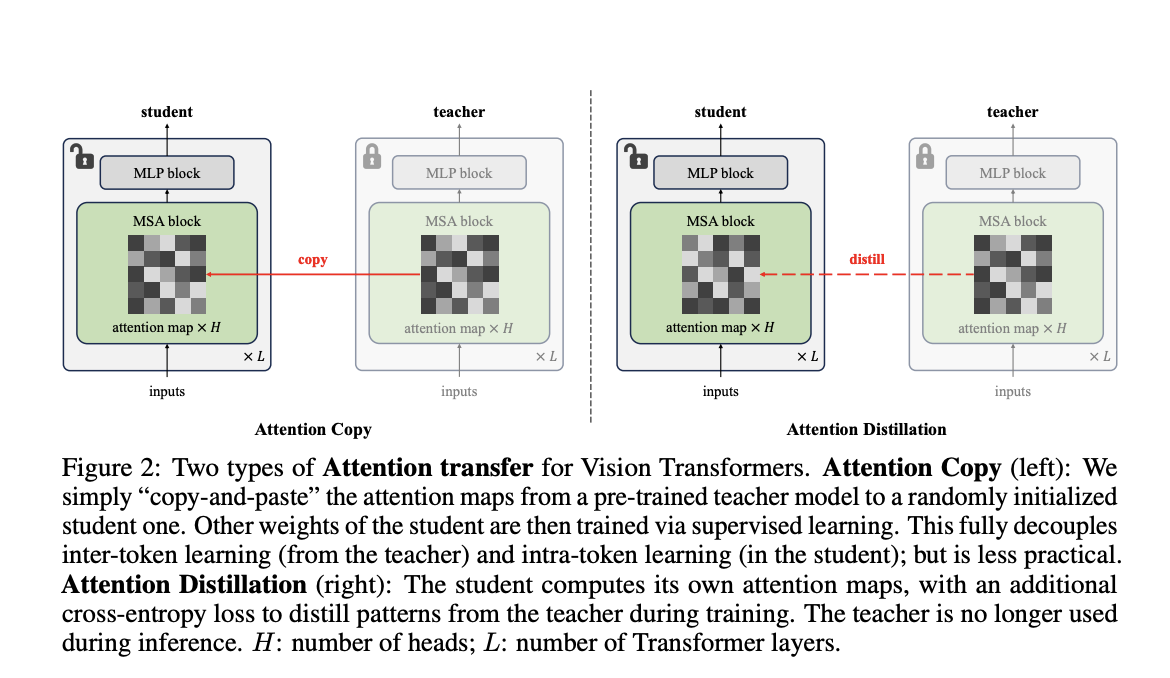

Researchers from Carnegie Mellon University and FAIR have introduced a method called “Attention Transfer,” designed to isolate and transfer only the attention patterns of a pre-trained ViT. The framework consists of two methods: Attention Copy and Attention Distillation. In Attention Copy, a pre-trained teacher ViT generates attention maps that are applied directly to a student model, while the student learns all of its other parameters from scratch. In Attention Distillation, a distillation loss trains the student to align its own attention maps with the teacher’s, so the teacher is needed only during training. Both methods separate intra-token computation from inter-token information flow, offering a fresh perspective on what pre-training contributes in ViTs.
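The Attention Copy idea can be sketched for a single attention head as follows. This is a simplified illustration, not the authors' implementation: the helper names, the single-head setup, the random weights, and the tiny dimensions are all assumptions made for the example.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_map(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray) -> np.ndarray:
    """Single-head attention map softmax(Q K^T / sqrt(d)), shape (tokens, tokens)."""
    q, k = x @ w_q, x @ w_k
    return softmax(q @ k.T / np.sqrt(q.shape[-1]))

def student_layer_with_copied_attention(x_student, teacher_attn, w_v, w_o):
    """Mix the student's own value vectors using the teacher's attention map."""
    return teacher_attn @ (x_student @ w_v) @ w_o

rng = np.random.default_rng(0)
n_tokens, dim = 4, 8  # hypothetical toy sizes

# Teacher and student see the same image but hold different token features.
teacher_x = rng.normal(size=(n_tokens, dim))
student_x = rng.normal(size=(n_tokens, dim))

# Frozen, pre-trained teacher supplies only the attention map (random stand-in weights).
teacher_attn = attention_map(
    teacher_x, rng.normal(size=(dim, dim)), rng.normal(size=(dim, dim))
)

# The student's value and output projections would be trained from scratch.
w_v = rng.normal(size=(dim, dim))
w_o = rng.normal(size=(dim, dim))
out = student_layer_with_copied_attention(student_x, teacher_attn, w_v, w_o)
print(out.shape)  # (4, 8)
```

In this sketch the student never computes its own queries or keys: the teacher decides which tokens exchange information, and the student decides what information each token carries.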

Attention Copy transfers pre-trained attention maps to a student model, guiding how tokens interact without carrying over any learned features. Because the maps come from the teacher, this setup requires both the teacher and the student at inference time, which adds computational cost. Attention Distillation instead refines the student’s own attention maps through a loss function that compares them with the teacher’s. After training, the teacher is no longer needed, which makes this approach more practical. Both methods rely on the structure of ViTs, in which self-attention maps dictate inter-token relationships, so the student can concentrate on learning its features from scratch.
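A distillation objective of this kind can be sketched as a task loss plus a penalty on the mismatch between student and teacher attention maps. The article does not specify the loss, so the KL divergence, the weight `distill_weight`, and the placeholder task loss below are assumptions chosen only for illustration.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_kl(student_attn: np.ndarray, teacher_attn: np.ndarray,
                 eps: float = 1e-12) -> float:
    """Mean KL(teacher || student) over the rows of two attention maps."""
    log_ratio = np.log(teacher_attn + eps) - np.log(student_attn + eps)
    return float((teacher_attn * log_ratio).sum(axis=-1).mean())

rng = np.random.default_rng(0)
teacher_attn = softmax(rng.normal(size=(4, 4)))  # stand-in for the frozen teacher's map
student_attn = softmax(rng.normal(size=(4, 4)))  # stand-in for the student's current map

task_loss = 1.25      # placeholder for the student's classification loss
distill_weight = 1.0  # hypothetical weighting between the two terms
attn_loss = attention_kl(student_attn, teacher_attn)
total_loss = task_loss + distill_weight * attn_loss
print(attn_loss > 0.0)  # True while the two maps differ
```

The attention term falls to zero only when the student reproduces the teacher's map, and since it is used only during training, the teacher can be dropped at inference.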

The results show how much of the benefit of a pre-trained ViT is carried by its attention patterns. Attention Distillation achieved 85.7% top-1 accuracy on ImageNet-1K, matching a fully fine-tuned model. Attention Copy was slightly less effective: it reached 85.1% accuracy, closing 77.8% of the gap between training from scratch and fine-tuning. Ensembling the student with the teacher raised accuracy to 86.3%, which indicates that their predictions are complementary. The study also found that transferring attention maps from teachers fine-tuned on the target task improved accuracy further, showing that attention patterns adapt to specific downstream requirements. Under data distribution shift, however, attention transfer underperformed weight fine-tuning, which points to limits in generalization.
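The "gap closed" figure is simple arithmetic on the reported accuracies. The article does not state the from-scratch baseline, so the sketch below infers it from the three numbers that are given (85.1%, 85.7%, and 77.8%); the function names are illustrative.

```python
def gap_closed(scratch: float, method: float, fine_tuned: float) -> float:
    """Fraction of the scratch-to-fine-tuning accuracy gap recovered by a method."""
    return (method - scratch) / (fine_tuned - scratch)

def implied_scratch(method: float, fine_tuned: float, fraction: float) -> float:
    """Solve fraction = (method - s) / (fine_tuned - s) for the baseline s."""
    return (method - fraction * fine_tuned) / (1.0 - fraction)

FINE_TUNED = 85.7    # reported: full fine-tuning (matched by Attention Distillation)
ATTN_COPY = 85.1     # reported: Attention Copy
COPY_FRACTION = 0.778  # reported: share of the gap closed by Attention Copy

scratch = implied_scratch(ATTN_COPY, FINE_TUNED, COPY_FRACTION)
print(round(scratch, 1))                               # about 83.0, inferred rather than reported
print(gap_closed(scratch, ATTN_COPY, FINE_TUNED))      # recovers roughly 0.778
print(gap_closed(scratch, FINE_TUNED, FINE_TUNED))     # 1.0 for Attention Distillation
```

Under these figures the whole gap is about 2.7 percentage points, of which Attention Copy recovers about 2.1.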

This research shows that pre-trained attention patterns alone can be sufficient for high downstream performance, which calls into question the necessity of traditional feature-centric pre-training. Attention Transfer decouples attention from feature learning and offers an alternative to fine-tuning pre-trained weights. Limitations remain, including sensitivity to distribution shift and uncertain scalability across diverse tasks, but the study opens new avenues for using ViTs in computer vision. Future work could address these limitations, refine attention transfer techniques, and explore their applicability to other domains.
