Recent work on AI scaling laws has shifted focus from merely increasing model size and training data to optimizing inference-time computation. This approach, exemplified by models such as OpenAI's o1 and DeepSeek's R1, improves model performance by spending additional computational resources during inference.

Test-time budget forcing has emerged as an efficient technique for large language models (LLMs), improving performance while keeping token generation minimal. Similarly, inference-time scaling has gained traction in diffusion models, particularly in reward-based sampling, where iterative refinement steers outputs toward better alignment with user preferences. This matters especially for text-to-image generation, where naïve sampling often fails to capture intricate specifications such as object relationships and logical constraints.
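
As a rough illustration of budget forcing, the toy sketch below puts a floor and a ceiling on the number of "thinking" tokens. It assumes a hypothetical `next_token` callable in place of a real LLM decoding step and a hypothetical `</think>` end-of-thinking marker. The recipe assumed here suppresses a premature end marker by appending "Wait" and forces the end marker once the maximum budget is spent.

```python
from typing import Callable, List

END = "</think>"  # hypothetical end-of-thinking marker


def budget_force(
    next_token: Callable[[List[str]], str], min_tokens: int, max_tokens: int
) -> List[str]:
    """Decode 'thinking' tokens, forcing their count into [min_tokens, max_tokens].

    next_token(prefix) is a hypothetical stand-in for one LLM decoding step.
    """
    tokens: List[str] = []
    while True:
        if len(tokens) >= max_tokens:
            tokens.append(END)  # budget spent: force the end of thinking
            return tokens
        tok = next_token(tokens)
        if tok == END and len(tokens) < min_tokens:
            tokens.append("Wait")  # too early: suppress the end marker, keep thinking
            continue
        tokens.append(tok)
        if tok == END:
            return tokens


def eager_model(prefix: List[str]) -> str:
    """Toy model that wants to stop after three reasoning steps."""
    return END if len(prefix) >= 3 else "step"


print(budget_force(eager_model, min_tokens=6, max_tokens=10))
# ['step', 'step', 'step', 'Wait', 'Wait', 'Wait', '</think>']
```

The same loop caps a model that never stops on its own: once `max_tokens` tokens exist, the end marker is appended regardless of what the model would produce next.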

Inference-time scaling methods for diffusion models fall broadly into two categories: fine-tuning-based approaches and particle-sampling approaches. Fine-tuning improves a model's alignment with specific tasks but requires retraining for each use case, which limits scalability. In contrast, particle sampling, used in techniques such as SVDD and CoDe, iteratively selects high-reward samples during denoising and significantly improves output quality.
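
To make the particle-sampling idea concrete, here is a toy one-dimensional sketch of greedy, per-step selection. It is not SVDD's or CoDe's actual implementation: `kernel` and `reward` are hypothetical stand-ins for a stochastic denoising step and a reward model, and scoring the noisy state directly is a simplification (practical methods typically score a prediction of the clean sample).

```python
import random
from typing import List


def kernel(x: float, rng: random.Random) -> float:
    """Hypothetical stochastic denoising step: shrink toward 0 and add noise."""
    return 0.9 * x + 0.3 * rng.gauss(0.0, 1.0)


def reward(x: float) -> float:
    """Hypothetical reward that prefers samples near 1.5."""
    return -((x - 1.5) ** 2)


def particle_sample(n_particles: int, n_steps: int, k: int, rng: random.Random) -> List[float]:
    """Greedy particle sampling: at every step each particle proposes k
    candidates from the stochastic kernel and keeps the highest-reward one."""
    particles = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    for _ in range(n_steps):
        particles = [
            max((kernel(x, rng) for _ in range(k)), key=reward) for x in particles
        ]
    return particles


def mean_reward(xs: List[float]) -> float:
    return sum(reward(x) for x in xs) / len(xs)


plain = particle_sample(200, 20, k=1, rng=random.Random(0))    # ordinary sampling
guided = particle_sample(200, 20, k=16, rng=random.Random(0))  # 16 candidates per step
print(mean_reward(plain), mean_reward(guided))
```

With `k = 1` this reduces to ordinary sampling. The selection only helps because the kernel is stochastic: with a deterministic step, all `k` candidates would be identical, which is exactly the obstacle that flow models pose.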

While these methods have been effective for diffusion models, their application to flow models has been limited due to the deterministic nature of their generation process. Recent work, including SoP, has introduced stochasticity to flow models, enabling particle sampling-based inference-time scaling. This study expands on such efforts by modifying the reverse kernel, further enhancing sampling diversity and effectiveness in flow-based generative models.

Researchers from KAIST propose an inference-time scaling method for pretrained flow models that overcomes the obstacle their deterministic generative process poses to particle sampling. They introduce three key innovations:

1. SDE-based generation to enable stochastic sampling.

2. VP (variance-preserving) interpolant conversion to enhance sample diversity.

3. Rollover Budget Forcing (RBF) for adaptive computational resource allocation.
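
The interpolant conversion listed above can be illustrated with a short calculation. Assume the flow model uses the linear interpolant x_t = (1 - t)·x0 + t·eps (t = 1 is pure noise) and the target VP path is alpha_s·x0 + sigma_s·eps with alpha_s² + sigma_s² = 1. Rescaling x_t by a factor c_s and matching coefficients gives c_s = alpha_s + sigma_s and t_s = sigma_s / (alpha_s + sigma_s). The cosine schedule below is an illustrative assumption, not necessarily the schedule the researchers use.

```python
import math
from typing import Tuple


def vp_coeffs(s: float) -> Tuple[float, float]:
    """Illustrative VP schedule (assumed cosine form): alpha^2 + sigma^2 = 1."""
    return math.cos(0.5 * math.pi * s), math.sin(0.5 * math.pi * s)


def linear_to_vp(s: float) -> Tuple[float, float]:
    """Return (t_s, c_s) such that c_s * x_{t_s} on the linear path
    x_t = (1 - t) * x0 + t * eps equals alpha_s * x0 + sigma_s * eps."""
    alpha, sigma = vp_coeffs(s)
    c = alpha + sigma  # from c * (1 - t) = alpha and c * t = sigma
    return sigma / c, c


x0, eps = 0.7, -1.2
for s in (0.0, 0.25, 0.5, 0.75, 1.0):
    t_s, c_s = linear_to_vp(s)
    alpha, sigma = vp_coeffs(s)
    linear_state = (1 - t_s) * x0 + t_s * eps
    vp_state = alpha * x0 + sigma * eps
    print(f"s={s:.2f} t_s={t_s:.3f} c_s={c_s:.3f} "
          f"match={math.isclose(c_s * linear_state, vp_state)}")
```

In practice this means a state on the VP path can be fed to the pretrained linear-interpolant model by dividing it by c_s and querying the model at time t_s. Because alpha_s and sigma_s are non-negative with alpha_s² + sigma_s² = 1, c_s is always at least 1, so the division is well-defined.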

Experiments on compositional text-to-image generation with FLUX, a pretrained flow model, show that these techniques effectively improve reward alignment. The proposed approach outperforms prior methods, demonstrating the advantages of inference-time scaling in flow models, particularly when combined with gradient-based techniques for differentiable rewards, such as the aesthetic scores used in aesthetic image generation.

Inference-Time Reward Alignment in Pretrained Flow Models via Particle Sampling

The goal of inference-time reward alignment is to generate high-reward samples from a pretrained flow model without any retraining. The objective is defined as follows:

$$
q^{*} = \arg\max_{q} \; \mathbb{E}_{x \sim q}\left[R(x)\right] - \alpha \, D_{\mathrm{KL}}\left(q \,\|\, p\right)
$$

where R denotes the reward function, p(x) represents the data distribution of the pretrained model, and the KL-divergence term, weighted by α > 0, keeps the aligned distribution q close to p(x) to preserve image quality.
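
For a KL-regularized objective of this kind, the optimum has a well-known closed form, p*(x) ∝ p(x)·exp(R(x)/α), where α is the weight on the KL term (a parameterization assumed here). Particle-sampling methods approximate this tilted distribution step by step during generation. The crudest approximation, sketched below as a baseline rather than the researchers' method, draws many samples from p and resamples them with importance weights exp(R(x)/α).

```python
import math
import random
from typing import Callable, List


def tilted_resample(
    samples: List[float],
    reward: Callable[[float], float],
    alpha: float,
    n_out: int,
    rng: random.Random,
) -> List[float]:
    """Approximate p*(x) ∝ p(x) exp(R(x) / alpha) from samples of p(x)
    via self-normalized importance resampling."""
    log_w = [reward(x) / alpha for x in samples]
    m = max(log_w)
    weights = [math.exp(lw - m) for lw in log_w]  # shift by max for stability
    return rng.choices(samples, weights=weights, k=n_out)


rng = random.Random(0)
prior = [rng.gauss(0.0, 1.0) for _ in range(20_000)]  # samples of p = N(0, 1)

# With R(x) = x and p = N(0, 1), the tilted target is N(1 / alpha, 1).
for alpha in (1e6, 1.0):
    out = tilted_resample(prior, lambda x: x, alpha, 20_000, rng)
    print(alpha, sum(out) / len(out))
```

A very large α leaves p essentially unchanged (mean near 0), while α = 1 shifts the mean toward 1. As α shrinks, the weights concentrate on a few samples and this naive estimator degrades, which is one motivation for steering samples during generation instead.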

Since sampling directly from the reward-aligned target distribution is intractable, the study adapts particle sampling techniques commonly used in diffusion models. However, flow models sample deterministically: once the initial noise is fixed, the entire trajectory is fixed, so even when a high-reward candidate is found at an early step, the sampler cannot branch from it to explore new directions. To address this, the researchers introduce inference-time stochastic sampling, converting the deterministic ODE-based sampling process into a stochastic SDE-based one.
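
The effect of the ODE-to-SDE conversion can be seen on a toy problem where everything is available in closed form. The sketch assumes a one-dimensional Gaussian "data" distribution N(2, 0.5²) and the linear interpolant x_t = (1 - t)·x0 + t·eps, so the exact velocity and score are known; a real flow model would supply a learned velocity instead, and the constant diffusion coefficient g is an illustrative choice. Setting g = 0 recovers the deterministic ODE sampler, while g > 0 gives an SDE whose drift is corrected by (g²/2)·score so that the marginals stay the same.

```python
import math
import random
from typing import Optional, Tuple

M, S = 2.0, 0.5  # toy data distribution N(M, S^2) (assumption)


def marginal(t: float) -> Tuple[float, float]:
    """Mean and variance of x_t = (1 - t) * x0 + t * eps with eps ~ N(0, 1)."""
    return (1.0 - t) * M, (1.0 - t) ** 2 * S**2 + t**2


def velocity(x: float, t: float) -> float:
    """Exact marginal velocity E[eps - x0 | x_t = x] for the Gaussian toy."""
    mean, var = marginal(t)
    return -M + (t - (1.0 - t) * S**2) / var * (x - mean)


def score(x: float, t: float) -> float:
    """Exact score d/dx log p_t(x)."""
    mean, var = marginal(t)
    return -(x - mean) / var


def sample(x1: float, n_steps: int = 100, g: float = 0.0,
           rng: Optional[random.Random] = None) -> float:
    """Integrate from t = 1 (noise) to t = 0 (data) with Euler(-Maruyama) steps.

    g = 0: deterministic probability-flow ODE.
    g > 0: SDE with the same marginals (drift gains a (g^2 / 2) * score term).
    """
    rng = rng or random.Random()
    x, dt = x1, 1.0 / n_steps
    for i in range(n_steps):
        t = 1.0 - i * dt
        drift = -velocity(x, t) + 0.5 * g**2 * score(x, t)
        x += drift * dt
        if g > 0.0:
            x += g * math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return x


print(sample(0.3), sample(0.3))  # ODE: identical outputs for the same noise
print(sample(0.3, g=1.0, rng=random.Random(0)),
      sample(0.3, g=1.0, rng=random.Random(1)))  # SDE: different outputs
```

Both samplers map N(0, 1) noise to the same N(2, 0.5²) distribution, but only the SDE produces different samples from the same starting point, which is what particle sampling needs in order to branch from a promising intermediate state.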

They also propose interpolant conversion, which replaces the flow model's linear interpolant with the variance-preserving (VP) interpolant used by diffusion models; this enlarges the search space and improves sample diversity. In addition, a dynamic compute allocation strategy, Rollover Budget Forcing (RBF), improves efficiency during inference-time scaling.
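
A minimal sketch of the rollover idea behind RBF follows, under stated assumptions: the total budget of proposals (standing in for function evaluations) is first split evenly across steps; at each step, candidates are drawn until one beats the current reward or the step's quota runs out; and whatever quota is left unused rolls over to the next step. The `propose` and `reward` callables and the "keep the best candidate if none improves" rule are hypothetical choices for illustration, not details confirmed by the source.

```python
import math
import random
from typing import Callable, List, Tuple


def rollover_budget_forcing(
    x_init: float,
    n_steps: int,
    total_budget: int,
    propose: Callable[[float, int, random.Random], float],
    reward: Callable[[float], float],
    rng: random.Random,
) -> Tuple[float, float, List[int]]:
    """Adaptive per-step compute: unused proposals roll over to later steps."""
    assert total_budget >= n_steps, "need at least one proposal per step"
    base = total_budget // n_steps  # uniform initial quota per step
    carry = 0                       # proposals left over from earlier steps
    x, r = x_init, reward(x_init)
    used_per_step: List[int] = []
    for step in range(n_steps):
        quota = base + carry
        best_x, best_r, used = x, -math.inf, 0
        while used < quota:
            cand = propose(x, step, rng)
            cand_r = reward(cand)
            used += 1
            if cand_r > best_r:
                best_x, best_r = cand, cand_r
            if cand_r > r:
                break  # improvement found: stop early, keep the rest of the quota
        carry = quota - used
        x, r = best_x, best_r
        used_per_step.append(used)
    return x, r, used_per_step


def propose(x: float, step: int, rng: random.Random) -> float:
    """Hypothetical stochastic step whose noise shrinks over time."""
    return x + rng.gauss(0.0, 1.0) / (step + 1)


x, r, used = rollover_budget_forcing(
    0.0, n_steps=10, total_budget=50, propose=propose,
    reward=lambda v: -abs(v - 3.0), rng=random.Random(0),
)
print(x, r, used, sum(used))
```

Steps where an improvement is found quickly leave budget for harder steps later, which is the sense in which compute is allocated adaptively; the total number of proposals never exceeds the budget.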

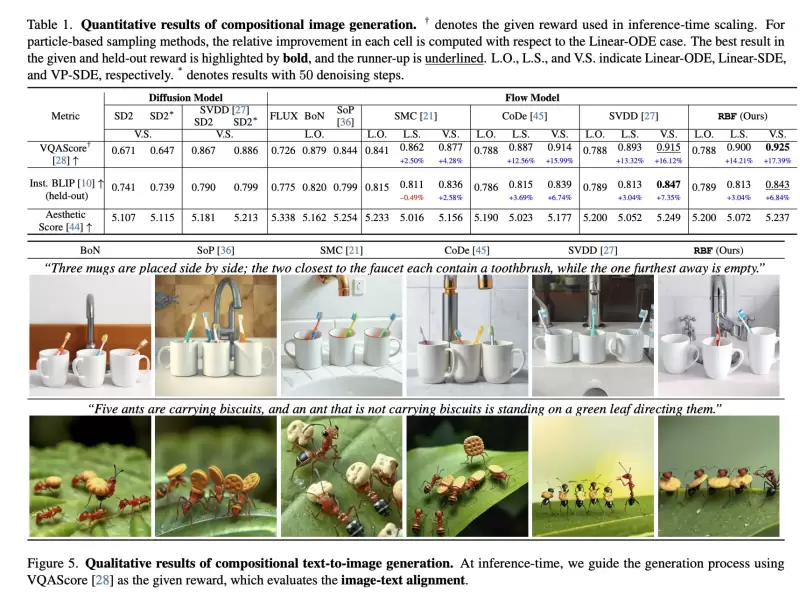

The study presents experimental results on particle sampling methods for inference-time reward alignment, focusing on compositional text-to-image and quantity-aware image generation tasks using FLUX as the pretrained flow model. Metrics such as VQAScore and RSS are used to assess alignment and accuracy.

The results indicate that inference-time stochastic sampling improves efficiency and that interpolant conversion further enhances performance. Flow-based particle sampling yields higher-reward outputs than diffusion-model baselines without compromising image quality. The proposed RBF method allocates the compute budget effectively, achieving the best reward alignment and accuracy among the compared methods.

Qualitative and quantitative findings confirm the effectiveness of this approach in generating precise, high-quality images.

In summary, this research introduces an inference-time scaling method for flow models, incorporating three key innovations:

1. ODE-to-SDE conversion for enabling particle sampling.

2. Linear-to-VP interpolant conversion to enhance diversity and search efficiency.

3. RBF for adaptive compute allocation.

While diffusion models benefit from stochastic sampling during denoising, flow models require tailored approaches because their sampling is deterministic. The proposed VP-SDE-based generation makes particle sampling effective for flow models, and RBF optimizes compute usage. Experimental results show that this method surpasses existing inference-time scaling techniques, improving performance while maintaining high-quality outputs in flow-based image and video generation models.
