Recent Advancements in AI Scaling Laws Have Shifted from Merely Increasing Model Size and Training Data to Optimizing Inference-Time Computation

Mar 30, 2025 at 02:48 am

This approach, exemplified by models like OpenAI o1 and DeepSeek R1, enhances model performance by leveraging additional computational resources during inference.

Recent advancements in AI scaling laws have shifted from merely increasing model size and training data to optimizing inference-time computation. This approach, exemplified by models like OpenAI's o1 and DeepSeek's R1, enhances model performance by spending additional computational resources during inference.

Test-time budget forcing has emerged as an efficient technique in large language models (LLMs), enabling improved performance with minimal token generation. Similarly, inference-time scaling has gained traction in diffusion models, particularly in reward-based sampling, where iterative refinement helps generate outputs that better align with user preferences. This method is crucial for text-to-image generation, where naïve sampling often fails to fully capture intricate specifications, such as object relationships and logical constraints.

Inference-time scaling methods for diffusion models can be broadly categorized into fine-tuning-based and particle-sampling approaches. Fine-tuning improves model alignment with specific tasks but requires retraining for each use case, limiting scalability. In contrast, particle sampling—used in techniques like SVDD and CoDe—selects high-reward samples iteratively during denoising, significantly improving output quality.
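As a rough illustration of particle sampling, the sketch below runs a greedy search on a one-dimensional toy chain: at every step it draws several stochastic proposals and keeps the one with the highest reward. The reward function, the noise scale, and the names `particle_sample` and `mean_final_reward` are hypothetical stand-ins, not the SVDD or CoDe implementations, and the reward is evaluated directly on the intermediate state rather than on a predicted clean image.

```python
import random


def reward(x, target=2.0):
    """Toy reward: higher when x is closer to the target."""
    return -(x - target) ** 2


def particle_sample(num_particles, num_steps=10, noise_scale=0.3, seed=0):
    """Greedy particle sampling on a 1D toy 'denoising' chain.

    At every step, draw num_particles stochastic proposals for the next
    state and keep the one with the highest reward.
    """
    rng = random.Random(seed)
    x = 0.0  # stand-in for the initial noise sample
    for _ in range(num_steps):
        candidates = [x + rng.gauss(0.0, noise_scale) for _ in range(num_particles)]
        x = max(candidates, key=reward)
    return x


def mean_final_reward(num_particles, trials=50):
    """Average the final reward over several seeds."""
    total = sum(reward(particle_sample(num_particles, seed=s)) for s in range(trials))
    return total / trials


if __name__ == "__main__":
    print("1 particle  :", mean_final_reward(1))
    print("16 particles:", mean_final_reward(16))
```

With a single particle the chain is an unguided random walk, so the gap between the two averages gives a feel for what the extra inference-time compute buys in this toy setting.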

While these methods have been effective for diffusion models, their application to flow models has been limited due to the deterministic nature of their generation process. Recent work, including SoP, has introduced stochasticity to flow models, enabling particle sampling-based inference-time scaling. This study expands on such efforts by modifying the reverse kernel, further enhancing sampling diversity and effectiveness in flow-based generative models.

Researchers from KAIST propose an inference-time scaling method for pretrained flow models, addressing their limitations in particle sampling due to a deterministic generative process. They introduce three key innovations:

1. SDE-based generation to enable stochastic sampling.

2. VP interpolant conversion for enhancing sample diversity.

3. Rollover Budget Forcing (RBF) for adaptive computational resource allocation.

Experimental results on compositional text-to-image generation tasks with FLUX, a pretrained flow model, demonstrate that these techniques effectively improve reward alignment. The proposed approach outperforms prior methods, showcasing the advantages of inference-time scaling in flow models, particularly when combined with gradient-based techniques for differentiable rewards like aesthetic image generation.

Inference-Time Reward Alignment in Pretrained Flow Models via Particle Sampling

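The goal of inference-time reward alignment is to generate high-reward samples from a pretrained flow model without any retraining. The objective is the standard KL-regularized reward maximization:

$$p^{*} = \arg\max_{q} \; \mathbb{E}_{x \sim q}\left[R(x)\right] - \beta \, D_{\mathrm{KL}}\left(q \,\|\, p\right)$$

where R denotes the reward function, p(x) represents the original distribution of the pretrained model, and β weights the KL divergence term, which keeps the aligned distribution close to p(x) in order to maintain image quality.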

Since direct sampling from the reward-aligned target distribution is challenging, the study adapts particle sampling techniques commonly used in diffusion models. However, flow models rely on deterministic sampling, which limits exploration in new directions even when high-reward samples are found in early iterations. To address this, the researchers introduce inference-time stochastic sampling by converting the deterministic process into a stochastic one.
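The deterministic-to-stochastic conversion can be sketched on a toy problem where everything is known in closed form. Assuming generation time s runs from 0 (noise) to 1 (data), a flow with velocity u and marginal score ∇log p can be replaced by the SDE dx = [u + (g²/2) ∇log p] ds + g dW, which keeps the same marginals for any noise level g. The Gaussian target, the constant g, and all function names below are illustrative assumptions; a real model would supply a learned velocity, and the sign of the score term flips under the opposite time convention.

```python
import math
import random
import statistics

M, SD = 2.0, 0.5  # toy data distribution N(M, SD^2); purely illustrative


def mu(s):
    """Mean of the marginal at time s for x_s = (1 - s) * noise + s * data."""
    return s * M


def var(s):
    """Variance of the marginal at time s."""
    return (1.0 - s) ** 2 + (s * SD) ** 2


def velocity(x, s):
    """Velocity of the deterministic flow transporting N(0, 1) to N(M, SD^2)."""
    dlog_sigma = (s * SD ** 2 - (1.0 - s)) / var(s)  # sigma'(s) / sigma(s)
    return M + dlog_sigma * (x - mu(s))


def score(x, s):
    """Score (gradient of the log density) of the Gaussian marginal at time s."""
    return -(x - mu(s)) / var(s)


def generate(g, num_steps=200, rng=random):
    """Euler-Maruyama from s=0 (noise) to s=1 (data); g=0 recovers the ODE."""
    ds = 1.0 / num_steps
    x = rng.gauss(0.0, 1.0)
    for k in range(num_steps):
        s = k * ds
        drift = velocity(x, s) + 0.5 * g ** 2 * score(x, s)
        x += drift * ds + g * math.sqrt(ds) * rng.gauss(0.0, 1.0)
    return x


def summarize(g, n=2000, seed=0):
    """Empirical mean and standard deviation of n generated samples."""
    rng = random.Random(seed)
    xs = [generate(g, rng=rng) for _ in range(n)]
    return statistics.mean(xs), statistics.pstdev(xs)


if __name__ == "__main__":
    print("ODE (g=0):", summarize(0.0))
    print("SDE (g=1):", summarize(1.0))
```

Both settings should land close to the target mean and spread, but only the stochastic version produces different outcomes from the same starting noise, which is what particle sampling needs in order to explore.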

Moreover, they propose interpolant conversion to enlarge the search space and improve diversity by aligning flow model sampling with diffusion models. A dynamic compute allocation strategy is employed to enhance efficiency during inference-time scaling.
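One way to picture interpolant conversion: two schedules of the form x = α · data + σ · noise describe the same samples, up to a rescaling, whenever they share the same signal-to-noise ratio α/σ. The sketch below maps a variance-preserving (VP) time s to the matching linear time t and scale factor c. The trigonometric VP schedule, the convention that t = 0 is data and t = 1 is noise, and the function names are assumptions made for illustration and may differ from the schedule used in the study.

```python
import math


def linear_interpolant(t):
    """Linear schedule: x_t = alpha_t * data + sigma_t * noise with alpha=1-t, sigma=t."""
    return 1.0 - t, t


def vp_interpolant(s):
    """Trigonometric VP schedule with alpha^2 + sigma^2 = 1 (an assumed choice)."""
    return math.cos(0.5 * math.pi * s), math.sin(0.5 * math.pi * s)


def linear_to_vp(s):
    """Return (t, c) such that c * x_t under the linear schedule matches VP time s.

    t is chosen so both schedules share the same signal-to-noise ratio, and
    c rescales the sample so the coefficients match exactly.
    """
    alpha_bar, sigma_bar = vp_interpolant(s)
    t = sigma_bar / (alpha_bar + sigma_bar)  # solves (1 - t) / t = alpha_bar / sigma_bar
    alpha, sigma = linear_interpolant(t)
    c = 1.0 / math.hypot(alpha, sigma)       # VP coefficients have unit norm
    return t, c


if __name__ == "__main__":
    for s in (0.0, 0.25, 0.5, 0.75, 1.0):
        t, c = linear_to_vp(s)
        print(f"s={s:.2f} -> t={t:.4f}, scale={c:.4f}")
```

Because the mapping only changes which time is queried and how the sample is scaled, a pretrained linear-interpolant model can in principle be sampled along the VP path without retraining.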

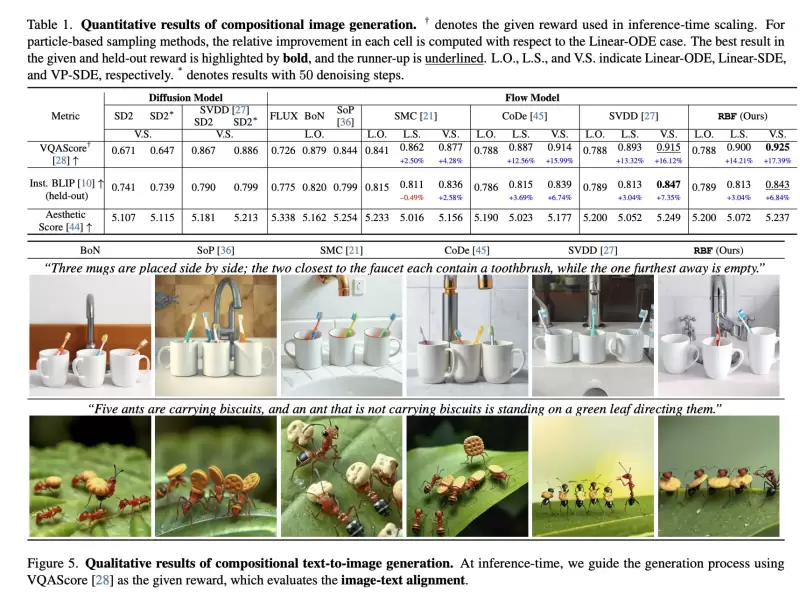

The study presents experimental results on particle sampling methods for inference-time reward alignment, focusing on compositional text-to-image and quantity-aware image generation tasks using FLUX as the pretrained flow model. Metrics such as VQAScore and RSS are used to assess alignment and accuracy.

The results indicate that inference-time stochastic sampling improves efficiency, and interpolant conversion further enhances performance. Flow-based particle sampling yields higher-reward outputs than diffusion models without compromising image quality. The proposed RBF method allocates the compute budget effectively, achieving the best reward alignment and accuracy results.

Qualitative and quantitative findings confirm the effectiveness of this approach in generating precise, high-quality images.

In summary, this research introduces an inference-time scaling method for flow models, incorporating three key innovations:

1. ODE-to-SDE conversion for enabling particle sampling.

2. Linear-to-VP interpolant conversion to enhance diversity and search efficiency.

3. RBF for adaptive compute allocation.
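The article does not spell out how RBF allocates compute, so the following is only one plausible reading of "rollover budget forcing", written as a toy: each step gets a fixed quota of proposals, stops as soon as a proposal beats the current reward, and passes any unused proposals on to the next step. The quota, the toy reward, and the function name `rollover_budget_sampling` are hypothetical, and the reward is again evaluated directly on the state rather than on a predicted clean sample.

```python
import random


def reward(x, target=2.0):
    """Toy reward: higher when x is closer to the target."""
    return -(x - target) ** 2


def rollover_budget_sampling(num_steps=10, quota=4, noise_scale=0.3, seed=0):
    """Toy budget-forced search with rollover.

    Each step may spend `quota` proposals plus whatever earlier steps saved.
    A step ends as soon as a proposal beats the current reward; the unused
    proposals roll over to the next step.
    """
    rng = random.Random(seed)
    x, rollover, used = 0.0, 0, 0
    for _ in range(num_steps):
        budget = quota + rollover
        best, spent = None, 0
        while spent < budget:
            cand = x + rng.gauss(0.0, noise_scale)
            spent += 1
            if best is None or reward(cand) > reward(best):
                best = cand
            if reward(cand) > reward(x):
                break  # improvement found: stop early and save the rest
        rollover = budget - spent
        used += spent
        x = best  # without an improvement, fall back to the best proposal seen
    return x, used


if __name__ == "__main__":
    x, used = rollover_budget_sampling()
    print(f"final x={x:.3f}, reward={reward(x):.3f}, proposals used={used} of 40")
```

The total number of proposals never exceeds `num_steps * quota`, so the scheme redistributes a fixed budget toward the steps where improvements are hard to find instead of spending it uniformly.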

While diffusion models benefit from stochastic sampling during denoising, flow models require tailored approaches due to their deterministic nature. The proposed VP-SDE-based generation effectively integrates particle sampling, and RBF optimizes compute usage. Experimental results demonstrate that this method surpasses existing inference-time scaling techniques, improving performance while maintaining high-quality outputs in flow-based image and video generation models.
