FlashInfer: An AI Library and Kernel Generator Tailored for LLM Inference

Jan 05, 2025 at 11:11 am

Large Language Models (LLMs) have become an integral part of modern AI applications, powering tools like chatbots and code generators. However, the increased reliance on these models has revealed critical inefficiencies in inference processes. Attention mechanisms, such as FlashAttention and SparseAttention, often struggle with diverse workloads, dynamic input patterns, and GPU resource limitations. These challenges, coupled with high latency and memory bottlenecks, underscore the need for a more efficient and flexible solution to support scalable and responsive LLM inference.

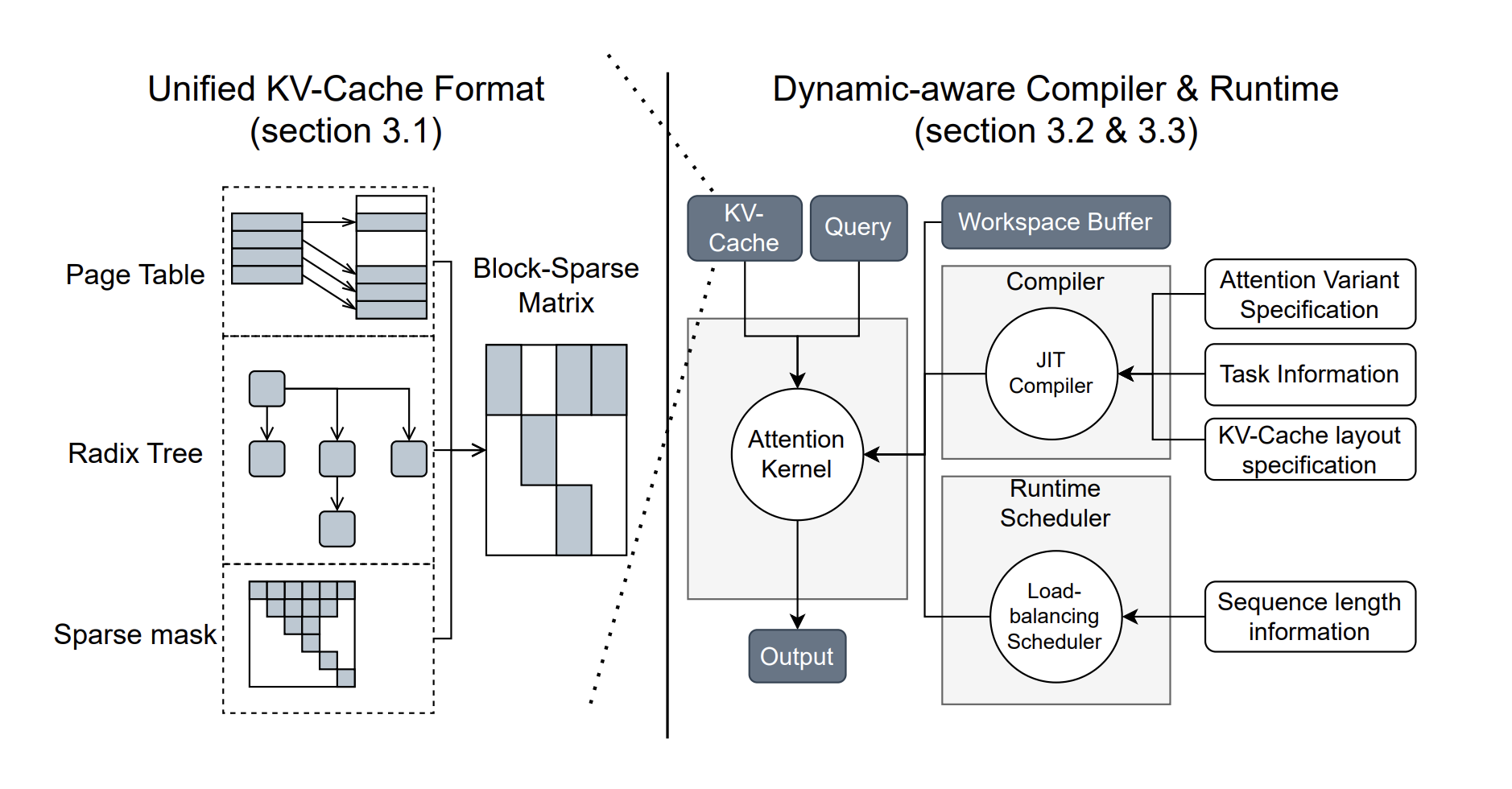

To address these challenges, researchers from the University of Washington, NVIDIA, Perplexity AI, and Carnegie Mellon University have developed FlashInfer, an AI library and kernel generator tailored for LLM inference. FlashInfer provides high-performance GPU kernel implementations for several attention mechanisms, including FlashAttention, SparseAttention, and PagedAttention, as well as for sampling. Its design prioritizes flexibility and efficiency, addressing key challenges in LLM inference serving.

FlashInfer incorporates a block-sparse format to handle heterogeneous KV-cache storage efficiently and employs dynamic, load-balanced scheduling to optimize GPU usage. With integration into popular LLM serving frameworks like SGLang, vLLM, and MLC-Engine, FlashInfer offers a practical and adaptable approach to improving inference performance.
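To make the block-sparse idea more concrete, the sketch below indexes a paged KV-cache with a CSR-style layout: each request owns a variable number of fixed-size pages, and one flat index array records which physical pages belong to which request. This is an illustration only, not FlashInfer's actual API; the names `BlockSparseKV`, `build_index`, and `token_slots`, and the exact field layout, are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BlockSparseKV:
    """CSR-style index over fixed-size KV-cache pages (hypothetical layout)."""
    page_size: int
    indptr: List[int]         # indices[indptr[i]:indptr[i + 1]] are request i's pages
    indices: List[int]        # physical page ids, concatenated across requests
    last_page_len: List[int]  # number of valid tokens in each request's final page


def build_index(page_tables: List[List[int]], seq_lens: List[int],
                page_size: int) -> BlockSparseKV:
    """Flatten per-request page tables into a single block-sparse index."""
    indptr, indices, last_page_len = [0], [], []
    for pages, n in zip(page_tables, seq_lens):
        needed = -(-n // page_size)  # ceiling division
        if len(pages) != needed:
            raise ValueError("page table does not match sequence length")
        indices.extend(pages)
        indptr.append(len(indices))
        # An empty request has no pages, so its last page holds zero tokens.
        last_page_len.append(n - (needed - 1) * page_size if n > 0 else 0)
    return BlockSparseKV(page_size, indptr, indices, last_page_len)


def token_slots(kv: BlockSparseKV, req: int) -> List[Tuple[int, int]]:
    """Return the (physical page, offset) slot of every token of request `req`."""
    pages = kv.indices[kv.indptr[req]:kv.indptr[req + 1]]
    slots = []
    for j, page in enumerate(pages):
        is_last = j == len(pages) - 1
        valid = kv.last_page_len[req] if is_last else kv.page_size
        slots.extend((page, off) for off in range(valid))
    return slots


# Two requests with different lengths share one pool of 4-token pages.
kv = build_index(page_tables=[[7, 2], [5]], seq_lens=[6, 3], page_size=4)
```

Because requests of different lengths are described by the same three arrays, a single kernel launch can in principle walk a heterogeneous batch without padding every sequence to the longest one.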

Technical Features and Benefits

FlashInfer introduces several technical innovations:

Performance Insights

FlashInfer demonstrates notable performance improvements across various benchmarks:

FlashInfer also excels in parallel decoding tasks, with composable formats enabling significant reductions in Time-To-First-Token (TTFT). For instance, tests on the Llama 3.1 model (70B parameters) show up to a 22.86% decrease in TTFT under specific configurations.
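For readers unfamiliar with the metric, TTFT is the delay between issuing a request and receiving the first generated token, and a "percent decrease" compares that delay against a baseline. The sketch below shows one simple way to measure and compare it; the helper names `measure_ttft` and `percent_decrease` and the stand-in generator are hypothetical, and nothing here reproduces the benchmark setup behind the figure quoted above.

```python
import time
from typing import Callable, Iterable


def measure_ttft(generate: Callable[[], Iterable[str]]) -> float:
    """Seconds from issuing the request until the first token arrives."""
    start = time.perf_counter()
    for _ in generate():
        # Stop timing as soon as the first token is produced.
        return time.perf_counter() - start
    raise RuntimeError("generator produced no tokens")


def percent_decrease(baseline: float, optimized: float) -> float:
    """Relative reduction of `optimized` versus `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - optimized) / baseline * 100.0


def fake_stream() -> Iterable[str]:
    """Stand-in for a streaming LLM endpoint (an assumption for this demo)."""
    time.sleep(0.01)  # pretend prefill work happens before the first token
    yield "Hello"
    yield " world"


ttft_seconds = measure_ttft(fake_stream)
```

In practice the measurement would be repeated over many requests and summarized by a mean or a percentile, since a single TTFT sample is noisy.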

Conclusion

FlashInfer offers a practical and efficient solution to the challenges of LLM inference, providing significant improvements in performance and resource utilization. Its flexible design and integration capabilities make it a valuable tool for advancing LLM-serving frameworks. By addressing key inefficiencies and offering robust technical solutions, FlashInfer paves the way for more accessible and scalable AI applications. As an open-source project, it invites further collaboration and innovation from the research community, ensuring continuous improvement and adaptation to emerging challenges in AI infrastructure.
