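The development of large language models (LLMs) has driven major progress in natural language processing (NLP), but it has also brought a set of challenges. Training and inference demand substantial computational resources, access to diverse, high-quality datasets is critical, and achieving balanced expert utilization in Mixture-of-Experts (MoE) architectures remains complex. These factors lead to inefficiency and higher costs, making it harder to scale open-source models to match proprietary ones. Ensuring robustness and stability during training is also an ongoing problem, because even minor instabilities can disrupt performance and require costly interventions.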

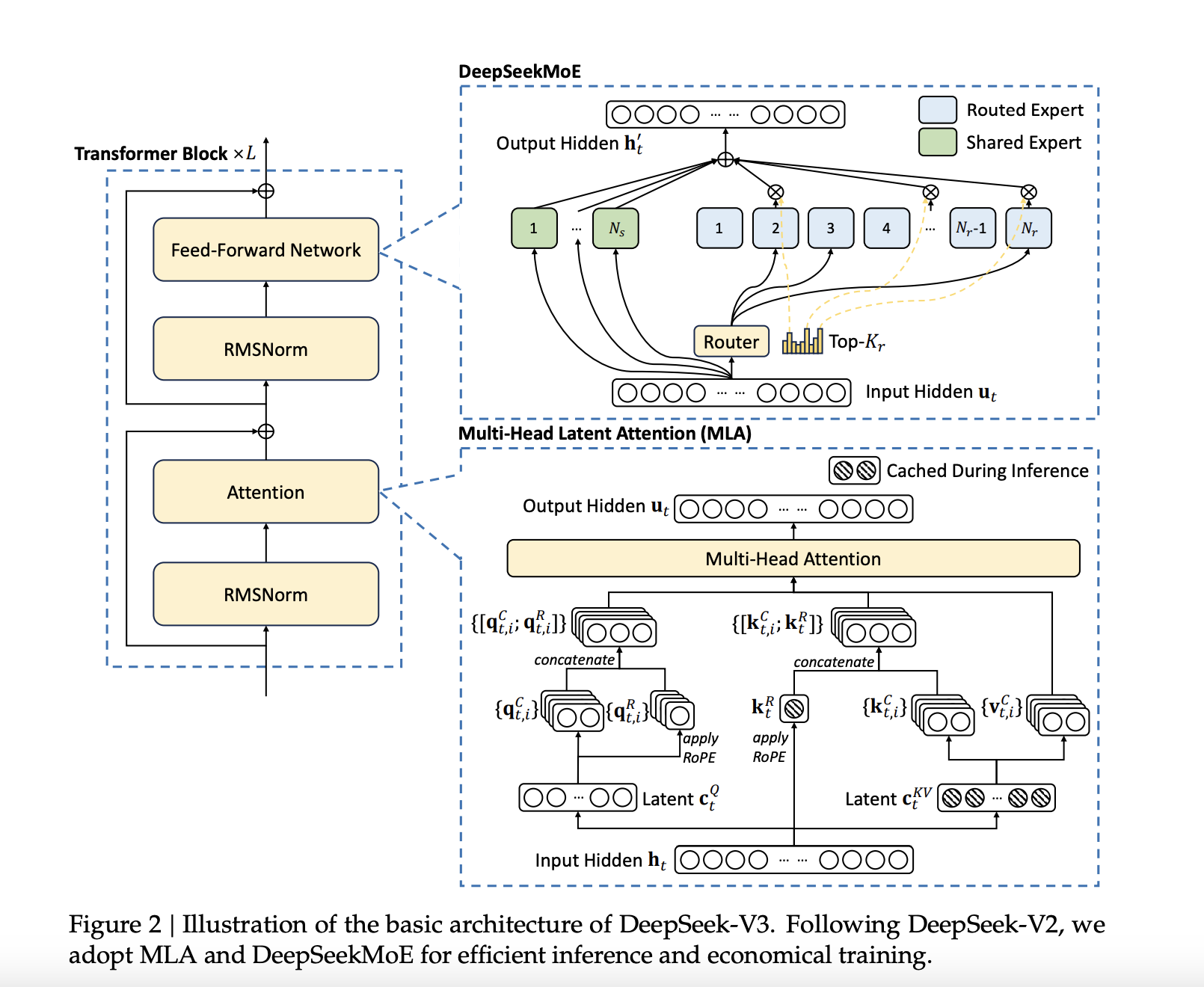

DeepSeek-AI has released DeepSeek-V3, a Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. The model builds on DeepSeek-AI's earlier work on the Multi-Head Latent Attention (MLA) and DeepSeekMoE architectures, which were refined in DeepSeek-V2 and DeepSeek-V2.5. DeepSeek-V3 is trained on 14.8 trillion high-quality tokens, giving it a broad and diverse knowledge base. Notably, DeepSeek-V3 is fully open-source, with its models, paper, and training framework available for the research community to explore.
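
As a rough illustration of what "37B parameters activated per token" means: 37B of 671B is about 5.5% of the total, because a router sends each token to only a few experts. The sketch below is a generic top-k MoE layer in plain Python, not DeepSeek's implementation; the toy linear router, the softmax over the selected scores, the stand-in expert functions, and all sizes are illustrative assumptions. It records which experts actually execute for one token.

```python
import math
import random


def topk_moe_forward(x, router_weights, experts, k):
    """Send one token vector x through its top-k experts and mix the outputs.

    router_weights: one weight vector per expert (a toy linear router).
    experts: callables mapping a vector to a vector of the same length.
    Only the k selected experts are called.
    """
    # Router affinity of this token for each expert (a dot product).
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected scores only, so the k gates sum to 1.
    peak = max(scores[i] for i in top)
    weights = {i: math.exp(scores[i] - peak) for i in top}
    total = sum(weights.values())
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)  # unselected experts never run
        out = [o + (weights[i] / total) * y_j for o, y_j in zip(out, y)]
    return out, top


# Toy setup (hypothetical sizes): 8 experts, 2 active per token.
random.seed(0)
dim, n_experts, k = 4, 8, 2
router = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
calls = []  # records which experts actually execute


def make_expert(idx):
    def expert(v):
        calls.append(idx)
        return [(idx + 1) * t for t in v]  # stand-in for an expert FFN
    return expert


experts = [make_expert(i) for i in range(n_experts)]
token = [random.gauss(0.0, 1.0) for _ in range(dim)]
out, chosen = topk_moe_forward(token, router, experts, k)
print(len(calls), "of", n_experts, "experts ran")  # prints: 2 of 8 experts ran
```

Only `k` of the `n_experts` expert functions are called; the remaining parameters sit idle for this token, which is where a sparse MoE's compute savings come from.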

Technical Details and Benefits

DeepSeek-V3 incorporates several innovations aimed at long-standing challenges in the field. An auxiliary-loss-free load-balancing strategy spreads computation evenly across experts while preserving model performance. In addition, a multi-token prediction training objective improves data efficiency and enables faster inference through speculative decoding.
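
The article does not spell out how the auxiliary-loss-free strategy works. Our reading of the DeepSeek-V3 technical report is that each expert gets a bias that is added to its routing score only when choosing the top-k experts; after each training step the bias is lowered for overloaded experts and raised for underloaded ones, so no balancing term is added to the loss. The simulation below is a minimal sketch of that idea under stated assumptions: the update step `gamma`, the skewed synthetic scores, and every function name are hypothetical.

```python
import random


def route_with_bias(scores, bias, k):
    """Select top-k experts by score + bias; the bias only affects selection."""
    adjusted = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)[:k]


def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, count in zip(bias, load):
        if count > mean_load:
            new_bias.append(b - gamma)
        elif count < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


def simulate(steps, tokens_per_step, n_experts, k, gamma, seed=0):
    """Route synthetic tokens for several steps; return last step's load and the biases."""
    rng = random.Random(seed)
    # Hypothetical skew: expert i gets a built-in advantage of 0.1 * i,
    # so without correction the highest-index experts win most tokens.
    skew = [0.1 * i for i in range(n_experts)]
    bias = [0.0] * n_experts
    load = [0] * n_experts
    for _ in range(steps):
        load = [0] * n_experts
        for _ in range(tokens_per_step):
            scores = [skew[i] + rng.gauss(0.0, 0.1) for i in range(n_experts)]
            for i in route_with_bias(scores, bias, k):
                load[i] += 1
        bias = update_bias(bias, load, gamma)  # no auxiliary loss involved
    return load, bias


def imbalance(load):
    """Max load divided by mean load (1.0 means perfectly balanced)."""
    return max(load) / (sum(load) / len(load))


first_load, _ = simulate(steps=1, tokens_per_step=256, n_experts=8, k=2, gamma=0.01)
last_load, final_bias = simulate(steps=200, tokens_per_step=256, n_experts=8, k=2, gamma=0.01)
print(f"max/mean load after step 1:   {imbalance(first_load):.2f}")
print(f"max/mean load after step 200: {imbalance(last_load):.2f}")
```

The biased scores are used only to pick experts; in a real model the gating weights would still come from the unbiased scores, which is why this kind of balancing leaves the training objective untouched. A larger `gamma` corrects skew faster but makes the biases oscillate more.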

Additionally, FP8 mixed-precision training improves computational efficiency by reducing GPU memory usage without sacrificing accuracy. The DualPipe algorithm further minimizes pipeline bubbles by overlapping computation with communication, reducing the overhead of all-to-all communication. These advances allow DeepSeek-V3 to generate 60 tokens per second during inference, a significant improvement over DeepSeek-V2.5.
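
FP8 mixed precision trades precision for speed and memory: an FP8 number in the common E4M3 layout has 4 exponent bits and 3 mantissa bits, and its largest finite value is 448. To keep values inside that range, tensors are usually scaled before conversion. The sketch below simulates E4M3 rounding with ordinary Python floats, using one absmax scale per block of values; the choice of E4M3, the per-block scaling scheme, and the helper names are illustrative assumptions, not DeepSeek's FP8 kernels.

```python
import math

E4M3_MAX = 448.0   # largest finite FP8 E4M3 value
E4M3_MIN_EXP = -6  # exponent of the smallest normal number (2**-6)
MANTISSA_BITS = 3


def round_to_e4m3(x):
    """Round a float to the nearest FP8 E4M3 value, saturating at +/-448.

    Normals have 3 mantissa bits; below 2**-6, subnormals are spaced 2**-9 apart.
    """
    if x == 0.0:
        return 0.0
    _, e = math.frexp(abs(x))       # abs(x) = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)  # binade of x, clamped to the subnormal range
    step = math.ldexp(1.0, exp - MANTISSA_BITS)  # spacing of E4M3 values here
    q = round(x / step) * step      # Python's round() breaks ties to even
    return max(-E4M3_MAX, min(E4M3_MAX, q))


def fake_quantize_block(values):
    """Scale a block so its largest magnitude maps to 448, round to E4M3, scale back."""
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return list(values)
    scale = amax / E4M3_MAX
    return [round_to_e4m3(v / scale) * scale for v in values]


block = [0.5, 1.0, -2.0, 3.0, 0.1]
restored = fake_quantize_block(block)
for original, approx in zip(block, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Because each block has its own scale, one outlier only costs precision inside its own block; for values that land in the normal range, the relative rounding error stays at or below 1/16.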

Performance Insights and Results

DeepSeek-V3 was evaluated on multiple benchmarks and shows strong performance. On educational benchmarks such as MMLU and MMLU-Pro, it scores 88.5 and 75.9 respectively, outperforming other open-source models. In mathematical reasoning, it sets a new standard with a score of 90.2 on MATH-500. The model also performs strongly on coding benchmarks such as LiveCodeBench.

Despite this performance, training remained relatively cheap: it required only 2.788 million H800 GPU hours, at a cost of $5.576 million. These results highlight DeepSeek-V3's efficiency and its potential to make high-performance LLMs more accessible.
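
The two figures quoted in the paragraph above are consistent with pricing every H800 GPU hour at a flat rate, which dividing one by the other makes explicit:

$$
\frac{\$5.576 \times 10^{6}}{2.788 \times 10^{6}\ \text{GPU-hours}} = \$2\ \text{per H800 GPU-hour}
$$

The dollar figure therefore appears to be the GPU-hour count multiplied by an hourly rental price of $2.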

Conclusion

DeepSeek-V3 marks a significant advance in open-source NLP research. By tackling the computational and architectural challenges of large-scale language models, DeepSeek-AI sets a new standard for open-source LLMs, balancing performance and efficiency well enough to make DeepSeek-V3 a competitive alternative to proprietary models. DeepSeek-AI's commitment to open-source development means the broader research community can build on these advances.

Check out the Paper, GitHub Page, and Model on Hugging Face. All credit for this research goes to the researchers of this project.
