The field of Natural Language Processing (NLP) has made significant strides with the development of large-scale language models (LLMs). However, this progress has brought its own set of challenges. Training and inference require substantial computational resources, the availability of diverse, high-quality datasets is critical, and achieving balanced utilization in Mixture-of-Experts (MoE) architectures remains complex. These factors contribute to inefficiencies and increased costs, posing obstacles to scaling open-source models to match proprietary counterparts. Moreover, ensuring robustness and stability during training is an ongoing issue, as even minor instabilities can disrupt performance and necessitate costly interventions.

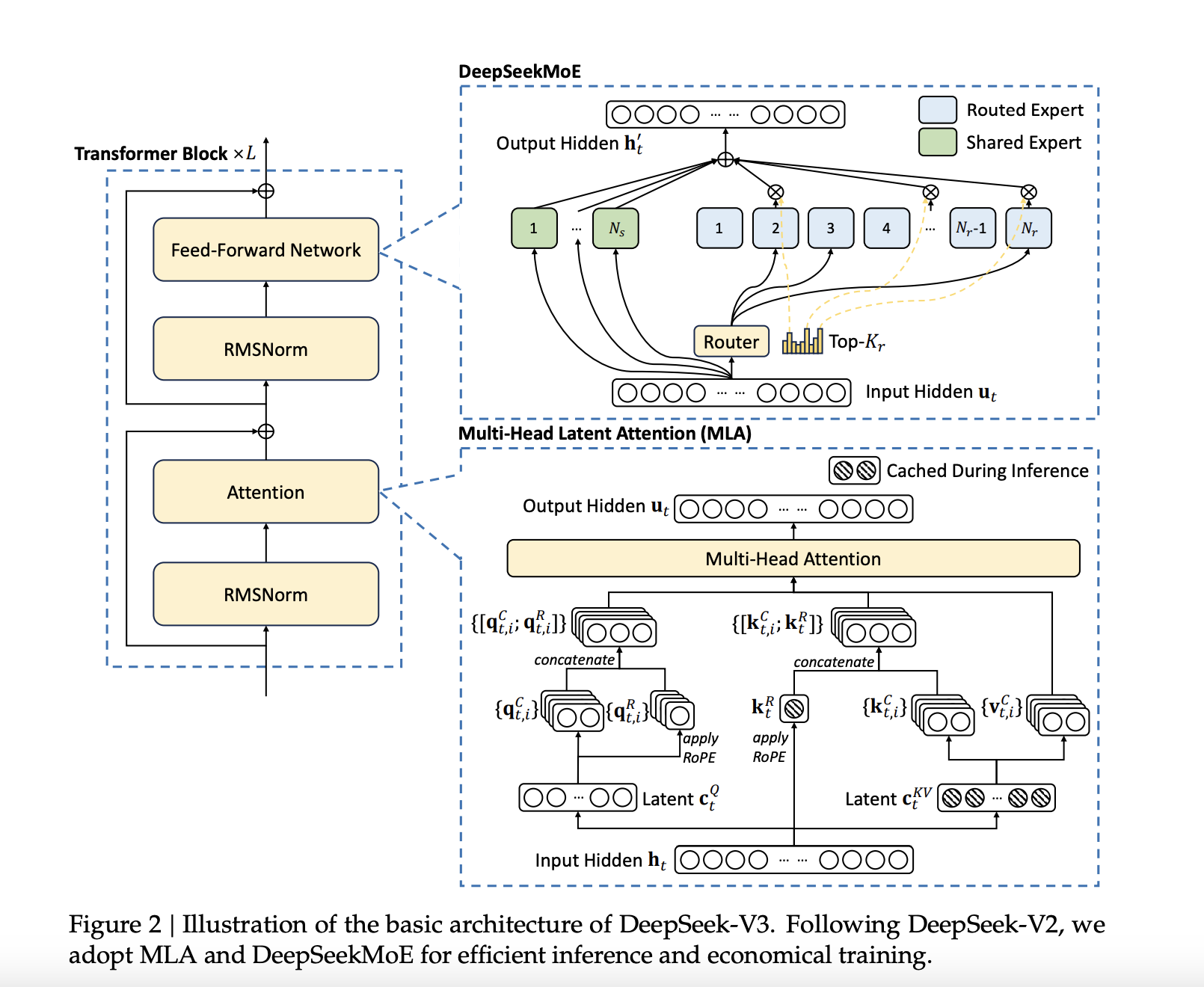

DeepSeek-AI has announced the release of DeepSeek-V3, a 671B Mixture-of-Experts (MoE) language model with 37B parameters activated per token. This latest model builds upon DeepSeek-AI's previous work on Multi-Head Latent Attention (MLA) and DeepSeekMoE architectures, which were refined in DeepSeek-V2 and DeepSeek-V2.5. DeepSeek-V3 is trained on a massive dataset of 14.8 trillion high-quality tokens, ensuring a broad and diverse knowledge base. Notably, DeepSeek-V3 is fully open-source, with accessible models, papers, and training frameworks for the research community to explore.

Technical Details and BenefitsSeveral innovations are incorporated into DeepSeek-V3 to address long-standing challenges in the field. An auxiliary-loss-free load balancing strategy efficiently distributes computational loads across experts while maintaining model performance. Moreover, a multi-token prediction training objective enhances data efficiency and enables faster inference through speculative decoding.

Additionally, FP8 mixed precision training improves computational efficiency by reducing GPU memory usage without sacrificing accuracy. The DualPipe algorithm further minimizes pipeline bubbles by overlapping computation and communication phases, reducing all-to-all communication overhead. These advancements allow DeepSeek-V3 to process 60 tokens per second during inference—a significant improvement over DeepSeek-V2.5.

Performance Insights and ResultsDeepSeek-V3 is evaluated across multiple benchmarks, showcasing strong performance. On educational datasets like MMLU and MMLU-Pro, DeepSeek-V3 achieves scores of 88.5 and 75.9, respectively, outperforming other open-source models. In mathematical reasoning tasks, DeepSeek-V3 sets new standards with a score of 90.2 on MATH-500. The model also performs exceptionally in coding benchmarks such as LiveCodeBench.

Despite these achievements, the training cost is kept relatively low at $5.576 million, requiring only 2.788 million H800 GPU hours. These results highlight DeepSeek-V3’s efficiency and its potential to make high-performance LLMs more accessible.

ConclusionDeepSeek-V3 marks a significant advancement in open-source NLP research. By tackling the computational and architectural challenges associated with large-scale language models, DeepSeek-AI establishes a new benchmark for efficiency and performance. DeepSeek-V3 sets a new standard for open-source LLMs, achieving a balance of performance and efficiency that makes it a competitive alternative to proprietary models. DeepSeek-AI's commitment to open-source development ensures that the broader research community can benefit from its advancements.

Check out the Paper, GitHub Page, and Model on Hugging Face. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 60k+ ML SubReddit.

Trending: LG AI Research Releases EXAONE 3.5: Three Open-Source Bilingual Frontier AI-level Models