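Large language models (LLMs) have shown great potential in reasoning tasks, using methods such as Chain-of-Thought (CoT) prompting to break complex problems into manageable steps. However, this capability comes with challenges. CoT prompting typically increases token usage, which drives up computational cost and energy consumption. This inefficiency is a problem for applications that need both precision and resource efficiency. Current LLMs often produce needlessly verbose outputs that do not always translate into better accuracy but do incur extra cost. The key challenge is striking a balance between reasoning performance and resource efficiency.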

A recent development in artificial intelligence (AI) aims to address the excessive token usage and high computational costs associated with Chain-of-Thought (CoT) prompting for Large Language Models (LLMs). A team of researchers from Nanjing University, Rutgers University, and UMass Amherst has proposed a novel Token-Budget-Aware LLM Reasoning Framework to optimize token efficiency.

The framework, named TALE (standing for Token-Budget-Aware LLM rEasoning), operates in two primary stages: budget estimation and token-budget-aware reasoning. Initially, TALE employs techniques like zero-shot prediction or regression-based estimators to assess the complexity of a reasoning task and derive an appropriate token budget. This budget is then seamlessly integrated into the CoT prompt, guiding the LLM to generate concise yet accurate responses.
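
The two stages can be illustrated with a minimal Python sketch. It assumes an `llm` callable that maps a prompt string to a text reply (a stand-in for any model API). The estimation prompt wording, the helper names `zero_shot_budget`, `budget_aware_prompt`, and `tale_style_prompt`, the fallback budget of 256, and the exact phrasing of the budget instruction are all hypothetical, not TALE's published implementation.

```python
import re
from typing import Callable

# Hypothetical estimation prompt; TALE's actual wording may differ.
ESTIMATE_TEMPLATE = (
    "Estimate the minimum number of tokens needed to answer the question "
    "below. Reply with a single integer.\nQuestion: {question}"
)


def zero_shot_budget(question: str, llm: Callable[[str], str], default: int = 256) -> int:
    """Stage 1 (budget estimation): ask the model itself for a token budget.

    Takes the first integer in the reply (at least 1); falls back to
    `default`, an arbitrary assumed value, if the reply has no number.
    """
    reply = llm(ESTIMATE_TEMPLATE.format(question=question))
    match = re.search(r"\d+", reply)
    return max(1, int(match.group())) if match else default


def budget_aware_prompt(question: str, budget: int) -> str:
    """Stage 2 (budget-aware reasoning): embed the budget in the CoT instruction."""
    # Assumed phrasing of the budget instruction.
    return f"{question}\nLet's think step by step and use less than {budget} tokens:"


def tale_style_prompt(question: str, llm: Callable[[str], str]) -> str:
    """Chain both stages: estimate a budget, then build the final CoT prompt."""
    return budget_aware_prompt(question, zero_shot_budget(question, llm))


if __name__ == "__main__":
    # A fake model that always answers the estimation prompt with "About 50 tokens."
    fake_llm = lambda prompt: "About 50 tokens."
    print(tale_style_prompt("What is 17 * 3?", fake_llm))
```

Because stage two only needs an integer, a regression-based estimator could replace `zero_shot_budget` without changing how the prompt is built.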

A key innovation within TALE is the concept of “Token Elasticity,” which identifies an optimal range of token budgets that minimizes token usage while preserving accuracy. Using iterative search techniques such as binary search, TALE can pinpoint the optimal budget for different tasks and LLM architectures. On average, the framework reduces token usage by 68.64% while lowering accuracy by less than 5%, which highlights both its effectiveness and its practicality.
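
A budget search of this kind can be sketched as a standard binary search. This is a minimal illustration, not TALE's actual search procedure: `search_min_budget` and `is_correct(budget)` are hypothetical names, where `is_correct` stands for an oracle that might, for instance, run the budget-aware prompt and compare the model's answer to a reference. The sketch also assumes correctness is monotone in the budget, which real LLM behavior may not always satisfy.

```python
from typing import Callable, Optional


def search_min_budget(is_correct: Callable[[int], bool], lo: int, hi: int) -> Optional[int]:
    """Return the smallest budget in [lo, hi] for which is_correct(budget) is True.

    Assumes monotonicity: if a budget yields a correct answer, every larger
    budget does too. Returns None if the range is empty or even `hi` fails.
    """
    if lo > hi or not is_correct(hi):
        return None
    while lo < hi:
        mid = (lo + hi) // 2
        if is_correct(mid):
            hi = mid       # mid is enough; look for an even smaller budget
        else:
            lo = mid + 1   # mid is too small; look at larger budgets
    return lo


if __name__ == "__main__":
    # Simulated oracle: pretend the answer is correct once the budget reaches 77.
    print(search_min_budget(lambda budget: budget >= 77, 1, 512))
```

With the simulated oracle above and a range of 1 to 512, the function returns 77. Since each oracle call would cost one model query, binary search keeps the number of queries logarithmic in the size of the budget range.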

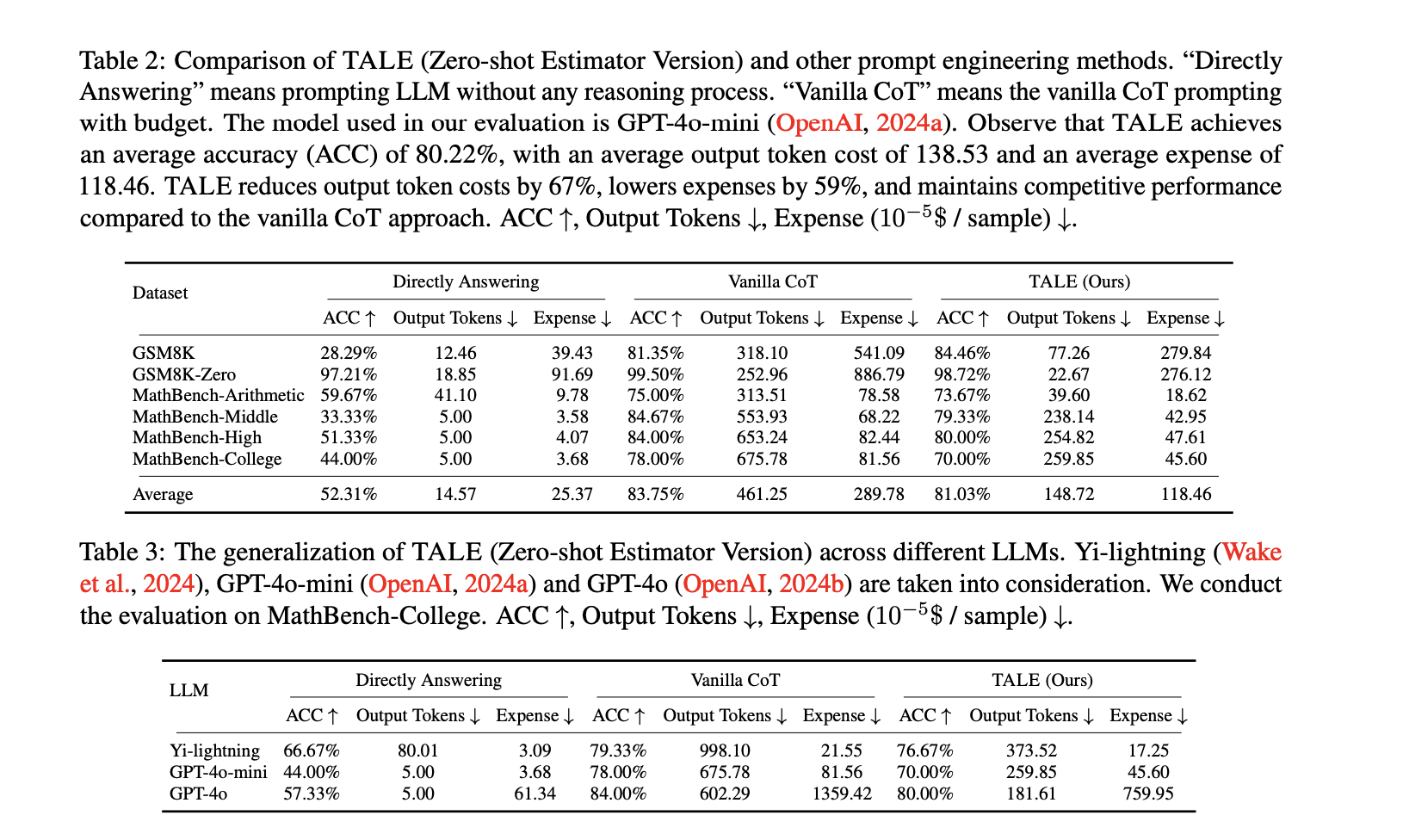

Experiments conducted on standard benchmarks, such as GSM8K and MathBench, showcase TALE's broad applicability and efficiency gains. For instance, on the GSM8K dataset, TALE achieved an impressive 84.46% accuracy, surpassing the Vanilla CoT method while simultaneously reducing token costs from 318.10 to 77.26 on average. When applied to the GSM8K-Zero setting, TALE achieved a stunning 91% reduction in token costs, all while maintaining an accuracy of 98.72%.
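
For scale, the GSM8K figures correspond to roughly a three-quarters cut in average token cost, somewhat larger than the 68.64% overall average reported for the framework:

$$
\text{reduction} = \frac{318.10 - 77.26}{318.10} = \frac{240.84}{318.10} \approx 75.7\%
$$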

Furthermore, TALE demonstrates strong generalizability across different LLMs, including GPT-4o-mini and Yi-lightning. When employed on the MathBench-College dataset, TALE achieved reductions in token costs of up to 70% while maintaining competitive accuracy. Notably, the framework also leads to significant reductions in operational expenses, cutting costs by 59% on average compared to Vanilla CoT. These results underscore TALE's capability to enhance efficiency without sacrificing performance, making it suitable for a diverse range of applications.

In conclusion, the Token-Budget-Aware LLM Reasoning Framework offers a practical solution to the inefficiency of token usage in reasoning tasks. By dynamically estimating and applying token budgets, TALE strikes a crucial balance between accuracy and cost-effectiveness. This approach ultimately reduces computational expenses and broadens the accessibility of advanced LLM capabilities. As AI continues to rapidly evolve, frameworks like TALE pave the way for more efficient and sustainable use of LLMs in both academic and industrial settings.
