Large Language Models (LLMs) have shown significant potential in reasoning tasks, using methods like Chain-of-Thought (CoT) to break complex problems into manageable steps. This capability comes at a price: CoT prompts often increase token usage, which raises computational cost and energy consumption. That inefficiency matters for applications that need both precision and resource efficiency. Current LLMs tend to generate unnecessarily long outputs, and the extra tokens add cost without always improving accuracy. The key challenge is balancing reasoning performance against resource efficiency.

A team of researchers from Nanjing University, Rutgers University, and UMass Amherst has proposed a Token-Budget-Aware LLM Reasoning Framework that aims to cut the excessive token usage and high computational cost associated with CoT prompting.

The framework, named TALE (Token-Budget-Aware LLM rEasoning), operates in two stages: budget estimation and token-budget-aware reasoning. First, TALE uses techniques such as zero-shot prediction or regression-based estimators to assess the complexity of a reasoning task and derive an appropriate token budget. This budget is then written into the CoT prompt, guiding the LLM to generate concise yet accurate responses.
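The two stages can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the prompt wording, and the word-count heuristic standing in for the budget estimator are all assumptions made for the example (the article describes zero-shot prediction or a regression-based estimator for that step).

```python
def estimate_budget(question: str, tokens_per_word: int = 4, floor: int = 16) -> int:
    """Hypothetical stand-in for stage 1 (budget estimation).

    A real estimator would query an LLM or a trained regressor; here the
    budget simply grows with the length of the question.
    """
    n_words = len(question.split())
    return max(floor, n_words * tokens_per_word)


def build_budget_prompt(question: str, budget: int) -> str:
    """Stage 2: write the token budget into a step-by-step prompt.

    The exact instruction wording is an assumption for illustration.
    """
    if budget <= 0:
        raise ValueError("budget must be a positive integer")
    return (
        f"{question}\n"
        f"Let's think step by step and use less than {budget} tokens."
    )


if __name__ == "__main__":
    q = "A train travels 60 km in 45 minutes. What is its average speed in km/h?"
    print(build_budget_prompt(q, estimate_budget(q)))
```

The resulting string would be sent to the model in place of a plain CoT prompt; everything else in the calling code can stay the same.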

A key concept within TALE is “Token Elasticity,” which identifies an optimal range of token budgets that minimizes token usage while preserving accuracy. Using iterative search techniques such as binary search, TALE can locate a suitable budget for different tasks and LLM architectures. On average, the framework achieves a 68.64% reduction in token usage with less than a 5% decrease in accuracy.
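The budget search can be illustrated with an ordinary binary search. The sketch below is a simplification under stated assumptions: `is_acceptable` is a hypothetical callback that would run the model with a given budget and report whether the answer is still correct, and the search assumes that once a budget is large enough, every larger budget is acceptable too. Real model behavior need not be this well behaved, which is part of what the elasticity analysis addresses.

```python
from typing import Callable


def search_budget(is_acceptable: Callable[[int], bool], low: int, high: int) -> int:
    """Return the smallest budget in [low, high] that is_acceptable accepts.

    Assumes acceptability is monotone in the budget (an idealization).
    """
    if low > high:
        raise ValueError("low must not exceed high")
    if not is_acceptable(high):
        raise ValueError("even the largest budget is not acceptable")
    while low < high:
        mid = (low + high) // 2
        if is_acceptable(mid):
            high = mid       # mid works, so try smaller budgets
        else:
            low = mid + 1    # mid is too tight, so move up
    return low


if __name__ == "__main__":
    # Toy oracle: pretend any budget of at least 77 tokens keeps the answer correct.
    print(search_budget(lambda b: b >= 77, 1, 512))
```

Each call to `is_acceptable` stands for one or more model queries, so the logarithmic number of probes is what keeps such a search affordable.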

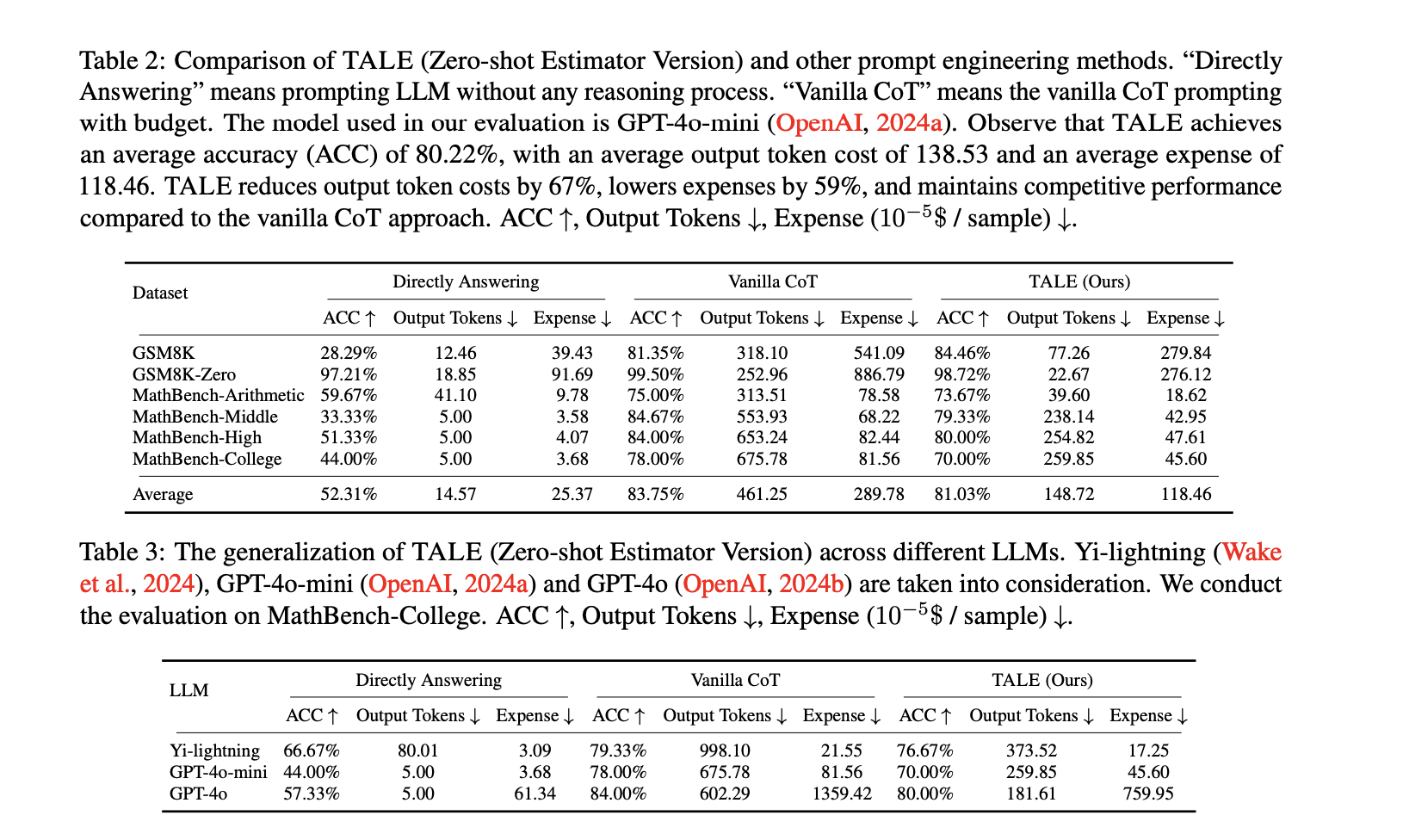

Experiments on standard benchmarks such as GSM8K and MathBench show TALE's efficiency gains across tasks. On the GSM8K dataset, TALE achieved 84.46% accuracy, surpassing the Vanilla CoT method, while reducing the average token cost from 318.10 to 77.26 tokens. In the GSM8K-Zero setting, TALE reduced token costs by 91% while maintaining an accuracy of 98.72%.
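To put the GSM8K figures in relative terms, the drop from 318.10 to 77.26 tokens is a reduction of roughly 75.7%. The helper below is only a convenience for checking that arithmetic; its name is made up for this example.

```python
def reduction_pct(before: float, after: float) -> float:
    """Percentage reduction when going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


if __name__ == "__main__":
    # Average token cost on GSM8K: Vanilla CoT vs. TALE, as reported above.
    print(f"{reduction_pct(318.10, 77.26):.1f}%")
```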

TALE also generalizes across different LLMs, including GPT-4o-mini and Yi-lightning. On the MathBench-College dataset, it reduced token costs by up to 70% while maintaining competitive accuracy. The framework also lowers operational expenses, cutting costs by 59% on average compared to Vanilla CoT. These results indicate that TALE can improve efficiency with little loss in performance, which makes it suitable for a wide range of applications.

In conclusion, the Token-Budget-Aware LLM Reasoning Framework offers a practical answer to inefficient token usage in reasoning tasks. By dynamically estimating and applying token budgets, TALE balances accuracy against cost. This reduces computational expenses and broadens access to advanced LLM capabilities. As AI continues to evolve, frameworks like TALE point toward more efficient and sustainable use of LLMs in both academic and industrial settings.
