Sa2VA: A Unified Model for Dense Grounded Understanding of Images and Videos

2025/01/13 03:31

Multi-Modal Large Language Models (MLLMs) have advanced rapidly on image and video tasks, including visual question answering, narrative generation, and interactive editing. Fine-grained video understanding remains a critical challenge, however: pixel-level segmentation, tracking driven by language descriptions, and visual question answering on specific video prompts are all still difficult. State-of-the-art video perception models excel at segmentation and tracking but lack open-ended language understanding and conversation capabilities. Video MLLMs, by contrast, perform strongly on video comprehension and question answering but fall short on perception tasks and visual prompts.

Existing attempts to address these video understanding challenges have followed two main lines of work: MLLMs and Referring Segmentation systems. MLLMs initially focused on better multi-modal fusion methods and feature extractors, then moved towards instruction tuning on LLMs with frameworks like LLaVA. More recent work, such as LLaVA-OneVision, tries to unify image, video, and multi-image analysis in a single framework. In parallel, Referring Segmentation systems have progressed from basic fusion modules to transformer-based methods that integrate segmentation and tracking within videos. Neither line of work, however, fully integrates perception with language understanding.

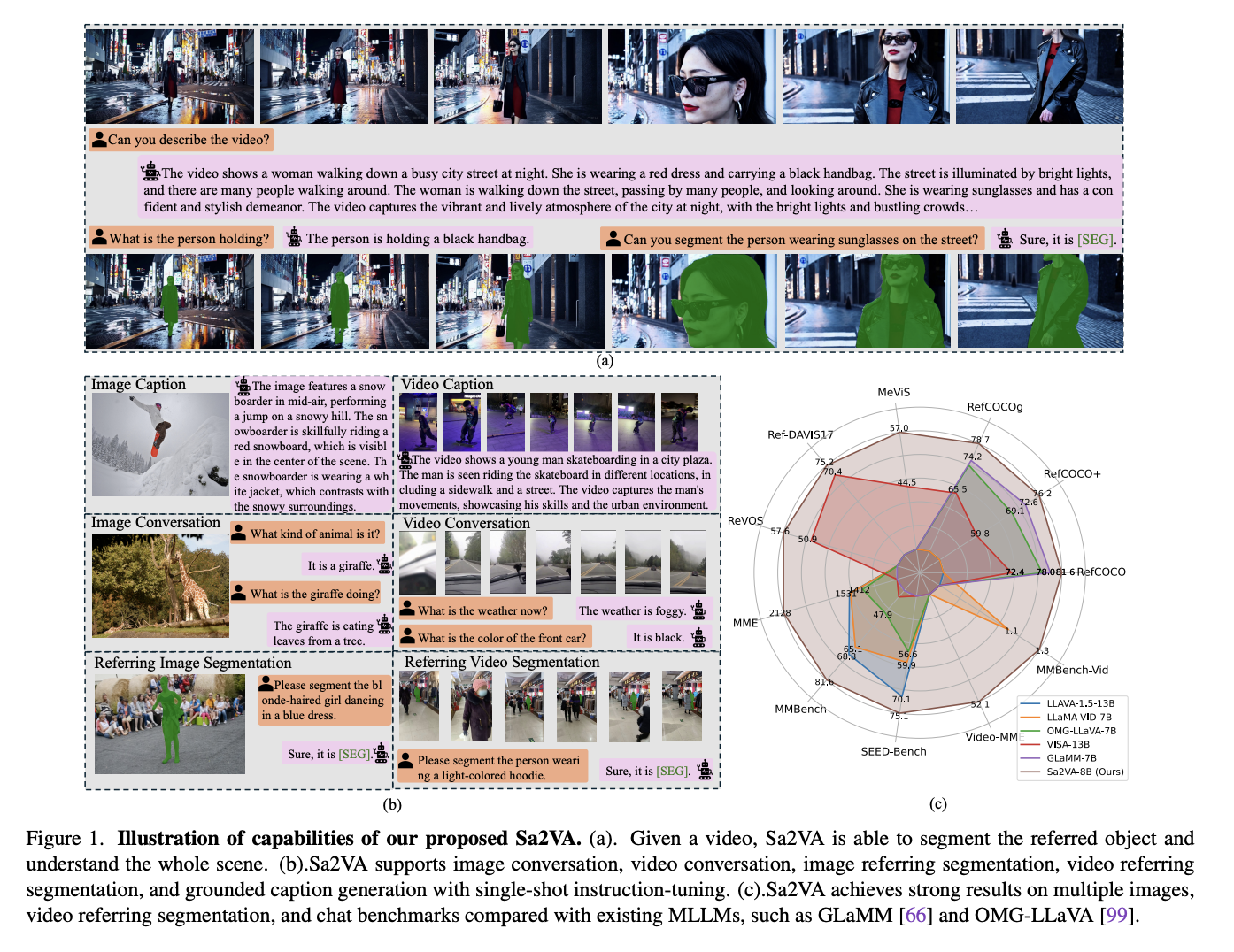

To overcome this limitation, researchers from UC Merced, Bytedance Seed, Wuhan University, and Peking University have proposed Sa2VA, a unified model for dense grounded understanding of images and videos. The model supports a wide range of image and video tasks through minimal one-shot instruction tuning, addressing the limitations of existing multi-modal large language models. Sa2VA integrates SAM-2 with LLaVA, unifying text, image, and video in a shared LLM token space. The researchers have also introduced Ref-SAV, an auto-labeled dataset containing over 72K object expressions in complex video scenes, along with 2K manually validated video objects for benchmarking.

Sa2VA’s architecture integrates two main components, a LLaVA-like model and SAM-2, connected through a decoupled design. The LLaVA-like component consists of a visual encoder that processes images and videos, a visual projection layer, and an LLM for text token prediction. In the decoupled design, SAM-2 operates alongside the pre-trained LLaVA model without direct token exchange, which keeps computation efficient and allows plug-and-play use with various pre-trained MLLMs. The key connection between the two is a special “[SEG]” token: SAM-2 uses it to generate segmentation masks, and gradients backpropagate through the “[SEG]” token to optimize the MLLM’s prompt generation.
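The “[SEG]” connection can be pictured with a small, framework-free sketch. It assumes (the article does not spell this out) that the LLM's hidden state at each “[SEG]” position is linearly projected into a prompt vector that a SAM-2-style mask decoder would consume. The token id, function names, and sizes below are hypothetical and chosen only for illustration; this is not the authors' implementation.

```python
from typing import List, Sequence

SEG_TOKEN_ID = 32000  # hypothetical vocabulary id for the "[SEG]" token


def find_seg_positions(token_ids: Sequence[int],
                       seg_token_id: int = SEG_TOKEN_ID) -> List[int]:
    """Return every position where the LLM emitted the "[SEG]" token."""
    return [i for i, tok in enumerate(token_ids) if tok == seg_token_id]


def project(hidden: Sequence[float],
            weight: Sequence[Sequence[float]]) -> List[float]:
    """Linear map from the LLM hidden size to an assumed prompt size."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in weight]


def seg_prompts(token_ids, hidden_states, weight,
                seg_token_id: int = SEG_TOKEN_ID) -> List[List[float]]:
    """Collect one projected prompt vector per "[SEG]" token."""
    positions = find_seg_positions(token_ids, seg_token_id)
    return [project(hidden_states[i], weight) for i in positions]


# Toy example: 4 output tokens, hidden size 3, prompt size 2.
token_ids = [101, 7, SEG_TOKEN_ID, 102]
hidden_states = [
    [0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0],
    [1.0, 2.0, 3.0],  # hidden state at the "[SEG]" position
    [0.5, 0.5, 0.5],
]
weight = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 1.0],
]
prompts = seg_prompts(token_ids, hidden_states, weight)
print(prompts)  # [[1.0, 5.0]]
```

In a real training setup the projection would be a differentiable layer, so a mask loss computed on the decoder output could flow back through the prompt vector into the LLM, which is the backpropagation path the paragraph above describes.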

Sa2VA achieves state-of-the-art results on referring segmentation: Sa2VA-8B scores 81.6, 76.2, and 78.9 cIoU on RefCOCO, RefCOCO+, and RefCOCOg respectively, outperforming previous systems like GLaMM-7B. On conversational benchmarks, Sa2VA scores 2128 on MME, 81.6 on MMBench, and 75.1 on SEED-Bench. On video benchmarks, it surpasses the previous state-of-the-art VISA-13B by substantial margins on MeVIS, RefDAVIS17, and ReVOS. These results are notable given that Sa2VA is smaller than its competitors, which points to its efficiency across both image and video understanding tasks.
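For readers unfamiliar with the metric, cIoU on RefCOCO-style benchmarks is usually taken to mean cumulative IoU: total intersection pixels divided by total union pixels across the whole evaluation set, rather than an average of per-sample IoUs. The article does not define it, so treat the sketch below as an illustration of that common definition, with made-up toy masks and hypothetical function names.

```python
from typing import Iterable, List, Tuple

Mask = List[List[int]]  # binary mask: 1 = object pixel, 0 = background


def intersection_and_union(pred: Mask, target: Mask) -> Tuple[int, int]:
    """Count intersection and union pixels for one prediction/target pair."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return inter, union


def cumulative_iou(pairs: Iterable[Tuple[Mask, Mask]]) -> float:
    """Sum intersections and unions over all samples, then divide once."""
    total_inter = total_union = 0
    for pred, target in pairs:
        inter, union = intersection_and_union(pred, target)
        total_inter += inter
        total_union += union
    return total_inter / total_union if total_union else 0.0


# Two toy 2x2 samples (illustrative data only).
pairs = [
    ([[1, 1], [0, 0]], [[1, 0], [0, 0]]),  # intersection 1, union 2
    ([[1, 1], [1, 1]], [[1, 1], [1, 0]]),  # intersection 3, union 4
]
print(round(100 * cumulative_iou(pairs), 1))  # 66.7
```

Because the sums are pooled before dividing, large objects weigh more than small ones. Here the pooled score is 4/6, whereas averaging the per-sample IoUs (0.5 and 0.75) would give 62.5.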

In this paper, the researchers introduce Sa2VA, which advances multi-modal understanding by integrating SAM-2’s video segmentation capabilities with LLaVA’s language processing abilities. The framework handles diverse image and video understanding tasks with minimal one-shot instruction tuning, addressing the long-standing challenge of combining perception with language understanding. Its strong performance across multiple benchmarks, from referring segmentation to conversational tasks, supports its effectiveness as a unified solution for dense, grounded understanding of visual content.

Check out the Paper and Model on Hugging Face. All credit for this research goes to the researchers of this project.

