|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

加密貨幣新聞文章

Sa2VA: A Unified Model for Dense Grounded Understanding of Images and Videos

2025/01/13 03:31

Multi-Modal Large Language Models (MLLMs) have seen rapid advancements in handling various image and video-related tasks, including visual question answering, narrative generation, and interactive editing. However, achieving fine-grained video content understanding, such as pixel-level segmentation, tracking with language descriptions, and performing visual question answering on specific video prompts, still poses a critical challenge in this field. State-of-the-art video perception models excel at tasks like segmentation and tracking but lack open-ended language understanding and conversation capabilities. At the same time, video MLLMs demonstrate strong performance in video comprehension and question answering but fall short in handling perception tasks and visual prompts.

Existing attempts to address video understanding challenges have followed two main approaches: MLLMs and Referring Segmentation systems. Initially, MLLMs focused on developing improved multi-modal fusion methods and feature extractors, eventually evolving towards instruction tuning on LLMs with frameworks like LLaVA. Recent developments have attempted to unify image, video, and multi-image analysis in single frameworks, such as LLaVA-OneVision. In parallel, Referring Segmentation systems have progressed from basic fusion modules to transformer-based methods that integrate segmentation and tracking within videos. However, these solutions lack a comprehensive integration of perception and language understanding capabilities.

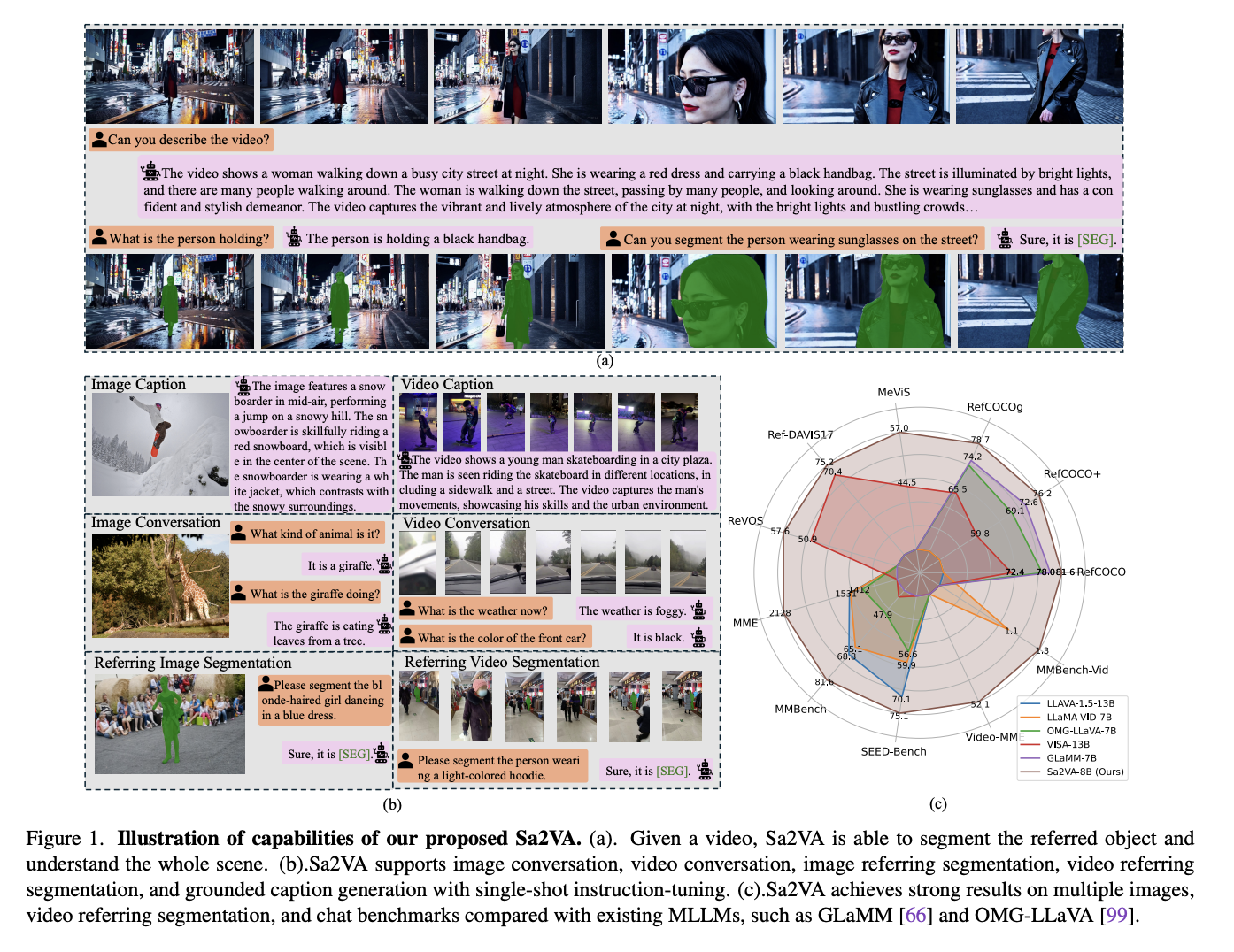

To overcome this limitation, researchers from UC Merced, Bytedance Seed, Wuhan University, and Peking University have proposed Sa2VA, a groundbreaking unified model for a dense grounded understanding of images and videos. The model differentiates itself by supporting a comprehensive range of image and video tasks through minimal one-shot instruction tuning, addressing the limitations of existing multi-modal large language models. Sa2VA’s innovative approach integrates SAM-2 with LLaVA, unifying text, image, and video in a shared LLM token space. The researchers have also introduced Ref-SAV, an extensive auto-labeled dataset containing over 72K object expressions in complex video scenes, with 2K manually validated video objects to ensure robust benchmarking capabilities.

Sa2VA’s architecture integrates two main components: a LLaVA-like model and SAM-2, connected through a novel decoupled design. The LLaVA-like component consists of a visual encoder processing images and videos, a visual projection layer, and an LLM for text token prediction. The system employs a unique decoupled approach where SAM-2 operates alongside the pre-trained LLaVA model without direct token exchange, maintaining computational efficiency and enabling plug-and-play functionality with various pre-trained MLLMs. The key innovation lies in the connection mechanism using a special “[SEG]” token, allowing SAM-2 to generate segmentation masks while enabling gradient backpropagation through the “[SEG]” token to optimize the MLLM’s prompt generation capabilities.

The Sa2VA model achieves state-of-the-art results on referring segmentation tasks, with Sa2VA-8B scoring 81.6, 76.2, and 78.9 cIoU on RefCOCO, RefCOCO+, and RefCOCOg respectively, outperforming previous systems like GLaMM-7B. In conversational capabilities, Sa2VA shows strong performance with scores of 2128 on MME, 81.6 on MMbench, and 75.1 on SEED-Bench. The model excels in video benchmarks, surpassing previous state-of-the-art VISA-13B by substantial margins on MeVIS, RefDAVIS17, and ReVOS. Moreover, Sa2VA’s performance is noteworthy considering its smaller model size compared to competitors, showing its efficiency and effectiveness across both image and video understanding tasks.

In this paper, researchers introduced Sa2VA which represents a significant advancement in multi-modal understanding by successfully integrating SAM-2’s video segmentation capabilities with LLaVA’s language processing abilities. The framework's versatility is shown through its ability to handle diverse image and video understanding tasks with minimal one-shot instruction tuning, addressing the long-standing challenge of combining perception and language understanding. Sa2VA’s strong performance across multiple benchmarks, from referring segmentation to conversational tasks, validates its effectiveness as a unified solution for a dense, grounded understanding of visual content, marking a significant step forward in the multi-modal AI systems field.

Check out the Paper and Model on Hugging Face. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 65k+ ML SubReddit.

FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence

Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.output

免責聲明:info@kdj.com

所提供的資訊並非交易建議。 kDJ.com對任何基於本文提供的資訊進行的投資不承擔任何責任。加密貨幣波動性較大,建議您充分研究後謹慎投資!

如果您認為本網站使用的內容侵犯了您的版權,請立即聯絡我們(info@kdj.com),我們將及時刪除。

-

-

-

- 查理王 5 便士硬幣:您口袋裡的同花大順?

- 2025-10-23 08:07:25

- 2320 萬枚查爾斯國王 5 便士硬幣正在流通,標誌著一個歷史性時刻。收藏家們,準備好尋找一段歷史吧!

-

-

-

- 嘉手納的路的盡頭? KDA 代幣因項目放棄而暴跌

- 2025-10-23 07:59:26

- Kadena 關閉運營,導致 KDA 代幣螺旋式上升。這是結束了,還是社區可以讓這條鏈繼續存在?

-

- 查爾斯國王 5 便士硬幣開始流通:硬幣收藏家的同花大順!

- 2025-10-23 07:07:25

- 查爾斯國王 5 便士硬幣現已在英國流通!了解熱門話題、橡樹葉設計,以及為什麼收藏家對這款皇家發佈如此興奮。

-

- 查爾斯國王 5 便士硬幣進入流通:收藏家指南

- 2025-10-23 07:07:25

- 查理三世國王的 5 便士硬幣現已流通!了解新設計、其意義以及收藏家為何如此興奮。準備好尋找這些歷史硬幣!

-