|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

介绍 MolE,一种基于 Transformer 的分子图学习模型。 MolE 通过提供原子标识符和图连接作为输入标记来直接使用分子图。原子标识符是通过将不同的原子属性散列成单个整数来计算的,并且图连接性以拓扑距离矩阵的形式给出。 MolE 使用 Transformer 作为其基础架构,该架构之前也已应用于图。 Transformer 的性能很大程度上归功于自注意力机制的广泛使用。在标准转换器中,输入标记嵌入到查询、键和值中 \(Q,K,V\in {R}^{N\times d}\),用于将自注意力计算为:

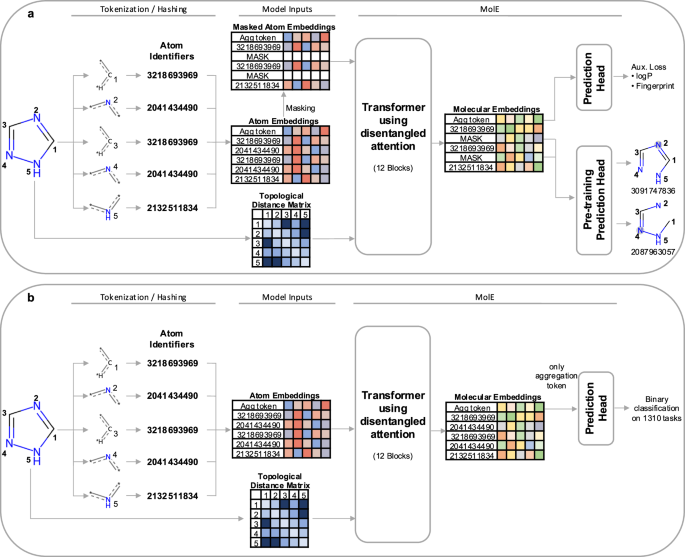

MolE is a transformer model designed specifically for molecular graphs. It directly works with graphs by providing both atom identifiers and graph connectivity as input tokens and relative position information, respectively. Atom identifiers are calculated by hashing different atomic properties into a single integer. In particular, this hash contains the following information:

MolE 是专门为分子图设计的 Transformer 模型。它通过分别提供原子标识符和图连接作为输入标记和相对位置信息来直接处理图。原子标识符是通过将不同的原子属性散列成单个整数来计算的。特别是,该哈希值包含以下信息:

- number of neighboring heavy atoms,

- 相邻重原子的数量,

- number of neighboring hydrogen atoms,

- 相邻氢原子的数量,

- valence minus the number of attached hydrogens,

- 化合价减去所连接的氢的数量,

- atomic charge,

- 原子电荷,

- atomic mass,

- 原子质量,

- attached bond types,

- 附加债券类型,

- and ring membership.

- 和戒指会员资格。

Atom identifiers (also known as atom environments of radius 0) were computed using the Morgan algorithm as implemented in RDKit.

原子标识符(也称为半径 0 的原子环境)是使用 RDKit 中实现的 Morgan 算法计算的。

In addition to tokens, MolE also takes graph connectivity information as input which is an important inductive bias since it encodes the relative position of atoms in the molecular graph. In this case, the graph connectivity is given as a topological distance matrix d where dij corresponds to the length of the shortest path over bonds separating atom i from atom j.

除了标记之外,MolE 还采用图连接信息作为输入,这是一个重要的归纳偏差,因为它编码了分子图中原子的相对位置。在这种情况下,图的连通性以拓扑距离矩阵 d 的形式给出,其中 dij 对应于将原子 i 与原子 j 分开的键上的最短路径的长度。

MolE uses a Transformer as its base architecture, which also has been applied to graphs previously. The performance of transformers can be attributed in large part to the extensive use of the self-attention mechanism. In standard transformers, the input tokens are embedded into queries, keys and values \(Q,K,V\in {R}^{N\times d}\), which are used to compute self-attention as:

MolE 使用 Transformer 作为其基础架构,该架构之前也已应用于图。 Transformer 的性能很大程度上归功于自注意力机制的广泛使用。在标准转换器中,输入标记嵌入到查询、键和值中 \(Q,K,V\in {R}^{N\times d}\),用于将自注意力计算为:

where \({H}_{0}\in {R}^{N\times d}\) are the output hidden vectors after self-attention, and \(d\) is the dimension of the hidden space.

其中\({H}_{0}\in {R}^{N\times d}\)是自注意力后的输出隐藏向量,\(d\)是隐藏空间的维度。

In order to explicitly carry positional information through each layer of the transformer, MolE uses the disentangled self-attention from DeBERTa:

为了通过 Transformer 的每一层明确携带位置信息,MolE 使用 DeBERTa 的解缠结自注意力:

where \({Q}^{c},{K}^{c},{V}^{c}\in {R}^{N\times d}\) are context queries, keys and values that contain token information (used in standard self-attention), and \({Q}_{i,j}^{p},{K}_{i,j}^{p}\in {R}^{N\times d}\) are the position queries and keys that encode the relative position of the \(i{{{\rm{th}}}}\) atom with respect to the \(j{{{\rm{th}}}}\) atom. The use of disentangled attention makes MolE invariant with respect to the order of the input atoms.

其中 \({Q}^{c},{K}^{c},{V}^{c}\in {R}^{N\times d}\) 是包含 token 的上下文查询、键和值信息(用于标准自注意力),以及 \({Q}_{i,j}^{p},{K}_{i,j}^{p}\in {R}^{N\times d}\) 是位置查询和键,编码 \(i{{{\rm{th}}}}\) 原子相对于 \(j{{{\rm{th}} 的相对位置}}\) 原子。解缠结注意力的使用使得 MolE 对于输入原子的顺序保持不变。

As mentioned earlier, self-supervised pretraining can effectively transfer information from large unlabeled datasets to smaller datasets with labels. Here we present a two-step pretraining strategy. The first step is a self-supervised approach to learn chemical structure representation. For this we use a BERT-like approach in which each atom is randomly masked with a probability of 15%, from which 80% of the selected tokens are replaced by a mask token, 10% replaced by a random token from the vocabulary, and 10% are not changed. Different from BERT, the prediction task is not to predict the identity of the masked token, but to predict the corresponding atom environment (or functional atom environment) of radius 2, meaning all atoms that are separated from the masked atom by two or less bonds. It is important to keep in mind that we used different tokenization strategies for inputs (radius 0) and labels (radius 2) and that input tokens do not contain overlapping data of neighboring atoms to avoid information leakage. This incentivizes the model to aggregate information from neighboring atoms while learning local molecular features. MolE learns via a classification task where each atom environment of radius 2 has a predefined label, contrary to the Context Prediction approach where the task is to match the embedding of atom environments of radius 4 to the embedding of context atoms (i.e., surrounding atoms beyond radius 4) via negative sampling. The second step uses a graph-level supervised pretraining with a large labeled dataset. As proposed by Hu et al., combining node- and graph-level pretraining helps to learn local and global features that improve the final prediction performance. More details regarding the pretraining steps can be found in the Methods section.

如前所述,自监督预训练可以有效地将信息从大型未标记数据集转移到带有标签的较小数据集。在这里,我们提出了一个两步预训练策略。第一步是采用自我监督的方法来学习化学结构表示。为此,我们使用类似 BERT 的方法,其中每个原子以 15% 的概率被随机屏蔽,其中 80% 的选定标记被掩码标记替换,10% 被词汇表中的随机标记替换,并且10%没有改变。与 BERT 不同,预测任务不是预测被屏蔽 token 的身份,而是预测半径为 2 的相应原子环境(或功能原子环境),即与被屏蔽原子相隔两个或更少键的所有原子。重要的是要记住,我们对输入(半径 0)和标签(半径 2)使用了不同的标记化策略,并且输入标记不包含相邻原子的重叠数据,以避免信息泄漏。这激励模型聚合来自邻近原子的信息,同时学习局部分子特征。 MolE 通过分类任务进行学习,其中半径为 2 的每个原子环境都有一个预定义的标签,这与上下文预测方法相反,上下文预测方法的任务是将半径为 4 的原子环境的嵌入与上下文原子的嵌入相匹配(即,超出半径的周围原子)半径 4) 通过负采样。第二步使用带有大型标记数据集的图级监督预训练。正如 Hu 等人提出的,结合节点级和图级预训练有助于学习局部和全局特征,从而提高最终的预测性能。有关预训练步骤的更多详细信息可以在方法部分找到。

MolE was pretrained using an ultra-large database of ~842 million molecules from ZINC and ExCAPE-DB, employing a self-supervised scheme (with an auxiliary loss) followed by a supervised pretraining with ~456K molecules (see Methods section for more details). We assess the quality of the molecular embedding by finetuning MolE on a set of downstream tasks. In this case, we use a set of 22 ADMET tasks included in the Therapeutic Data Commons (TDC) benchmark This benchmark is composed of 9 regression and 13 binary classification tasks on datasets that range from hundreds (e.g, DILI with 475 compounds) to thousands of compounds (such as CYP inhibition tasks with ~13,000 compounds). An advantage of using this benchmark is

MolE 使用来自 ZINC 和 ExCAPE-DB 的约 8.42 亿分子的超大型数据库进行预训练,采用自监督方案(带有辅助损失),然后使用约 456K 分子进行监督预训练(更多详细信息,请参阅方法部分) 。我们通过在一组下游任务上微调 MolE 来评估分子嵌入的质量。在本例中,我们使用治疗数据共享 (TDC) 基准中包含的一组 22 个 ADMET 任务。该基准由数据集上的 9 个回归任务和 13 个二元分类任务组成,数据集范围从数百个(例如,具有 475 种化合物的 DILI)到数千个化合物(例如约 13,000 种化合物的 CYP 抑制任务)。使用此基准测试的优点是

免责声明:info@kdj.com

所提供的信息并非交易建议。根据本文提供的信息进行的任何投资,kdj.com不承担任何责任。加密货币具有高波动性,强烈建议您深入研究后,谨慎投资!

如您认为本网站上使用的内容侵犯了您的版权,请立即联系我们(info@kdj.com),我们将及时删除。

-

-

- ZDEX预售:具有1000倍潜力的代币

- 2024-11-23 16:25:02

- ZDEX 预售已正式开始,为早期采用者提供了以 0.0019 美元的入门价格投资新兴 DeFi 明星的绝佳机会。

-

- 由于成千上万的足球和音乐迷前往观看比赛和音乐会,曼彻斯特的交通系统将“异常繁忙”

- 2024-11-23 16:25:02

- 大曼彻斯特交通局(TfGM)建议人们仔细计划行程,尽可能在安静的时间出行

-

-

- 如今 Farcaster 生态系统中流行的 9 个 Meme 币

- 2024-11-23 16:20:01

- 我简单整理了9款时下Farcaster生态中流行的Meme币,通过Clanker AI Agent发行。

-

-

- 比特币繁荣:高风险加密货币市场背后的机遇与争议

- 2024-11-23 16:20:01

- 比特币一直是人们讨论的频繁话题,其投机性质让许多人想知道它对日常生活和更广泛的经济的影响。

-

-