MolE: A Transformer Model for Molecular Graph Learning

Nov 12, 2024 at 06:04 pm

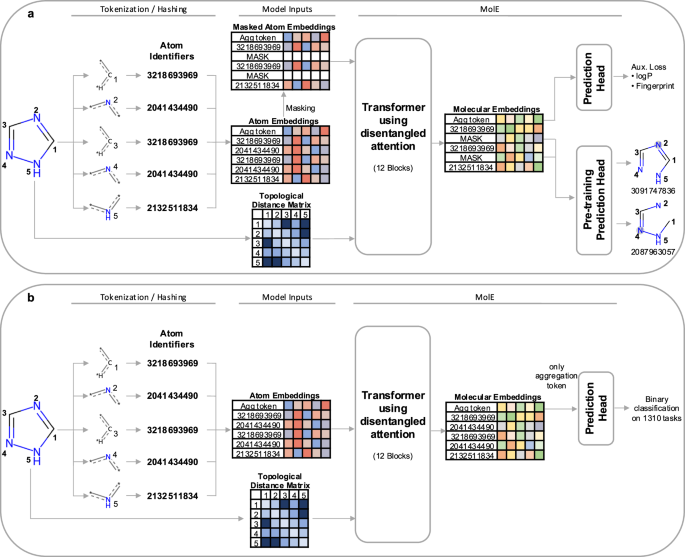

MolE is a transformer model designed specifically for molecular graphs. It works directly with graphs by providing atom identifiers as input tokens and graph connectivity as relative position information. Atom identifiers are calculated by hashing different atomic properties into a single integer. Specifically, this hash encodes the following information:

- number of neighboring heavy atoms,

- number of neighboring hydrogen atoms,

- valence minus the number of attached hydrogens,

- atomic charge,

- atomic mass,

- attached bond types,

- and ring membership.

Atom identifiers (also known as atom environments of radius 0) were computed using the Morgan algorithm as implemented in RDKit.
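The hashing step can be illustrated with a short Python sketch. It is not MolE's code: the source computes identifiers with RDKit's Morgan algorithm (radius 0), whereas the `AtomInfo` container and `atom_identifier` function below are hypothetical and use CRC32 over a canonical tuple as a stand-in hash. What it shows is the idea: two atoms that agree on all seven listed properties receive the same integer token.

```python
import zlib
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AtomInfo:
    """Hypothetical container for the per-atom properties listed above."""
    heavy_neighbors: int         # number of neighboring heavy atoms
    hydrogen_neighbors: int      # number of neighboring hydrogen atoms
    valence_minus_hs: int        # valence minus the number of attached hydrogens
    charge: int                  # atomic charge
    mass: int                    # atomic mass (rounded to an int in this sketch)
    bond_types: Tuple[str, ...]  # types of the attached bonds
    in_ring: bool                # ring membership


def atom_identifier(atom: AtomInfo) -> int:
    """Hash the atom's properties into a single unsigned 32-bit integer.

    Stand-in hash (CRC32 of a canonical repr), NOT RDKit's Morgan hash.
    Bond types are sorted so the result does not depend on neighbor order.
    """
    fields = (
        atom.heavy_neighbors,
        atom.hydrogen_neighbors,
        atom.valence_minus_hs,
        atom.charge,
        atom.mass,
        tuple(sorted(atom.bond_types)),
        atom.in_ring,
    )
    return zlib.crc32(repr(fields).encode("utf-8"))
```

For example, every aromatic CH carbon in benzene would be described as `AtomInfo(2, 1, 3, 0, 12, ("AROMATIC", "AROMATIC"), True)` and therefore share one token, regardless of its position in the ring.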

In addition to tokens, MolE also takes graph connectivity information as input, which is an important inductive bias since it encodes the relative position of atoms in the molecular graph. In this case, the graph connectivity is given as a topological distance matrix \(d\), where \(d_{ij}\) is the length of the shortest path over bonds separating atom \(i\) from atom \(j\).
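A topological distance matrix of this kind can be computed with one breadth-first search per atom, since each bond counts as one step. The sketch below assumes atoms are numbered `0..N-1` and bonds are given as index pairs; the function name and the use of `-1` for atoms in disconnected fragments are illustrative choices, not details from the source.

```python
from collections import deque
from typing import List, Sequence, Tuple


def topological_distance_matrix(n_atoms: int,
                                bonds: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Return d where d[i][j] is the number of bonds on the shortest path i -> j.

    Pairs of atoms with no connecting path get -1 (an assumption of this sketch).
    """
    adjacency: List[List[int]] = [[] for _ in range(n_atoms)]
    for a, b in bonds:  # bonds are undirected
        adjacency[a].append(b)
        adjacency[b].append(a)

    dist = [[-1] * n_atoms for _ in range(n_atoms)]
    for source in range(n_atoms):
        dist[source][source] = 0
        queue = deque([source])
        while queue:  # BFS visits atoms in order of increasing bond distance
            u = queue.popleft()
            for v in adjacency[u]:
                if dist[source][v] == -1:
                    dist[source][v] = dist[source][u] + 1
                    queue.append(v)
    return dist
```

For the heavy atoms of ethanol (C-C-O), `topological_distance_matrix(3, [(0, 1), (1, 2)])` returns `[[0, 1, 2], [1, 0, 1], [2, 1, 0]]`.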

MolE uses a Transformer as its base architecture, which has also been applied to graphs previously. The performance of transformers can be attributed in large part to the extensive use of the self-attention mechanism. In standard transformers, the input tokens are embedded into queries, keys and values \(Q,K,V\in \mathbb{R}^{N\times d}\), which are used to compute self-attention as:

\[
H_0=\mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d}}\right)V
\]

where \(H_0\in \mathbb{R}^{N\times d}\) are the output hidden vectors after self-attention, and \(d\) is the dimension of the hidden space.
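Standard self-attention, \(H_0=\mathrm{softmax}(QK^{\top}/\sqrt{d})\,V\), can be checked on small matrices with the plain-Python sketch below. It assumes \(Q\), \(K\) and \(V\) have already been produced by learned projections of the token embeddings (omitted here), and it subtracts each row's maximum before exponentiating, a common numerical-stability trick that leaves the softmax unchanged. The helper names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """H0 = softmax(Q K^T / sqrt(d)) V for N x d matrices Q, K, V."""
    d = len(q[0])
    scores = matmul(q, transpose(k))                      # N x N attention logits
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    return matmul(softmax_rows(scaled), v)                # N x d output
```

With all-zero queries and keys every atom attends uniformly, so `self_attention([[0.0, 0.0]] * 2, [[0.0, 0.0]] * 2, [[1.0, 2.0], [3.0, 4.0]])` gives `[[2.0, 3.0], [2.0, 3.0]]`, the mean of the value rows.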

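To explicitly carry positional information through each layer of the transformer, MolE uses the disentangled self-attention from DeBERTa:

\[
A_{i,j}=Q^{c}_{i}{K^{c}_{j}}^{\top}+Q^{c}_{i}{K^{p}_{i,j}}^{\top}+K^{c}_{j}{Q^{p}_{i,j}}^{\top},\qquad H_0=\mathrm{softmax}\left(\frac{A}{\sqrt{3d}}\right)V^{c}
\]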

where \(Q^{c},K^{c},V^{c}\in \mathbb{R}^{N\times d}\) are context queries, keys and values that contain token information (used in standard self-attention), and \(Q^{p}_{i,j},K^{p}_{i,j}\in \mathbb{R}^{N\times d}\) are the position queries and keys that encode the relative position of the \(i\)th atom with respect to the \(j\)th atom. The use of disentangled attention makes MolE invariant with respect to the order of the input atoms.
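A minimal sketch of DeBERTa-style disentangled attention driven by topological distances follows. Assumptions: the context queries, keys and values are already projected, and the position queries and keys \(Q^{p}_{i,j}, K^{p}_{i,j}\) are looked up from two hypothetical tables (`pos_q`, `pos_k`) indexed by the non-negative distance \(d_{ij}\), with long distances clipped to the last entry. Each score sums content-to-content, content-to-position and position-to-content terms and is scaled by \(\sqrt{3d}\), as in DeBERTa. This is an illustration, not MolE's implementation.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def disentangled_attention(qc: Matrix, kc: Matrix, vc: Matrix,
                           dist: List[List[int]],
                           pos_q: Matrix, pos_k: Matrix) -> Matrix:
    """Attention with relative positions taken from an N x N distance matrix.

    qc, kc, vc: N x d context queries, keys and values.
    pos_q, pos_k: hypothetical tables holding one d-vector per distance bucket.
    """
    n, d = len(qc), len(qc[0])
    max_bucket = len(pos_q) - 1
    out: Matrix = []
    for i in range(n):
        scores = []
        for j in range(n):
            bucket = min(dist[i][j], max_bucket)   # clip long distances
            qp, kp = pos_q[bucket], pos_k[bucket]  # Q^p_{i,j}, K^p_{i,j}
            a_ij = (dot(qc[i], kc[j])              # content-to-content
                    + dot(qc[i], kp)               # content-to-position
                    + dot(kc[j], qp))              # position-to-content
            scores.append(a_ij / math.sqrt(3 * d))
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output row i is the attention-weighted sum of the context values V^c.
        out.append([sum(w * vc[j][k] for j, w in enumerate(weights))
                    for k in range(len(vc[0]))])
    return out
```

Because the positional terms depend only on pairwise distances, reordering the atoms (together with the rows and columns of the distance matrix) only reorders the output rows; no absolute atom index enters the computation.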

As mentioned earlier, self-supervised pretraining can effectively transfer information from large unlabeled datasets to smaller labeled datasets. Here we present a two-step pretraining strategy. The first step is a self-supervised approach to learning chemical structure representations. For this we use a BERT-like approach in which each atom is selected for masking with a probability of 15%; of the selected tokens, 80% are replaced by a mask token, 10% are replaced by a random token from the vocabulary, and 10% are left unchanged. Unlike BERT, the task is not to predict the identity of the masked token but the corresponding atom environment (or functional atom environment) of radius 2, meaning all atoms that are separated from the masked atom by two or fewer bonds. Note that we used different tokenization strategies for inputs (radius 0) and labels (radius 2), and that input tokens do not contain overlapping information about neighboring atoms, which avoids information leakage. This incentivizes the model to aggregate information from neighboring atoms while learning local molecular features. MolE learns via a classification task in which each atom environment of radius 2 has a predefined label, in contrast to the Context Prediction approach, where the task is to match the embedding of atom environments of radius 4 to the embedding of context atoms (i.e., surrounding atoms beyond radius 4) via negative sampling. The second step uses graph-level supervised pretraining on a large labeled dataset. As proposed by Hu et al., combining node- and graph-level pretraining helps to learn local and global features that improve the final prediction performance. More details regarding the pretraining steps can be found in the Methods section.
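The first (self-supervised) pretraining step can be sketched as two helpers: BERT-style corruption of the input tokens, and collection of the radius-2 environment that supplies each label. This is an illustration under assumptions, not MolE's training code. `MASK_TOKEN`, the vocabulary and the independent per-atom selection are hypothetical choices, and in MolE the atoms returned by `environment_atoms` would still have to be mapped to a predefined class label (the source uses atom environments of radius 2 computed with the Morgan algorithm).

```python
import random
from collections import deque
from typing import Dict, List, Sequence, Set, Tuple

MASK_TOKEN = -1  # hypothetical id reserved for the mask token


def mask_atoms(tokens: Sequence[int], vocab: Sequence[int], rng: random.Random,
               mask_prob: float = 0.15) -> Tuple[List[int], List[int]]:
    """Select each atom with probability mask_prob; of the selected atoms,
    80% become MASK_TOKEN, 10% a random vocabulary token, 10% stay unchanged.
    Returns the corrupted tokens and the indices of the selected atoms."""
    corrupted = list(tokens)
    selected = []
    for i in range(len(tokens)):
        if rng.random() < mask_prob:
            selected.append(i)
            r = rng.random()
            if r < 0.8:
                corrupted[i] = MASK_TOKEN
            elif r < 0.9:
                corrupted[i] = rng.choice(vocab)
            # else: keep the original token
    return corrupted, selected


def environment_atoms(adjacency: Dict[int, List[int]], center: int,
                      radius: int = 2) -> Set[int]:
    """Atoms separated from `center` by at most `radius` bonds (center included)."""
    depth = {center: 0}
    queue = deque([center])
    while queue:
        u = queue.popleft()
        if depth[u] == radius:  # do not expand beyond the radius
            continue
        for v in adjacency[u]:
            if v not in depth:
                depth[v] = depth[u] + 1
                queue.append(v)
    return set(depth)
```

Labels would be computed on the uncorrupted molecule, so the model must recover each masked atom's radius-2 environment from its neighbors. For a five-atom chain `0-1-2-3-4`, the environment of atom 0 is `{0, 1, 2}`, while that of the central atom 2 covers the whole chain.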

MolE was pretrained on an ultra-large database of ~842 million molecules from ZINC and ExCAPE-DB, using a self-supervised scheme (with an auxiliary loss) followed by supervised pretraining on ~456K molecules (see the Methods section for more details). We assess the quality of the molecular embeddings by finetuning MolE on a set of downstream tasks. In this case, we use the 22 ADMET tasks included in the Therapeutic Data Commons (TDC) benchmark. This benchmark is composed of 9 regression and 13 binary classification tasks on datasets that range from hundreds (e.g., DILI with 475 compounds) to thousands of compounds (such as CYP inhibition tasks with ~13,000 compounds). An advantage of using this benchmark is
