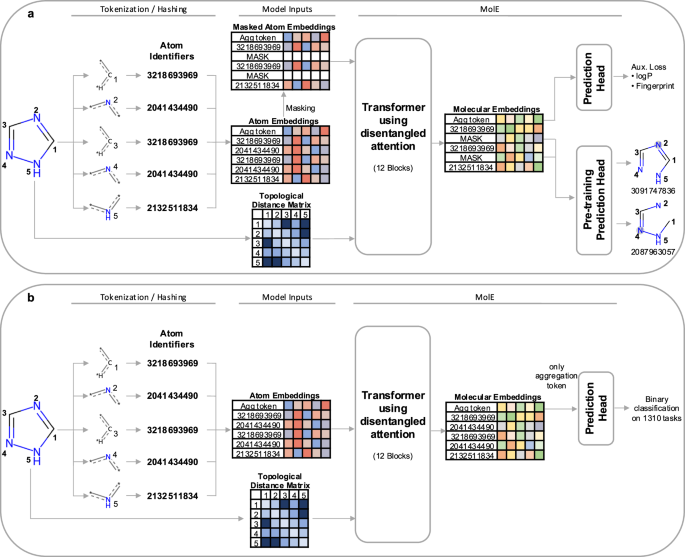

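Introducing MolE, a Transformer-based model for learning on molecular graphs. MolE works directly with molecular graphs by taking atom identifiers and graph connectivity as input. Atom identifiers are computed by hashing different atomic properties into a single integer, and graph connectivity is given as a topological distance matrix. MolE uses a Transformer as its base architecture, which has also been applied to graphs previously; the performance of transformers can be attributed in large part to the extensive use of the self-attention mechanism.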

MolE is a transformer model designed specifically for molecular graphs. It directly works with graphs by providing both atom identifiers and graph connectivity as input tokens and relative position information, respectively. Atom identifiers are calculated by hashing different atomic properties into a single integer. In particular, this hash contains the following information:


- number of neighboring heavy atoms,

- number of neighboring hydrogen atoms,

- valence minus the number of attached hydrogens,

- atomic charge,

- atomic mass,

- attached bond types,

- and ring membership.

Atom identifiers (also known as atom environments of radius 0) were computed using the Morgan algorithm as implemented in RDKit.
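As a rough illustration of the hashing idea, the sketch below packs the listed properties into a tuple and hashes it into a single 32-bit integer. The `AtomFeatures` class, its field names, and the use of SHA-256 are assumptions made for this example only; RDKit's Morgan implementation uses its own atom invariants and hash function, so these integers will not match RDKit's identifiers.

```python
import hashlib
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class AtomFeatures:
    """Hypothetical container for the atomic properties listed above."""
    heavy_neighbors: int      # number of neighboring heavy atoms
    hydrogen_neighbors: int   # number of neighboring hydrogen atoms
    valence_minus_h: int      # valence minus the number of attached hydrogens
    charge: int               # atomic charge
    mass: int                 # atomic mass (rounded here for simplicity)
    bond_types: tuple         # sorted attached bond types, e.g. ("SINGLE",)
    in_ring: bool             # ring membership


def atom_identifier(features: AtomFeatures) -> int:
    """Hash the feature tuple into one 32-bit integer (illustrative only)."""
    encoded = repr(astuple(features)).encode("utf-8")
    digest = hashlib.sha256(encoded).digest()
    return int.from_bytes(digest[:4], "big")


# Methyl carbon and hydroxyl oxygen of ethanol, with values filled in by hand.
methyl_c = AtomFeatures(1, 3, 1, 0, 12, ("SINGLE",), False)
hydroxyl_o = AtomFeatures(1, 1, 1, 0, 16, ("SINGLE",), False)
```

Atoms with identical properties map to the same integer, which is what lets the identifier serve as a vocabulary token.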


In addition to tokens, MolE also takes graph connectivity information as input. This is an important inductive bias, since it encodes the relative position of atoms in the molecular graph. Here, the graph connectivity is given as a topological distance matrix \(d\), where \({d}_{ij}\) is the length of the shortest path over bonds separating atom \(i\) from atom \(j\).
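A topological distance matrix of this kind can be computed with a breadth-first search from every atom. The sketch below is a minimal pure-Python version; the function name, the bond-list input format, and the use of `-1` for unreachable atoms are assumptions for illustration, not details taken from the MolE implementation.

```python
from collections import deque


def topological_distance_matrix(num_atoms, bonds):
    """Return d where d[i][j] is the number of bonds on the shortest path i to j.

    Pairs in disconnected fragments are marked with -1.
    """
    neighbors = [[] for _ in range(num_atoms)]
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)

    dist = [[-1] * num_atoms for _ in range(num_atoms)]
    for source in range(num_atoms):
        dist[source][source] = 0
        queue = deque([source])
        while queue:
            atom = queue.popleft()
            for nxt in neighbors[atom]:
                if dist[source][nxt] == -1:  # not visited yet
                    dist[source][nxt] = dist[source][atom] + 1
                    queue.append(nxt)
    return dist


# Heavy-atom graph of ethanol: C0 - C1 - O2
ethanol = topological_distance_matrix(3, [(0, 1), (1, 2)])
# ethanol == [[0, 1, 2], [1, 0, 1], [2, 1, 0]]
```

The matrix is symmetric and depends only on the bond graph, not on 3D coordinates.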

MolE uses a Transformer as its base architecture, which has also been applied to graphs previously. The performance of transformers can be attributed in large part to the extensive use of the self-attention mechanism. In standard transformers, the input tokens are embedded into queries, keys and values \(Q,K,V\in {\mathbb{R}}^{N\times d}\), which are used to compute self-attention as:

\[{H}_{0}=\mathrm{softmax}\left(\frac{Q{K}^{\top }}{\sqrt{d}}\right)V\]

where \({H}_{0}\in {R}^{N\times d}\) are the output hidden vectors after self-attention, and \(d\) is the dimension of the hidden space.
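Standard scaled dot-product self-attention, \({H}_{0}=\mathrm{softmax}(Q{K}^{\top }/\sqrt{d})V\), can be sketched in a few lines of plain Python. The list-of-lists representation and the function names below are choices made for this example; a real model would use a tensor library and multiple attention heads.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d)) V for N x d lists of lists."""
    d = len(Q[0])
    scale = math.sqrt(d)
    output = []
    for q in Q:
        # Scaled dot product of this query with every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        output.append([
            sum(w * v[col] for w, v in zip(weights, V))
            for col in range(len(V[0]))
        ])
    return output


# Two tokens with d = 2; identical keys give uniform attention weights,
# so each output row is the mean of the rows of V.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 1.0], [1.0, 1.0]]
V = [[2.0, 0.0], [0.0, 4.0]]
H0 = self_attention(Q, K, V)
```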


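To explicitly carry positional information through each layer of the transformer, MolE uses the disentangled self-attention from DeBERTa:

\[{a}_{i,j}={Q}_{i}^{c}\cdot {K}_{j}^{c}+{Q}_{i}^{c}\cdot {K}_{i,j}^{p}+{K}_{j}^{c}\cdot {Q}_{i,j}^{p}\]

\[{H}_{0}=\mathrm{softmax}\left(\frac{a}{\sqrt{3d}}\right){V}^{c}\]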

where \({Q}^{c},{K}^{c},{V}^{c}\in {\mathbb{R}}^{N\times d}\) are context queries, keys and values that contain token information (used in standard self-attention), and \({Q}_{i,j}^{p},{K}_{i,j}^{p}\in {\mathbb{R}}^{N\times d}\) are the position queries and keys that encode the relative position of the \(i^{\rm{th}}\) atom with respect to the \(j^{\rm{th}}\) atom. The use of disentangled attention makes MolE invariant with respect to the order of the input atoms.
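The following is a minimal sketch of disentangled attention in the DeBERTa style, where each score is the sum of a content-to-content, a content-to-position, and a position-to-content term, scaled by \(\sqrt{3d}\). Looking up the position queries and keys in tables indexed by topological distance (`Qp_table`, `Kp_table`) is an assumption made for this example, as are all numeric values; it is not taken from the MolE code.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(row):
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def disentangled_attention(Qc, Kc, Vc, Qp_table, Kp_table, dist):
    """Attention whose scores combine content and relative-position terms.

    Qp_table[k] and Kp_table[k] are (assumed) position embeddings for
    topological distance k; dist[i][j] is the distance between atoms i and j.
    """
    n, d = len(Qc), len(Qc[0])
    scale = math.sqrt(3 * d)  # three score terms, hence sqrt(3d)
    output = []
    for i in range(n):
        scores = []
        for j in range(n):
            k = dist[i][j]
            a_ij = (dot(Qc[i], Kc[j])            # content-to-content
                    + dot(Qc[i], Kp_table[k])    # content-to-position
                    + dot(Kc[j], Qp_table[k]))   # position-to-content
            scores.append(a_ij / scale)
        weights = softmax(scores)
        output.append([sum(w * Vc[j][c] for j, w in enumerate(weights))
                       for c in range(len(Vc[0]))])
    return output


# Toy inputs for a three-atom chain (distances as in C - C - O).
dist = [[0, 1, 2], [1, 0, 1], [2, 1, 0]]
Qc = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
Kc = [[0.2, 0.1], [-0.3, 0.4], [0.5, 0.0]]
Vc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Qp_table = [[0.0, 0.1], [0.2, 0.0], [-0.1, 0.3]]
Kp_table = [[0.1, 0.0], [0.0, 0.2], [0.3, -0.2]]
H = disentangled_attention(Qc, Kc, Vc, Qp_table, Kp_table, dist)
```

Because position enters only through pairwise distances, reordering the atoms (and the distance matrix consistently) in this sketch only reorders the output rows, which is one way to read the order-invariance property described above.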

As mentioned earlier, self-supervised pretraining can effectively transfer information from large unlabeled datasets to smaller labeled datasets. Here we present a two-step pretraining strategy. The first step is a self-supervised approach to learn chemical structure representation. For this we use a BERT-like approach in which each atom is selected for masking with a probability of 15%; of the selected tokens, 80% are replaced by a mask token, 10% are replaced by a random token from the vocabulary, and 10% are left unchanged. Unlike BERT, the prediction task is not to predict the identity of the masked token, but to predict the corresponding atom environment (or functional atom environment) of radius 2, meaning all atoms that are separated from the masked atom by two or fewer bonds. It is important to keep in mind that we used different tokenization strategies for inputs (radius 0) and labels (radius 2), and that input tokens do not contain overlapping data from neighboring atoms, to avoid information leakage. This incentivizes the model to aggregate information from neighboring atoms while learning local molecular features. MolE learns via a classification task in which each atom environment of radius 2 has a predefined label. This contrasts with the Context Prediction approach, where the task is to match the embedding of atom environments of radius 4 to the embedding of context atoms (i.e., surrounding atoms beyond radius 4) via negative sampling.

The second step uses graph-level supervised pretraining with a large labeled dataset. As proposed by Hu et al., combining node- and graph-level pretraining helps to learn local and global features that improve the final prediction performance. More details regarding the pretraining steps can be found in the Methods section.
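The 15% selection and 80/10/10 corruption scheme described above can be sketched as follows. The `MASK_TOKEN` value, the function signature, and the stand-in labels in the usage lines are hypothetical; in particular, real radius-2 environment labels would come from a fingerprinting step that is not shown here.

```python
import random

MASK_TOKEN = -1  # hypothetical id reserved for the mask token


def mask_atoms(tokens, env_labels, vocab, rng, select_prob=0.15):
    """Return (corrupted_tokens, targets) for one molecule.

    tokens     -- radius-0 atom identifiers (model inputs)
    env_labels -- radius-2 atom-environment labels, one per atom
    targets[i] is the radius-2 label for selected atoms and None otherwise.
    """
    corrupted = list(tokens)
    targets = [None] * len(tokens)
    for i in range(len(tokens)):
        if rng.random() >= select_prob:
            continue                          # atom not selected
        targets[i] = env_labels[i]            # predict the radius-2 environment
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK_TOKEN         # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: random vocabulary token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets


rng = random.Random(0)
tokens = [11, 22, 33, 22, 11, 44] * 50
env_labels = [t * 10 for t in tokens]  # stand-in labels, not real environments
corrupted, targets = mask_atoms(tokens, env_labels, [11, 22, 33, 44], rng)
```

Note that the loss would be computed only at positions where `targets[i]` is not `None`, and that the target is the radius-2 label rather than the original input token.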

MolE was pretrained using an ultra-large database of ~842 million molecules from ZINC and ExCAPE-DB, employing a self-supervised scheme (with an auxiliary loss) followed by supervised pretraining with ~456K molecules (see Methods section for more details). We assess the quality of the molecular embedding by finetuning MolE on a set of downstream tasks. In this case, we use a set of 22 ADMET tasks included in the Therapeutic Data Commons (TDC) benchmark. This benchmark is composed of 9 regression and 13 binary classification tasks on datasets that range from hundreds of compounds (e.g., DILI with 475 compounds) to thousands of compounds (such as CYP inhibition tasks with ~13,000 compounds). An advantage of using this benchmark is
