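Autoregressive (AR) models have transformed image generation and set a new bar for high-quality visuals. They break image creation into sequential steps, generating each token from the tokens before it, which produces outputs of exceptional realism and coherence.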

Autoregressive (AR) models have revolutionized the field of image generation, pushing the boundaries of visual realism and coherence. These models operate sequentially, generating each token based on the preceding ones, resulting in outputs of exceptional quality. Researchers have widely employed AR techniques in computer vision, gaming, and digital content creation applications. However, the potential of AR models is often limited by inherent inefficiencies, particularly their slow generation speed, which poses a significant challenge in real-time scenarios.

Among the various concerns, speed is the critical obstacle to deploying AR models in practice. Because tokens are generated one at a time, generation is inherently hard to scale and incurs high latency. For instance, generating a 256×256 image with a traditional AR model such as LlamaGen takes 256 sequential steps, roughly five seconds on a modern GPU. Delays of this size rule out scenarios that demand near-instant results. So although AR models excel at producing high-fidelity outputs, they struggle to meet the growing demand for both speed and quality in large-scale deployments.
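
To make the latency argument concrete, here is a minimal Python sketch of a token-by-token sampling loop. `toy_next_token` is a hypothetical stand-in for a model forward pass over a 1,024-entry toy vocabulary, and the 16×16 token grid is an assumption chosen so that one 256×256 image corresponds to the 256 steps quoted above. Spreading the article's roughly five seconds evenly over 256 steps gives about 19.5 ms per step.

```python
from typing import Callable, List, Tuple

def autoregressive_generate(
    next_token: Callable[[List[int]], int], num_tokens: int
) -> Tuple[List[int], int]:
    """Generate tokens one at a time; each step sees every token produced so far."""
    tokens: List[int] = []
    forward_passes = 0
    for _ in range(num_tokens):
        # Step i needs the output of step i - 1, so steps cannot run in parallel.
        tokens.append(next_token(tokens))
        forward_passes += 1
    return tokens, forward_passes

def toy_next_token(prefix: List[int]) -> int:
    """Hypothetical stand-in for one model forward pass over a 1,024-entry vocabulary."""
    return (sum(prefix) * 31 + len(prefix)) % 1024

GRID_SIDE = 16  # assumed 16x16 token grid for a 256x256 image
tokens, passes = autoregressive_generate(toy_next_token, GRID_SIDE * GRID_SIDE)

# Back-of-envelope: ~5 s per image spread evenly over the sequential steps.
seconds_per_image = 5.0
ms_per_step = seconds_per_image / passes * 1000
print(f"{passes} forward passes, ~{ms_per_step:.1f} ms each")
```

Because each call needs the previous token, the loop cannot be parallelized across positions; the only ways to go faster are cheaper steps or fewer of them.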

Efforts to accelerate AR models have produced various methods, such as predicting multiple tokens simultaneously or masking tokens during generation. These approaches reduce the number of required steps but often compromise the quality of the generated images. For example, multi-token generation techniques sample the tokens within a step as if they were conditionally independent; because neighboring image tokens are in fact correlated, this assumption introduces artifacts and undermines the coherence of the output. Similarly, masking-based methods speed up generation by training the model to predict masked tokens from the visible ones, but their effectiveness drops sharply when the number of generation steps is drastically reduced. These limitations highlight the need for a new approach to making AR models efficient.
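
The conditional-independence problem shows up even in a two-token toy example. The joint distribution below is hypothetical: the two tokens must agree, as two neighboring patches along one edge might. Sampling both tokens at once from their marginals, as a multi-token step that treats them as independent would, puts half of the probability mass on pairs that never occur, while sequential (autoregressive) sampling reproduces the joint exactly.

```python
from itertools import product
from typing import Dict, Tuple

Pair = Tuple[int, int]

# Hypothetical joint distribution over two adjacent tokens that must agree.
JOINT: Dict[Pair, float] = {(0, 0): 0.5, (1, 1): 0.5}

def marginal(joint: Dict[Pair, float], pos: int) -> Dict[int, float]:
    """Marginal distribution of the token at position `pos`."""
    out: Dict[int, float] = {}
    for pair, p in joint.items():
        out[pair[pos]] = out.get(pair[pos], 0.0) + p
    return out

def parallel_independent(joint: Dict[Pair, float]) -> Dict[Pair, float]:
    """Multi-token style: draw both positions at once from their marginals."""
    m0, m1 = marginal(joint, 0), marginal(joint, 1)
    return {(a, b): m0[a] * m1[b] for a, b in product(m0, m1)}

def sequential_autoregressive(joint: Dict[Pair, float]) -> Dict[Pair, float]:
    """AR style: draw token 0, then token 1 from p(token 1 | token 0)."""
    m0 = marginal(joint, 0)
    return {(a, b): m0[a] * (p / m0[a]) for (a, b), p in joint.items()}

def invalid_mass(dist: Dict[Pair, float], joint: Dict[Pair, float]) -> float:
    """Probability that `dist` assigns to pairs the true joint never produces."""
    return sum(p for pair, p in dist.items() if joint.get(pair, 0.0) == 0.0)

print("invalid mass, parallel:", invalid_mass(parallel_independent(JOINT), JOINT))
print("invalid mass, sequential:", invalid_mass(sequential_autoregressive(JOINT), JOINT))
```

Real image tokens are far less extreme than this, but the mechanism is the same: the more the tokens sampled together depend on each other, the more mass independent sampling wastes on incoherent combinations.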

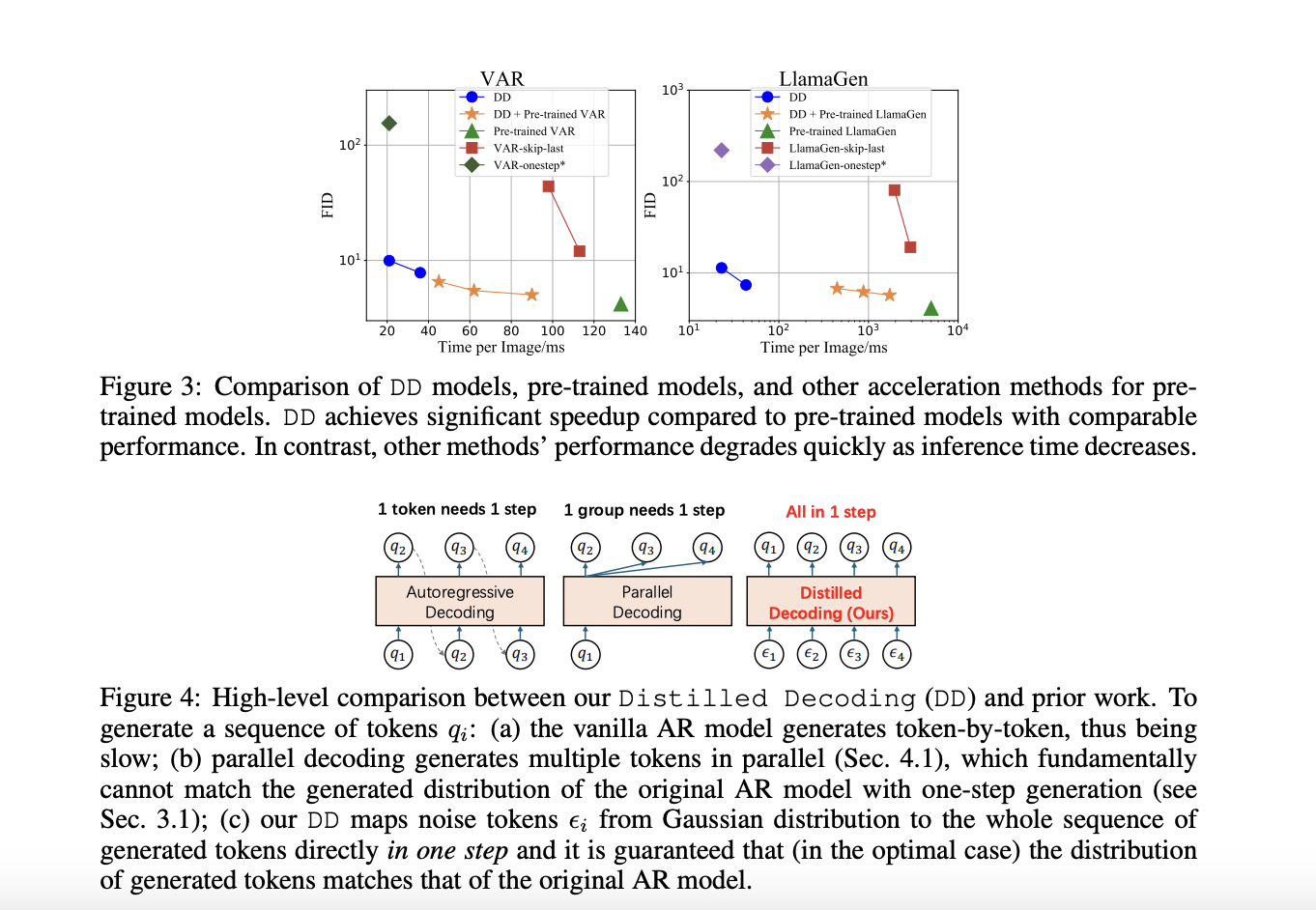

A recent collaboration between Tsinghua University and Microsoft Research proposes a solution to these challenges: Distilled Decoding (DD). The method uses flow matching to build a deterministic mapping from Gaussian noise to the output distribution of a pre-trained AR model. Unlike conventional methods, DD does not require access to the AR model's original training data, which makes it more practical to deploy. The researchers show that DD can cut generation from hundreds of steps to as few as one or two while largely preserving output quality. For example, with one-step generation on ImageNet-256, DD achieved a 6.3x speed-up for VAR models and an impressive 217.8x speed-up for LlamaGen, whose generation drops from 256 steps to just one.
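
Below is a minimal sketch of how flow matching yields a deterministic noise-to-token map. It assumes a linear interpolation path `x_t = (1 - t) * eps + t * c` between Gaussian noise `eps` and a token embedding `c`, and uses a hypothetical 1-D codebook with made-up probabilities in place of one step of an AR model's output. For a categorical target the velocity field has the closed form `v(x, t) = (E[c | x_t = x] - x) / (1 - t)`; Euler integration of this ODE sends each noise value to one codebook entry, always the same entry for the same noise, and the entries come out with the target probabilities. It illustrates the idea only and is not the authors' implementation.

```python
import math
from statistics import NormalDist
from typing import List, Sequence

# Hypothetical 1-D "codebook" and next-token probabilities for a single position.
CODEBOOK: List[float] = [-2.0, 0.5, 3.0]
PROBS: List[float] = [0.2, 0.5, 0.3]

def velocity(x: float, t: float, codebook: Sequence[float], probs: Sequence[float]) -> float:
    """Velocity of the linear path x_t = (1 - t) * eps + t * c, averaged over c given x_t = x."""
    sigma = 1.0 - t
    # Log posterior (up to a constant) that the point is heading to each codebook entry.
    logits = [math.log(p) - (x - t * c) ** 2 / (2.0 * sigma ** 2) for c, p in zip(codebook, probs)]
    top = max(logits)
    weights = [math.exp(z - top) for z in logits]  # numerically stable softmax
    expected_c = sum(w * c for w, c in zip(weights, codebook)) / sum(weights)
    return (expected_c - x) / sigma

def flow_map(eps: float, codebook=CODEBOOK, probs=PROBS, num_steps: int = 400) -> float:
    """Euler-integrate dx/dt = v(x, t) from t = 0 to t = 1; a pure function of eps."""
    x, dt = eps, 1.0 / num_steps
    for i in range(num_steps):
        x += dt * velocity(x, i * dt, codebook, probs)
    return x

def to_token(x: float, codebook=CODEBOOK) -> int:
    """Snap the end point to the index of the nearest codebook entry."""
    return min(range(len(codebook)), key=lambda k: abs(codebook[k] - x))

# Push 200 evenly spaced noise quantiles through the map and count where they land.
noise_grid = [NormalDist().inv_cdf((i + 0.5) / 200) for i in range(200)]
tokens = [to_token(flow_map(eps)) for eps in noise_grid]
freqs = [tokens.count(k) / len(tokens) for k in range(len(CODEBOOK))]
print("token frequencies:", freqs, "target:", PROBS)
```

Because the map is deterministic, the same noise always yields the same token, which is what makes it possible to learn a shortcut from noise straight to the result.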

The technical foundation of DD is its ability to create a deterministic trajectory for token generation. Using flow matching, DD maps Gaussian noise to tokens so that the resulting tokens follow the distribution of the pre-trained AR model. During training, this mapping is distilled into a network that predicts the final token sequence directly from the noise input. This makes generation much faster, and because intermediate steps can be added when needed, it also offers a way to balance speed against quality. Compared with existing methods, DD greatly reduces the trade-off between speed and fidelity, enabling scalable deployment across diverse tasks.
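
The distillation data can be pictured with another toy. Here a hypothetical first-order teacher (`START`, `TRANSITION`) plays the role of the pre-trained AR model, and, as a simplification, the flow-matching ODE is swapped for an inverse-CDF lookup, which is also a deterministic noise-to-token map. Running the teacher position by position turns every noise sequence into exactly one token sequence. These (noise, tokens) pairs are what a distilled network would be trained to reproduce in a single forward pass; the student model and its loss are left out.

```python
import random
from statistics import NormalDist
from typing import List, Sequence, Tuple

# Hypothetical first-order "teacher": next-token probabilities depend only on the previous token.
START: List[float] = [0.4, 0.3, 0.2, 0.1]
TRANSITION: List[List[float]] = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]

def teacher_probs(prefix: Sequence[int]) -> List[float]:
    """Stand-in for a pre-trained AR model's next-token distribution."""
    return START if not prefix else TRANSITION[prefix[-1]]

def noise_to_token(eps: float, probs: Sequence[float]) -> int:
    """Deterministic noise-to-token map (inverse CDF), simplifying the flow-matching ODE."""
    u = NormalDist().cdf(eps)  # Gaussian noise -> uniform value in (0, 1)
    cumulative = 0.0
    for token, p in enumerate(probs):
        cumulative += p
        if u < cumulative:
            return token
    return len(probs) - 1  # guard against round-off in the cumulative sum

def teacher_trajectory(noise: Sequence[float]) -> List[int]:
    """Run the teacher position by position; the token sequence is a function of the noise."""
    tokens: List[int] = []
    for eps in noise:
        tokens.append(noise_to_token(eps, teacher_probs(tokens)))
    return tokens

def build_distillation_pairs(
    num_pairs: int, seq_len: int, seed: int = 0
) -> List[Tuple[List[float], List[int]]]:
    """Sample noise sequences and record the teacher's deterministic output for each."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_pairs):
        noise = [rng.gauss(0.0, 1.0) for _ in range(seq_len)]
        pairs.append((noise, teacher_trajectory(noise)))
    return pairs

# A distilled student would be trained to map each noise list to its token list in one call.
pairs = build_distillation_pairs(num_pairs=4, seq_len=8)
for noise, tokens in pairs:
    print([round(e, 2) for e in noise], "->", tokens)
```

Producing each pair still costs one full sequential pass of the teacher; the speed-up comes later, when the trained student replaces all of those passes at sampling time.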

In experiments, DD clearly outperforms traditional acceleration methods. With VAR-d16 models, one-step DD generation raises the FID score from 4.19 to 9.96 (lower is better), a moderate quality loss in exchange for a 6.3x speed-up. For LlamaGen models, cutting the steps from 256 to one yields an FID of 11.35, compared with 4.11 for the original model, along with a remarkable 217.8x speed-up. DD is similarly effective in text-to-image generation, reducing the steps from 256 to two while keeping the FID comparable (28.95 versus 25.70). The results show that DD can drastically increase speed with only a limited loss in image quality, which the baseline methods could not match.
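
The reported figures can be put on a common footing with a little arithmetic. The sketch below computes the absolute and relative FID change for each setting quoted above and, assuming every LlamaGen step costs the same, the cost of one DD step implied by a 217.8x speed-up over 256 steps (about 1.18 teacher steps). The equal-cost assumption is ours, and attention over a growing prefix makes later AR steps somewhat more expensive, so treat that figure as a rough estimate.

```python
from typing import Dict, Tuple

# FID scores quoted in the article: (original model, DD). Lower FID is better.
REPORTED_FID: Dict[str, Tuple[float, float]] = {
    "VAR-d16, ImageNet-256, 1 step": (4.19, 9.96),
    "LlamaGen, ImageNet-256, 1 step": (4.11, 11.35),
    "Text-to-image, 2 steps": (25.70, 28.95),
}

def fid_change(original: float, distilled: float) -> Tuple[float, float]:
    """Absolute increase and ratio of FID after distillation."""
    return distilled - original, distilled / original

def implied_step_cost(teacher_steps: int, dd_steps: int, speedup: float) -> float:
    """Cost of one DD step in teacher-step units, assuming all teacher steps cost the same."""
    return (teacher_steps / dd_steps) / speedup

for name, (orig, dd) in REPORTED_FID.items():
    delta, ratio = fid_change(orig, dd)
    print(f"{name}: FID +{delta:.2f} ({ratio:.2f}x)")

print(f"LlamaGen: one DD step ~ {implied_step_cost(256, 1, 217.8):.2f} teacher steps")
```

Under that assumption, a single DD step costs only slightly more than a single LlamaGen step, which is consistent with the speed-up tracking the 256-fold step reduction so closely.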

Several key takeaways from the research on DD include:

In conclusion, by leveraging flow matching and deterministic mappings, Distilled Decoding substantially eases the long-standing speed-quality trade-off that has plagued AR generation. The method accelerates image synthesis by drastically reducing the number of steps while largely preserving the fidelity and scalability of the outputs. With its strong performance, adaptability, and practical deployment advantages, Distilled Decoding opens new frontiers for real-time applications of AR models and sets the stage for further innovation in generative modeling.
