Sequence models have become increasingly popular in the AI domain for their ability to analyze data and predict what to do next. For instance, you've likely used next-token prediction models like ChatGPT, which predict each word (token) in a sequence to form answers to users' queries. There are also full-sequence diffusion models like Sora, which convert words into dazzling, realistic visuals by successively "denoising" an entire video sequence.

Researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed a simple change to the diffusion training scheme that makes this sequence denoising considerably more flexible.

When applied to fields like computer vision and robotics, the next-token and full-sequence diffusion models have capability trade-offs. Next-token models can spit out sequences that vary in length.

However, they generate these sequences without awareness of desirable states in the far future, such as a goal 10 tokens away toward which the generation should be steered, and thus require additional mechanisms for long-horizon (long-term) planning. Diffusion models can perform such future-conditioned sampling, but they lack next-token models' ability to generate variable-length sequences.

Researchers from CSAIL want to combine the strengths of both models, so they created a sequence model training technique called "Diffusion Forcing." The name comes from "Teacher Forcing," the conventional training scheme that breaks down full sequence generation into the smaller, easier steps of next-token generation (much like a good teacher simplifying a complex concept).

Diffusion Forcing builds on common ground between diffusion models and teacher forcing: both use training schemes that involve predicting masked (noisy) tokens from unmasked ones. Diffusion models gradually add noise to data, which can be viewed as fractional masking.

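The "fractional masking" view can be made concrete with a minimal Python sketch. It assumes a standard DDPM-style forward process, x_k = sqrt(ᾱ_k)·x_0 + sqrt(1 − ᾱ_k)·ε, with a hypothetical linear ᾱ schedule; neither detail comes from the article, and the names `alpha_bar`, `corrupt`, and `K` are illustrative. Noise level 0 leaves a token fully visible, level K replaces it with pure noise (a fully masked token), and intermediate levels hide it only partially. Teacher forcing then corresponds to a binary pattern of levels, while Diffusion Forcing lets every token take its own level.

```python
import math
import random

K = 10  # hypothetical number of noise levels; level K means "fully masked"


def alpha_bar(k: int, num_levels: int = K) -> float:
    """Fraction of signal kept at noise level k (hypothetical linear schedule).

    Level 0 keeps the clean token; level num_levels keeps no signal at all.
    """
    return 1.0 - k / num_levels


def corrupt(x0: list[float], k: int, rng: random.Random) -> list[float]:
    """Partially mask token x0 by mixing it with Gaussian noise at level k."""
    a = alpha_bar(k)
    return [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for v in x0]


# Noise levels for a 4-token sequence under each view (illustrative values):
teacher_forcing_levels = [0, 0, 0, K]    # binary: clean past, fully masked next token
full_sequence_levels = [4, 4, 4, 4]      # full-sequence diffusion: one shared level
diffusion_forcing_levels = [0, 3, 7, K]  # Diffusion Forcing: one level per token

rng = random.Random(0)
token = [0.5, -1.2, 2.0]
# Level 0 is "unmasked": the token comes back unchanged.
assert corrupt(token, 0, rng) == token
# Intermediate levels blend signal and noise; level K is pure noise.
for k in diffusion_forcing_levels:
    print(k, [round(v, 3) for v in corrupt(token, k, rng)])
```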
The MIT researchers' Diffusion Forcing method trains neural networks to cleanse a collection of tokens, removing a different amount of noise from each one while simultaneously predicting the next few tokens. The result is a flexible, reliable sequence model that produced higher-quality artificial videos and more precise decision-making for robots and AI agents.

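A single training example in this style might look like the sketch below: each token in a sequence draws its own noise level independently, is corrupted at that level, and a denoiser is scored on recovering the added noise. This is an assumption-laden illustration, not the authors' code. The linear schedule, the noise-prediction (ε) target, the mean-squared-error loss, and the `denoiser` callable are all hypothetical stand-ins for a trained network.

```python
import math
import random
from typing import Callable, Sequence

Token = list[float]
# Hypothetical denoiser: (noisy tokens, per-token noise levels) -> predicted noise per token.
Denoiser = Callable[[Sequence[Token], Sequence[int]], list[Token]]

NUM_LEVELS = 10  # hypothetical number of noise levels K


def signal_fraction(k: int) -> float:
    """Hypothetical linear schedule: 1.0 at level 0 (clean), 0.0 at level K (pure noise)."""
    return 1.0 - k / NUM_LEVELS


def diffusion_forcing_loss(seq: Sequence[Token], denoiser: Denoiser,
                           rng: random.Random) -> float:
    """Loss for one sequence in which every token gets an independent noise level."""
    # Unlike full-sequence diffusion, levels are sampled per token, not once per sequence.
    # Level 0 is skipped because a clean token carries no noise to predict.
    levels = [rng.randint(1, NUM_LEVELS) for _ in seq]
    noisy, targets = [], []
    for x0, k in zip(seq, levels):
        a = signal_fraction(k)
        eps = [rng.gauss(0.0, 1.0) for _ in x0]
        noisy.append([math.sqrt(a) * v + math.sqrt(1.0 - a) * e for v, e in zip(x0, eps)])
        targets.append(eps)
    preds = denoiser(noisy, levels)
    # Mean squared error between predicted and true noise over every token entry.
    sq_errors = [(p - t) ** 2
                 for pred, tgt in zip(preds, targets)
                 for p, t in zip(pred, tgt)]
    return sum(sq_errors) / len(sq_errors)


# Usage with a placeholder "model" that always predicts zero noise.
def zero_denoiser(noisy: Sequence[Token], levels: Sequence[int]) -> list[Token]:
    return [[0.0] * len(tok) for tok in noisy]


sequence = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
print(diffusion_forcing_loss(sequence, zero_denoiser, random.Random(0)))
```

In a real training loop this loss would be minimized with respect to the denoiser's parameters; the placeholder here only shows where the per-token noise levels enter.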
By sorting through noisy data and reliably predicting the next steps in a task, Diffusion Forcing can aid a robot in ignoring visual distractions to complete manipulation tasks. It can also generate stable and consistent video sequences and even guide an AI agent through digital mazes.

This method could potentially enable household and factory robots to generalize to new tasks and improve AI-generated entertainment.

"Sequence models aim to condition on the known past and predict the unknown future, a type of binary masking. However, masking doesn't need to be binary," says lead author, MIT electrical engineering and computer science (EECS) Ph.D. student, and CSAIL member Boyuan Chen.

"With Diffusion Forcing, we add different levels of noise to each token, effectively serving as a type of fractional masking. At test time, our system can 'unmask' a collection of tokens and diffuse a sequence in the near future at a lower noise level. It knows what to trust within its data to overcome out-of-distribution inputs."

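Chen's description of diffusing the near future at a lower noise level can be pictured as a scheduling matrix. The sketch below builds one hypothetical "pyramid" schedule, assuming integer noise levels 0..K: rows are denoising steps, columns are future tokens, and each farther token lags one step behind its neighbor, so the distant future stays noisier (more uncertain) for longer. The function name and the exact one-step stagger are illustrative choices, not details given in the article.

```python
def pyramid_schedule(num_tokens: int, num_levels: int) -> list[list[int]]:
    """Noise level of each token (column) at each denoising step (row).

    Token t starts denoising t steps after token 0, so at every step a nearer
    token is never noisier than a farther one. Row 0 is all num_levels (pure
    noise); the final row is all 0 (fully denoised).
    """
    num_steps = num_levels + num_tokens - 1
    return [
        [min(num_levels, max(0, num_levels - step + t)) for t in range(num_tokens)]
        for step in range(num_steps + 1)
    ]


# Three future tokens, two noise levels: the near future clears first.
for row in pyramid_schedule(num_tokens=3, num_levels=2):
    print(row)
# [2, 2, 2]
# [1, 2, 2]
# [0, 1, 2]
# [0, 0, 1]
# [0, 0, 0]
```

A sampler would walk these rows in order, asking a (hypothetical) denoiser to move each token from its level in one row to its level in the next; a longer plan simply means a wider matrix.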
In several experiments, Diffusion Forcing thrived at ignoring misleading data to execute tasks while anticipating future actions.

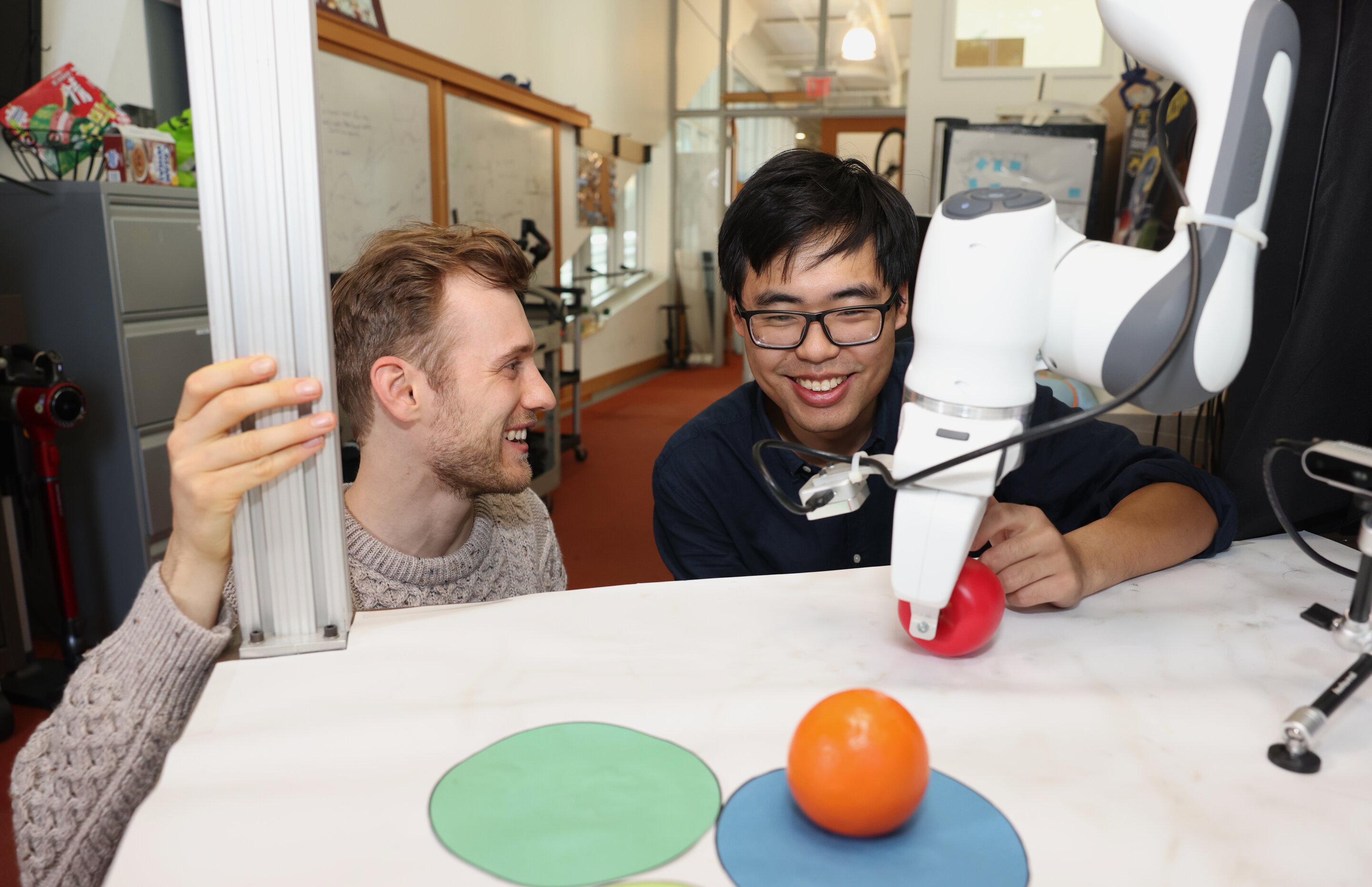

When implemented on a robotic arm, for example, it helped swap two toy fruits across three circular mats, a minimal example of a family of long-horizon tasks that require memory. The researchers trained the robot by controlling it from a distance (teleoperating it) in virtual reality.

The robot was trained to mimic the user's movements from its camera input. Despite starting from random positions and encountering distractions such as a shopping bag blocking the markers, it placed the objects in their target spots.

To generate videos, the researchers trained Diffusion Forcing on "Minecraft" gameplay and on colorful digital environments created within Google's DeepMind Lab Simulator. Given a single frame of footage, the method produced more stable, higher-resolution videos than comparable baselines such as a Sora-like full-sequence diffusion model and ChatGPT-like next-token models.

These baselines created videos that appeared inconsistent, and the next-token models sometimes failed to generate working video past just 72 frames.

Diffusion Forcing not only generates fancy videos but can also serve as a motion planner that steers toward desired outcomes or rewards. Thanks to its flexibility, Diffusion Forcing can uniquely generate plans with varying horizons, perform tree search, and incorporate the intuition that the distant future is more uncertain than the near future.

In the task of solving a 2D maze, Diffusion Forcing outperformed six baselines by generating faster plans leading to the goal location, indicating that it could be an effective planner for robots in the future.

In each demo, Diffusion Forcing acted as a full-sequence model, a next-token prediction model, or both. According to Chen, this versatile approach could potentially serve as a powerful backbone for a "world model," an AI system that can simulate the dynamics of the world by training on billions of internet videos.

This would allow robots
