In the current AI zeitgeist, sequence models have become increasingly popular for their ability to analyze data and predict what to do next. For instance, you've likely used next-token prediction models like ChatGPT, which anticipate each word (token) in a sequence to form answers to users' queries. There are also full-sequence diffusion models like Sora, which convert words into dazzling, realistic visuals by successively "denoising" an entire video sequence.

Researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed a simple change to the diffusion training scheme that makes this sequence denoising considerably more flexible.

When applied to fields like computer vision and robotics, the next-token and full-sequence diffusion models have capability trade-offs. Next-token models can spit out sequences that vary in length.

However, they generate these sequences without awareness of desirable states in the far future (they cannot, for example, steer generation toward a certain goal 10 tokens away) and thus require additional mechanisms for long-horizon (long-term) planning. Diffusion models can perform such future-conditioned sampling, but lack the ability of next-token models to generate variable-length sequences.

Researchers from CSAIL wanted to combine the strengths of both models, so they created a sequence model training technique called "Diffusion Forcing." The name comes from "Teacher Forcing," the conventional training scheme that breaks down full sequence generation into the smaller, easier steps of next-token generation (much like a good teacher simplifying a complex concept).

Diffusion Forcing builds on common ground between diffusion models and teacher forcing: both use training schemes that involve predicting masked (noisy) tokens from unmasked ones. Diffusion models gradually add noise to data, which can be viewed as fractional masking.

The MIT researchers' Diffusion Forcing method trains neural networks to cleanse a collection of tokens, removing different amounts of noise within each one while simultaneously predicting the next few tokens. The result: a flexible, reliable sequence model that produced higher-quality artificial videos and more precise decision-making for robots and AI agents.
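The idea of giving every token its own amount of noise can be sketched in a few lines. This is a minimal illustration, assuming scalar tokens and a simple linear, variance-preserving noise rule; the function names, the `num_levels` parameter, and the noise rule itself are hypothetical choices made for the example, not the researchers' implementation.

```python
import math
import random


def noise_token(x, level, num_levels, eps):
    """Blend a clean token x with Gaussian noise eps at a given noise level.

    Assumed rule (illustrative only): level 0 keeps x unchanged,
    level == num_levels replaces x entirely with eps.
    """
    signal = 1.0 - level / num_levels  # fraction of signal variance kept
    return math.sqrt(signal) * x + math.sqrt(1.0 - signal) * eps


def noise_sequence(tokens, num_levels=10, seed=0):
    """Corrupt each token with its own independently sampled noise level."""
    rng = random.Random(seed)
    levels = [rng.randint(0, num_levels) for _ in tokens]
    noisy = [
        noise_token(x, k, num_levels, rng.gauss(0.0, 1.0))
        for x, k in zip(tokens, levels)
    ]
    return noisy, levels


def binary_mask_levels(seq_len, num_known, num_levels=10):
    """Binary masking as a special case: known tokens are clean,
    unknown tokens are pure noise."""
    return [0 if t < num_known else num_levels for t in range(seq_len)]


if __name__ == "__main__":
    noisy, levels = noise_sequence([1.0, 2.0, 3.0, 4.0])
    print("per-token levels:", levels)
    print("noisy tokens:", noisy)
    print("binary masking:", binary_mask_levels(5, 2))
```

In this sketch, a network trained to denoise such sequences would receive both the noisy tokens and their levels. Conventional binary masking is the special case in which every token sits at one of the two extremes.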

By sorting through noisy data and reliably predicting the next steps in a task, Diffusion Forcing can aid a robot in ignoring visual distractions to complete manipulation tasks. It can also generate stable and consistent video sequences and even guide an AI agent through digital mazes.

This method could potentially enable household and factory robots to generalize to new tasks and improve AI-generated entertainment.

"Sequence models aim to condition on the known past and predict the unknown future, a type of binary masking. However, masking doesn't need to be binary," says lead author, MIT electrical engineering and computer science (EECS) Ph.D. student, and CSAIL member Boyuan Chen.

"With Diffusion Forcing, we add different levels of noise to each token, effectively serving as a type of fractional masking. At test time, our system can 'unmask' a collection of tokens and diffuse a sequence in the near future at a lower noise level. It knows what to trust within its data to overcome out-of-distribution inputs."
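One way to picture "diffusing the near future at a lower noise level" is a sampling schedule in which tokens closer to the present are denoised earlier than tokens further away. The sketch below is an assumption made for illustration: the function name `pyramid_schedule`, its parameters, and the exact staircase rule are hypothetical and are not taken from the researchers' code.

```python
def pyramid_schedule(horizon, num_levels):
    """Return per-token noise levels for each sampling step.

    Row 0 is all-noise and the last row is all-clean. At every step,
    token i (i steps into the future) is at least as noisy as token i - 1,
    so the near future is always resolved before the distant future.
    """
    rows = []
    for step in range(num_levels + horizon):
        row = [
            min(num_levels, max(0, num_levels - step + i))
            for i in range(horizon)
        ]
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in pyramid_schedule(horizon=3, num_levels=3):
        print(row)
```

With `horizon=3` and `num_levels=3`, the third row is `[1, 2, 3]`: the nearest token is almost clean while the farthest is still fully noisy, which mirrors the intuition that the distant future is more uncertain than the near future.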

In several experiments, Diffusion Forcing thrived at ignoring misleading data to execute tasks while anticipating future actions.

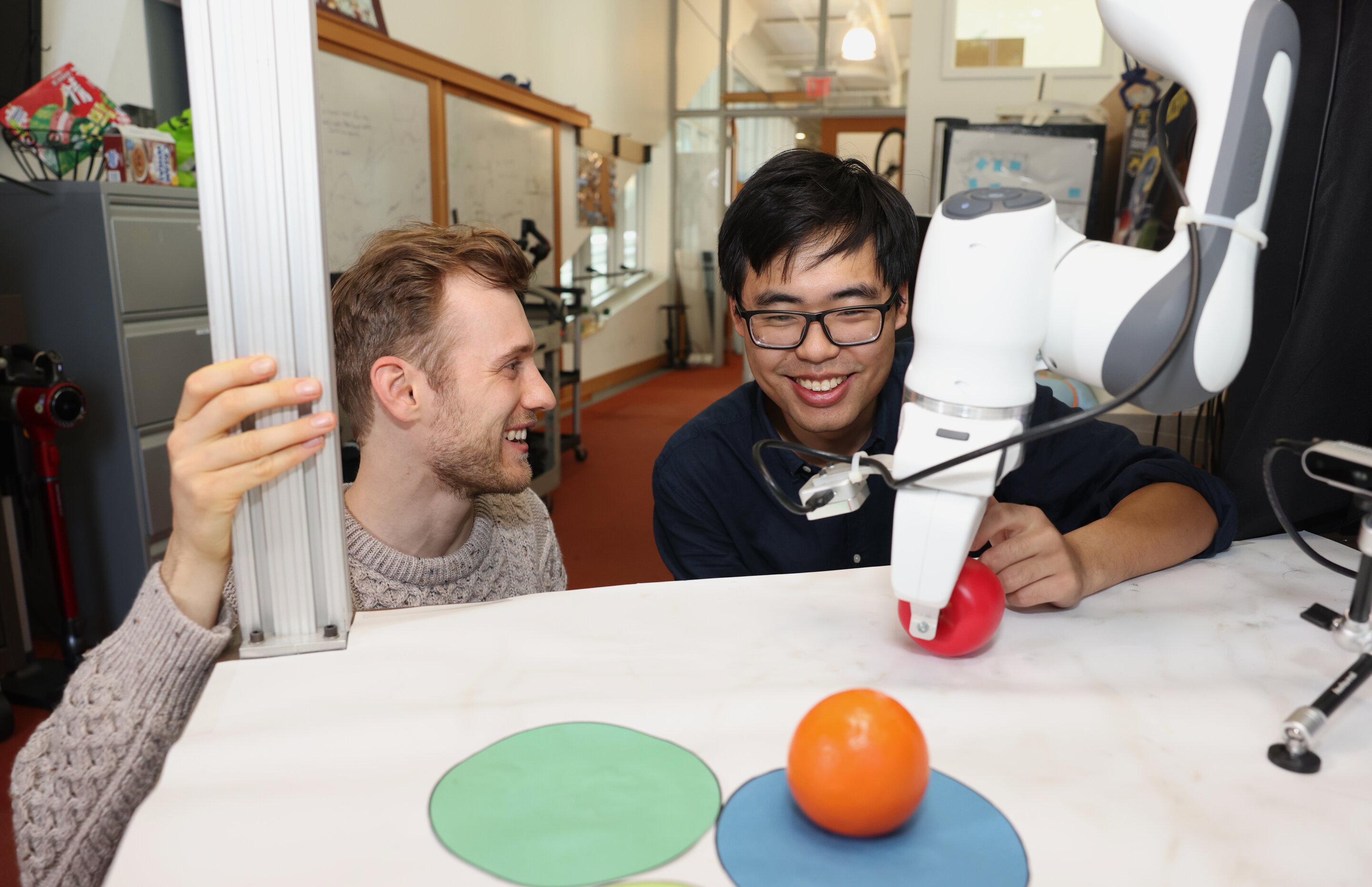

When implemented on a robotic arm, for example, it helped swap two toy fruits across three circular mats, a minimal example of a family of long-horizon tasks that require memory. The researchers trained the robot by controlling it from a distance (or teleoperating it) in virtual reality.

The robot is trained to mimic the user's movements from its camera. Despite starting from random positions and seeing distractions like a shopping bag blocking the markers, it placed the objects into their target spots.

To generate videos, they trained Diffusion Forcing on "Minecraft" gameplay and colorful digital environments created within Google's DeepMind Lab Simulator. When given a single frame of footage, the method produced more stable, higher-resolution videos than comparable baselines like a Sora-like full-sequence diffusion model and ChatGPT-like next-token models.

The baseline approaches created videos that appeared inconsistent, with the latter sometimes failing to generate working video past just 72 frames.

Diffusion Forcing not only generates fancy videos, but can also serve as a motion planner that steers toward desired outcomes or rewards. Thanks to its flexibility, Diffusion Forcing can uniquely generate plans with varying horizons, perform tree search, and incorporate the intuition that the distant future is more uncertain than the near future.

In the task of solving a 2D maze, Diffusion Forcing outperformed six baselines by generating faster plans leading to the goal location, indicating that it could be an effective planner for robots in the future.

In each demo, Diffusion Forcing acted as a full-sequence model, a next-token prediction model, or both. According to Chen, this versatile approach could potentially serve as a powerful backbone for a "world model," an AI system that can simulate the dynamics of the world by training on billions of internet videos.

This would allow robots
