As large language models (LLMs) continue to advance, structured generation has become increasingly important. These models are now expected to produce outputs that follow rigid formats such as JSON, SQL, or other domain-specific languages, a capability that is crucial for applications like code generation, robotic control, and structured querying. Structured outputs let downstream systems consume model responses directly, but guaranteeing that every output conforms to a given structure without sacrificing speed or efficiency remains a significant challenge.

Despite recent advances in LLMs, structured output generation remains inefficient. A major challenge is the computational cost of enforcing grammatical constraints during decoding. Traditional approaches, such as interpreting a context-free grammar (CFG) at every step, must check each token in the model's vocabulary, which can exceed 128,000 tokens. Maintaining the stack state needed to track recursive grammar rules adds further runtime delay. As a result, existing systems often suffer from high latency and increased resource usage, making them unsuitable for real-time or large-scale applications.

Current structured generation tools use constrained decoding to keep outputs consistent with predefined rules. At each decoding step, these approaches filter out invalid tokens by setting their probabilities to zero. Although effective, constrained decoding is often slow because every candidate token must be checked against the entire stack state, and the recursive nature of CFGs complicates this runtime process further. These challenges have limited the scalability and practicality of existing systems, particularly when handling complex structures or large vocabularies.
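
The masking step described above can be sketched in a few lines of Python. This is a minimal, hypothetical example rather than code from XGrammar: it assumes a toy grammar of balanced `()` and `[]`, a made-up five-token vocabulary, and invented logits. `naive_mask` deliberately checks every token against a copy of the full stack, which is the per-step cost the paragraph describes.

```python
import math
from typing import List, Sequence

# Toy grammar (an assumption for illustration): balanced "()" and "[]".
MATCH = {")": "(", "]": "["}


def is_valid_prefix(stack: Sequence[str], token: str) -> bool:
    """Return True if appending `token` keeps the output a valid bracket prefix."""
    st = list(stack)  # copy: checking a candidate must not mutate decoder state
    for ch in token:
        if ch in "([":
            st.append(ch)
        elif ch in MATCH:
            if not st or st[-1] != MATCH[ch]:
                return False
            st.pop()
        else:
            return False  # character the toy grammar does not allow
    return True


def naive_mask(stack: Sequence[str], vocab: Sequence[str]) -> List[bool]:
    """Check every vocabulary entry against the full stack: O(|V|) work per step."""
    return [is_valid_prefix(stack, tok) for tok in vocab]


def apply_mask(logits: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Send disallowed tokens to -inf so softmax assigns them probability 0."""
    return [x if ok else -math.inf for x, ok in zip(logits, mask)]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # assumes at least one token is allowed
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["(", ")", "]", "()", "x"]
stack = ["("]                        # decoder state after emitting "("
logits = [0.1, 0.5, 2.0, 0.3, 1.5]   # made-up model scores
mask = naive_mask(stack, vocab)
probs = softmax(apply_mask(logits, mask))
next_id = max(range(len(vocab)), key=lambda i: probs[i])
print(mask, vocab[next_id])
```

Without the mask, greedy decoding would pick `]` (the highest raw score), which cannot follow `(`; after masking, its probability is exactly zero and `)` is chosen instead.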

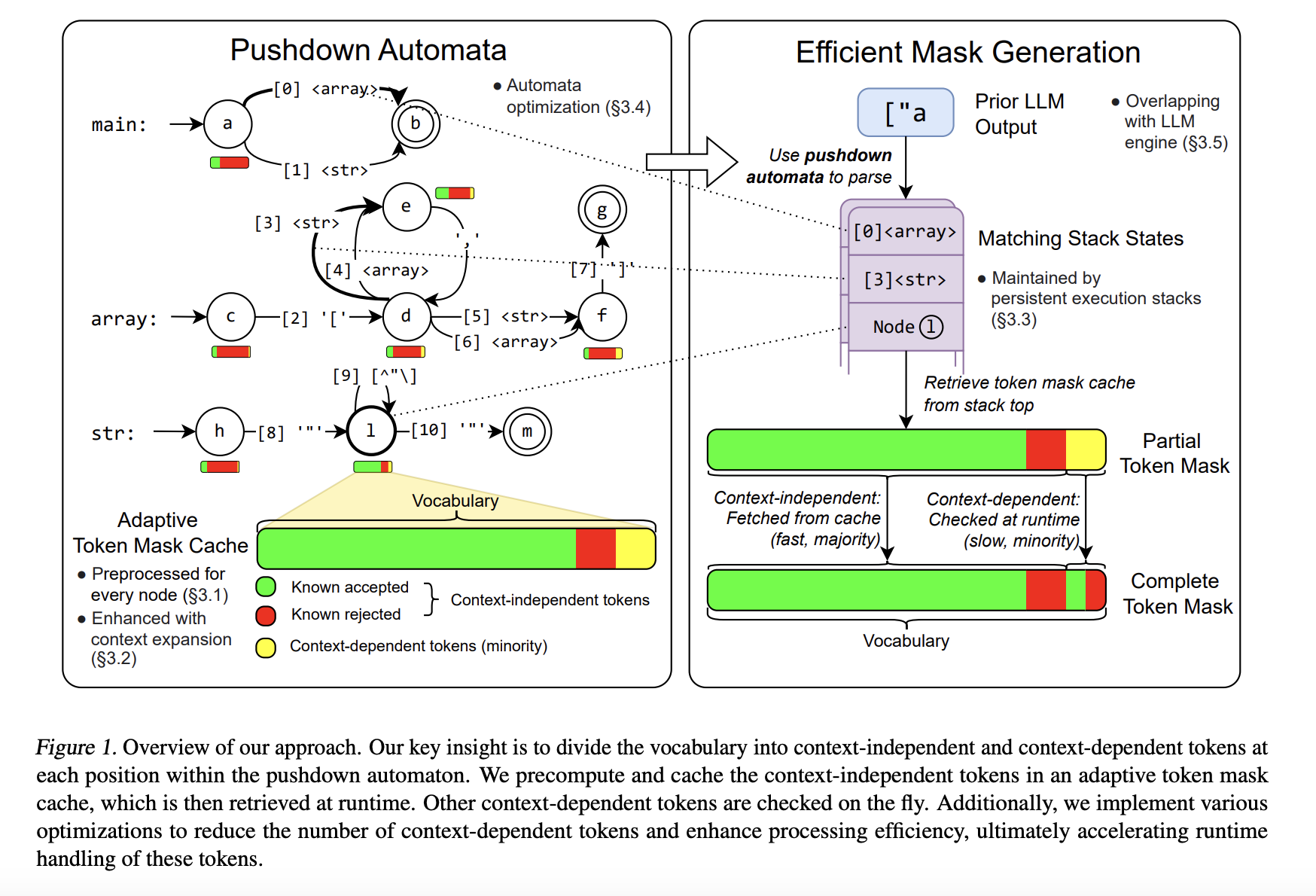

To address these limitations, researchers from Carnegie Mellon University, NVIDIA, Shanghai Jiao Tong University, and the University of California, Berkeley developed XGrammar, a groundbreaking structured generation engine. XGrammar introduces a novel approach that divides tokens into two categories: context-independent tokens, whose validity can be checked ahead of time, and context-dependent tokens, which require evaluation at runtime. This separation significantly reduces the computational burden during output generation. The system also co-designs the grammar engine with the inference engine, allowing grammar computations to overlap with GPU-based LLM operations and thereby minimizing overhead.
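
The split between context-independent and context-dependent tokens can be illustrated with a toy bracket grammar. In this sketch (an assumption for illustration, not XGrammar's implementation), a token counts as context-independent for a given top-of-stack symbol if its validity can be decided without looking below that symbol; everything else is deferred to decode time. The names `simulate`, `build_cache`, and `token_mask` are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

MATCH = {")": "(", "]": "["}  # toy grammar: balanced "()" and "[]"
UNKNOWN = "?"                 # sentinel for stack context we have not inspected

# Per top-of-stack symbol (None = empty stack): (accepted ids, context-dependent ids)
Cache = Dict[Optional[str], Tuple[List[int], List[int]]]


def simulate(token: str, stack: Sequence[str]) -> Optional[bool]:
    """Feed `token` onto a copy of `stack`.

    Returns True/False when the outcome is decided, or None when the token would
    have to pop the UNKNOWN sentinel, i.e. its validity depends on deeper context.
    """
    st = list(stack)
    for ch in token:
        if ch in "([":
            st.append(ch)
        elif ch in MATCH:
            if not st:
                return False
            top = st.pop()
            if top == UNKNOWN:
                return None
            if top != MATCH[ch]:
                return False
        else:
            return False
    return True


def build_cache(vocab: Sequence[str]) -> Cache:
    """Precompute, per top-of-stack symbol, which tokens are settled ahead of time."""
    cache: Cache = {}
    for top in (None, "(", "["):
        probe = [] if top is None else [UNKNOWN, top]
        accepted, dependent = [], []
        for tid, tok in enumerate(vocab):
            result = simulate(tok, probe)
            if result is True:
                accepted.append(tid)   # context-independent accept
            elif result is None:
                dependent.append(tid)  # context-dependent: check at runtime
            # result False: context-independent reject, nothing to store
        cache[top] = (accepted, dependent)
    return cache


def token_mask(stack: Sequence[str], vocab: Sequence[str], cache: Cache) -> List[bool]:
    """Combine cached results with runtime checks of the context-dependent tokens."""
    top = stack[-1] if stack else None
    accepted, dependent = cache[top]
    mask = [False] * len(vocab)
    for tid in accepted:
        mask[tid] = True
    for tid in dependent:  # only these need the full stack at decode time
        mask[tid] = simulate(vocab[tid], stack) is True
    return mask


vocab = ["(", ")", "[", "]", "()", "))", ")]", "a"]
cache = build_cache(vocab)
stack = ["[", "("]
mask = token_mask(stack, vocab, cache)
print(cache["("], mask)
```

With `(` on top of the stack, six of the eight toy tokens are settled ahead of time and only `))` and `)]` are checked against the full stack during decoding. The article's figure of over 99% context-independent tokens refers to real grammars and vocabularies, not to this toy.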

XGrammar's technical implementation includes several key innovations. It uses a byte-level pushdown automaton to process CFGs efficiently, which lets it handle irregular token boundaries and nested structures. An adaptive token mask cache precomputes and stores the validity of context-independent tokens, which cover over 99% of tokens in most cases. Context-dependent tokens, representing less than 1% of the total, are processed using a persistent execution stack that supports fast branching and rollback. XGrammar's preprocessing phase also overlaps with the LLM's initial prompt processing, so structured generation adds near-zero latency.
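
A persistent stack driven by byte-level token processing can be sketched as follows. This is a generic immutable, structure-sharing stack fed by a toy bracket automaton that consumes each token byte by byte; it illustrates the idea under those assumptions and is not XGrammar's data structure. `PStack` and `feed` are hypothetical names. Because `push` and `pop` return new stacks instead of mutating the old one, exploring several candidate tokens from the same state (branching) and undoing a step (rollback) amount to keeping references to earlier stacks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class _Node:
    value: int                # one byte (an opening bracket) on the stack
    below: Optional["_Node"]  # the rest of the stack, shared and never mutated


@dataclass(frozen=True)
class PStack:
    """Immutable stack: push and pop return new stacks that share nodes."""
    head: Optional[_Node] = None

    def push(self, value: int) -> "PStack":
        return PStack(_Node(value, self.head))

    def pop(self) -> "PStack":
        if self.head is None:
            raise IndexError("pop from empty stack")
        return PStack(self.head.below)

    def top(self) -> Optional[int]:
        return None if self.head is None else self.head.value

    def to_bytes(self) -> bytes:
        """Stack contents from bottom to top."""
        out = []
        node = self.head
        while node is not None:
            out.append(node.value)
            node = node.below
        return bytes(reversed(out))


# Toy byte-level automaton for balanced "()" and "[]" (an assumption).
OPENER_FOR = {ord(")"): ord("("), ord("]"): ord("[")}
OPENERS = set(OPENER_FOR.values())


def feed(stack: PStack, token: bytes) -> Optional[PStack]:
    """Consume `token` one byte at a time; return the new stack, or None on rejection.

    The input stack is never modified, so a rejected token needs no undo step.
    """
    for b in token:
        if b in OPENERS:
            stack = stack.push(b)
        elif b in OPENER_FOR and stack.top() == OPENER_FOR[b]:
            stack = stack.pop()
        else:
            return None
    return stack


s0 = PStack()
s1 = feed(s0, b"[(")        # a single token that opens two brackets
branch_a = feed(s1, b")]")  # a token that closes both
branch_b = feed(s1, b"(")   # a competing candidate from the same state
rejected = feed(s1, b"]")   # mismatched closer: rejected, s1 untouched
history = [s0, s1, branch_b]
rolled_back = history[-2]   # rollback: reuse the reference from one step earlier
print(s1.to_bytes(), branch_a.to_bytes(), branch_b.to_bytes(), rejected)
```

Tokens such as `b"[("` and `b")]"` span more than one grammar symbol, which is why the automaton advances byte by byte instead of assuming one token per symbol. `branch_b` reuses the nodes of `s1` rather than copying them, so branching costs one new node per pushed byte.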

Performance evaluations show XGrammar's significant advantages. On JSON grammar tasks, the system generates a token mask in under 40 microseconds, up to a 100x speedup over traditional methods. Integrated with the Llama 3.1 model on an NVIDIA H100 GPU, XGrammar delivers an 80x improvement in end-to-end structured output generation. Memory optimizations also shrink storage requirements from 160 MB to 0.46 MB, under 0.3% of the original size. These results demonstrate XGrammar's ability to handle large-scale tasks with unprecedented efficiency.
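
One way an adaptive mask cache can save memory is to store each precomputed mask in whichever format is smallest. The sketch below assumes three candidate formats (a bitset, a list of accepted token ids, or a list of rejected token ids) and 4-byte ids; it illustrates the general idea and is not a description of XGrammar's actual storage layout. `storage_costs` and `choose_format` are hypothetical names. For a 128,000-token vocabulary, a bitset always costs 16,000 bytes, while an id list wins when almost every token is rejected or almost every token is accepted.

```python
from typing import Dict, Sequence, Tuple

ID_BYTES = 4  # assumption: token ids stored as 32-bit integers


def storage_costs(mask: Sequence[bool]) -> Dict[str, int]:
    """Bytes needed to store one token mask in each candidate format."""
    vocab_size = len(mask)
    n_accepted = sum(mask)
    return {
        "bitset": (vocab_size + 7) // 8,                        # one bit per token
        "accepted_ids": n_accepted * ID_BYTES,                  # list of allowed ids
        "rejected_ids": (vocab_size - n_accepted) * ID_BYTES,   # list of disallowed ids
    }


def choose_format(mask: Sequence[bool]) -> Tuple[str, int]:
    """Pick the cheapest representation for this particular mask."""
    costs = storage_costs(mask)
    best = min(costs, key=costs.__getitem__)
    return best, costs[best]


V = 128_000
few = [i < 10 for i in range(V)]       # only 10 tokens allowed
most = [i >= 5 for i in range(V)]      # all but 5 tokens allowed
half = [i % 2 == 0 for i in range(V)]  # half of the tokens allowed

for name, mask in [("few", few), ("most", most), ("half", half)]:
    print(name, choose_format(mask))
```

Masks that are nearly all-reject or nearly all-accept, which are common at many grammar positions, then cost tens of bytes instead of 16,000.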

The researchers’ efforts have several key takeaways:

In conclusion, XGrammar represents a transformative step in structured generation for large language models. By addressing the inefficiencies of traditional CFG processing and constrained decoding, it offers a scalable, high-performance solution for generating structured outputs. Its techniques, such as token categorization and memory optimization, together with its platform compatibility, make it an essential tool for advancing AI applications. With speedups of up to 100x and reduced latency, XGrammar sets a new standard for structured generation, helping LLMs meet the demands of modern AI systems.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.
