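Researchers from Carnegie Mellon University, NVIDIA, Shanghai Jiao Tong University, and the University of California, Berkeley have developed XGrammar, a structured generation engine designed to address the inefficiencies of existing constrained decoding methods.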

As LLMs continue to advance, structured generation has become increasingly important. These models are now tasked with generating outputs that follow rigid formats, such as JSON, SQL, or other domain-specific languages. This capability is crucial for applications like code generation, robotic control, or structured querying. However, ensuring that outputs conform to specific structures without compromising speed or efficiency remains a significant challenge. While structured outputs enable seamless downstream processing, achieving these results requires innovative solutions due to the inherent complexity.

Despite the recent advances in LLMs, there are still inefficiencies in structured output generation. One major challenge is handling the computational demands of adhering to grammatical constraints during output generation. Traditional methods, like context-free grammar (CFG) interpretation, require processing each possible token in the model’s vocabulary, which can exceed 128,000 tokens. Moreover, maintaining stack states to track recursive grammar rules adds to runtime delays. As a result, existing systems often experience high latency and increased resource usage, making them unsuitable for real-time or large-scale applications.

Current tools for structured generation use constrained decoding to ensure that outputs align with predefined rules. These approaches filter out invalid tokens by setting their probabilities to zero at each decoding step. While effective, constrained decoding is often inefficient because every token must be checked against the entire stack state, and the recursive nature of CFGs further complicates this runtime work. These challenges have limited the scalability and practicality of existing systems, particularly when handling complex structures or large vocabularies.
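
The masking step described above can be illustrated with a minimal sketch. It is not taken from XGrammar or any particular library: the toy vocabulary, the logits, and the `allowed` list (standing in for whatever a grammar engine would report as valid at the current step) are all assumptions made for illustration.

```python
import math

def apply_token_mask(logits, allowed):
    """Replace the logit of every disallowed token with -inf."""
    return [x if ok else -math.inf for x, ok in zip(logits, allowed)]

def softmax(logits):
    """Numerically stable softmax; assumes at least one finite logit."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 4-token vocabulary and raw model scores.
vocab = ["{", "[", "hello", "}"]
logits = [1.0, 0.5, 3.0, 0.2]

# Hypothetical grammar state: a JSON value must start with '{' or '['.
allowed = [True, True, False, False]

probs = softmax(apply_token_mask(logits, allowed))
for tok, p in zip(vocab, probs):
    print(f"{tok!r}: {p:.3f}")
```

Even though `"hello"` has the highest raw score, it receives probability zero after masking, so sampling can only pick a token the grammar permits. The cost the article refers to lies in computing `allowed` for a vocabulary of more than 100,000 tokens at every step.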

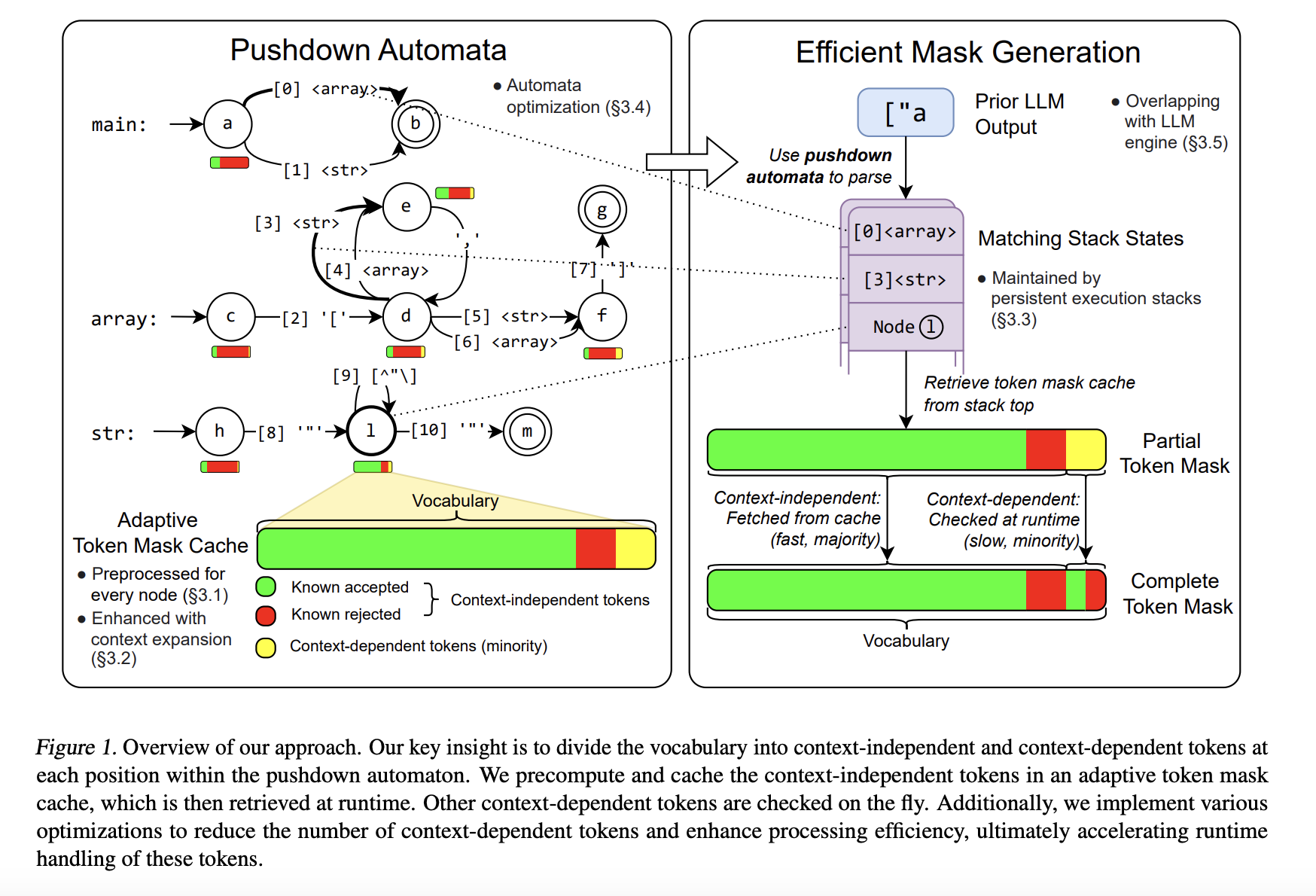

To address these limitations, researchers from Carnegie Mellon University, NVIDIA, Shanghai Jiao Tong University, and the University of California, Berkeley developed XGrammar, a structured generation engine. XGrammar divides tokens into two categories: context-independent tokens, whose validity can be checked ahead of time, and context-dependent tokens, which require evaluation at runtime. This separation significantly reduces the computational burden during output generation. The system also uses a co-designed grammar and inference engine, which lets it overlap grammar computations with GPU-based LLM operations and thereby minimize overhead.
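
To make the two token categories concrete, here is a deliberately simplified sketch. It assumes a single grammar position (inside a JSON string, just after the opening quote), ignores escape sequences, and uses a hypothetical function name and vocabulary; it is an illustration of the idea, not XGrammar's actual classification algorithm.

```python
def classify_in_string(token: str) -> str:
    """Classify a token for the position 'inside a JSON string'.

    Simplifying assumption: backslash escapes are ignored.
    """
    for i, ch in enumerate(token):
        if ch == '"':
            # The string rule ends here. If characters remain, they must be
            # matched by the parent rule on the stack, which is only known
            # at runtime.
            if i == len(token) - 1:
                return "accepted"
            return "context-dependent"
        if ord(ch) < 0x20:
            # Raw control characters are never valid inside a JSON string.
            return "rejected"
    return "accepted"

# Hypothetical vocabulary fragment.
vocab = ["hello", " world", '"', '",', '"}', "\n", "a\nb"]

cache = {"accepted": [], "rejected": [], "context-dependent": []}
for tok in vocab:
    cache[classify_in_string(tok)].append(tok)

for category, tokens in cache.items():
    print(category, tokens)
```

The `accepted` and `rejected` entries can be computed once per grammar position and cached, because they do not depend on what surrounds the string. Only the tokens that close the string and continue past it, such as `",` and `"}`, need the stack at decoding time: `",` is valid if the string sits inside an array or object with more elements to come, while `"}` is valid only if an enclosing object can close.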

XGrammar’s technical implementation includes several key innovations. It uses a byte-level pushdown automaton to process CFGs efficiently, enabling it to handle irregular token boundaries and nested structures. The adaptive token mask cache precomputes and stores validity for context-independent tokens, covering over 99% of tokens in most cases. Context-dependent tokens, representing less than 1% of the total, are processed using a persistent execution stack that allows for rapid branching and rollback operations. XGrammar’s preprocessing phase overlaps with the LLM’s initial prompt processing, ensuring near-zero latency for structured generation.
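
The idea behind a persistent stack can be sketched as an immutable linked list in which every push creates a new node that shares its tail with the previous version. The class and helper names below are hypothetical, and the rule names are placeholders; the article does not describe XGrammar's internal data layout at this level of detail.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Node:
    """One immutable stack frame; `parent` points to the frame below it."""
    rule: str
    parent: Optional["Node"] = None

def push(stack: Optional[Node], rule: str) -> Node:
    """Return a new stack top; the old stack is left untouched."""
    return Node(rule, stack)

def pop(stack: Node) -> Optional[Node]:
    """Return the stack below the top; no memory is copied or freed."""
    return stack.parent

def to_list(stack: Optional[Node]) -> List[str]:
    """List the rules from bottom to top, for inspection only."""
    rules = []
    while stack is not None:
        rules.append(stack.rule)
        stack = stack.parent
    return rules[::-1]

# A shared starting point: inside an array that is inside an object.
base = push(push(None, "object"), "array")

# Branch A explores entering a string; branch B explores closing the array.
branch_a = push(base, "string")
branch_b = pop(base)

print(to_list(base))      # the original state is still available
print(to_list(branch_a))
print(to_list(branch_b))
```

Because no version is ever mutated, branching costs one small allocation and rolling back means simply going back to an earlier reference such as `base`. That property is what makes it cheap to try several context-dependent tokens from the same grammar state.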

Performance evaluations reveal the significant advantages of XGrammar. For JSON grammar tasks, the system generates a token mask in less than 40 microseconds, delivering up to a 100x speedup compared to traditional methods. Integrated with the Llama 3.1 model, XGrammar enables an 80x improvement in end-to-end structured output generation on the NVIDIA H100 GPU. Moreover, memory optimization techniques reduce storage requirements from 160 MB to 0.46 MB, well under 1% of the original size. These results demonstrate XGrammar's ability to handle large-scale tasks efficiently.

The researchers’ efforts have several key takeaways:

In conclusion, XGrammar represents a significant step in structured generation for large language models. By addressing inefficiencies in traditional CFG processing and constrained decoding, it offers a scalable, high-performance solution for generating structured outputs. Its techniques, such as token categorization, memory optimization, and platform compatibility, make it a valuable tool for advancing AI applications. With up to a 100x speedup and reduced latency, XGrammar sets a new standard for structured generation, enabling LLMs to meet the demands of modern AI systems.
