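The convergence of reinforcement learning (RL) and large language models (LLMs) has opened new avenues in computational linguistics. LLMs are remarkably good at understanding and generating text, but training them raises the challenge of making their responses match human preferences. Direct Preference Optimization (DPO) has emerged as a streamlined method for LLM training that removes the need for separate reward learning. Instead, DPO integrates the reward function directly into the policy output, allowing finer control over language generation.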

Exploring the Synergy between Reinforcement Learning and Large Language Models: Direct Preference Optimization for Enhanced Text Generation

The intersection of reinforcement learning (RL) and large language models (LLMs) has emerged as a vibrant field within computational linguistics. These models, initially trained on vast text corpora, exhibit exceptional capabilities in understanding and producing human-like language. As research progresses, the challenge lies in refining these models to effectively capture nuanced human preferences and generate responses that accurately align with specific intents.

Traditional approaches to language model training struggle with the complexity and subtlety these tasks require, which motivates methods that narrow the gap between human expectations and machine output. Reinforcement learning from human feedback (RLHF), commonly implemented with algorithms such as proximal policy optimization (PPO), has been explored for aligning LLMs with human preferences. Further innovations include incorporating Monte Carlo tree search (MCTS) and diffusion models into text generation pipelines to improve the quality and adaptability of model responses.

Stanford University's Direct Preference Optimization (DPO)

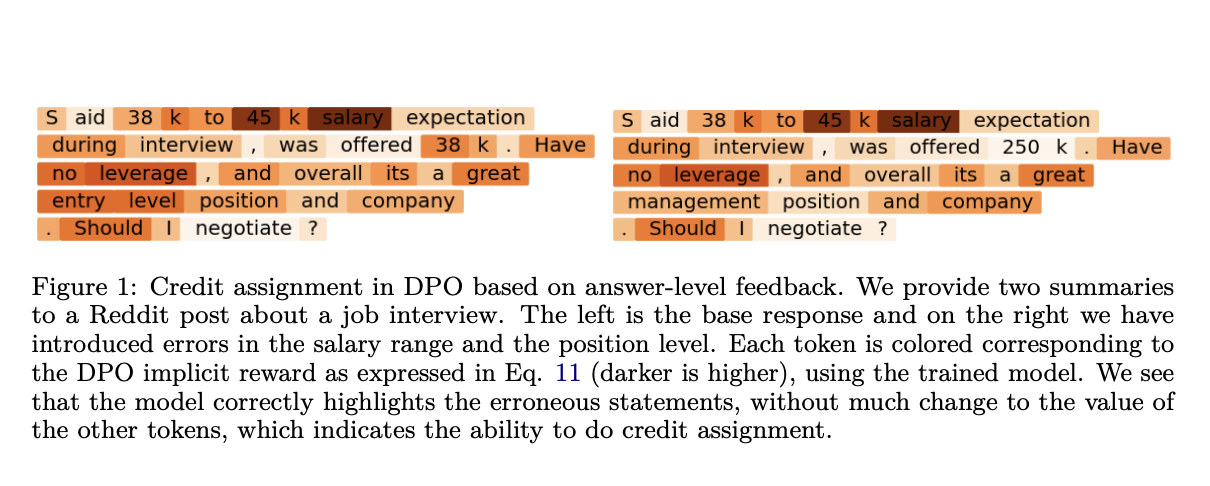

Stanford researchers have developed a streamlined approach for training LLMs known as Direct Preference Optimization (DPO). Rather than fitting a separate reward model and then optimizing against it, DPO treats the policy's own output probabilities, measured relative to a reference model, as an implicit reward, so the separate reward learning stage is no longer needed. The approach is framed as a Markov decision process (MDP) at the token level, which provides finer control over the model's language generation.
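
To make the idea of a reward living inside the policy concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It assumes the summed log-probabilities of the preferred and rejected responses are already available from both the policy and a frozen reference model. The function and parameter names are illustrative, not taken from the study's code.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_logp_chosen: float,
    policy_logp_rejected: float,
    ref_logp_chosen: float,
    ref_logp_rejected: float,
    beta: float = 0.1,  # assumed value; beta scales the implicit reward
) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    The implicit reward of a response is beta * (log pi - log pi_ref),
    so no separately trained reward model is needed.
    """
    chosen_reward = beta * (policy_logp_chosen - ref_logp_chosen)
    rejected_reward = beta * (policy_logp_rejected - ref_logp_rejected)
    # Bradley-Terry style objective: push the chosen reward above the rejected one.
    return -log_sigmoid(chosen_reward - rejected_reward)


# Made-up log-probabilities, for illustration only.
untrained = dpo_loss(-5.0, -7.0, -5.0, -7.0)  # policy identical to reference
improved = dpo_loss(-4.0, -8.0, -5.0, -7.0)   # policy now favors the chosen response
print(untrained, improved)
```

When the policy equals the reference, both implicit rewards are zero and the loss is log 2. The loss falls as the policy shifts probability toward the preferred response.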

Implementation and Evaluation

The study used the Reddit TL;DR summarization dataset to assess the practical efficacy of DPO. Training and evaluation used search techniques such as beam search and MCTS, which weigh alternative continuations at each step of the model's output. These methods allow detailed, immediate feedback to be incorporated directly into policy learning, improving the relevance of the generated text and its alignment with human preferences.
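
As a rough illustration of search at each decoding step, the sketch below runs a plain beam search over a toy next-token distribution, ranking partial sequences by cumulative policy log-probability. The `toy_policy` function, its probabilities, and the scoring rule are assumptions made for this example. The article does not describe the study's exact decoding setup.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical interface: maps a token prefix to next-token log-probabilities.
NextLogProbs = Callable[[Tuple[str, ...]], Dict[str, float]]


def beam_search(
    policy: NextLogProbs,
    beam_width: int = 2,
    max_len: int = 3,
    eos: str = "<eos>",
) -> List[Tuple[Tuple[str, ...], float]]:
    """Return the final beams as (tokens, cumulative log-probability), best first."""
    beams: List[Tuple[Tuple[str, ...], float]] = [((), 0.0)]
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            if prefix and prefix[-1] == eos:
                candidates.append((prefix, score))  # finished beams carry over unchanged
                continue
            for token, logp in policy(prefix).items():
                candidates.append((prefix + (token,), score + logp))
        # Keep only the highest-scoring partial sequences.
        beams = sorted(candidates, key=lambda c: c[1], reverse=True)[:beam_width]
    return beams


def toy_policy(prefix: Tuple[str, ...]) -> Dict[str, float]:
    """Made-up next-token distribution, for illustration only."""
    if not prefix:
        return {"the": math.log(0.6), "a": math.log(0.4)}
    if prefix[-1] == "the":
        return {"cat": math.log(0.5), "dog": math.log(0.3), "<eos>": math.log(0.2)}
    if prefix[-1] == "a":
        return {"cat": math.log(0.9), "<eos>": math.log(0.1)}
    return {"<eos>": 0.0}


greedy = beam_search(toy_policy, beam_width=1)[0]
best = beam_search(toy_policy, beam_width=2)[0]
print(greedy[0], math.exp(greedy[1]))
print(best[0], math.exp(best[1]))
```

In this toy setup, greedy decoding (beam width 1) commits to "the" and ends with sequence probability 0.30. A beam of width 2 keeps "a" alive and finds "a cat" with probability 0.36, showing why looking beyond the single best next token can pay off.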

Quantitative Results

DPO produced measurable improvements in model performance. Using beam search within the DPO framework raised the win rate by 10-15% on held-out test prompts from the Reddit TL;DR dataset, as judged by GPT-4. These results show that DPO can improve the alignment and accuracy of language model responses under these specific test conditions.
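
For readers unfamiliar with the metric, a win rate can be computed from pairwise judgments as sketched below. The labels are invented for illustration and are not the study's data. Counting ties as half a win is an assumption, and the article does not say whether its 10-15% figure is an absolute or a relative change.

```python
from typing import Sequence


def win_rate(judgments: Sequence[str]) -> float:
    """Fraction of comparisons won; a tie counts as half a win (an assumed convention)."""
    if not judgments:
        raise ValueError("need at least one judgment")
    points = {"win": 1.0, "tie": 0.5, "loss": 0.0}
    return sum(points[j] for j in judgments) / len(judgments)


# Hypothetical judge verdicts on 20 held-out prompts (not real results).
baseline = ["win"] * 10 + ["loss"] * 10
with_beam_search = ["win"] * 13 + ["loss"] * 7

# Absolute change in win rate, expressed in percentage points.
gain = win_rate(with_beam_search) - win_rate(baseline)
print(f"win-rate change: {gain * 100:.0f} percentage points")
```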

Conclusion

The research introduced Direct Preference Optimization (DPO), a streamlined approach for training LLMs that is framed as a token-level Markov decision process. DPO integrates reward functions directly with policy outputs, which simplifies training and improves both the accuracy of language model responses and their alignment with human feedback. These findings underscore the potential of DPO to advance the development and application of generative AI models.

Contributions to the Field

- Introduces a novel training approach for LLMs that leverages direct preference optimization.

- Integrates reward functions within policy outputs, eliminating the need for separate reward learning.

- Demonstrates improved model performance and alignment with human preferences, as evidenced by quantitative results on the Reddit TL;DR dataset.

- Simplifies and enhances the training processes of generative AI models.
