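The convergence of reinforcement learning (RL) and large language models (LLMs) has opened new avenues in computational linguistics. LLMs are remarkably good at understanding and generating text, but training them means meeting the challenge of making their responses match human preferences. Direct Preference Optimization (DPO) has emerged as a streamlined approach to LLM training that eliminates the need for a separate reward-learning stage. Instead, DPO expresses the reward function directly in terms of the policy's outputs, enabling finer control over language generation.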

Exploring the Synergy between Reinforcement Learning and Large Language Models: Direct Preference Optimization for Enhanced Text Generation

The intersection of reinforcement learning (RL) and large language models (LLMs) has emerged as a vibrant field within computational linguistics. These models, initially trained on vast text corpora, exhibit exceptional capabilities in understanding and producing human-like language. As research progresses, the challenge lies in refining these models to effectively capture nuanced human preferences and generate responses that accurately align with specific intents.

Traditional approaches to language model training struggle with the complexity and subtlety these tasks require, which calls for methods that close the gap between human expectations and machine output. Reinforcement learning from human feedback (RLHF), typically optimized with algorithms such as proximal policy optimization (PPO), has been explored for aligning LLMs with human preferences. Further work has incorporated Monte Carlo tree search (MCTS) and diffusion models into text generation pipelines to improve the quality and adaptability of model responses.
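For readers unfamiliar with PPO, the RL algorithm commonly used in RLHF, its clipped surrogate objective can be sketched as below. This is a generic, minimal illustration of the standard PPO-clip formula on plain Python floats, not code from the study; the function name `ppo_clip_objective` and the default `epsilon=0.2` are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    new_logprobs: Sequence[float],
    old_logprobs: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,  # illustrative clip range (assumption)
) -> float:
    """Average PPO-clip surrogate objective over a batch of actions (to be maximized)."""
    if not (len(new_logprobs) == len(old_logprobs) == len(advantages)) or not advantages:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed in log space.
        ratio = math.exp(new_lp - old_lp)
        # Clip the ratio to [1 - epsilon, 1 + epsilon] to limit the size of each update.
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

The clipping is what keeps each policy update small: once the probability ratio moves outside the clip range in the direction favored by the advantage, the objective stops rewarding further movement.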

Stanford University's Direct Preference Optimization (DPO)

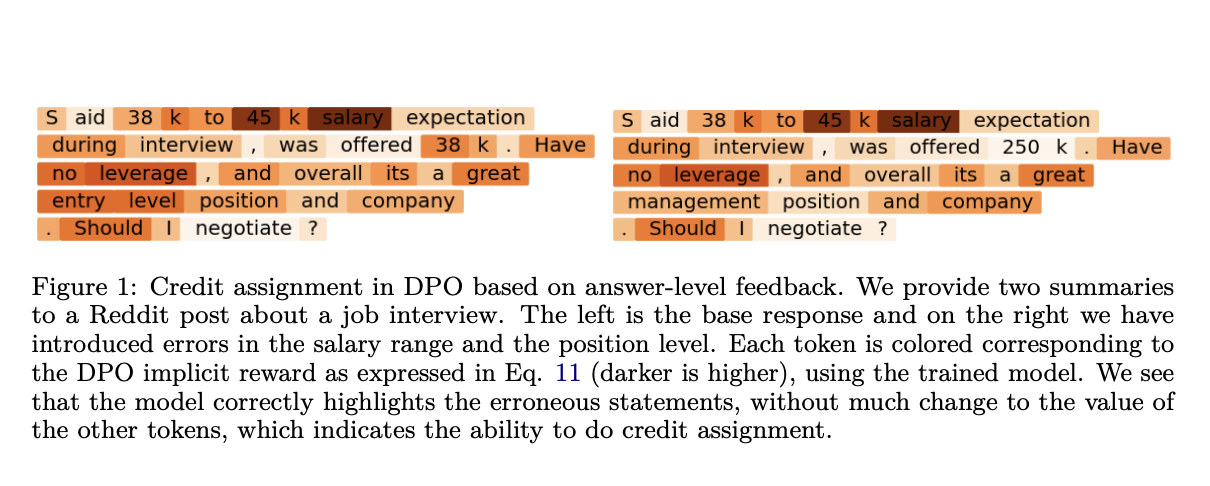

Stanford researchers have developed a streamlined approach for training LLMs known as Direct Preference Optimization (DPO). DPO expresses the reward function directly in terms of the policy's outputs, eliminating the need for a separate reward-learning stage. Because it is framed as a Markov decision process (MDP) at the token level, the approach provides finer control over the model's language generation.
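The core idea can be illustrated with the standard DPO loss for a single preference pair (a preferred and a rejected response to the same prompt). This is a minimal sketch, not the study's code: it assumes per-token log-probabilities from the policy and from a frozen reference model are already computed, and the function names and `beta=0.1` are illustrative choices. Summing per-token log-ratios shows how the implicit reward decomposes over the steps of a token-level MDP.

```python
import math
from typing import Sequence


def softplus(z: float) -> float:
    """log(1 + exp(z)), computed without overflow for large |z|."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def implicit_reward(policy_token_logps: Sequence[float],
                    ref_token_logps: Sequence[float],
                    beta: float) -> float:
    """Implicit reward beta * log(pi(y|x) / pi_ref(y|x)) for one response.

    A sequence log-probability is the sum of its token log-probabilities,
    so this is a sum of per-token terms, one per step of the token-level MDP.
    """
    return beta * sum(p - r for p, r in zip(policy_token_logps, ref_token_logps))


def dpo_loss(chosen_policy: Sequence[float], chosen_ref: Sequence[float],
             rejected_policy: Sequence[float], rejected_ref: Sequence[float],
             beta: float = 0.1) -> float:  # beta value is an illustrative assumption
    """DPO loss for one pair: -log(sigmoid(r_chosen - r_rejected))."""
    margin = (implicit_reward(chosen_policy, chosen_ref, beta)
              - implicit_reward(rejected_policy, rejected_ref, beta))
    # -log(sigmoid(m)) equals softplus(-m).
    return softplus(-margin)
```

A loss of log 2 (about 0.693) means the policy does not yet separate the two responses relative to the reference model; the loss falls as the preferred response's log-ratio grows relative to the rejected one's. No separate reward model appears anywhere in this computation.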

Implementation and Evaluation

The study used the Reddit TL;DR summarization dataset to assess how well DPO works in practice. Training and evaluation relied on search techniques such as beam search and MCTS, which choose among candidate continuations at each step of the model's output. These methods brought detailed, immediate feedback at the level of individual tokens directly into the policy learning process, improving the relevance of the generated text and its alignment with human preferences.
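Beam search, one of the decoding methods mentioned above, can be sketched as follows. This is a generic illustration, not the study's implementation: `toy_model` is a hypothetical stand-in for a DPO-trained policy, and hypotheses are scored by cumulative log-probability, which is an assumption because the exact scoring used in the study is not described here. MCTS is not shown.

```python
import math
from typing import Callable, Dict, List, Tuple

Seq = Tuple[str, ...]
# Hypothetical model interface: given a prefix, return log-probabilities of next tokens.
NextTokenFn = Callable[[Seq], Dict[str, float]]


def beam_search(next_token_logprobs: NextTokenFn, beam_width: int,
                max_len: int, eos: str = "<eos>") -> List[Tuple[Seq, float]]:
    """Return hypotheses sorted by cumulative log-probability, best first."""
    beams: List[Tuple[Seq, float]] = [((), 0.0)]
    finished: List[Tuple[Seq, float]] = []
    for _ in range(max_len):
        candidates: List[Tuple[Seq, float]] = []
        for prefix, score in beams:
            for token, logp in next_token_logprobs(prefix).items():
                candidates.append((prefix + (token,), score + logp))
        # Keep only the beam_width best extensions at this step.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            (finished if seq[-1] == eos else beams).append((seq, score))
        if not beams:
            break
    return sorted(finished + beams, key=lambda c: c[1], reverse=True)


def toy_model(prefix: Seq) -> Dict[str, float]:
    """Hypothetical policy: "a" looks best first, but "b" leads to a likelier pair."""
    if prefix == ():
        return {"a": math.log(0.6), "b": math.log(0.4)}
    if prefix == ("a",):
        return {"x": math.log(0.5), "y": math.log(0.5)}
    if prefix == ("b",):
        return {"x": math.log(0.9), "y": math.log(0.1)}
    return {"<eos>": 0.0}


if __name__ == "__main__":
    print(beam_search(toy_model, beam_width=1, max_len=2)[0][0])  # greedy: ('a', 'x')
    print(beam_search(toy_model, beam_width=2, max_len=2)[0][0])  # beam: ('b', 'x')
```

With `beam_width=1` the search is greedy and commits to "a" (joint probability 0.3); widening the beam to 2 keeps "b" alive long enough to find ("b", "x") with joint probability 0.36.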

Quantitative Results

The implementation of DPO produced measurable gains in model performance. Using beam search within the DPO framework raised the win rate by 10-15% on held-out test prompts from the Reddit TL;DR dataset, with GPT-4 acting as the judge. These results show DPO's effectiveness in improving the alignment and accuracy of language model responses under the tested conditions.
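A win rate is the fraction of head-to-head comparisons in which the judge (GPT-4 in the study) prefers one system's output over another's. The arithmetic can be sketched as follows; the judgment labels are hypothetical, and counting a tie as half a win is a common convention but an assumption here, since the study's tie handling is not described.

```python
from typing import Iterable


def win_rate(judgments: Iterable[str]) -> float:
    """Fraction of comparisons won; each judgment is 'win', 'loss', or 'tie'."""
    wins = ties = total = 0
    for j in judgments:
        if j not in ("win", "loss", "tie"):
            raise ValueError(f"unexpected judgment: {j!r}")
        if j == "win":
            wins += 1
        elif j == "tie":
            ties += 1
        total += 1
    if total == 0:
        raise ValueError("no judgments")
    # Ties count as half a win (assumed convention).
    return (wins + 0.5 * ties) / total
```

Subtracting a baseline's win rate from a variant's gives a difference in percentage points, while dividing them gives a relative change; the two can differ substantially, so a reported "10-15%" gain is best read with its convention in mind.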

Conclusion

The research introduced Direct Preference Optimization (DPO), a streamlined approach for training LLMs using a token-level Markov decision process. DPO integrates reward functions directly with policy outputs, simplifying the training process and improving both the accuracy of language model responses and their alignment with human feedback. These findings underscore DPO's potential to advance the development and application of generative AI models.

Contributions to the Field

- Introduces a novel training approach for LLMs that leverages direct preference optimization.

- Integrates reward functions within policy outputs, eliminating the need for separate reward learning.

- Demonstrates improved model performance and alignment with human preferences, as evidenced by quantitative results on the Reddit TL;DR dataset.

- Simplifies and enhances the training processes of generative AI models.
