Stanford Unveils DPO: A Breakthrough in Language Model Training with Direct Preference Optimization

Apr 21, 2024 at 01:00 pm

The convergence of reinforcement learning (RL) and large language models (LLMs) opens up new avenues in computational linguistics. LLMs are highly capable at understanding and generating text, but training them so that their responses align with human preferences remains a challenge. Direct Preference Optimization (DPO) is a streamlined approach to LLM training that eliminates the need for a separate reward-learning stage. Instead, DPO expresses the reward through the policy's own outputs, enabling finer control over language generation.

Exploring the Synergy between Reinforcement Learning and Large Language Models: Direct Preference Optimization for Enhanced Text Generation

The intersection of reinforcement learning (RL) and large language models (LLMs) has emerged as a vibrant field within computational linguistics. These models, initially trained on vast text corpora, exhibit exceptional capabilities in understanding and producing human-like language. As research progresses, the challenge lies in refining these models to effectively capture nuanced human preferences and generate responses that accurately align with specific intents.

Traditional approaches to language model training struggle with the complexity and subtlety these tasks require, which calls for methods that narrow the gap between human expectations and machine output. Reinforcement learning from human feedback (RLHF), typically implemented with algorithms such as proximal policy optimization (PPO), has been explored for aligning LLMs with human preferences. Further innovations include incorporating Monte Carlo tree search (MCTS) and diffusion models into text generation pipelines to improve the quality and adaptability of model responses.

Stanford University's Direct Preference Optimization (DPO)

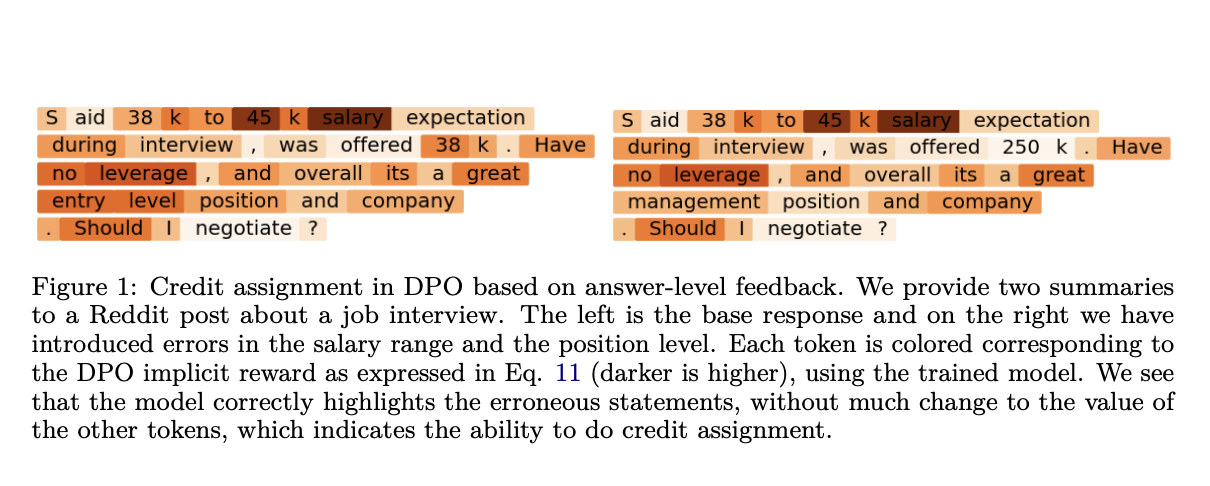

Stanford researchers have developed a streamlined approach for training LLMs known as Direct Preference Optimization (DPO). DPO builds the reward function directly into the policy's outputs, eliminating the need for a separate reward learning stage. The approach is formulated as a Markov decision process (MDP) at the token level, which provides finer control over the model's language generation.
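To make the idea of a reward built into policy outputs more concrete, here is a minimal sketch of the standard DPO objective, written per token. It assumes the commonly cited formulation in which the implicit reward is `beta` times the log-probability ratio between the trained policy and a frozen reference model. The function names, the `beta` default, and the plain-list inputs are illustrative assumptions and are not taken from the article.

```python
import math
from typing import List


def log_sigmoid(x: float) -> float:
    # Numerically stable log(sigmoid(x)), computed as -softplus(-x).
    return -(max(-x, 0.0) + math.log1p(math.exp(-abs(x))))


def implicit_token_rewards(policy_logps: List[float],
                           ref_logps: List[float],
                           beta: float) -> List[float]:
    # Assumed per-token implicit reward: beta * (log pi(token) - log pi_ref(token)).
    return [beta * (p - r) for p, r in zip(policy_logps, ref_logps)]


def dpo_loss(chosen_policy: List[float], chosen_ref: List[float],
             rejected_policy: List[float], rejected_ref: List[float],
             beta: float = 0.1) -> float:
    # Sequence-level implicit reward is the sum of the per-token rewards.
    chosen_reward = sum(implicit_token_rewards(chosen_policy, chosen_ref, beta))
    rejected_reward = sum(implicit_token_rewards(rejected_policy, rejected_ref, beta))
    # Negative log-likelihood that the preferred response wins (Bradley-Terry style).
    return -log_sigmoid(chosen_reward - rejected_reward)


# Hypothetical token log-probabilities for one preference pair.
loss_equal = dpo_loss([-1.0, -2.0], [-1.0, -2.0], [-1.5], [-1.5])
loss_better = dpo_loss([-0.5, -1.0], [-1.0, -2.0], [-2.5], [-1.5])
```

No reward model appears anywhere in this sketch: the loss depends only on log-probabilities from the policy and the reference model, which is the sense in which the separate reward-learning stage is removed. When the policy matches the reference, the loss equals log 2, and it falls as the policy shifts probability toward the preferred response.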

Implementation and Evaluation

The study used the Reddit TL;DR summarization dataset to assess the practical efficacy of DPO. Training and evaluation used search techniques such as beam search and MCTS to optimize the decision made at each token of the model's output. These methods allowed detailed, immediate feedback to be incorporated directly into the policy learning process, improving the relevance of the generated text and its alignment with human preferences.
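As an illustration of token-level search, the following is a minimal beam search sketch over a toy next-token distribution. It is not the study's implementation: `toy_model`, its vocabulary, and its probabilities are invented for the example, and a real system would query the trained policy for next-token log-probabilities instead.

```python
import math
from typing import Callable, Dict, List, Tuple

Sequence = Tuple[str, ...]


def beam_search(next_logprobs: Callable[[Sequence], Dict[str, float]],
                beam_width: int,
                max_len: int,
                eos: str = "<eos>") -> List[Tuple[Sequence, float]]:
    # Each beam entry is (tokens so far, cumulative log-probability).
    beams: List[Tuple[Sequence, float]] = [((), 0.0)]
    for _ in range(max_len):
        candidates: List[Tuple[Sequence, float]] = []
        for tokens, score in beams:
            if tokens and tokens[-1] == eos:
                # Finished sequences are carried forward unchanged.
                candidates.append((tokens, score))
                continue
            for tok, lp in next_logprobs(tokens).items():
                candidates.append((tokens + (tok,), score + lp))
        # Keep only the highest-scoring partial sequences.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
        if all(t and t[-1] == eos for t, _ in beams):
            break
    return beams


def toy_model(tokens: Sequence) -> Dict[str, float]:
    # Hypothetical distribution in which the greedy first choice is not the best overall.
    if tokens == ():
        return {"a": math.log(0.6), "b": math.log(0.4)}
    if tokens == ("a",):
        return {"x": math.log(0.5), "y": math.log(0.5)}
    if tokens == ("b",):
        return {"z": math.log(0.9), "w": math.log(0.1)}
    return {"<eos>": 0.0}


greedy = beam_search(toy_model, beam_width=1, max_len=5)
wide = beam_search(toy_model, beam_width=2, max_len=5)
```

With a beam width of 1 the search commits to the locally best first token `a` and ends with a sequence of probability 0.3, while a width of 2 keeps `b` alive long enough to find the sequence `b z` with probability 0.36. This is the basic reason wider beams can improve output quality at the cost of more computation.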

Quantitative Results

The implementation of DPO demonstrated measurable improvements in model performance. Employing beam search within the DPO framework yielded a win rate increase of 10-15% on held-out test prompts from the Reddit TL;DR dataset, as evaluated by GPT-4. These results showcase DPO's effectiveness in enhancing the alignment and accuracy of language model responses under specific test conditions.
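For readers unfamiliar with the metric, a win rate over pairwise judgments can be computed as in the sketch below. The labels are made up for illustration and are not the study's data. The `win_rate` helper and the choice to count a tie as half a win are assumptions, since the article does not describe how the judge's verdicts were aggregated.

```python
from typing import Iterable


def win_rate(judgments: Iterable[str]) -> float:
    # Each judgment is the judge's verdict for the evaluated model on one prompt.
    outcomes = list(judgments)
    if not outcomes:
        raise ValueError("at least one judgment is required")
    score = 0.0
    for outcome in outcomes:
        if outcome == "win":
            score += 1.0
        elif outcome == "tie":
            score += 0.5  # assumption: a tie counts as half a win
        elif outcome != "loss":
            raise ValueError(f"unknown judgment: {outcome!r}")
    return score / len(outcomes)


# Hypothetical verdicts on four held-out prompts.
example_rate = win_rate(["win", "loss", "tie", "win"])
```

The article does not state whether the reported 10-15% increase is in absolute percentage points or relative to the baseline win rate. The two readings can differ substantially, so the figure should be interpreted with that ambiguity in mind.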

Conclusion

The research introduced Direct Preference Optimization (DPO), a streamlined approach for training LLMs formulated as a token-level Markov decision process. DPO builds the reward function directly into the policy's outputs, simplifying the training process and improving the alignment of language model responses with human feedback. These findings underscore the potential of DPO to advance the development and application of generative AI models.

Contributions to the Field

- Introduces a novel training approach for LLMs that leverages direct preference optimization.

- Integrates reward functions within policy outputs, eliminating the need for separate reward learning.

- Demonstrates improved model performance and alignment with human preferences, as evidenced by quantitative results on the Reddit TL;DR dataset.

- Simplifies and enhances the training processes of generative AI models.
