Graph generation is a critical task in fields such as molecular design and social network analysis, because graphs model intricate relationships and structured data. Despite recent advances, many graph generative models still rely heavily on adjacency matrix representations. These representations grow quadratically with the number of nodes, so they can be computationally demanding and inflexible, which makes it hard to capture the dependencies between nodes and edges efficiently, especially for large, sparse graphs. Current approaches, including diffusion-based and auto-regressive models, struggle with scalability and accuracy, which motivates a more compact formulation.

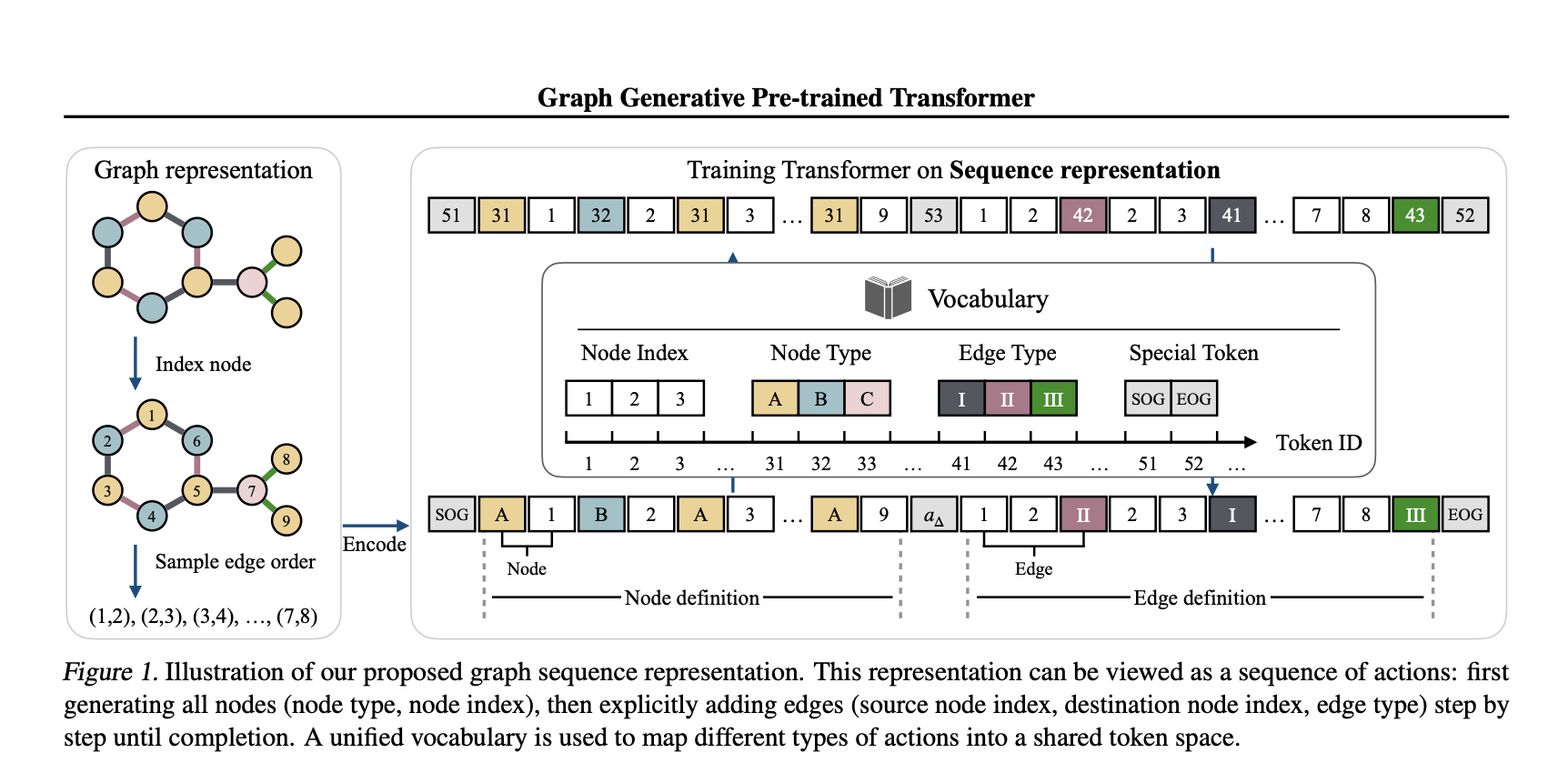

In a recent study, a team of researchers from Tufts University, Northeastern University, and Cornell University introduces the Graph Generative Pre-trained Transformer (G2PT), an auto-regressive model designed to learn graph structures through next-token prediction. Unlike traditional methods, G2PT employs a sequence-based representation of graphs, encoding nodes and edges as sequences of tokens. This approach streamlines the modeling process, making it more efficient and scalable. By leveraging a transformer decoder for token prediction, G2PT generates graphs that maintain structural integrity and flexibility. Moreover, G2PT can be readily adapted to downstream tasks, such as goal-oriented graph generation and graph property prediction, serving as a versatile tool for various applications.

Technical Insights and Benefits

G2PT introduces a sequence-based representation that decomposes a graph into node definitions and edge definitions. Node definitions specify indices and types, whereas edge definitions specify connections and labels. Unlike adjacency matrix representations, which enumerate all possible edges, this representation lists only the edges that exist, so the sequence length scales with the number of edges rather than with the square of the number of nodes. A transformer decoder models these sequences through next-token prediction, which the authors associate with gains in efficiency, scalability, and adaptability.

The researchers also explored fine-tuning methods for tasks like goal-oriented generation and graph property prediction, broadening the model’s applicability.
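The node and edge definitions described in this section can be illustrated with a small serializer. This is a minimal sketch, not the authors' tokenizer: the token names (`<bos>`, `<sep>`, `<eos>`, `node_i`), the helper names `graph_to_tokens` and `tokens_to_graph`, and the ordering of nodes and edges are all assumptions made for illustration.

```python
from typing import List, Tuple

Edge = Tuple[int, int, str]  # (source index, destination index, edge label)


def graph_to_tokens(node_types: List[str], edges: List[Edge]) -> List[str]:
    """Serialize a graph as node definitions followed by edge definitions.

    Token vocabulary and layout are hypothetical, chosen only to illustrate
    a sequence representation that lists existing edges.
    """
    tokens = ["<bos>"]
    # Node definitions: an index token followed by a type token.
    for idx, node_type in enumerate(node_types):
        tokens += [f"node_{idx}", node_type]
    tokens.append("<sep>")
    # Edge definitions: two endpoint tokens followed by a label token.
    for src, dst, label in edges:
        tokens += [f"node_{src}", f"node_{dst}", label]
    tokens.append("<eos>")
    return tokens


def tokens_to_graph(tokens: List[str]) -> Tuple[List[str], List[Edge]]:
    """Invert graph_to_tokens for well-formed sequences."""
    body = tokens[1:-1]  # drop <bos> and <eos>
    sep = body.index("<sep>")
    node_part, edge_part = body[:sep], body[sep + 1:]
    node_types = [node_part[i + 1] for i in range(0, len(node_part), 2)]
    edges = [
        (
            int(edge_part[i].split("_")[1]),
            int(edge_part[i + 1].split("_")[1]),
            edge_part[i + 2],
        )
        for i in range(0, len(edge_part), 3)
    ]
    return node_types, edges


# A three-atom chain (C-C-O) with two single bonds.
node_types = ["C", "C", "O"]
edges = [(0, 1, "single"), (1, 2, "single")]
tokens = graph_to_tokens(node_types, edges)
print(tokens)
print(tokens_to_graph(tokens) == (node_types, edges))
```

In this layout the sequence grows by two tokens per node and three per edge, whereas a dense adjacency matrix always has one entry per node pair regardless of how many edges exist.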

Experimental Results and Insights

G2PT has been evaluated on a range of datasets and tasks. In general graph generation, it matched or exceeded state-of-the-art performance across seven datasets. In molecular graph generation, G2PT achieved high validity and uniqueness scores, indicating that it captures structural details accurately. For instance, on the MOSES dataset, G2PTbase attained a validity score of 96.4% and a uniqueness score of 100%.
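To make the two metrics concrete, the sketch below computes them under one common convention: validity is the fraction of generated samples that pass a validity check, and uniqueness is the fraction of distinct samples among the valid ones. The article does not spell out the exact definitions used in the evaluation, so this convention is an assumption, and the `is_valid` and `canonical_key` callables are hypothetical stand-ins for a real chemistry toolkit.

```python
from typing import Callable, Hashable, Iterable, Tuple, TypeVar

G = TypeVar("G")


def validity_and_uniqueness(
    samples: Iterable[G],
    is_valid: Callable[[G], bool],
    canonical_key: Callable[[G], Hashable],
) -> Tuple[float, float]:
    """Return (validity, uniqueness) for a batch of generated samples."""
    samples = list(samples)
    valid = [s for s in samples if is_valid(s)]
    validity = len(valid) / len(samples) if samples else 0.0
    # Uniqueness is measured among valid samples only (assumed convention).
    distinct = {canonical_key(s) for s in valid}
    uniqueness = len(distinct) / len(valid) if valid else 0.0
    return validity, uniqueness


# Toy graphs: (number of nodes, tuple of undirected edges).
def toy_is_valid(graph) -> bool:
    n, edge_list = graph
    return all(0 <= u < n and 0 <= v < n and u != v for u, v in edge_list)


def toy_key(graph):
    # Ignores edge order and direction; does NOT detect node relabelings.
    n, edge_list = graph
    return n, frozenset(tuple(sorted(e)) for e in edge_list)


generated = [
    (3, ((0, 1), (1, 2))),
    (3, ((1, 2), (0, 1))),  # same graph, edges listed in another order
    (2, ((0, 5),)),         # invalid: endpoint 5 is out of range
    (2, ((0, 1),)),
]
validity, uniqueness = validity_and_uniqueness(generated, toy_is_valid, toy_key)
print(f"validity={validity:.2f} uniqueness={uniqueness:.2f}")
```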

In goal-oriented generation, G2PT aligned generated graphs with desired properties using fine-tuning techniques such as rejection sampling and reinforcement learning, which let the model shift its outputs toward the target properties. Similarly, in predictive tasks, G2PT's embeddings delivered competitive results across molecular property benchmarks, supporting its use for both generative and predictive tasks.
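The data-selection step behind rejection sampling can be sketched as follows: draw samples from a generator, score each one, and keep only those that meet the target. This is a minimal illustration under stated assumptions, not the authors' procedure. The toy generator `sample_graph`, the edge-count property, and the threshold are all hypothetical, and the subsequent fine-tuning on the kept samples is not shown.

```python
import random
from itertools import combinations
from typing import Callable, List, TypeVar

G = TypeVar("G")


def rejection_sample(
    sample_fn: Callable[[], G],
    property_fn: Callable[[G], float],
    accept: Callable[[float], bool],
    n_keep: int,
    max_tries: int = 10_000,
) -> List[G]:
    """Collect up to n_keep samples whose property value is accepted."""
    kept: List[G] = []
    for _ in range(max_tries):
        if len(kept) >= n_keep:
            break
        candidate = sample_fn()
        if accept(property_fn(candidate)):
            kept.append(candidate)
    return kept


# Toy stand-in for a generative model: random graphs on 5 nodes,
# where each possible edge is included with probability 0.5.
rng = random.Random(0)
ALL_PAIRS = list(combinations(range(5), 2))


def sample_graph():
    return [pair for pair in ALL_PAIRS if rng.random() < 0.5]


# Hypothetical goal: graphs with at least 6 edges.
kept = rejection_sample(sample_graph, len, lambda k: k >= 6, n_keep=20)
print(len(kept), min(len(g) for g in kept))
```

In a fine-tuning loop, the accepted samples would serve as additional training data so that the model becomes more likely to produce graphs with the desired property.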

Conclusion

The Graph Generative Pre-trained Transformer (G2PT) represents a thoughtful step forward in graph generation. By combining a sequence-based representation with transformer-based modeling, G2PT addresses many limitations of adjacency-matrix approaches. Its efficiency, scalability, and adaptability make it a useful resource for researchers and practitioners. G2PT remains sensitive to graph orderings, however, and further work on universal and expressive edge-ordering mechanisms could improve its robustness. Overall, G2PT shows how a change of representation and modeling approach can advance graph generation.
