|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Graph generation is a critical task in diverse fields like molecular design and social network analysis, owing to its capacity to model intricate relationships and structured data. Despite recent advances, many graph generative models heavily rely on adjacency matrix representations. While effective, these methods can be computationally demanding and often lack flexibility, making it challenging to efficiently capture the complex dependencies between nodes and edges, especially for large and sparse graphs. Current approaches, including diffusion-based and auto-regressive models, encounter difficulties in terms of scalability and accuracy, highlighting the need for more refined solutions.

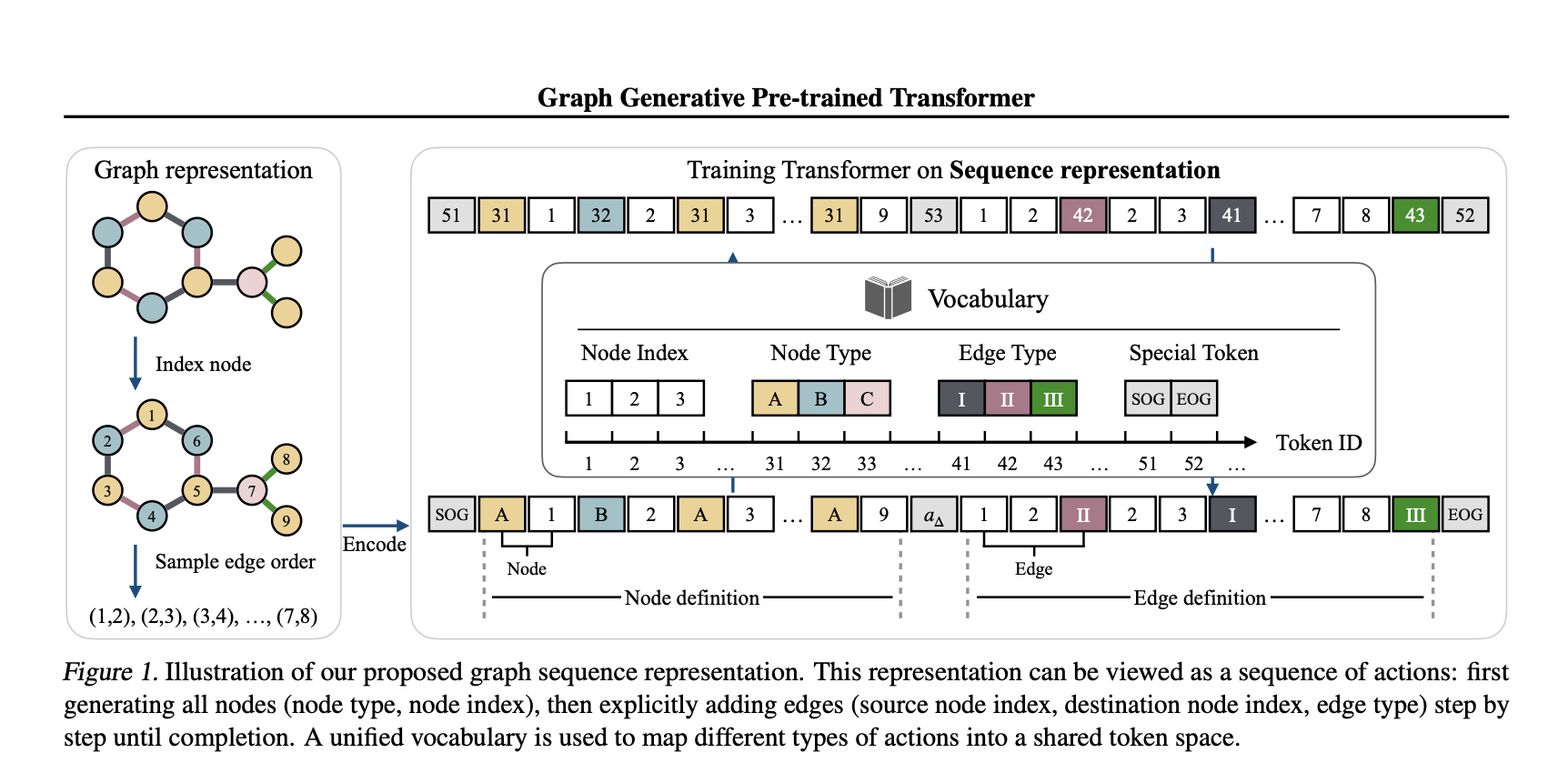

In a recent study, a team of researchers from Tufts University, Northeastern University, and Cornell University introduces the Graph Generative Pre-trained Transformer (G2PT), an auto-regressive model designed to learn graph structures through next-token prediction. Unlike traditional methods, G2PT employs a sequence-based representation of graphs, encoding nodes and edges as sequences of tokens. This approach streamlines the modeling process, making it more efficient and scalable. By leveraging a transformer decoder for token prediction, G2PT generates graphs that maintain structural integrity and flexibility. Moreover, G2PT can be readily adapted to downstream tasks, such as goal-oriented graph generation and graph property prediction, serving as a versatile tool for various applications.

Technical Insights and Benefits

G2PT introduces a novel sequence-based representation that decomposes graphs into node and edge definitions. Node definitions specify indices and types, whereas edge definitions outline connections and labels. This approach fundamentally differs from adjacency matrix representations, which focus on all possible edges, by considering only the existing edges, thereby reducing sparsity and computational complexity. The transformer decoder effectively models these sequences through next-token prediction, offering several advantages:

The researchers also explored fine-tuning methods for tasks like goal-oriented generation and graph property prediction, broadening the model’s applicability.

Experimental Results and Insights

G2PT has been evaluated on various datasets and tasks, demonstrating strong performance. In general graph generation, it matched or exceeded the state-of-the-art performance across seven datasets. In molecular graph generation, G2PT achieved high validity and uniqueness scores, reflecting its ability to accurately capture structural details. For instance, on the MOSES dataset, G2PTbase attained a validity score of 96.4% and a uniqueness score of 100%.

In a goal-oriented generation, G2PT aligned generated graphs with desired properties using fine-tuning techniques like rejection sampling and reinforcement learning. These methods enabled the model to adapt its outputs effectively. Similarly, in predictive tasks, G2PT’s embeddings delivered competitive results across molecular property benchmarks, reinforcing its suitability for both generative and predictive tasks.

Conclusion

The Graph Generative Pre-trained Transformer (G2PT) represents a thoughtful step forward in graph generation. By employing a sequence-based representation and transformer-based modeling, G2PT addresses many limitations of traditional approaches. Its combination of efficiency, scalability, and adaptability makes it a valuable resource for researchers and practitioners. While G2PT shows sensitivity to graph orderings, further exploration of universal and expressive edge-ordering mechanisms could enhance its robustness. G2PT exemplifies how innovative representations and modeling approaches can advance the field of graph generation.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 60k+ ML SubReddit.

? FREE UPCOMING AI WEBINAR (JAN 15, 2025): Boost LLM Accuracy with Synthetic Data and Evaluation Intelligence–Join this webinar to gain actionable insights into boosting LLM model performance and accuracy while safeguarding data privacy.

免责声明:info@kdj.com

所提供的信息并非交易建议。根据本文提供的信息进行的任何投资,kdj.com不承担任何责任。加密货币具有高波动性,强烈建议您深入研究后,谨慎投资!

如您认为本网站上使用的内容侵犯了您的版权,请立即联系我们(info@kdj.com),我们将及时删除。

-

- 黄金特朗普,比特币和一个雕像:有什么交易?

- 2025-09-18 18:05:16

- 华盛顿出现了一个持有比特币的黄金特朗普雕像,引发了有关加密货币,金融和特朗普的亲克赖特托立场的辩论。这是未来吗?

-

-

- Binance,CZ和Hyproliquid:加密货币的新时代?

- 2025-09-18 18:00:55

- 探索Binance的影响力,CZ的潜在回归以及超级流动性在不断发展的加密景观中的统治地位。

-

- 杜格供应,稀缺叙事和模因硬币躁狂症:有什么交易?

- 2025-09-18 18:00:30

- 探索围绕门多格供应量减少,稀缺性叙事和模因硬币市场的嗡嗡声,包括潜在的转变和竞争者。

-

-

- Schuyler Police K-9计划:挑战硬币是有理由的

- 2025-09-18 17:59:56

- 发现舒勒警察局如何使用挑战硬币来支持其K-9计划,并有可能将第二只狗添加到部队中。

-

- 通过圈子投资:价格预测更新

- 2025-09-18 17:59:26

- 超流动性(HYPE)违反了市场趋势,这是由于Circle的投资和平台增长所推动的新高度。 $ 100看吗?让我们深入研究分析。

-

- Mymonero Wallet:安全和财务自由的生命线

- 2025-09-18 17:56:43

- 发现Mymonero钱包如何超越其作为加密工具的角色,成为在挑战环境中隐私,安全和财务独立性的重要生命线。

-