
G2PT: Graph Generative Pre-trained Transformer

Jan 06, 2025 at 04:21 am

Graph generation is a critical task in fields such as molecular design and social network analysis, because graphs can model intricate relationships and structured data. Despite recent advances, many graph generative models rely heavily on adjacency matrix representations. While effective, these methods can be computationally demanding and often lack flexibility. An adjacency matrix for a graph with n nodes has n² entries, most of which are zero in a sparse graph, so it is hard to capture the complex dependencies between nodes and edges efficiently, especially for large graphs. Current approaches, including diffusion-based and auto-regressive models, struggle with both scalability and accuracy, which highlights the need for better solutions.

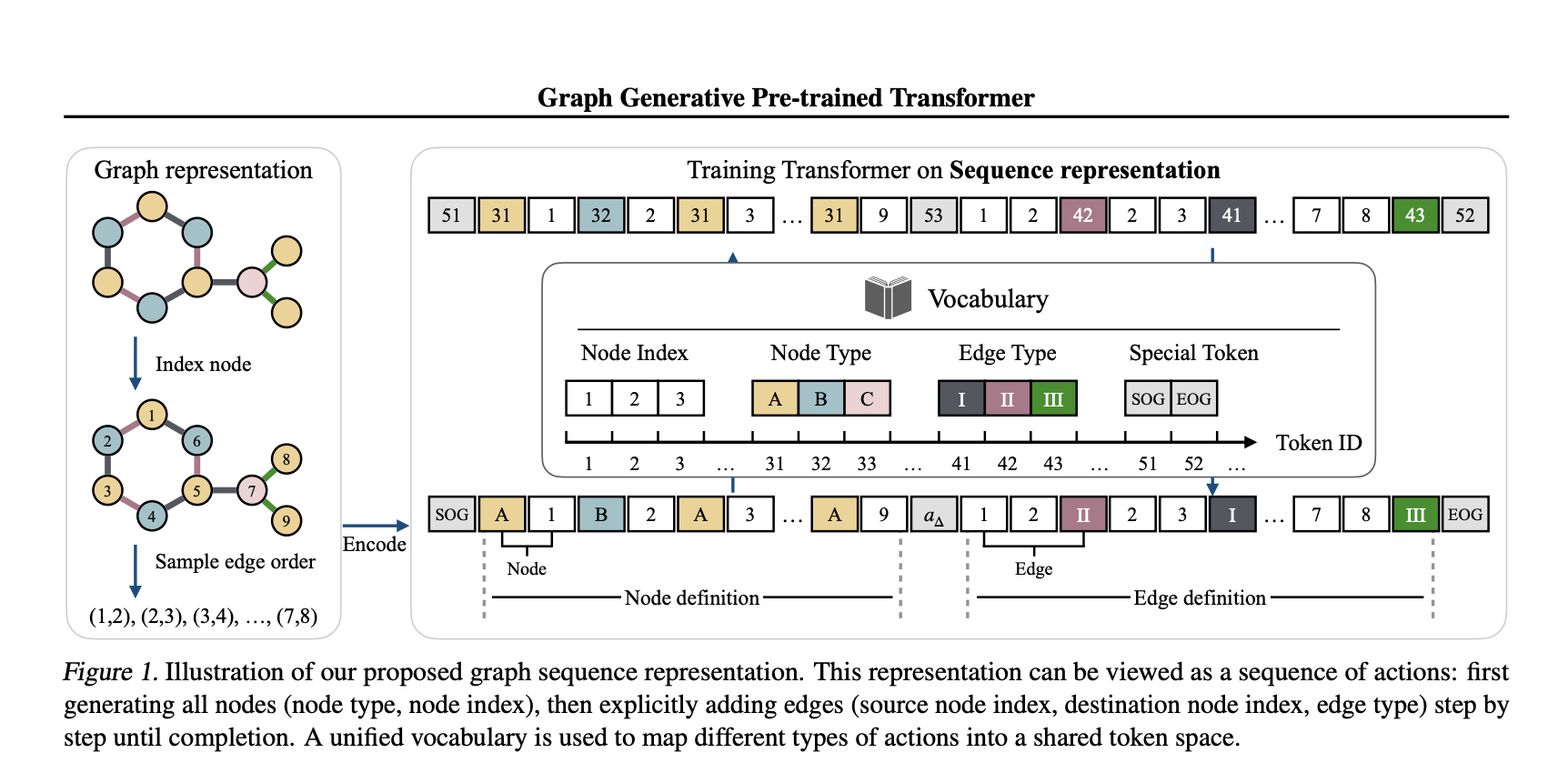

In a recent study, researchers from Tufts University, Northeastern University, and Cornell University introduced the Graph Generative Pre-trained Transformer (G2PT), an auto-regressive model that learns graph structure through next-token prediction. Unlike traditional methods, G2PT represents each graph as a sequence of tokens that encode its nodes and edges. This simplifies the modeling process and makes it more efficient and scalable. A transformer decoder predicts the tokens one at a time, producing graphs that preserve structural integrity while remaining flexible. G2PT can also be adapted to downstream tasks such as goal-oriented graph generation and graph property prediction, making it a versatile tool for a range of applications.
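To make "next-token prediction" concrete, here is a minimal sketch of an auto-regressive decoding loop. It assumes a hypothetical model interface: a callable that maps the current token prefix to a dictionary of next-token probabilities. In G2PT that role is played by a transformer decoder. The token names (`<bos>`, `<eos>`, `node`) are illustrative placeholders rather than G2PT's real vocabulary. Greedy selection is used for simplicity, and the paper's actual sampling strategy may differ.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: prefix of tokens -> {next_token: probability}.
NextTokenModel = Callable[[Sequence[str]], Dict[str, float]]


def generate(model: NextTokenModel, bos: str = "<bos>", eos: str = "<eos>",
             max_len: int = 64) -> List[str]:
    """Greedy auto-regressive decoding: repeatedly append the most likely
    next token until the end-of-sequence token appears or max_len is hit."""
    tokens = [bos]
    while len(tokens) < max_len:
        probs = model(tokens)
        next_tok = max(probs, key=probs.get)  # greedy: highest-probability token
        tokens.append(next_tok)
        if next_tok == eos:
            break
    return tokens


# Toy stand-in for a trained decoder: it replays a fixed script, one token per step.
script = ["<bos>", "node", "0", "C", "<eos>"]


def stub(prefix: Sequence[str]) -> Dict[str, float]:
    return {script[len(prefix)]: 1.0}


print(generate(stub))  # ['<bos>', 'node', '0', 'C', '<eos>']
```

Each step conditions only on the tokens generated so far. This is what lets a single decoder both generate graphs and score them token by token.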

Technical Insights and Benefits

G2PT introduces a novel sequence-based representation that decomposes a graph into node definitions and edge definitions. Node definitions specify each node's index and type; edge definitions specify which nodes are connected and the label of each connection. An adjacency matrix reserves an entry for every possible edge. This representation, by contrast, lists only the edges that actually exist, which reduces sparsity and computational cost. A transformer decoder then models these sequences through next-token prediction.
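The node-then-edge decomposition described above can be sketched as a small serializer. This is an illustrative sketch under stated assumptions. The separator tokens (`<bos>`, `<sep>`, `<eos>`) and the flat "index, type" / "source, destination, label" layout are hypothetical and are not G2PT's exact token format. The sketch does show the key property: only existing edges are emitted.

```python
from typing import Dict, List, Tuple


def graph_to_tokens(node_types: Dict[int, str],
                    edges: List[Tuple[int, int, str]]) -> List[str]:
    """Serialize a graph as node definitions followed by edge definitions.

    Separator token names are hypothetical placeholders."""
    tokens = ["<bos>"]
    # Node definitions: index then type, in ascending index order.
    for idx in sorted(node_types):
        tokens += [str(idx), node_types[idx]]
    tokens.append("<sep>")
    # Edge definitions: source, destination, label, for existing edges only.
    for src, dst, label in edges:
        tokens += [str(src), str(dst), label]
    tokens.append("<eos>")
    return tokens


# Heavy atoms of ethanol as a toy example: C-C-O.
nodes = {0: "C", 1: "C", 2: "O"}
edges = [(0, 1, "single"), (1, 2, "single")]
print(graph_to_tokens(nodes, edges))

# A sparse 1,000-node path graph: sequence length grows with |V| + |E|,
# while a dense adjacency matrix always has |V|^2 entries.
n = 1000
path_nodes = {i: "C" for i in range(n)}
path_edges = [(i, i + 1, "single") for i in range(n - 1)]
print(len(graph_to_tokens(path_nodes, path_edges)), n * n)  # 5000 1000000
```

The list order of `edges` directly determines the token sequence. That is why the choice of edge ordering matters for a model trained on such sequences.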

The researchers also explored fine-tuning methods for tasks like goal-oriented generation and graph property prediction, broadening the model’s applicability.

Experimental Results and Insights

G2PT was evaluated on a range of datasets and tasks and performed strongly. In general graph generation, it matched or exceeded state-of-the-art results on seven datasets. In molecular graph generation, it achieved high validity and uniqueness scores, reflecting its ability to capture structural detail accurately. On the MOSES dataset, for instance, G2PT_base reached 96.4% validity and 100% uniqueness.
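Validity and uniqueness are simple ratios. The sketch below uses the definitions common in MOSES-style benchmarks: validity is the share of generated samples that are valid, and uniqueness is the share of valid samples that are distinct. It assumes that validity checking and canonicalization (for example with a chemistry toolkit) have already happened upstream, with `None` marking an invalid sample. A benchmark's exact implementation, such as uniqueness measured on a fixed sample size, may differ.

```python
from typing import List, Optional, Tuple


def validity_and_uniqueness(samples: List[Optional[str]]) -> Tuple[float, float]:
    """samples: one canonical string per generated graph, or None if invalid.

    validity   = valid samples / all samples
    uniqueness = distinct valid samples / valid samples"""
    valid = [s for s in samples if s is not None]
    validity = len(valid) / len(samples) if samples else 0.0
    uniqueness = len(set(valid)) / len(valid) if valid else 0.0
    return validity, uniqueness


samples = ["CCO", "CCN", None, "CCO", "c1ccccc1"]
print(validity_and_uniqueness(samples))  # (0.8, 0.75)
```

Under these definitions, a 100% uniqueness score means that no two valid generated molecules in the evaluated set were identical.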

In goal-oriented generation, G2PT was fine-tuned with techniques such as rejection sampling and reinforcement learning to align generated graphs with desired properties, steering its outputs toward those targets. In predictive tasks, G2PT's embeddings delivered competitive results on molecular property benchmarks, supporting its use for both generative and predictive work.
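The filtering step at the heart of rejection-sampling fine-tuning can be sketched generically. The `generate` and `score` callables are hypothetical stand-ins for the pre-trained model's sampler and a property predictor, and the threshold rule is an assumption. The step that fine-tunes on the kept samples is only indicated in a comment, because the paper's exact training loop is not described here.

```python
import itertools
from typing import Callable, List


def rejection_sample(generate: Callable[[], str],
                     score: Callable[[str], float],
                     threshold: float, n_samples: int) -> List[str]:
    """Draw n_samples candidates and keep those whose property score meets
    the threshold. In a fine-tuning round, the kept samples would become
    training data, and the cycle would repeat with the updated model."""
    kept = []
    for _ in range(n_samples):
        candidate = generate()
        if score(candidate) >= threshold:
            kept.append(candidate)
    return kept


# Toy generator cycling through fixed strings; string length stands in for a property.
pool = itertools.cycle(["CC", "CCO", "CCCCO", "C"])
kept = rejection_sample(lambda: next(pool), score=len, threshold=3, n_samples=8)
print(kept)  # ['CCO', 'CCCCO', 'CCO', 'CCCCO']
```

Repeating sample, filter, and fine-tune gradually shifts the model's output distribution toward graphs with the target property.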

Conclusion

The Graph Generative Pre-trained Transformer (G2PT) is a thoughtful step forward in graph generation. By combining a sequence-based representation with transformer-based modeling, it addresses many limitations of traditional approaches. Its efficiency, scalability, and adaptability make it a valuable resource for researchers and practitioners. G2PT is, however, sensitive to the order in which graphs are serialized, and further work on universal and expressive edge-ordering mechanisms could make it more robust. Overall, G2PT shows how new representations and modeling approaches can advance graph generation.
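A quick count shows why ordering sensitivity matters: a single graph admits many token sequences. The sketch below considers only the order in which edges are listed and ignores node relabeling and edge direction. Even so, a 6-node ring such as a benzene-like cycle already has 6! = 720 distinct edge sequences. This is an illustration of the problem, not G2PT's own ordering scheme.

```python
from itertools import permutations
from math import factorial

# A 6-cycle: every node connects to the next, and the last wraps back to 0.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 0)]

# Flatten each edge ordering into a token sequence and collect the distinct ones.
sequences = {tuple(tok for edge in order for tok in edge)
             for order in permutations(edges)}

print(len(sequences), factorial(len(edges)))  # 720 720
```

A model trained on one ordering convention sees only a small slice of these equivalent sequences. It may therefore assign different likelihoods to serializations of the same graph.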
