
Introducing the Chain-of-Experts (CoE) Approach: A New Paradigm for Sparse Neural Networks

Mar 04, 2025 at 01:57 pm

Large language models have significantly advanced our understanding of artificial intelligence, yet scaling these models efficiently remains challenging.

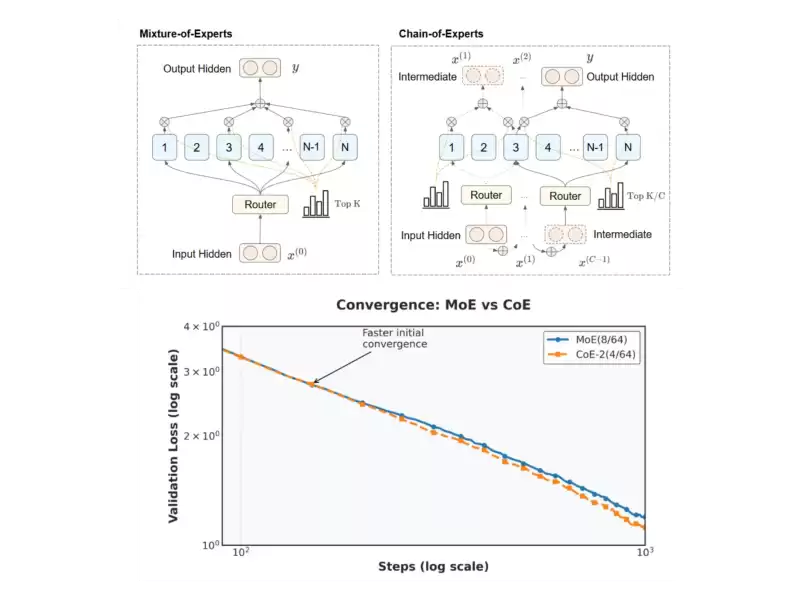

Large language models (LLMs) have reshaped how we think about artificial intelligence (AI), yet scaling them efficiently remains a critical challenge. Traditional Mixture-of-Experts (MoE) architectures activate only a subset of experts per token in order to save computation. This design has two main drawbacks. First, experts process tokens in complete isolation: each expert works without any communication with the others, which may limit the model’s ability to integrate different perspectives during processing. Second, although activation is sparse, MoE models still require considerable memory, because the parameters of every expert must be stored even if only a few experts are used for any given token. So while MoE models are a step forward in scalability, their design may limit both performance and resource efficiency.
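The gap between what a sparse layer stores and what it actually uses for one token can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name `moe_param_counts` and the figure of one million parameters per expert are assumptions, not values from the research.

```python
def moe_param_counts(num_experts: int, top_k: int, params_per_expert: int) -> dict:
    """Return stored vs. active expert parameters for one sparse MoE layer."""
    if not 0 < top_k <= num_experts:
        raise ValueError("top_k must be between 1 and num_experts")
    stored = num_experts * params_per_expert  # every expert must sit in memory
    active = top_k * params_per_expert        # only these are used for one token
    return {
        "stored": stored,
        "active": active,
        "active_fraction": active / stored,
    }


# Hypothetical sizes: 64 experts, 8 active per token, 1M parameters each.
counts = moe_param_counts(num_experts=64, top_k=8, params_per_expert=1_000_000)
print(counts)
```

With these assumed sizes, only one eighth of the stored expert parameters take part in processing any single token, while the memory cost is set by all 64 experts.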

Chain-of-Experts (CoE)

Chain-of-Experts (CoE) offers a fresh perspective on MoE architectures by introducing a mechanism for sequential communication among experts. Unlike the independent processing seen in traditional MoE models, CoE allows tokens to be processed in a series of iterations within each layer. In this arrangement, the output of one iteration serves as the input for the next, creating a communicative chain that enables experts to build upon one another’s work. This sequential interaction does not simply stack layers; it facilitates a more integrated approach to token processing, where each expert refines the token’s representation based on previous outputs. The goal is to make more efficient use of memory, since the same pool of experts is reused across iterations.

Technical Details and Benefits

At the heart of the CoE method is an iterative process that redefines how experts interact. For instance, consider a configuration described as CoE-2(4/64): the model operates with two iterations per token, with four experts selected from a pool of 64 at each cycle. This contrasts with traditional MoE, which uses a single pass through a pre-selected group of experts.
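The notation can be unpacked mechanically. The following sketch assumes the label format `CoE-<iterations>(<experts per iteration>/<pool size>)` as described above; the names `CoEConfig` and `parse_coe` are hypothetical helpers introduced here for illustration.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class CoEConfig:
    iterations: int             # sequential passes per token within a layer
    experts_per_iteration: int  # experts selected at each pass
    pool_size: int              # experts available in the layer

    @property
    def expert_calls_per_token(self) -> int:
        """Expert evaluations one token triggers in one layer."""
        return self.iterations * self.experts_per_iteration


def parse_coe(label: str) -> CoEConfig:
    """Parse a label such as 'CoE-2(4/64)' into its three numbers."""
    match = re.fullmatch(r"CoE-(\d+)\((\d+)/(\d+)\)", label)
    if match is None:
        raise ValueError(f"unrecognized configuration label: {label!r}")
    iterations, per_iteration, pool = map(int, match.groups())
    return CoEConfig(iterations, per_iteration, pool)


chained = parse_coe("CoE-2(4/64)")
single = parse_coe("CoE-1(8/64)")
print(chained.expert_calls_per_token, single.expert_calls_per_token)  # 8 8
```

Both labels lead to eight expert evaluations per token per layer, which is why the two setups can be compared under a similar compute budget.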

Another key technical element in CoE is the independent gating mechanism. In conventional MoE models, the gating function decides which experts should process a token, and this decision is made once per token per layer. CoE takes this a step further by making a separate gating decision at each iteration, so the experts chosen in a later iteration can depend on the output of earlier ones. This flexibility encourages a form of specialization, as an expert can adjust its processing based on the information received from earlier iterations.

Furthermore, CoE uses inner residual connections. Instead of adding the original token back only once, after the entire sequence of processing (an outer residual connection), CoE applies a residual connection within each iteration. This design helps to preserve the token’s information while allowing for incremental improvements at every step.
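The three ingredients described above (iterations, per-iteration gating and inner residuals) can be put together in a small toy layer. This is a minimal sketch under stated assumptions, not the researchers' implementation: the class `ToyCoELayer` is hypothetical, experts are plain linear maps rather than feed-forward networks, and gate weights are a softmax over the selected experts' scores.

```python
import math
import random


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rng, rows, cols, scale):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class ToyCoELayer:
    """Toy layer (assumption): linear experts, one router per iteration."""

    def __init__(self, dim, pool_size, top_k, iterations, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One shared pool of experts, reused by every iteration.
        self.experts = [random_matrix(rng, dim, dim, 0.1) for _ in range(pool_size)]
        # Independent gating: a separate router for each iteration.
        self.routers = [random_matrix(rng, pool_size, dim, 1.0) for _ in range(iterations)]

    def forward(self, x):
        hidden = list(x)
        chosen_per_iteration = []
        for router in self.routers:
            # Routing looks at the current hidden state, not the original token.
            scores = matvec(router, hidden)
            ranked = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
            chosen = ranked[: self.top_k]
            gates = softmax([scores[i] for i in chosen])
            update = [0.0] * len(hidden)
            for gate, index in zip(gates, chosen):
                expert_out = matvec(self.experts[index], hidden)
                update = [u + gate * o for u, o in zip(update, expert_out)]
            # Inner residual: this iteration's input is added back right away.
            hidden = [h + u for h, u in zip(hidden, update)]
            chosen_per_iteration.append(chosen)
        return hidden, chosen_per_iteration


layer = ToyCoELayer(dim=8, pool_size=64, top_k=4, iterations=2)
output, chosen = layer.forward([0.5] * 8)
print(len(output), [len(c) for c in chosen])  # 8 [4, 4]
```

Because the second router scores the hidden state produced by the first iteration, its choice of experts can differ from the first, which is the communication a single-pass MoE layer lacks.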

These technical innovations combine to create a model that aims to retain performance with fewer resources and provides a more nuanced processing pathway, which could be valuable for tasks requiring layered reasoning.

Experimental Results and Insights

Preliminary experiments, such as pretraining on math-related tasks, show promise for the Chain-of-Experts method. In a configuration denoted as CoE-2(4/64), two iterations of four experts from a pool of 64 were used in each layer. Compared with traditional MoE operating under the same computational constraints, CoE-2(4/64) achieved a lower validation loss (1.12 vs. 1.20) without any increase in memory or computational cost.

The researchers also varied the configurations of Chain-of-Experts and compared them with traditional Mixture-of-Experts (MoE) models. For example, they tested CoE-2(4/64), CoE-1(8/64), and MoE(8) models, all operating within similar computational and memory footprints. Their findings showed that increasing the iteration count in Chain-of-Experts yielded benefits comparable to or even better than increasing the number of experts selected in a single pass. Even when the models were deployed on the same hardware and subjected to the same computational constraints, Chain-of-Experts demonstrated an advantage in terms of both performance and resource utilization.

In one experiment, a single layer of MoE with eight experts was compared with two layers of Chain-of-Experts, each selecting four experts. Despite having fewer experts in each layer, Chain-of-Experts achieved better performance. Moreover, when varying the experts' capacity (output dimension) while keeping the total parameters constant, Chain-of-Experts configurations showed up to an 18% reduction in memory usage while realizing similar or slightly better performance.

Another key finding was the dramatic increase in the number of possible expert combinations. With two iterations of four experts from a pool of 64, there were 3.8 × 10¹⁰⁴ different expert combinations in a single layer of Chain-of-Experts. In contrast, a single layer of MoE with eight experts had only 2.2 × 10⁴² combinations.
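For a rough feel of why chaining widens the selection space, one simple way to count is with binomial coefficients: choose 8 of 64 experts once, versus choose 4 of 64 twice in sequence. The sketch below assumes exactly that convention (unordered choice within an iteration, ordered iterations); the function names are hypothetical. Under this convention the totals come out far smaller than the figures quoted above, so those presumably rest on a different counting method that the article does not state.

```python
from math import comb


def single_pass_selections(pool_size: int, top_k: int) -> int:
    """Ways to pick top_k distinct experts from the pool in one pass."""
    return comb(pool_size, top_k)


def chained_selections(pool_size: int, top_k: int, iterations: int) -> int:
    """Ways to pick top_k experts independently at each of several iterations."""
    return comb(pool_size, top_k) ** iterations


single_pass = single_pass_selections(64, 8)
chained = chained_selections(64, 4, 2)
print(single_pass)  # 4426165368
print(chained)      # 403702661376
print(chained / single_pass)  # roughly 91 times more selections
```

Even under this conservative count, two iterations of four experts allow roughly two orders of magnitude more selections than a single pass of eight, at the same number of expert evaluations.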

