Large language models (LLMs) have revolutionized our understanding of artificial intelligence (AI), yet scaling these models efficiently remains a critical challenge. Traditional Mixture-of-Experts (MoE) architectures economize on computation by activating only a subset of experts for each token. This design, however, leads to two main issues. First, experts process tokens in complete isolation: each expert performs its task without any communication with the others, which may limit the model's ability to integrate diverse perspectives during processing. Second, although MoE models use a sparse activation pattern, they still require considerable memory, because the parameters of every expert must be kept in memory even though only a few experts are active for any given token. These observations suggest that while MoE models are a step forward in scalability, their design may limit both performance and resource efficiency.
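The memory cost of sparse activation can be made concrete with a back-of-the-envelope count for one MoE layer. This is a minimal sketch under hypothetical assumptions: the sizes (`d_model = 1024`, `d_ff = 4096`, 64 experts, top-8 routing) are illustrative values, not figures from the article, and each expert is assumed to be a bias-free two-matrix feed-forward block.

```python
# Back-of-the-envelope parameter count for one MoE layer.
# All sizes are hypothetical; each expert is assumed to be a bias-free
# feed-forward block: d_model -> d_ff -> d_model.


def expert_params(d_model: int, d_ff: int) -> int:
    """Weights in one expert: an up-projection plus a down-projection."""
    return 2 * d_model * d_ff


def moe_layer_params(num_experts: int, top_k: int,
                     d_model: int, d_ff: int) -> tuple[int, int]:
    """Return (parameters held in memory, parameters used per token)."""
    per_expert = expert_params(d_model, d_ff)
    stored = num_experts * per_expert  # every expert must stay resident
    active = top_k * per_expert        # only the routed experts do work
    return stored, active


if __name__ == "__main__":
    stored, active = moe_layer_params(num_experts=64, top_k=8,
                                      d_model=1024, d_ff=4096)
    # Prints: stored: 536,870,912  active per token: 67,108,864  ratio: 8x
    print(f"stored: {stored:,}  active per token: {active:,}  "
          f"ratio: {stored // active}x")
```

Compute scales with the 8 active experts, but memory scales with all 64, which is the gap the paragraph above describes.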

Chain-of-Experts (CoE)

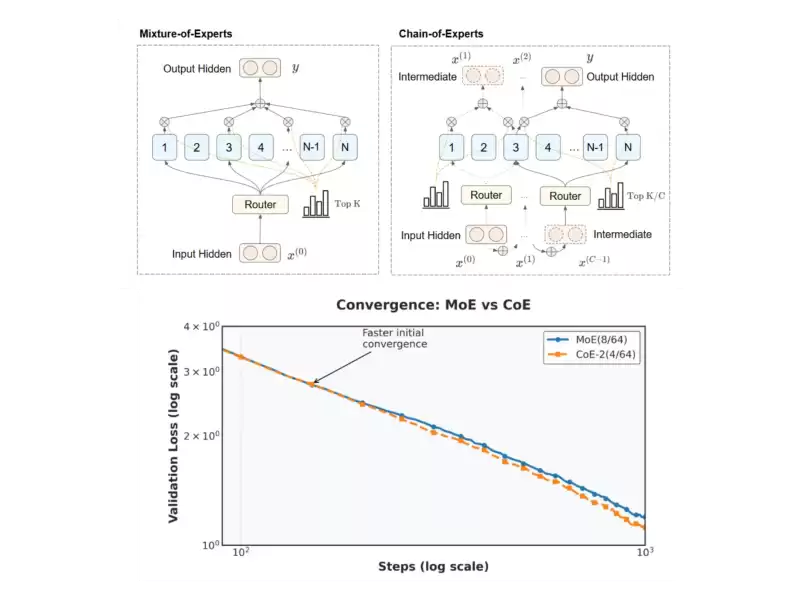

Chain-of-Experts (CoE) offers a fresh perspective on MoE architectures by introducing sequential communication among experts. Unlike the independent processing of traditional MoE models, CoE processes each token over a series of iterations within each layer. The output of one iteration's experts serves as the input to the next, forming a communicative chain in which experts build on one another's work. This is not simply stacking more layers: the iterations draw on the same pool of experts within the layer, and each pass refines the token's representation based on previous outputs. The goal is to use memory more efficiently.
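The difference in dataflow can be sketched with toy experts. In this hypothetical example each expert is just a Python function on a list of floats, routing is fixed rather than learned, and outputs are averaged as a stand-in for gate-weighted summation, so the sketch only shows what each expert reads as input: in the MoE path every selected expert reads the same token, while in the CoE path each iteration reads the previous iteration's output.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def combine(outputs: Sequence[Vector]) -> Vector:
    """Average expert outputs (a stand-in for gate-weighted summation)."""
    n = len(outputs)
    return [sum(vals) / n for vals in zip(*outputs)]


def moe_step(x: Vector, experts: Sequence[Expert]) -> Vector:
    """Traditional MoE: every selected expert reads the same input x."""
    return combine([expert(x) for expert in experts])


def coe_step(x: Vector, experts_per_iter: Sequence[Sequence[Expert]]) -> Vector:
    """Chain of experts: each iteration reads the previous iteration's output."""
    h = x
    for experts in experts_per_iter:
        h = combine([expert(h) for expert in experts])
    return h


# Hypothetical toy experts.
def double(v: Vector) -> Vector:
    return [2 * t for t in v]


def square(v: Vector) -> Vector:
    return [t * t for t in v]


if __name__ == "__main__":
    x = [1.0, 2.0]
    print(moe_step(x, [double, square]))      # [1.5, 4.0]: both experts read x
    print(coe_step(x, [[double], [square]]))  # [4.0, 16.0]: square reads double's output
    print(coe_step(x, [[square], [double]]))  # [2.0, 8.0]: the order of the chain matters
```

Because later experts see earlier outputs, the chain computes compositions of experts rather than a mix of independent opinions.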

Technical Details and Benefits

At the heart of the CoE method is an iterative process that redefines how experts interact. Consider a configuration denoted CoE-2(4/64): each token passes through two iterations per layer, and in each iteration four experts are selected from a pool of 64. Traditional MoE, by contrast, makes a single pass through the experts its router selects.
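The CoE-C(K/N) notation can be unpacked with a little arithmetic. The helper below is hypothetical (the regex, `CoEConfig`, and `expert_calls_per_token` are names invented for this sketch, not part of any published code): it reads the iteration count C, the experts per iteration K, and the pool size N, and counts how many expert forward passes one token triggers in a layer. The sketch also assumes that CoE-1(8/64), with a single iteration, behaves like an ordinary top-8 MoE.

```python
import re
from dataclasses import dataclass

# Hypothetical pattern for names such as "CoE-2(4/64)".
_COE_PATTERN = re.compile(r"CoE-(\d+)\((\d+)/(\d+)\)")


@dataclass(frozen=True)
class CoEConfig:
    iterations: int        # C: sequential passes per layer
    experts_per_iter: int  # K: experts selected in each pass
    pool_size: int         # N: experts available in the layer

    def expert_calls_per_token(self) -> int:
        """Expert forward passes a single token triggers in one layer."""
        return self.iterations * self.experts_per_iter


def parse_coe(name: str) -> CoEConfig:
    """Parse a string like 'CoE-2(4/64)' into a CoEConfig."""
    match = _COE_PATTERN.fullmatch(name.strip())
    if match is None:
        raise ValueError(f"not a CoE-C(K/N) name: {name!r}")
    c, k, n = (int(g) for g in match.groups())
    if k > n:
        raise ValueError("cannot select more experts than the pool holds")
    return CoEConfig(c, k, n)


if __name__ == "__main__":
    coe = parse_coe("CoE-2(4/64)")
    single_pass = parse_coe("CoE-1(8/64)")  # assumed equivalent to top-8 MoE
    print(coe.expert_calls_per_token(), single_pass.expert_calls_per_token())  # 8 8
```

Under these assumptions, CoE-2(4/64) and a top-8 single pass both run 8 expert calls per token per layer, which is why the two can be compared at matched compute.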

Another key technical element in CoE is the independent gating mechanism. In conventional MoE models, the gating function decides which experts should process a token, and this decision is made once per token per layer. CoE goes a step further: the gating decision is made independently at each iteration, so the experts chosen in a later iteration can depend on what earlier iterations produced. This flexibility encourages a form of specialization, as experts can be selected based on the information received from earlier iterations.
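Per-iteration routing can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: each iteration owns its own gate (here a plain list of weight vectors, one per expert), scores are dot products passed through a softmax, the top-k experts are kept and their weights renormalized, and residual connections are left out to keep the routing visible. The experts and gate values are hypothetical and chosen so that the two iterations pick different experts.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]
Gate = Sequence[Sequence[float]]  # one weight vector per expert


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(h: Sequence[float], gate: Gate, k: int) -> List[Tuple[int, float]]:
    """Top-k routing for one iteration; selected weights are renormalized."""
    logits = [sum(w_i * h_i for w_i, h_i in zip(w, h)) for w in gate]
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def coe_layer(x: Vector, gates: Sequence[Gate], experts: Sequence[Expert],
              k: int) -> Tuple[Vector, List[List[int]]]:
    """One CoE layer with an independent gate per iteration (no residuals)."""
    h = list(x)
    chosen: List[List[int]] = []
    for gate in gates:                 # a separate gate for every iteration
        selection = route(h, gate, k)  # re-route on the current hidden state
        chosen.append([i for i, _ in selection])
        mixed = [0.0] * len(h)
        for i, weight in selection:
            out = experts[i](h)
            mixed = [m + weight * o for m, o in zip(mixed, out)]
        h = mixed                      # the next iteration reads this
    return h, chosen


# Hypothetical toy experts and gates for a 2-dimensional hidden state.
def swap(v: Vector) -> Vector:
    return [v[1], v[0]]


def double(v: Vector) -> Vector:
    return [2 * t for t in v]


def negate(v: Vector) -> Vector:
    return [-t for t in v]


def halve(v: Vector) -> Vector:
    return [t / 2 for t in v]


EXPERTS = [swap, double, negate, halve]
GATE_1 = [[1, 0], [0, 1], [-1, 0], [0, -1]]
GATE_2 = [[0, -1], [0, 1], [1, 0], [-1, 0]]

if __name__ == "__main__":
    h, chosen = coe_layer([2.0, 0.5], [GATE_1, GATE_2], EXPERTS, k=1)
    print(chosen, h)  # [[0], [1]] [1.0, 4.0]
```

Iteration 1 routes the token to `swap`; iteration 2 scores the swapped state with its own gate and routes it to `double`, a choice that depends on the first iteration's output.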

CoE also uses inner residual connections. Instead of adding the original token back only once, after the entire sequence of processing (an outer residual connection), CoE applies a residual connection within each iteration. This design helps preserve the token's information while allowing incremental improvements at every step.
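The two residual placements are easiest to compare side by side. In this sketch each `step` is a hypothetical placeholder for one iteration's gated expert mixture (any function on vectors works): the outer variant adds the input back once after all iterations, while the inner variant, which the article attributes to CoE, adds each iteration's input back before the next iteration begins.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Step = Callable[[Vector], Vector]  # placeholder for one iteration's expert mixture


def add(a: Sequence[float], b: Sequence[float]) -> Vector:
    return [x + y for x, y in zip(a, b)]


def outer_residual(x: Vector, steps: Sequence[Step]) -> Vector:
    """x + f_C(...f_2(f_1(x))): the input is added back once, after the chain."""
    h = x
    for f in steps:
        h = f(h)
    return add(x, h)


def inner_residual(x: Vector, steps: Sequence[Step]) -> Vector:
    """h_t = h_{t-1} + f_t(h_{t-1}): every iteration keeps its own input."""
    h = x
    for f in steps:
        h = add(h, f(h))
    return h


def double(v: Vector) -> Vector:
    return [2 * t for t in v]


if __name__ == "__main__":
    print(outer_residual([1.0], [double, double]))  # [5.0]
    print(inner_residual([1.0], [double, double]))  # [9.0]
```

With a single iteration the two variants coincide; they diverge only once there is a chain, where the inner form lets each step build on a representation that still carries its own input.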

These innovations combine to create a model that aims to retain performance with fewer resources while providing a more nuanced processing pathway, which could be valuable for tasks that require layered reasoning.

Experimental Results and Insights

Preliminary experiments, such as pretraining on math-related tasks, show promise for the Chain-of-Experts method. In a configuration denoted as CoE-2(4/64), two iterations of four experts from a pool of 64 were used in each layer. Compared with traditional MoE operating under the same computational constraints, CoE-2(4/64) achieved a lower validation loss (1.12 vs. 1.20) without any increase in memory or computational cost.

The researchers also varied the configurations of Chain-of-Experts and compared them with traditional MoE models. For example, they tested CoE-2(4/64), CoE-1(8/64), and MoE(8) models, all with similar computational and memory footprints. Their findings showed that increasing the iteration count in Chain-of-Experts yielded benefits comparable to, or even better than, increasing the number of experts selected in a single pass. When the models were run on the same hardware under the same computational constraints, Chain-of-Experts showed an advantage in both performance and resource utilization.

In one experiment, a single layer of MoE with eight experts was compared with two layers of Chain-of-Experts, each selecting four experts. Despite selecting fewer experts in each layer, Chain-of-Experts achieved better performance. Moreover, when the experts' capacity (output dimension) was varied while the total parameter count was held constant, Chain-of-Experts configurations showed up to an 18% reduction in memory usage with similar or slightly better performance.

Another key finding was the dramatic increase in the number of possible expert combinations. With two iterations of four experts from a pool of 64, there were 3.8 × 10¹⁰⁴ different expert combinations in a single layer of Chain-of-Experts. In contrast, a single layer of MoE with eight experts had only 2.2 × 10⁴² combinations.
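Why iterations multiply the routing options can be illustrated with a simple count. The sketch assumes one particular convention for a single layer: the experts within an iteration form an unordered set, the iterations form an ordered sequence, and the same set may recur across iterations. The article does not state the convention behind its figures, so this count is not expected to reproduce them; it only shows the direction of the effect, with two draws of 4 from 64 giving roughly 91 times as many per-layer routing paths as one draw of 8 from 64.

```python
from math import comb


def coe_routing_paths(iterations: int, k: int, n: int) -> int:
    """Per-layer routing paths under the assumed convention: an unordered
    choice of k of n experts at each iteration, iterations ordered,
    repeats across iterations allowed."""
    return comb(n, k) ** iterations


def moe_routing_sets(k: int, n: int) -> int:
    """Distinct expert sets for a single top-k pass over n experts."""
    return comb(n, k)


if __name__ == "__main__":
    coe = coe_routing_paths(iterations=2, k=4, n=64)
    moe = moe_routing_sets(k=8, n=64)
    print(f"CoE-2(4/64): {coe:.2e}  MoE(8/64): {moe:.2e}  ratio: {coe / moe:.1f}")
```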
