Michael D. Kats

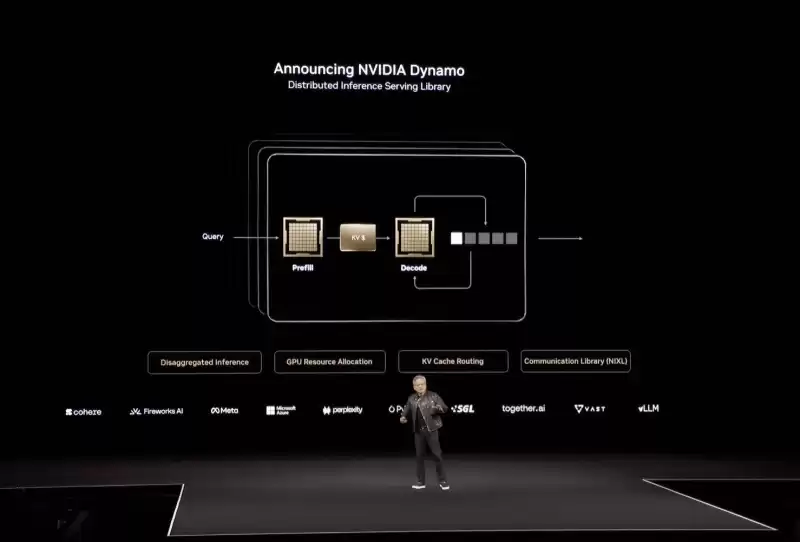

Nvidia's gargantuan Blackwell Ultra and upcoming Vera and Rubin CPUs and GPUs have certainly grabbed plenty of headlines at the corp's GPU Technology Conference this week. But arguably one of the most important announcements of the annual developer event wasn't a chip at all but rather a software framework called Dynamo, designed to tackle the challenges of AI inference at scale.

Announced on stage at GTC, it was described by CEO Jensen Huang as the "operating system of an AI factory," and drew comparisons to the real-world dynamo that kicked off an industrial revolution. "The dynamo was the first instrument that started the last industrial revolution," the chief exec said. "The industrial revolution of energy — water comes in, electricity comes out."

At its heart, the open source inference suite is designed to better optimize inference engines such as TensorRT LLM, SGLang, and vLLM to run across large quantities of GPUs as quickly and efficiently as possible.

As we've previously discussed, the faster and cheaper you can turn out token after token from a model, the better the experience for users.

Inference is harder than it looks

At a high level, LLM output performance can be broken into two broad categories: Prefill and decode. Prefill is dictated by how quickly the GPU's floating-point matrix math accelerators can process the input prompt. The longer the prompt — say, a summarization task — the longer this typically takes.

Decode, on the other hand, is what most people associate with LLM performance, and equates to how quickly the GPUs can produce the actual tokens as a response to the user's prompt.

So long as your GPU has enough memory to fit the model, decode performance is usually a function of how fast that memory is and how many tokens you're generating. A GPU with 8TB/s of memory bandwidth will churn out tokens more than twice as fast as one with 3.35TB/s.
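The bandwidth comparison above can be checked with a back-of-the-envelope estimate. The sketch below assumes decode is purely memory-bandwidth bound and that every generated token requires streaming all of the model's weights from memory once; the 70GB model size is a hypothetical figure, and real systems are also affected by batching, KV cache reads, and compute limits.

```python
def decode_tokens_per_second(bandwidth_tb_s: float, model_size_gb: float) -> float:
    """Rough upper bound on single-stream decode rate.

    Assumption: each token needs one full pass over the weights, so the rate
    is memory bandwidth divided by model size. Batching, KV cache traffic,
    and compute limits are ignored.
    """
    bytes_per_second = bandwidth_tb_s * 1e12
    bytes_per_token = model_size_gb * 1e9
    return bytes_per_second / bytes_per_token


MODEL_SIZE_GB = 70.0  # hypothetical model footprint, for illustration only

fast = decode_tokens_per_second(8.0, MODEL_SIZE_GB)
slow = decode_tokens_per_second(3.35, MODEL_SIZE_GB)
speedup = fast / slow

print(f"8TB/s:    {fast:.1f} tokens/s")
print(f"3.35TB/s: {slow:.1f} tokens/s")
print(f"ratio:    {speedup:.2f}x")
```

Under this simple model the ratio depends only on the two bandwidth figures, which is why the gap stays above 2x whatever model size is plugged in.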

Where things start to get complicated is when you start looking at serving up larger models to more people with longer input and output sequences, like you might see in an AI research assistant or reasoning model.

Large models are typically distributed across multiple GPUs, and the way this is accomplished can have a major impact on performance and throughput, something Huang discussed at length during his keynote.

"Under the Pareto frontier are millions of points we could have configured the datacenter to do. We could have parallelized and split the work and sharded the work in a whole lot of different ways," he said.

What he means is, depending on your model's parallelism you might be able to serve millions of concurrent users but only at 10 tokens a second each. Meanwhile another combination might only be able to serve a few thousand concurrent requests but generate hundreds of tokens in the blink of an eye.

According to Huang, if you can figure out where on this curve your workload delivers the ideal mix of individual performance while also achieving the maximum throughput possible, you'll be able to charge a premium for your service and also drive down operating costs. We imagine this is the balancing act at least some LLM providers perform when scaling up their generative applications and services to more and more customers.
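To make the trade-off concrete, here is a minimal sketch of picking out the Pareto frontier from a handful of candidate configurations. The configuration names and numbers are invented for illustration and are not Nvidia's figures; a configuration is kept only if no other one is at least as good on both per-user speed and total throughput.

```python
from typing import NamedTuple


class Config(NamedTuple):
    name: str
    tokens_per_user: float     # tokens/s seen by each individual user
    total_tokens_per_s: float  # aggregate tokens/s across all users


def pareto_frontier(configs):
    """Return the configs that are not dominated by any other config."""
    frontier = []
    for c in configs:
        dominated = any(
            o.tokens_per_user >= c.tokens_per_user
            and o.total_tokens_per_s >= c.total_tokens_per_s
            and (
                o.tokens_per_user > c.tokens_per_user
                or o.total_tokens_per_s > c.total_tokens_per_s
            )
            for o in configs
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.tokens_per_user)


# Hypothetical parallelism setups, not measured data.
candidates = [
    Config("A", tokens_per_user=10, total_tokens_per_s=1_000_000),
    Config("B", tokens_per_user=50, total_tokens_per_s=600_000),
    Config("C", tokens_per_user=40, total_tokens_per_s=500_000),
    Config("D", tokens_per_user=300, total_tokens_per_s=90_000),
    Config("E", tokens_per_user=200, total_tokens_per_s=80_000),
]

frontier = pareto_frontier(candidates)
frontier_names = [c.name for c in frontier]
print(frontier_names)
```

Configurations C and E drop out because B and D beat them on both axes; choosing among the remaining points is the business decision Huang describes.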

Cranking the Dynamo

Finding this happy medium between performance and throughput is one of the key capabilities offered by Dynamo, we're told.

In addition to providing users with insights as to what the ideal mix of expert, pipeline, or tensor parallelism might be, Dynamo disaggregates prefill and decode onto different accelerators.

According to Nvidia, a GPU planner within Dynamo determines how many accelerators should be dedicated to prefill and decode based on demand.
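Nvidia hasn't detailed the planner's logic here, so the following is only a sketch of one way a demand-based split could work, not Dynamo's actual algorithm. It assumes demand is measured as incoming prompt tokens and generated tokens per second, and that each GPU has a known per-phase processing rate; the function name and all parameters are hypothetical.

```python
def plan_gpu_split(
    total_gpus: int,
    prompt_tokens_per_s: float,
    output_tokens_per_s: float,
    prefill_rate_per_gpu: float,
    decode_rate_per_gpu: float,
) -> tuple[int, int]:
    """Split GPUs between prefill and decode in proportion to estimated need.

    Hypothetical heuristic: need = demand / per-GPU rate for each phase.
    Each phase always keeps at least one GPU.
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU per phase")

    prefill_need = prompt_tokens_per_s / prefill_rate_per_gpu
    decode_need = output_tokens_per_s / decode_rate_per_gpu
    total_need = prefill_need + decode_need

    # With no demand at all, fall back to an even split.
    share = 0.5 if total_need == 0 else prefill_need / total_need

    prefill_gpus = round(total_gpus * share)
    prefill_gpus = min(max(prefill_gpus, 1), total_gpus - 1)
    return prefill_gpus, total_gpus - prefill_gpus


# Chat-style traffic: short prompts, long answers.
chat_split = plan_gpu_split(8, 40_000, 2_000, 20_000, 500)
# Summarization-style traffic: long prompts, short answers.
summary_split = plan_gpu_split(8, 120_000, 500, 20_000, 500)

print("chat:", chat_split)
print("summarization:", summary_split)
```

The point of the example is the direction of the shift: prompt-heavy workloads pull accelerators toward prefill, while generation-heavy ones pull them toward decode.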

However, Dynamo isn't just a GPU profiler. The framework also includes prompt routing functionality, which identifies and directs overlapping requests to specific groups of GPUs to maximize the likelihood of a key-value (KV) cache hit.

If you're not familiar, the KV cache holds the attention keys and values the model has already computed for the tokens it has processed, in effect the state of the model at any given time. So, if multiple users send prompts that overlap in short order, the model can pull from this cache rather than recalculating that state over and over again.
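As a rough illustration of cache-aware routing, the sketch below sends each request to the worker whose previously seen prompts share the longest prefix with the new one, and falls back to the least-loaded worker on a tie. It is not Dynamo's router: the `Worker` class, the `route` function, and the use of whitespace-split words as stand-in tokens are all assumptions made for the example.

```python
from dataclasses import dataclass, field


def common_prefix_len(a, b):
    """Number of leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


@dataclass
class Worker:
    name: str
    cached_prompts: list = field(default_factory=list)
    active_requests: int = 0

    def best_overlap(self, tokens):
        # Longest prefix this worker could serve from its (hypothetical) KV cache.
        return max(
            (common_prefix_len(tokens, p) for p in self.cached_prompts),
            default=0,
        )


def route(tokens, workers):
    """Pick the worker with the most reusable prefix, then the lightest load."""
    chosen = max(workers, key=lambda w: (w.best_overlap(tokens), -w.active_requests))
    chosen.cached_prompts.append(list(tokens))
    chosen.active_requests += 1
    return chosen


workers = [Worker("gpu-group-0"), Worker("gpu-group-1")]
system_prompt = "You are a helpful assistant .".split()

first = route(system_prompt + ["Summarize", "this"], workers)
second = route("Translate this text".split(), workers)
third = route(system_prompt + ["Explain", "that"], workers)

print(first.name, second.name, third.name)
```

The third request lands on the same group as the first because they share the system prompt, which is the kind of overlap that makes a cache hit likely.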

Alongside the smart router, Dynamo also features a low-latency communication library to speed up GPU-to-GPU data flows, and a memory management subsystem that's responsible for moving KV cache data between HBM and system memory or cold storage to maximize responsiveness and minimize wait times.
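The idea of shuffling KV cache data between memory tiers can be sketched with a small two-tier cache. This is an illustrative model only: the least-recently-used eviction policy, the `TieredKVCache` class, and counting capacity in entries rather than bytes are assumptions, and the source doesn't say how Dynamo decides what to move.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache: a small fast tier (HBM) backed by a larger slow tier."""

    def __init__(self, hbm_capacity: int):
        self.hbm_capacity = hbm_capacity
        self.hbm = OrderedDict()  # fast tier, kept in least-recently-used order
        self.host = {}            # slow tier (system memory or cold storage)

    def put(self, key, blocks):
        self.hbm[key] = blocks
        self.hbm.move_to_end(key)
        # Offload the least recently used entries instead of discarding them.
        while len(self.hbm) > self.hbm_capacity:
            old_key, old_blocks = self.hbm.popitem(last=False)
            self.host[old_key] = old_blocks

    def get(self, key):
        if key in self.hbm:
            self.hbm.move_to_end(key)
            return self.hbm[key]
        if key in self.host:
            # Pull the entry back into the fast tier on reuse.
            blocks = self.host.pop(key)
            self.put(key, blocks)
            return blocks
        return None  # cache miss: the state would have to be recomputed


cache = TieredKVCache(hbm_capacity=2)
cache.put("session-a", "kv-a")
cache.put("session-b", "kv-b")
cache.put("session-c", "kv-c")          # pushes session-a out to the slow tier
offloaded = "session-a" in cache.host
restored = cache.get("session-a")        # pulls it back, pushing session-b out
```

Keeping evicted entries in a slower tier trades a transfer for a recomputation, which is the responsiveness argument made above.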

For Hopper-based systems running Llama models, Nvidia claims Dynamo can effectively double the inference performance. Meanwhile for larger Blackwell NVL72 systems, the GPU giant claims a 30x advantage in DeepSeek-R1 over Hopper with the framework enabled.

Broad compatibility

While Dynamo is obviously tuned for Nvidia's hardware and software stacks, much like the Triton Inference Server it replaces, the framework is designed to integrate with popular software libraries for model serving, like vLLM, PyTorch, and SGLang.

This means, if you
