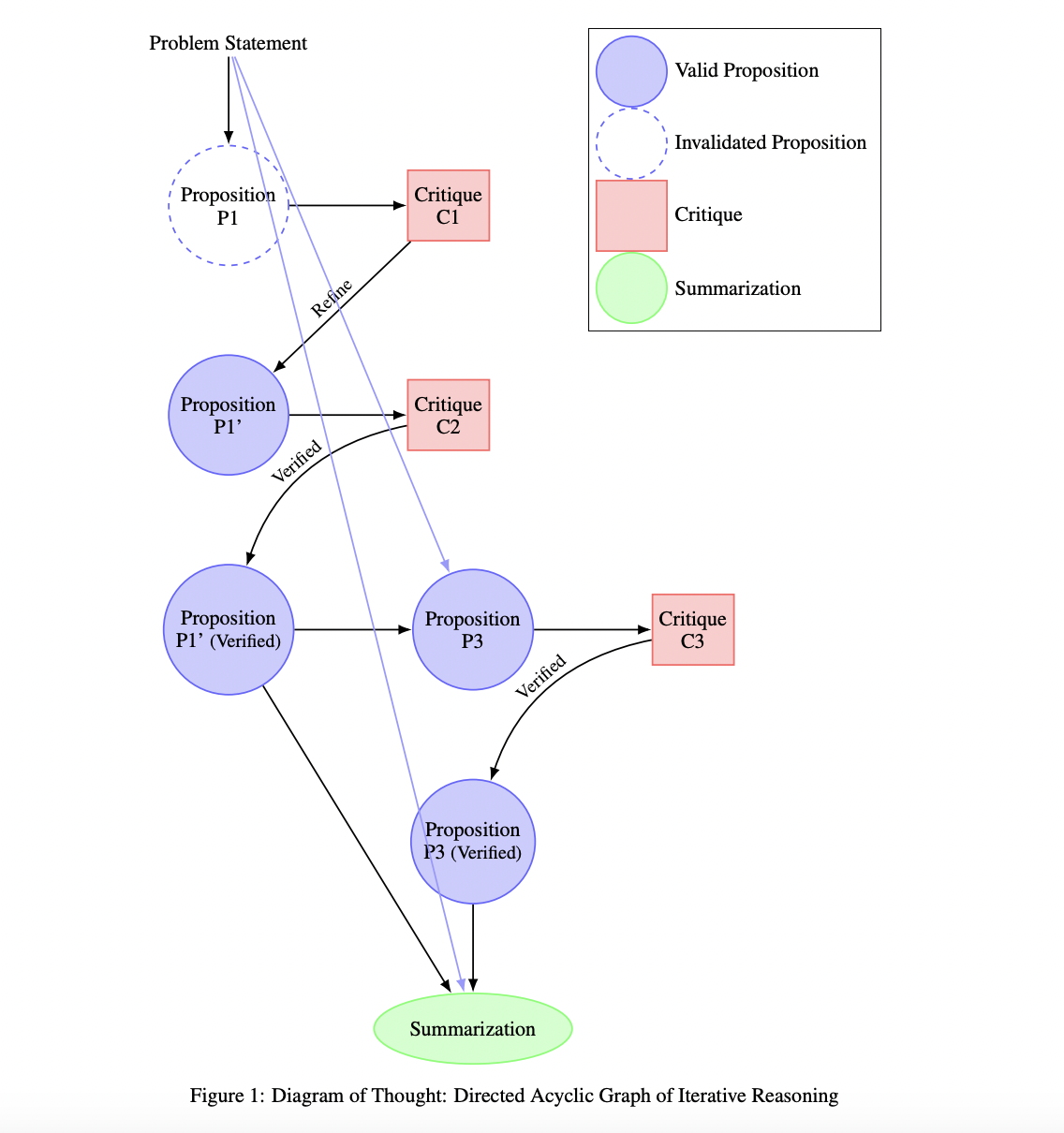

The Diagram of Thought (DoT) framework builds upon these prior approaches, integrating their strengths into a unified model within a single LLM. By representing reasoning as a directed acyclic graph (DAG), DoT captures the nuances of logical deduction while maintaining computational efficiency.

Researchers have proposed a framework, Diagram of Thought (DoT), to enhance reasoning capabilities in large language models (LLMs). It combines iterative reasoning, natural language critiques, and auto-regressive next-token prediction with role-specific tokens. Its theoretical foundation in Topos theory is intended to provide logical consistency and soundness in the reasoning process.

This framework is constructed as a directed acyclic graph (DAG) that incorporates propositions, critiques, refinements, and verifications. The methodology employs role-specific tokens for proposing, criticizing, and summarizing, which facilitates iterative improvement of propositions.
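The graph structure described above can be pictured with a small data structure. The following is a minimal sketch, not the authors' implementation: the names `Node`, `ReasoningDAG`, `order`, and `verified` are hypothetical, and the four node kinds are simply taken from the list in the preceding paragraph. Because a node may only point to parents that already exist, the graph stays acyclic by construction.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Node kinds taken from the article's description (assumed labels).
KINDS = {"proposition", "critique", "refinement", "verification"}


@dataclass(frozen=True)
class Node:
    node_id: str
    kind: str
    text: str
    parents: tuple = ()


class ReasoningDAG:
    """Hypothetical container for a DoT-style reasoning graph."""

    def __init__(self):
        self.nodes = {}

    def add(self, node_id, kind, text, parents=()):
        if kind not in KINDS:
            raise ValueError(f"unknown node kind: {kind}")
        if node_id in self.nodes:
            raise ValueError(f"duplicate node id: {node_id}")
        # Parents must already exist, so no edge can ever close a cycle.
        missing = [p for p in parents if p not in self.nodes]
        if missing:
            raise KeyError(f"unknown parents: {missing}")
        self.nodes[node_id] = Node(node_id, kind, text, tuple(parents))

    def order(self):
        """Return node ids so that every node comes after its parents."""
        graph = {n.node_id: set(n.parents) for n in self.nodes.values()}
        return list(TopologicalSorter(graph).static_order())

    def verified(self):
        """Return ids of nodes that have at least one verification child."""
        hits = set()
        for n in self.nodes.values():
            if n.kind == "verification":
                hits.update(n.parents)
        return sorted(hits)


dag = ReasoningDAG()
dag.add("p1", "proposition", "x is even.")
dag.add("c1", "critique", "Counterexample: x = 3.", parents=["p1"])
dag.add("r1", "refinement", "x is odd.", parents=["p1", "c1"])
dag.add("v1", "verification", "Refinement holds.", parents=["r1"])
print(dag.order())
print(dag.verified())
```

In this toy example the refinement depends on both the original proposition and its critique, which is the kind of dependency a linear chain of thoughts cannot express directly.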

Auto-regressive next-token prediction enables seamless transitions between proposing ideas and critical evaluation, enriching the feedback loop without external intervention. This approach streamlines the reasoning process within a single LLM, addressing the limitations of previous frameworks.
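To make the role-switching idea concrete, here is a minimal sketch of how a single generated transcript could be split back into role-tagged segments. The token spellings `<proposer>`, `<critic>`, and `<summarizer>` are assumptions chosen to match the three roles named above, the transcript is hand-written rather than model output, and `split_by_role` is a hypothetical helper.

```python
import re

# Assumed spellings for the three roles named in the article.
ROLES = ("proposer", "critic", "summarizer")
_ROLE_ALT = "|".join(ROLES)

# A segment starts at a role token and runs until the next role token
# or the end of the transcript.
_SEGMENT = re.compile(
    rf"<({_ROLE_ALT})>(.*?)(?=<(?:{_ROLE_ALT})>|\Z)",
    re.DOTALL,
)


def split_by_role(transcript):
    """Split one generated token stream into (role, text) pairs."""
    return [(m.group(1), m.group(2).strip()) for m in _SEGMENT.finditer(transcript)]


# Hand-written stand-in for what a single model might emit in one pass.
transcript = (
    "<proposer>x is even."
    "<critic>No: x = 3 is odd."
    "<proposer>x is odd."
    "<critic>Valid."
    "<summarizer>x is odd."
)

for role, text in split_by_role(transcript):
    print(f"{role:>10}: {text}")
```

The point of the sketch is that proposing, critiquing, and summarizing all live in one token stream, so no external controller is needed to hand work between separate models.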

The DoT framework is formalized within Topos theory, providing a robust mathematical foundation that ensures logical consistency and soundness in the reasoning process. This formalism clarifies the relationship between reasoning processes and categorical logic, which is crucial for reliable outcomes in LLMs.

While specific experimental results are not detailed, the integration of critiques and dynamic reasoning aspects aims to enhance the model’s ability to handle complex reasoning tasks effectively. The methodology focuses on improving both training and inference processes, potentially advancing the capabilities of next-generation reasoning-specialized models.

The Diagram of Thought (DoT) framework is designed to enhance reasoning in large language models through a directed acyclic graph structure. It supports the iterative improvement of propositions via natural language feedback and role-specific contributions, and its Topos-theoretic formalization is meant to guarantee logical consistency and soundness. Because it is implemented within a single model, DoT streamlines both training and inference and removes the need for multiple models or external control mechanisms. The approach allows exploration of complex reasoning pathways, with the goal of more accurate conclusions and more coherent reasoning. This design positions it as a candidate building block for reasoning-specialized models that tackle complex tasks.

In conclusion, DoT brings proposing, critiquing, and summarizing into a single auto-regressive model through role-specific tokens, with Topos theory as the formal basis for consistency and soundness. The framework is aimed at the next generation of reasoning-specialized models for complex reasoning tasks.
