OpenAI Exodus: Safety Watchdogs Depart, Raising Alarm Bells

May 18, 2024 at 07:03 pm

Following the departure of Chief Scientist Ilya Sutskever, OpenAI's Superalignment team co-lead, Jan Leike, has resigned over concerns about the company's prioritization of product development over AI safety. OpenAI has subsequently dissolved the Superalignment team, integrating its functions into other research projects amid ongoing internal restructuring.

OpenAI Exodus: Departing Researchers Sound Alarm on Safety Concerns

A seismic shift has occurred at OpenAI, the pioneering artificial intelligence (AI) research laboratory. In a string of resignations, key figures tasked with safeguarding against the existential dangers posed by advanced AI have left the company, leaving a void in the organization's safety leadership.

Following the departure of Ilya Sutskever, OpenAI's esteemed chief scientist and co-founder, the company has been rocked by the resignation of Jan Leike, another prominent researcher who co-led the "superalignment" team. Leike's departure stems from deep-rooted concerns about OpenAI's priorities, which he believes have shifted away from AI safety and towards a relentless pursuit of product development.

In a series of public posts, Leike criticized OpenAI's leadership for prioritizing short-term deliverables over the urgent need to establish a robust safety culture and mitigate the risks associated with developing artificial general intelligence (AGI). AGI, a hypothetical form of AI that would surpass human capabilities across a broad spectrum of tasks, raises profound ethical and existential questions.

Leike's critique centers on the lack of resources allocated to his team's safety research, particularly computing power. He maintains that OpenAI's management has consistently underinvested in safety initiatives despite the risks posed by AGI.

"I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time until we finally reached a breaking point. Over the past few months, my team has been sailing against the wind," Leike lamented.

In July 2023, to address mounting concerns about AI safety, OpenAI established a dedicated research team tasked with preparing for advanced AI systems that could outmaneuver and even overpower their creators. Sutskever was appointed co-lead of the new team alongside Leike, and OpenAI said the team would receive 20% of the company's computational resources.

However, the recent departures of Sutskever and Leike have cast a long shadow over the future of OpenAI's AI safety research program. In a move that has sent shockwaves throughout the AI community, OpenAI has reportedly disbanded the "superalignment" team, effectively integrating its functions into other research projects within the organization. This decision is widely seen as a consequence of the ongoing internal restructuring, which was initiated in response to a governance crisis that shook OpenAI to its core in November 2023.

Sutskever, who played a pivotal role in the effort that briefly ousted Sam Altman as CEO before Altman was reinstated amid employee backlash, has consistently emphasized the importance of ensuring that OpenAI's AGI development aligns with the interests of humanity. As a member of OpenAI's six-member board at the time of the ouster, Sutskever repeatedly stressed the need to align the organization's goals with the greater good.

Leike's resignation serves as a stark reminder of the profound challenges facing OpenAI and the broader AI community as they grapple with the immense power and ethical implications of AGI. His departure signals a growing concern that OpenAI's priorities have become misaligned, potentially jeopardizing the safety and well-being of humanity in the face of rapidly advancing AI technologies.

The exodus of key researchers from OpenAI's AI safety team should serve as a wake-up call to all stakeholders, including policymakers, industry leaders, and the general public. It is imperative that we heed the warnings of these experts and prioritize the implementation of robust safety measures as we venture into the uncharted territory of AGI. The future of humanity may depend on it.

-

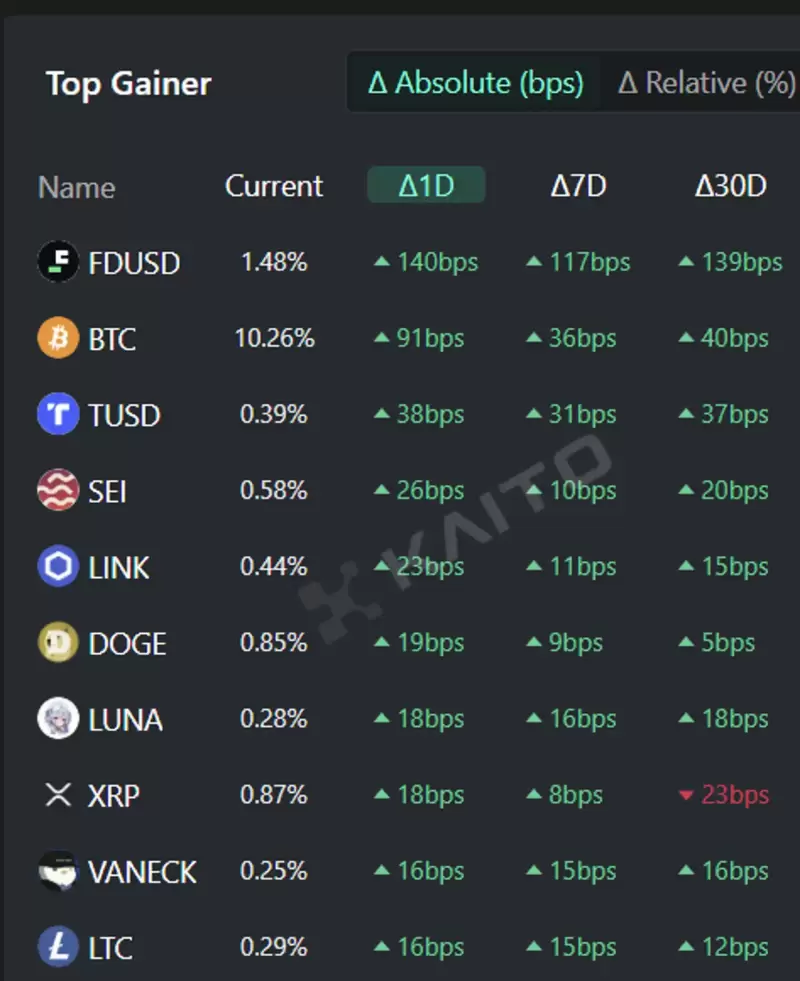

- FDUSD, BTC, TUSD, SEI, and LINK are the top 5 virtual asset-related keywords attracting the most interest

- Apr 03, 2025 at 03:45 pm

- According to the Token Mindshare (a metric quantifying the influence of specific tokens in the virtual asset market) top gainers from the AI-based Web3 search platform Kaito

-

-

-

-

-

- Meme Cryptocurrency Dogecoin DOGE/USD Falls After President Donald Trump's Tariff Shock, Extending Weekly Losses to Over 16%

- Apr 03, 2025 at 03:35 pm

- Popular dog-themed cryptocurrency Dogecoin DOGE/USD fell Wednesday after President Donald Trump's tariff shock, extending its weekly losses to over 16%.

-

-

-