Apple and NVIDIA shared details of a collaboration

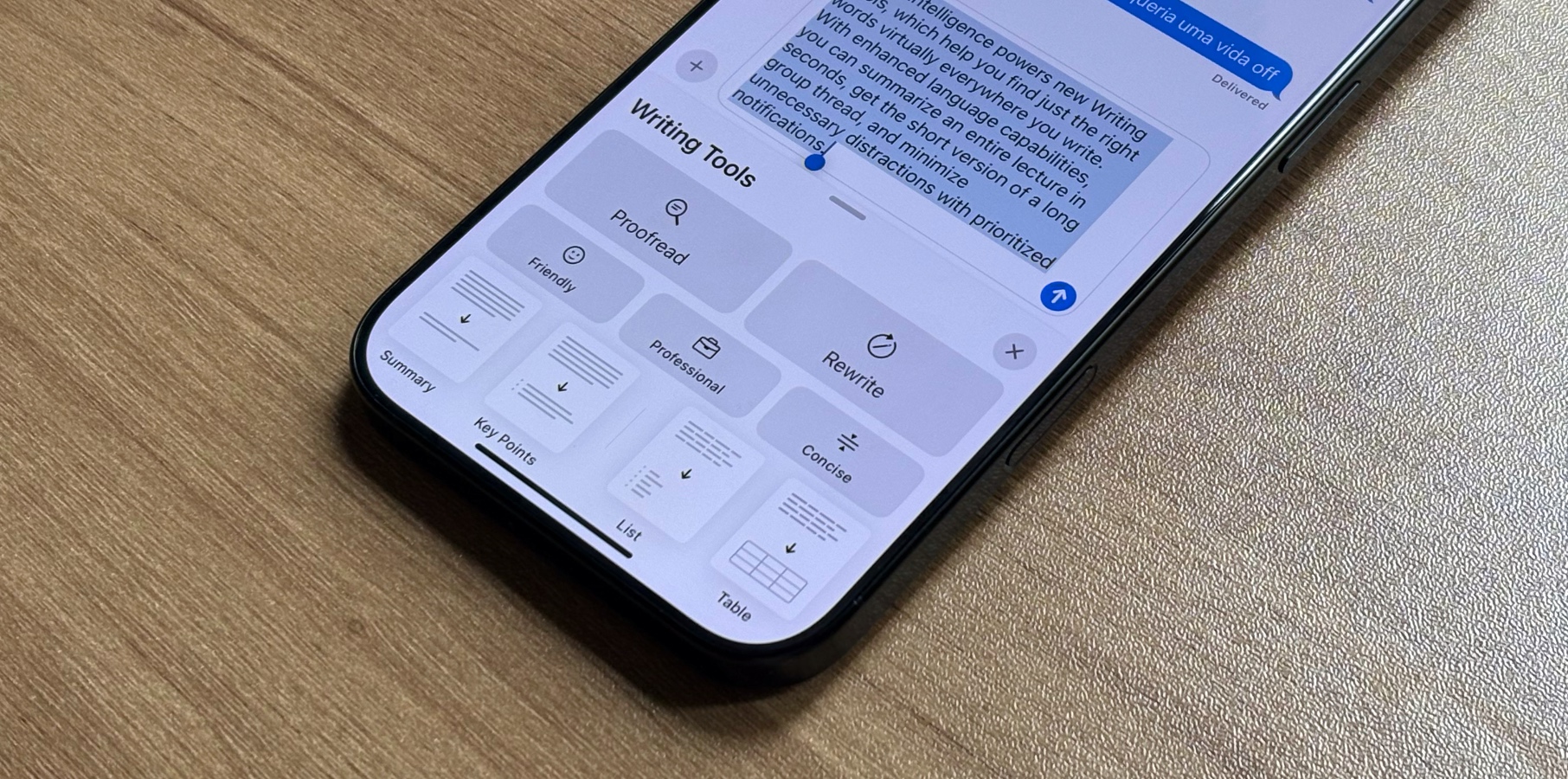

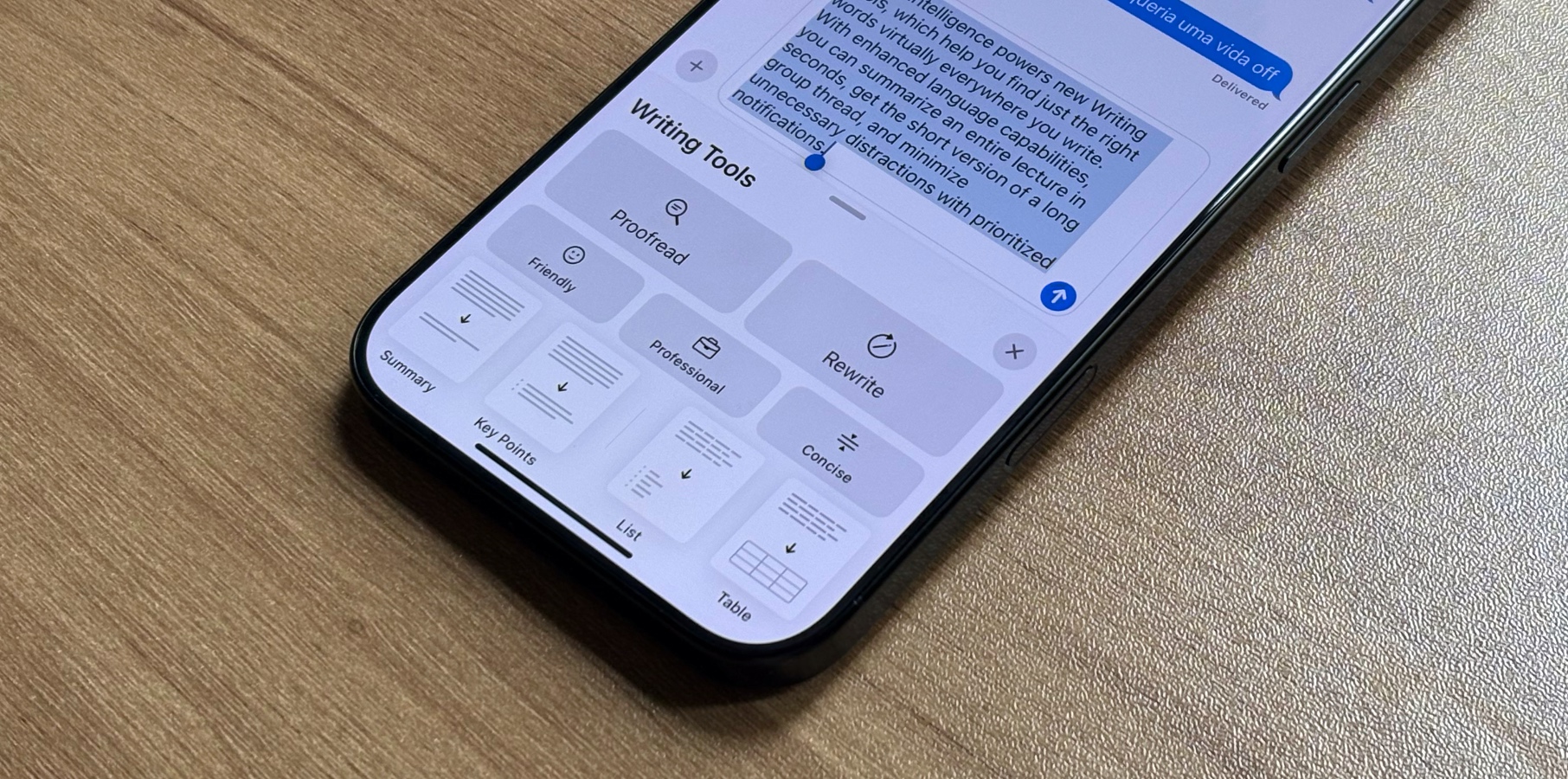

Tech giants Apple and NVIDIA have joined forces to enhance the performance of Large Language Models (LLMs) by introducing a new text generation technique for AI.

According to Apple, accelerating LLM inference is a crucial ML research problem. This is because auto-regressive token generation is computationally expensive and relatively slow. As a result, improving inference efficiency can reduce latency for users.

Alongside its ongoing efforts to accelerate inference on Apple silicon, Apple says it has recently made significant progress in accelerating LLM inference on NVIDIA GPUs, which are widely used for production applications across the industry, the company writes in a research paper.

Earlier this year, Apple published and open-sourced Recurrent Drafter (ReDrafter), which is a novel approach to speculative decoding that “achieves state of the art performance.”

According to the company, ReDrafter uses an RNN draft model and combines beam search with dynamic tree attention to speed up LLM token generation, yielding up to 3.5 tokens per generation step for open-source models and surpassing the performance of prior speculative decoding techniques.
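To make the idea of speculative decoding more concrete, here is a minimal sketch of a generic draft-and-verify loop with greedy decoding. It is not ReDrafter itself: there is no RNN draft head, beam search, or dynamic tree attention, and the two "models" (`target_next`, `draft_next`) are hypothetical toy functions invented for illustration. The point is only to show how a cheap draft model can let the large model commit several tokens per generation step while still producing exactly the output it would have produced alone.

```python
from typing import List, Tuple

VOCAB = 50  # toy vocabulary size (assumption for this sketch)


def target_next(context: List[int]) -> int:
    """Toy stand-in for the large model: deterministic next token."""
    return (sum(context) * 31 + len(context)) % VOCAB


def draft_next(context: List[int]) -> int:
    """Toy stand-in for the cheap draft model.

    It agrees with the target except when the context length is a
    multiple of 4, where it deliberately guesses wrong.
    """
    guess = target_next(context)
    return (guess + 1) % VOCAB if len(context) % 4 == 0 else guess


def greedy_decode(prompt: List[int], n_new: int) -> List[int]:
    """Plain auto-regressive decoding: one target call per token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(target_next(out))
    return out


def speculative_decode(
    prompt: List[int], n_new: int, draft_len: int = 4
) -> Tuple[List[int], int]:
    """Draft-and-verify decoding; returns (tokens, generation steps)."""
    out = list(prompt)
    goal = len(prompt) + n_new
    steps = 0
    while len(out) < goal:
        steps += 1
        # 1. The draft model proposes a short continuation.
        ctx = list(out)
        proposal = []
        for _ in range(draft_len):
            token = draft_next(ctx)
            proposal.append(token)
            ctx.append(token)
        # 2. The target model checks the proposal. A real system scores all
        #    drafted positions in one batched forward pass; here we loop.
        ctx = list(out)
        accepted = []
        for token in proposal:
            expected = target_next(ctx)
            if token != expected:
                accepted.append(expected)  # keep the target's correction
                break
            accepted.append(token)
            ctx.append(token)
        else:
            # Every drafted token matched, so the target adds one more.
            accepted.append(target_next(ctx))
        out.extend(accepted)
    return out[:goal], steps


if __name__ == "__main__":
    prompt = [1, 2, 3]
    tokens, steps = speculative_decode(prompt, n_new=20)
    print("identical to greedy:", tokens == greedy_decode(prompt, 20))
    print("tokens per step:", 20 / steps)
```

Because every accepted token is checked against the target model, the output matches plain greedy decoding; the gain comes from needing fewer generation steps of the expensive model. How many tokens are accepted per step depends entirely on how often the draft agrees with the target.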

“In benchmarking a tens-of-billions parameter production model on NVIDIA GPUs, using the NVIDIA TensorRT-LLM inference acceleration framework with ReDrafter, we have seen 2.7x speed-up in generated tokens per second for greedy decoding,” Apple writes.

As a result, this technology could significantly reduce the latency users experience, while also requiring fewer GPUs and consuming less power.
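As a rough back-of-the-envelope illustration of what a 2.7x throughput gain could mean, the sketch below converts a speed-up in tokens per second into generation time for a fixed-length response. Only the 2.7x figure comes from Apple's statement; the baseline throughput and response length are hypothetical values chosen for illustration, and real-world latency also depends on factors such as prompt processing and batching that are ignored here.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate num_tokens at a steady throughput."""
    return num_tokens / tokens_per_second


def time_saved_fraction(speedup: float) -> float:
    """Fraction of generation time removed by a given throughput speed-up."""
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    speedup = 2.7          # figure quoted by Apple for greedy decoding
    baseline_tps = 20.0    # hypothetical baseline tokens per second
    response_tokens = 500  # hypothetical response length

    before = generation_time_s(response_tokens, baseline_tps)
    after = generation_time_s(response_tokens, baseline_tps * speedup)
    print(f"before: {before:.1f} s, after: {after:.1f} s")
    print(f"time saved: {time_saved_fraction(speedup):.0%}")
```

Under these assumptions, the same response takes a little over a third of the original generation time, which is also why the same traffic could in principle be served with fewer GPUs.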
